Authors:

Taharim Rahman Anon、Jakaria Islam Emon

Paper:

https://arxiv.org/abs/2408.09371

Introduction

The rapid advancement in artificial intelligence (AI) has led to significant progress in image generation technologies, resulting in highly realistic synthetic images. While these advancements bring numerous benefits, they also present significant challenges in misinformation and digital forensics. Maintaining the integrity of visual media relies crucially on the ability to differentiate between AI-generated and real images. This study introduces a novel detection framework adept at robustly identifying images produced by cutting-edge generative AI models, such as DALL-E 3, MidJourney, and Stable Diffusion 3.

Related Work

Several studies have focused on developing methods to detect AI-generated images, each with its own strengths and limitations:

- Lu et al. revealed significant difficulties even among human observers in distinguishing real photos from AI-generated ones.

- Epstein et al. evaluated classifiers trained incrementally on 14 AI generative models but noted performance drops with major architectural changes in emerging models.

- Chen et al. presented a novel detection method using noise patterns from a single simple patch (SSP) to distinguish between real and AI-generated images.

- Wang et al. proposed DIRE for detecting diffusion-generated images, demonstrating strong generalization and robustness.

- Yan et al. developed the Chameleon dataset to challenge existing detectors and proposed the AIDE model.

- Martin-Rodriguez et al. used Photo Response Non-Uniformity (PRNU) and Error Level Analysis for pixel-wise feature extraction combined with CNNs.

- Chai et al. focused on local artifacts for detection, showing strong generalization but acknowledged preprocessing artifacts and adversarial vulnerability.

- Park et al. provided a comprehensive comparison and visualization of various detection methods.

Building upon the existing research landscape, this study introduces a robust approach to tackle the challenge of generator variance—effectively distinguishing between images generated by diverse AI technologies.

Research Methodology

Data Preparation

To develop our AI-generated image detection framework, we utilize a subset of the RAISE dataset, consisting of 8,156 high-resolution, uncompressed RAW images that are guaranteed to be camera-native.

Data Generation for Training

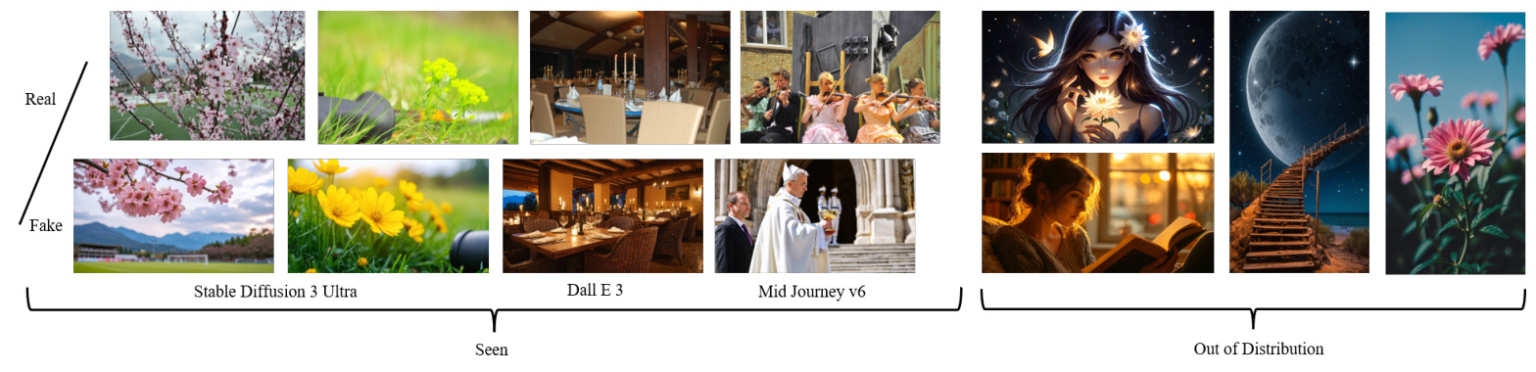

We selected 1000 images from the RAISE dataset, referred to as RAISE 1k. For each real image, we generated a detailed description using a large language model (OpenAI’s GPT-4). These descriptions were used to create synthetic images through three advanced image generation models: Stable Diffusion 3 Ultra (SD 3 Ultra), DALL-E 3, and MidJourney 6. The real images and the AI-generated images were combined to form a comprehensive dataset.

Out of Distribution Test Data for Model Validation

To ensure the robustness and reliability of our model, we incorporated a comprehensive Out of Distribution (OOD) test dataset. This dataset includes 500 real images collected randomly and 500 images generated by Adobe Firefly, DALL-E 3, and MidJourney 5.

Methodology

Our methodology comprises four critical stages: data generation, embedding generation, classifier training, and rigorous model evaluation.

Semantic Image Embedding Generation

We utilize the CLIP (Contrastive Language-Image Pretraining) model to generate embeddings for each image in our dataset. These embeddings serve as input features for the subsequent classification tasks.

Designing of the Hybrid KAN-MLP Classifier

The proposed Hybrid KAN-MLP Classifier represents a synthesis of the adaptive feature transformation of Kolmogorov-Arnold Networks (KAN) and the structural integrity of a Multilayer Perceptron (MLP). This innovative classifier is anchored by the KANLinear module, which utilizes advanced spline-based techniques for precise and high-resolution feature mapping.

MLP Classifier

In addition to our hybrid model, we employ a standalone MLP classifier as a comparative baseline within our study. The MLP is designed with multiple dense layers, formulated to learn and classify images directly from the embeddings generated by the CLIP model.

Training

To train both classifiers, we utilize binary cross-entropy as the loss function. The Adam optimizer is chosen for its robustness in managing sparse gradients and optimizing the training process.

Evaluation Metrics

To assess the performance of our trained model, we employ a variety of metrics, including confusion matrix, precision, recall, F1-score, and ROC Curve and AUC.

Experimental Design

Data Generation Process

The data generation process involves generating descriptions for real images using a large language model and then creating synthetic images using state-of-the-art models such as Stable Diffusion 3 Ultra, DALL-E 3, and MidJourney 6.

Dataset Summary

The combined dataset includes 1000 real images and 1006 AI-generated images, ensuring a balanced mix of real and synthetic images during training.

Out of Distribution Test Data

The OOD test dataset includes 500 real images and 1500 AI-generated images from Adobe Firefly, DALL-E 3, and MidJourney 5, providing a stringent test of the model’s effectiveness across a broad spectrum of real and synthetic images.

Generated Images

The generated images from given real image descriptions using generative AI models are shown below.

Captions Generated for Images

Captions generated for images in the dataset using a large language model are summarized below.

Proposed Methodology

The proposed methodology for the AI-generated image detection framework is illustrated below.

Results and Analysis

Classification Results

The classification results for real vs. generated images using the proposed and baseline approaches are summarized below.

Confusion Matrices

The confusion matrices for the proposed hybrid classification approach and the baseline approach across three datasets are illustrated below.

ROC Curves

The ROC curves illustrating the performance of the proposed hybrid classification approach on three datasets are shown below.

Overall Conclusion

This study has successfully demonstrated the efficacy of the Hybrid KAN-MLP model in distinguishing between real and AI-generated images, a crucial capability in the era of advanced digital image synthesis technologies. The robust performance of our classifier across diverse datasets underscores its potential utility in critical applications such as digital forensics and media integrity verification. Despite its strong performance, the high cost of using advanced AI services limits our ability to gather large datasets. Future work will focus on scaling these approaches to accommodate larger datasets and integrating real-time processing capabilities, ensuring that the model remains effective against evolving generative image technologies.