Authors:

Arya Hadizadeh Moghaddam, Mohsen Nayebi Kerdabadi, Mei Liu, Zijun Yao

Paper:

https://arxiv.org/abs/2408.10259

Introduction

In recent years, the proliferation of Electronic Health Records (EHRs) has enabled significant advancements in personalized healthcare analysis. Sequential prescription recommender systems have emerged as a crucial tool for supporting informed treatment decisions by analyzing complex EHR data accumulated over a patient’s medical history. However, a notable challenge in this domain is the need to disentangle the complex relationships across sequential visits and establish multiple health profiles for the same patient to ensure comprehensive consideration of different medical intents in drug recommendation.

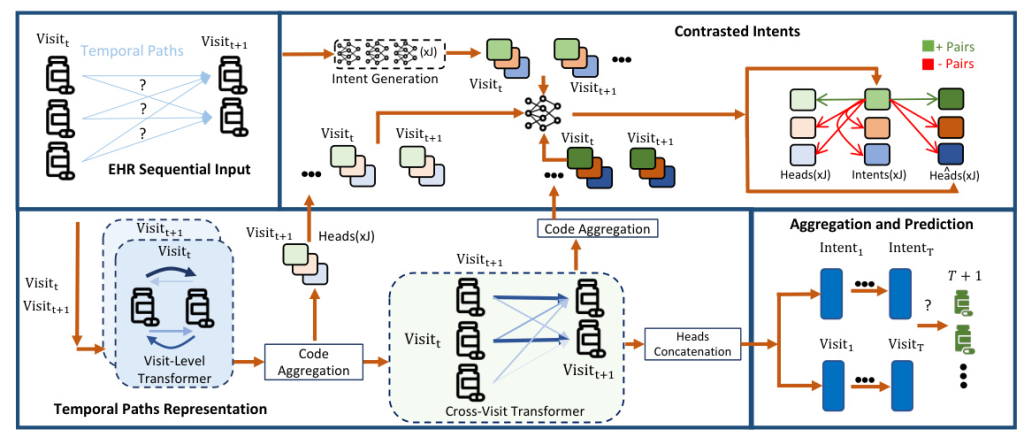

To address this challenge, the study introduces Attentive Recommendation with Contrasted Intents (ARCI), a multi-level transformer-based method designed to capture the different but coexisting temporal paths across a shared sequence of visits. This approach leverages contrastive learning to link specialized medical intents of patients to transformer heads, extracting distinct temporal paths associated with different health profiles.

Related Work

Sequential Prescription Recommendation Systems

Recent literature on drug recommendation has shown promising performance in sequential modeling applied to EHRs. Notable works include:

– Dr. Agent: Uses an RNN with two policy-gradient agents to focus adaptively on pertinent information.

– RETAIN: Incorporates a dual-RNN network to capture the interpretable influence of visits and medical features.

– Transformer encoder: Employs self-attention to learn dependencies between healthcare features.

– MICRON: Uses residual recurrent neural networks to propagate changes in patient health from one visit to the next.

– SafeDrug: Utilizes a global message passing neural network to encode the functionality of prescription molecules.

– MoleRec: A structure-aware encoding method that models interactions while accounting for drug-drug interactions (DDI).

– GAMENet: Employs a memory module and graph neural networks to incorporate the knowledge graph of drug-drug interactions.

Intent-Aware Recommender Systems

Intent-aware systems capture users’ multiple intentions for enhanced recommendations. Examples include:

– Atten-Mixer: Considers both concept-view and instance-view for items using the Transformer architecture.

– ASLI: Extracts users’ multiple intents using a temporal convolutional network.

– ICL: Employs a latent variable representing the distribution of users and their intent through clustering and contrastive learning.

Research Methodology

Problem Formulation

In EHR data, each patient is represented as a time sequence of visits, with various prescribed medications recorded in each visit. The goal is to predict a distinct set of medications for the next visit, given the medication history of sequential visits.
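The formulation above can be made concrete with a small sketch. It assumes each visit is stored as a set of integer medication ids; the helper names `multi_hot` and `next_visit_pairs` are hypothetical and are not taken from the paper.

```python
from typing import List, Set, Tuple


def multi_hot(codes: Set[int], vocab_size: int) -> List[int]:
    """Encode a set of medication ids as a 0/1 vector of length vocab_size."""
    return [1 if i in codes else 0 for i in range(vocab_size)]


def next_visit_pairs(
    visits: List[Set[int]],
) -> List[Tuple[List[Set[int]], Set[int]]]:
    """Pair each history (visits 0..t) with the medication set of visit t+1."""
    return [(visits[: t + 1], visits[t + 1]) for t in range(len(visits) - 1)]


# Hypothetical patient with three visits over a vocabulary of five medications.
patient = [{0, 2}, {2, 3}, {1, 3, 4}]
pairs = next_visit_pairs(patient)
```

A patient with T visits yields T-1 training pairs, and the target of each pair is a set, so the task is multi-label prediction rather than single-item prediction.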

Methodology Overview

The proposed method consists of three main components:

1. Temporal Paths Representation: Utilizes multi-level self-attention modules to generate temporal paths for enhanced embedding learning.

2. Contrasted Intents: Diversifies temporal paths by linking them with different medical intents in a contrastive learning framework.

3. Aggregation and Prediction: Employs a visit-instance attention mechanism to prioritize visits for the final prescription prediction.

Temporal Paths Representation

Visit-Level Transformer

The self-attention mechanism captures dependencies among healthcare features within the same visit. The sparse input of prescriptions is converted to a dense representation with an embedding layer, followed by a self-attention encoder to extract dependencies between prescription codes.
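As a rough illustration of this step, the sketch below embeds the prescription codes of one visit and lets each code attend to every code in the same visit. It is a dependency-free simplification: the embedding table is a hypothetical dictionary, and the learned query, key, and value projections of a real Transformer encoder are omitted.

```python
import math
from typing import Dict, List

Vector = List[float]


def softmax(scores: Vector) -> Vector:
    """Numerically stable softmax over a list of scores."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def visit_self_attention(codes: List[int], table: Dict[int, Vector]) -> List[Vector]:
    """Embed the codes of one visit, then mix them with scaled dot-product attention."""
    embs = [table[c] for c in codes]  # sparse ids -> dense rows
    scale = math.sqrt(len(embs[0]))
    out = []
    for q in embs:
        weights = softmax([sum(a * b for a, b in zip(q, k)) / scale for k in embs])
        # Each output row is an attention-weighted average of all code embeddings.
        out.append([sum(w * v[j] for w, v in zip(weights, embs)) for j in range(len(q))])
    return out


# Hypothetical 2-dimensional embedding table for three medication codes.
table = {0: [1.0, 0.0], 1: [0.0, 1.0], 2: [1.0, 1.0]}
out = visit_self_attention([0, 2], table)
```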

Cross-Visit Transformer

To capture cross-visit dependencies, a novel attention-based approach generates temporal paths over prescriptions in consecutive time steps. The Query is computed from the medications at visit t and the Key from the medications at visit t+1, so each attention weight reflects the outgoing dependency from a medication at t to a medication at t+1.
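The query and key roles described here can be sketched as a single attention matrix between two consecutive visits. This is a minimal sketch under stated assumptions: the function name `cross_visit_attention` is hypothetical, the embeddings are used directly as queries and keys (no learned projections), and only one head is shown.

```python
import math
from typing import List

Matrix = List[List[float]]


def softmax(scores: List[float]) -> List[float]:
    """Numerically stable softmax over a list of scores."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def cross_visit_attention(emb_t: Matrix, emb_next: Matrix) -> Matrix:
    """Attention from medications at visit t (queries) to medications at t+1 (keys).

    Returns a matrix with one row per medication at t and one column per
    medication at t+1; each row sums to 1.
    """
    scale = math.sqrt(len(emb_t[0]))
    return [
        softmax([sum(a * b for a, b in zip(q, k)) / scale for k in emb_next])
        for q in emb_t
    ]


# Two medications at visit t, three at visit t+1 (hypothetical embeddings).
emb_t = [[1.0, 0.0], [0.0, 1.0]]
emb_next = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
attn = cross_visit_attention(emb_t, emb_next)
```

Row i of `attn` can be read as how strongly medication i at visit t points to each medication at visit t+1. In a multi-head setting, each head would produce its own matrix of this shape.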

Contrasted Intents

To ensure that multiple heads represent different considerations of a patient’s health condition, a contrastive learning framework is employed. Each head is linked to a specific medical intent, ensuring distinct and comprehensive health profiles. The contrastive loss encourages the model to bring each extracted temporal path closer to its corresponding intent while pushing the medical intents apart from one another in the embedding space.
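One common way to express this pull-and-push behaviour is an InfoNCE-style loss. The sketch below is a generic version of that idea and should not be read as the exact loss used in ARCI: the function name, the cosine similarity, and the temperature value are all assumptions.

```python
import math
from typing import List

Vector = List[float]


def cosine(a: Vector, b: Vector) -> float:
    """Cosine similarity; assumes both vectors are non-zero."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def intent_contrastive_loss(
    paths: List[Vector], intents: List[Vector], temperature: float = 0.1
) -> float:
    """InfoNCE-style loss: paths[h] is the positive for intents[h],
    and every other intent acts as a negative for that head."""
    total = 0.0
    for h, path in enumerate(paths):
        logits = [cosine(path, intent) / temperature for intent in intents]
        m = max(logits)
        log_denominator = m + math.log(sum(math.exp(l - m) for l in logits))
        total += log_denominator - logits[h]  # -log softmax of the positive
    return total / len(paths)


# Hypothetical head outputs and intent embeddings for two heads.
intents = [[1.0, 0.0], [0.0, 1.0]]
aligned = intent_contrastive_loss([[1.0, 0.0], [0.0, 1.0]], intents)
swapped = intent_contrastive_loss([[0.0, 1.0], [1.0, 0.0]], intents)
```

The loss is small when each head's path embedding matches its own intent (`aligned`) and large when it matches another head's intent (`swapped`), which is the behaviour the paragraph above describes.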

Aggregation and Prediction

The multi-level Transformers and intents enhance embeddings by integrating temporal and visit-level information. An interpretable visit-instance attention mechanism identifies the most crucial visits for both intents and patient embeddings. The final prediction is made using a combination of these embeddings.
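A visit-level attention of this kind can be sketched as a softmax-weighted sum over visit embeddings. The scoring vector below stands in for a learned parameter and is hypothetical, as is the function name; the paper's exact scoring function may differ.

```python
import math
from typing import List, Tuple

Vector = List[float]


def softmax(scores: Vector) -> Vector:
    """Numerically stable softmax over a list of scores."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def visit_attention_pool(
    visit_embs: List[Vector], scoring_vector: Vector
) -> Tuple[Vector, Vector]:
    """Score each visit, normalise the scores, and return (weights, pooled embedding)."""
    scores = [sum(a * b for a, b in zip(v, scoring_vector)) for v in visit_embs]
    weights = softmax(scores)
    dim = len(visit_embs[0])
    pooled = [sum(w * v[j] for w, v in zip(weights, visit_embs)) for j in range(dim)]
    return weights, pooled


# Three visits with 2-dimensional embeddings (hypothetical values).
visits = [[1.0, 0.0], [0.0, 1.0], [2.0, 0.0]]
weights, pooled = visit_attention_pool(visits, [1.0, 0.0])
```

The `weights` list is what makes the mechanism interpretable: it assigns one non-negative weight per visit, and the weights sum to 1.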

Experimental Design

Datasets

Two real-world datasets are utilized for the prescription recommendation task:

– MIMIC-III: Includes health-related data linked to over 40,000 patients admitted to critical care units.

– AKI: Contains healthcare data from the University of Kansas Medical Center, focusing on patients at risk of Acute Kidney Injury.

Experimental Setup

The experiments focus on recommending the prescription for the next visit. The datasets are split into training and testing sets, and the optimal configuration for ARCI is determined through a greedy search approach. The method is implemented in Python with PyTorch.

Evaluation Metrics

Both classification and ranking metrics are used to evaluate ARCI comprehensively:

– Classification Metrics: PRAUC (area under the precision-recall curve), F1, and Jaccard.

– Drug Safety Metric: DDI rate.

– Ranking Metrics: Hit@K and NDCG@K.
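For reference, most of these metrics can be computed per visit from a predicted medication set or ranking. The sketch below uses common definitions, which are assumptions here because the summary does not spell them out: Hit@K counts a hit if any of the top K items is correct, NDCG@K uses binary relevance, and the DDI rate is the fraction of medication pairs in the recommended set that appear in a known interaction list. PRAUC is omitted because it needs predicted scores for every medication.

```python
import math
from itertools import combinations
from typing import FrozenSet, List, Set


def jaccard(pred: Set[int], true: Set[int]) -> float:
    union = pred | true
    return len(pred & true) / len(union) if union else 0.0


def f1(pred: Set[int], true: Set[int]) -> float:
    tp = len(pred & true)
    if tp == 0:
        return 0.0
    precision, recall = tp / len(pred), tp / len(true)
    return 2 * precision * recall / (precision + recall)


def hit_at_k(ranked: List[int], true: Set[int], k: int) -> float:
    return 1.0 if any(m in true for m in ranked[:k]) else 0.0


def ndcg_at_k(ranked: List[int], true: Set[int], k: int) -> float:
    dcg = sum(1.0 / math.log2(i + 2) for i, m in enumerate(ranked[:k]) if m in true)
    ideal = sum(1.0 / math.log2(i + 2) for i in range(min(k, len(true))))
    return dcg / ideal if ideal else 0.0


def ddi_rate(pred: Set[int], ddi_pairs: Set[FrozenSet[int]]) -> float:
    """Fraction of unordered medication pairs in pred that are known interactions."""
    pairs = list(combinations(sorted(pred), 2))
    if not pairs:
        return 0.0
    return sum(frozenset(p) in ddi_pairs for p in pairs) / len(pairs)


# Hypothetical prediction, ground truth, and interaction list.
pred, true = {1, 2, 3}, {2, 3, 4}
known_ddi = {frozenset({1, 2})}
```

Higher is better for every metric here except the DDI rate, where a lower value indicates a safer recommendation.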

Results and Analysis

Performance Comparison with Baselines

ARCI outperforms state-of-the-art healthcare predictive methods and prescription recommendation systems across various metrics. The authors attribute this gain to modeling temporal paths and diverse medical intents.

Ablation Studies

The ablation study removes each submodule in turn and measures the effect on overall performance. The results indicate that the Cross-Visit Transformer and the Contrasted Intents module significantly enhance the model’s performance.

Including Repetitive Prescriptions

Experiments including repetitive prescriptions show that ARCI remains effective in providing reliable prescription recommendations, outperforming state-of-the-art models.

Interpretability

The Cross-Visit Transformer employs an attention matrix to capture temporal paths between consecutive time steps. The visit-instance attention mechanism highlights the significance of each visit, providing interpretable insights into the decision-making process.

Overall Conclusion

The study introduces ARCI, a multi-level transformer-based approach designed to model simultaneous temporal paths and extract distinct medical intents for facilitating prescription recommendations. ARCI outperforms state-of-the-art methods on two real-world datasets and provides interpretable insights for healthcare practitioners.