Authors:

Xinglin Wang, Peiwen Yuan, Shaoxiong Feng, Yiwei Li, Boyuan Pan, Heda Wang, Yao Hu, Kan Li

Paper:

https://arxiv.org/abs/2408.09150

Tracking Cognitive Development of Large Language Models: An In-depth Analysis of CogLM

Introduction

Background

Large Language Models (LLMs) have recently demonstrated remarkable capabilities across a wide range of Natural Language Processing (NLP) tasks, such as text comprehension, reasoning, code generation, and solving mathematical problems. Despite these advancements, there is limited understanding of the cognitive development of these models. This gap in knowledge poses challenges in comprehending the evolution of LLMs’ abilities and may hinder their future development.

Problem Statement

Inspired by Piaget’s Theory of Cognitive Development (PTC), which outlines the stages of cognitive growth in humans, this study aims to explore the cognitive development of LLMs. The primary questions addressed are:

1. At what stage have the cognitive abilities of LLMs developed compared to humans?

2. What are the key factors influencing the cognitive abilities of LLMs?

3. How do cognitive levels correlate with performance on downstream tasks?

To answer these questions, the study introduces a benchmark called COGLM (Cognitive Ability Evaluation for Language Models) based on PTC to assess the cognitive levels of LLMs.

Related Work

Existing Evaluations of LLMs

LLMs have been evaluated extensively on various tasks, with large-scale benchmarks integrating numerous evaluations across different fields. However, these benchmarks often focus on specific abilities or categories of advanced abilities, without exploring the developmental relationships between different abilities.

Cognitive Psychology and LLMs

Several studies have used cognitive psychology tools to understand LLMs’ behavior and human-like abilities. However, these studies typically assess advanced abilities without tracking the evolution of cognitive abilities across different stages.

Piaget’s Theory of Cognitive Development

PTC is a foundational theory in psychology, suggesting that intelligence develops through a series of stages. This theory has been widely applied in education, psychology, linguistics, and neuroscience, providing a framework for understanding cognitive development.

Research Methodology

COGLM Benchmark Development

To comprehensively assess the cognitive abilities of LLMs, the study undertakes the following steps:

Definition of Cognitive Abilities

Based on PTC, the study selects and redefines 10 cognitive abilities relevant to LLMs, excluding reflexes and sensorimotor aspects due to the text-based interaction of LLMs. These abilities are defined and exemplified in Table 1 of the paper.

Standardized Annotation Guidelines

To ensure the quality of COGLM, standardized annotation guidelines are established, including:

– Data Format: Multiple-choice questions are used so that scores are not confounded by differences in the models' text-generation ability.

– Number of Samples: The number of samples increases with each cognitive stage.

– Qualified Annotators: Annotators with backgrounds in psychology or artificial intelligence are selected and trained.

– Annotation Quality Control: Cross-checks among annotators are conducted to identify and rectify quality issues.

Consistency with Theory

Human tests are conducted to ensure COGLM’s alignment with PTC. The correlation between participants’ ages and their questionnaire scores validates the effectiveness of the annotation guidelines.
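The age-versus-score check described above can be illustrated with a small sketch. It computes a Pearson correlation coefficient; whether the paper uses Pearson or another statistic is not stated in this summary, so that choice is an assumption, and the ages and scores below are made-up placeholders rather than data from the study.

```python
import math

def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length sequences."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two equal-length sequences with at least 2 items")
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined for a constant sequence")
    return cov / math.sqrt(var_x * var_y)

# Hypothetical participants: illustrative ages and questionnaire accuracies.
ages = [6, 8, 10, 12, 14, 16]
scores = [0.35, 0.48, 0.55, 0.70, 0.78, 0.90]

r = pearson(ages, scores)
print(f"age/score correlation: {r:.3f}")
```

A coefficient close to 1 would indicate that older participants score higher, which is the pattern the benchmark needs in order to be consistent with PTC.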

Calibrated Cognitive Age Mapping Function

A mapping function is constructed to relate COGLM results to cognitive age, ensuring accurate assessment of cognitive abilities.
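This summary does not give the form of the mapping function, so the following is only a sketch of one plausible approach: piecewise-linear interpolation between calibration points taken from human test results. The function name `make_age_mapper` and the calibration values are hypothetical.

```python
from bisect import bisect_right

def make_age_mapper(calibration):
    """Build a score -> cognitive-age function by piecewise-linear interpolation.

    `calibration` is a list of (score, age) pairs, e.g. the mean score of
    each human age group. Scores outside the calibrated range are clamped.
    """
    points = sorted(calibration)
    if len(points) < 2:
        raise ValueError("need at least two calibration points")
    scores = [s for s, _ in points]
    ages = [a for _, a in points]
    if any(s0 == s1 for s0, s1 in zip(scores, scores[1:])):
        raise ValueError("calibration scores must be distinct")

    def to_age(score):
        if score <= scores[0]:
            return float(ages[0])
        if score >= scores[-1]:
            return float(ages[-1])
        i = bisect_right(scores, score)  # first calibration score above `score`
        s0, s1 = scores[i - 1], scores[i]
        a0, a1 = ages[i - 1], ages[i]
        return a0 + (a1 - a0) * (score - s0) / (s1 - s0)

    return to_age

# Hypothetical calibration points (score, age); not values from the paper.
to_age = make_age_mapper([(0.4, 6), (0.6, 10), (0.9, 20)])
print(to_age(0.5))
```

Clamping at the ends is a design choice made here for simplicity: a model scoring above the oldest calibrated group is reported at that group's age rather than extrapolated.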

Experimental Design

Experimental Setup

Models

The study evaluates several popular LLM architectures, including:

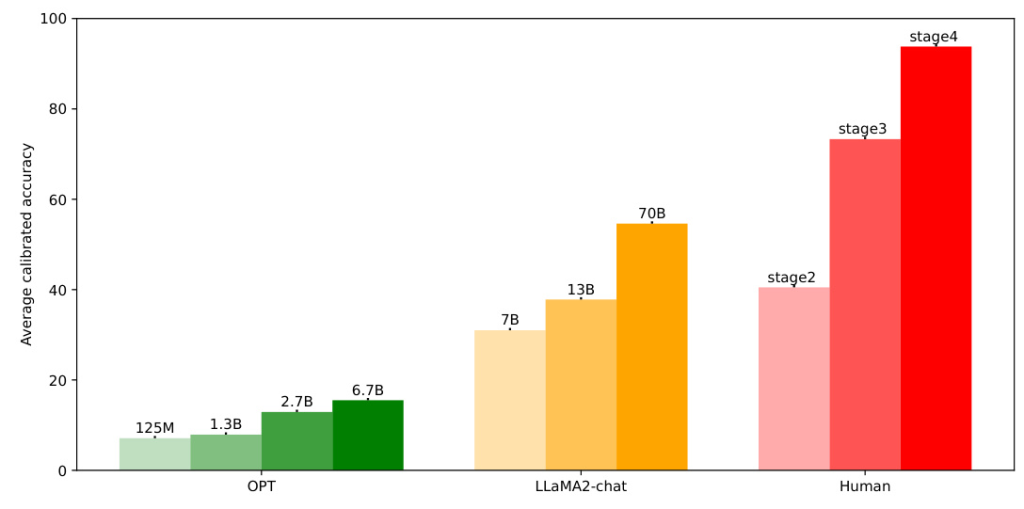

– OPT series: Models with sizes of 125M, 1.3B, 2.7B, and 6.7B.

– Llama-2 series: Models with scales of 7B, 13B, and 70B.

– GPT-3.5-Turbo and GPT-4: State-of-the-art models whose training and architecture details are undisclosed.

Evaluation

Different evaluation methods are used for text-completion and chat-completion models. For text-completion models, the option with the highest generation probability is selected, while chat-completion models are instructed to provide a single answer with explanations.
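The two scoring paths can be sketched as follows. This is a minimal illustration, not the authors' evaluation code: it assumes the per-option log-probabilities and the chat reply have already been obtained from a model, and the helper names (`pick_by_logprob`, `parse_chat_answer`, `accuracy`) are hypothetical.

```python
import re

def pick_by_logprob(option_logprobs):
    """Text-completion path: return the option label with the highest log-probability."""
    return max(option_logprobs, key=option_logprobs.get)

def parse_chat_answer(reply, labels="ABCD"):
    """Chat-completion path: read the option letter at the start of the reply.

    Accepts forms like "B", "B.", "(B) because ...". Returns None when the
    reply does not start with a standalone option letter. A reply such as
    "A ball is ..." would be misread as option A, so real prompts should
    ask the model to put the letter first.
    """
    match = re.match(rf"\s*\(?([{re.escape(labels)}])\)?(?=[\s.:,]|$)", reply)
    return match.group(1) if match else None

def accuracy(predictions, gold):
    """Fraction of predictions that equal the gold label."""
    if len(predictions) != len(gold) or not gold:
        raise ValueError("predictions and gold must be non-empty and equal length")
    return sum(p == g for p, g in zip(predictions, gold)) / len(gold)

# Made-up log-probabilities and reply, for illustration only.
completion_choice = pick_by_logprob({"A": -2.3, "B": -0.7, "C": -1.9, "D": -3.1})
chat_choice = parse_chat_answer("(C) The object still exists when it is hidden.")
print(completion_choice, chat_choice)
```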

Main Results

The study finds that:

1. Advanced LLMs, such as GPT-4, have developed cognitive abilities comparable to those of a 20-year-old human.

2. Parameter count and the optimization objective are the key factors influencing cognitive abilities.

3. There is a positive correlation between cognitive levels and performance on downstream tasks.

Results and Analysis

Key Factors Affecting Cognitive Abilities

Parameter Size

The study shows that cognitive abilities improve as the number of model parameters grows, consistent with previous findings.

Optimization Objective

Models fine-tuned for dialogue (e.g., Llama-2-chat-70B) outperform their text-completion counterparts, suggesting that learning to chat with humans enhances cognitive abilities.

Impact of Advanced Technologies

Chain-of-Thought (CoT)

CoT does not significantly improve cognitive abilities, which suggests that these abilities are inherent to LLMs and not easily enhanced through multi-step reasoning.

Self-Consistency (SC)

SC likewise brings only marginal improvements, highlighting the challenge of enhancing cognitive abilities without external stimuli.
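For readers unfamiliar with self-consistency, the core idea is to sample several answers to the same question and keep the most frequent one. The sketch below shows only that voting step, under the assumption that the sampled answers have already been parsed into option labels; the function name `self_consistency_vote` is hypothetical.

```python
from collections import Counter

def self_consistency_vote(sampled_answers):
    """Return the most frequent answer among the samples.

    Unparseable samples (None) are ignored. Ties are broken in favour of
    the answer that appeared first. Returns None if nothing was parseable.
    """
    answers = [a for a in sampled_answers if a is not None]
    if not answers:
        return None
    return Counter(answers).most_common(1)[0][0]

# Five hypothetical samples for one multiple-choice question.
final_answer = self_consistency_vote(["B", "A", "B", None, "C"])
print(final_answer)
```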

Cognitive Ability and Downstream Performance

The study finds that advanced cognitive abilities significantly impact performance on downstream tasks, with higher cognitive abilities correlating with better task performance.

Potential Applications

The study explores the use of cognitive chain-of-thought (COC) from advanced LLMs to improve the performance of early-aged LLMs, showing significant improvements in most cognitive abilities.

Overall Conclusion

The study introduces COGLM, a benchmark based on Piaget’s Theory of Cognitive Development, to assess the cognitive abilities of LLMs. Key findings include:

1. Advanced LLMs such as GPT-4 have developed cognitive abilities comparable to those of a 20-year-old human.

2. Parameter size and optimization objectives are crucial factors influencing cognitive abilities.

3. Cognitive levels positively correlate with performance on downstream tasks.

These insights provide a novel understanding of the emergence of abilities in LLMs and guide future development of advanced LLM capabilities.

Limitations and Future Work

The study acknowledges that it does not consider the performance of LLMs at different training stages, which will be explored in future research.

Ethics Statement

The dataset used in the study is free from harmful or offensive content, and participants’ privacy is protected through anonymization and informed consent.

This post has summarized the study “CogLM: Tracking Cognitive Development of Large Language Models,” covering its methodology, findings, and implications for the future development of LLMs.