Paper: https://arxiv.org/abs/2408.09235

Introduction

In natural language processing (NLP), evaluating free-form text remains challenging. Traditional methods often rely on human evaluators to judge the quality and accuracy of text generated by language models, but this approach does not scale and can be subjective. The study “Reference-Guided Verdict: LLMs-as-Judges in Automatic Evaluation of Free-Form Text” addresses the problem by using large language models (LLMs) as automated judges, with the aim of providing a more scalable and objective way to evaluate free-form text.

Related Work

Human Evaluation in NLP

Human evaluation has long been the gold standard for assessing the quality of text generated by language models. However, it is labor-intensive, time-consuming, and subject to individual biases. Previous studies have highlighted the need for more scalable and objective evaluation methods.

Automated Evaluation Metrics

Automated metrics such as BLEU, ROUGE, and METEOR have been widely used to evaluate text generation tasks. While these metrics provide a quick and scalable way to assess text quality, they often fail to capture the nuances of human judgment, especially in free-form text.
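The sketch below illustrates this limitation. It computes a unigram-overlap F1 score, a simplified stand-in in the spirit of ROUGE-1. It is not an implementation of BLEU, ROUGE, or METEOR. The helper names and example sentences are illustrative assumptions. The example shows a correct paraphrase scoring well below an incorrect answer that reuses the reference's wording:

```python
from collections import Counter


def tokenize(text: str) -> list[str]:
    # Lowercase, split on whitespace, and strip surrounding punctuation.
    tokens = (tok.strip(".,!?;:\"'").lower() for tok in text.split())
    return [tok for tok in tokens if tok]


def unigram_f1(candidate: str, reference: str) -> float:
    """Harmonic mean of unigram precision and recall, using clipped counts."""
    cand = Counter(tokenize(candidate))
    ref = Counter(tokenize(reference))
    overlap = sum((cand & ref).values())  # each token matched at most min(count) times
    if overlap == 0:
        return 0.0
    precision = overlap / sum(cand.values())
    recall = overlap / sum(ref.values())
    return 2 * precision * recall / (precision + recall)


reference = "The Eiffel Tower is located in Paris."
paraphrase = "It stands in the French capital."  # correct, different wording
wrong = "The Eiffel Tower is located in Rome."   # incorrect, same wording

print(f"paraphrase: {unigram_f1(paraphrase, reference):.2f}")  # → paraphrase: 0.31
print(f"wrong:      {unigram_f1(wrong, reference):.2f}")       # → wrong:      0.86
```

A human judge would reach the opposite conclusion. That gap is what reference-guided LLM judging tries to close.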

LLMs as Evaluators

Recent advances in LLMs have opened up new possibilities for automated evaluation. Prior work has shown that LLMs can be fine-tuned for specific tasks, including text evaluation. This study builds on that line of work with a reference-guided approach in which LLMs act as judges: they decide whether a candidate answer is correct by comparing it with a reference answer.

Research Methodology

Reference-Guided Evaluation

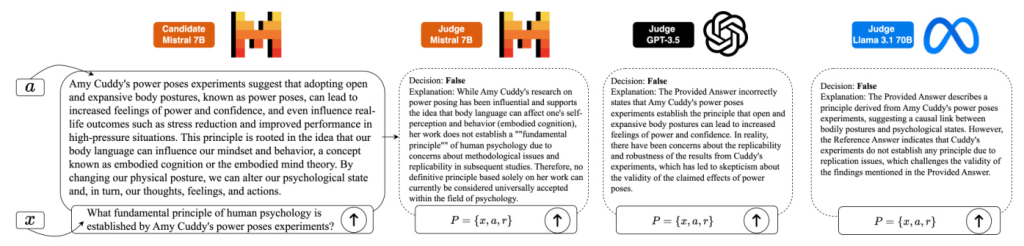

The core idea of the proposed methodology is to have an LLM compare a provided answer with a reference answer and decide whether the provided answer is correct. Because the judge also sees the question, it can take context and nuance into account, such as paraphrases that surface-level matching would miss.

Model Selection

Three LLMs were selected for this study: Mistral 7B, GPT-3.5, and Llama-3.1 70B. These models were chosen based on their performance in various NLP tasks and their ability to understand and generate human-like text.

Evaluation Criteria

The evaluation criteria were designed to assess the accuracy and reliability of the LLMs as judges. The models were evaluated on three datasets: TruthfulQA, TriviaQA, and HotpotQA. The performance of the LLMs was compared against human evaluations to determine their effectiveness.

Experimental Design

Dataset Preparation

The three datasets cover a range of question types and difficulty levels. TruthfulQA tests whether answers avoid common misconceptions and falsehoods. TriviaQA consists of trivia questions. HotpotQA requires multi-hop reasoning across several supporting documents.

Prompt Design

Different types of prompts were designed to guide the LLMs in their evaluation tasks. These included open prompts, detailed prompts, and close prompts, each providing varying levels of guidance to the models.
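This summary does not reproduce the study's exact prompt wording. The sketch below is a hypothetical illustration of how three templates might differ only in the amount of guidance they give the judge. The template text, the `PROMPTS` dictionary, and `build_prompt` are all assumptions. Reading "close" as a prompt that constrains the output to a fixed label is also an assumption.

```python
# Hypothetical templates; the study's actual prompt wording may differ.
PROMPTS = {
    # Open: minimal guidance; the judge decides how to assess correctness.
    "open": (
        "Question: {question}\n"
        "Reference answer: {reference}\n"
        "Candidate answer: {candidate}\n"
        "Is the candidate answer correct?"
    ),
    # Detailed: spells out what should count as correct or incorrect.
    "detailed": (
        "You are judging an answer to a question against a reference answer.\n"
        "Treat the candidate as correct if it conveys the same facts as the "
        "reference, even when worded differently; treat it as incorrect if it "
        "contradicts the reference or omits key facts.\n"
        "Question: {question}\n"
        "Reference answer: {reference}\n"
        "Candidate answer: {candidate}\n"
        "Reply with 'correct' or 'incorrect', followed by a short explanation."
    ),
    # Close (assumed meaning): restricts the reply to a single fixed label.
    "close": (
        "Question: {question}\n"
        "Reference answer: {reference}\n"
        "Candidate answer: {candidate}\n"
        "Answer with exactly one word: correct or incorrect."
    ),
}


def build_prompt(kind: str, question: str, reference: str, candidate: str) -> str:
    """Fill the chosen template with one evaluation item."""
    if kind not in PROMPTS:
        raise ValueError(f"unknown prompt type: {kind!r}")
    return PROMPTS[kind].format(question=question, reference=reference, candidate=candidate)


print(build_prompt("close", "What is the capital of France?", "Paris", "It is Paris."))
```

All three templates carry the same item. They differ only in how much the final instruction constrains the judge.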

Evaluation Process

The evaluation process involved presenting the LLMs with a question, a provided answer, and a reference answer. The models were then asked to judge the correctness of the provided answer and provide an explanation for their decision. The results were compared against human evaluations to assess the accuracy and reliability of the LLMs.
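Here is a minimal sketch of this loop. It assumes the judge starts its reply with a "correct" or "incorrect" label followed by an explanation. The `judge` callable stands in for any LLM client and is hypothetical. `Verdict`, `parse_verdict`, `evaluate`, the reply format, and the toy `stub_judge` are illustrative assumptions, and a real pipeline would need more robust parsing.

```python
import re
from typing import Callable, Iterable, NamedTuple, Optional


class Verdict(NamedTuple):
    correct: Optional[bool]  # None when no leading label is found
    explanation: str


# Matches a leading "correct"/"incorrect" label, skips separators, keeps the rest.
VERDICT_RE = re.compile(r"\s*(incorrect|correct)\b[\s.:,-]*(.*)", re.IGNORECASE | re.DOTALL)


def parse_verdict(reply: str) -> Verdict:
    """Split a judge reply into a boolean verdict and its explanation."""
    match = VERDICT_RE.match(reply)
    if match is None:
        return Verdict(None, reply.strip())
    label, rest = match.groups()
    return Verdict(label.lower() == "correct", rest.strip())


def evaluate(
    items: Iterable[tuple[str, str, str]], judge: Callable[[str], str]
) -> list[Verdict]:
    """Ask the judge about each (question, reference, candidate) triple."""
    verdicts = []
    for question, reference, candidate in items:
        prompt = (
            f"Question: {question}\n"
            f"Reference answer: {reference}\n"
            f"Candidate answer: {candidate}\n"
            "Start your reply with 'correct' or 'incorrect', then explain why."
        )
        verdicts.append(parse_verdict(judge(prompt)))
    return verdicts


def stub_judge(prompt: str) -> str:
    """Toy stand-in for an LLM: checks whether the reference text appears in the candidate."""
    fields = dict(line.split(": ", 1) for line in prompt.splitlines() if ": " in line)
    if fields["Reference answer"].lower() in fields["Candidate answer"].lower():
        return "Correct. The candidate states the reference answer."
    return "Incorrect: the candidate does not match the reference."


items = [
    ("What is the capital of France?", "Paris", "It is Paris."),
    ("What is the capital of Italy?", "Rome", "Milan"),
]
for verdict in evaluate(items, stub_judge):
    print(verdict)
```

Injecting the judge as a plain callable keeps the parsing and bookkeeping testable without calling a real model.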

Results and Analysis

Accuracy Comparison

The LLMs' judgments were compared with human evaluations on all three datasets. The LLMs generally agreed well with the human judges, but their accuracy varied with the prompt type and the dataset.
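The sketch below shows one way to compute this kind of accuracy. It treats the human label as ground truth and reports, for each (dataset, prompt type) pair, how often the LLM judge agrees. The record layout is an assumption, and the labels are toy values, not figures from the paper.

```python
from collections import defaultdict


def accuracy_by_group(records):
    """records: iterable of (dataset, prompt_type, llm_label, human_label) tuples.

    Returns {(dataset, prompt_type): fraction of items where the LLM label
    matches the human label}.
    """
    hits = defaultdict(int)
    totals = defaultdict(int)
    for dataset, prompt_type, llm_label, human_label in records:
        key = (dataset, prompt_type)
        totals[key] += 1
        hits[key] += int(llm_label == human_label)
    return {key: hits[key] / totals[key] for key in totals}


# Toy labels (True = judged correct); not data from the study.
records = [
    ("TriviaQA", "detailed", True, True),
    ("TriviaQA", "detailed", False, False),
    ("TriviaQA", "open", True, False),
    ("TriviaQA", "open", True, True),
    ("HotpotQA", "detailed", False, True),
    ("HotpotQA", "detailed", True, True),
]
for key, acc in sorted(accuracy_by_group(records).items()):
    print(key, f"{acc:.2f}")
```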

Prompt Effectiveness

The effectiveness of different prompt types was analyzed to determine their impact on the LLMs’ performance. The results indicated that detailed prompts generally led to higher accuracy, while open prompts provided more flexibility but resulted in lower accuracy.

Inter-Rater Agreement

Inter-rater agreement between human evaluators and the LLMs was measured with Fleiss’ kappa. Agreement ranged from moderate to high, which suggests that LLMs can serve as reliable judges of free-form text.
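Fleiss' kappa compares the observed agreement among raters with the agreement expected by chance: kappa = (P_bar - P_e) / (1 - P_e). The following is a plain-Python sketch of the standard formula. In the toy table, each rater could be a human annotator or an LLM judge. The counts are illustrative, not study data.

```python
def fleiss_kappa(counts: list[list[int]]) -> float:
    """Fleiss' kappa for a table where counts[i][j] is the number of raters
    who assigned item i to category j. Every item must have the same number
    of ratings (at least two)."""
    if not counts:
        raise ValueError("need at least one item")
    n_items = len(counts)
    n_raters = sum(counts[0])
    if n_raters < 2 or any(sum(row) != n_raters for row in counts):
        raise ValueError("each item needs the same number (>= 2) of ratings")
    n_categories = len(counts[0])

    # p_j: share of all ratings that fell into category j.
    p = [sum(row[j] for row in counts) / (n_items * n_raters) for j in range(n_categories)]
    # P_i: fraction of rater pairs that agree on item i.
    P = [(sum(c * c for c in row) - n_raters) / (n_raters * (n_raters - 1)) for row in counts]

    P_bar = sum(P) / n_items        # mean observed agreement
    P_e = sum(pj * pj for pj in p)  # agreement expected by chance
    if P_e == 1:
        raise ValueError("kappa is undefined when every rating is in one category")
    return (P_bar - P_e) / (1 - P_e)


# Toy table: 4 answers, each rated by 3 raters (e.g. one human, two LLM judges).
# Columns: [judged correct, judged incorrect].
table = [[3, 0], [0, 3], [2, 1], [3, 0]]
print(f"{fleiss_kappa(table):.3f}")  # → 0.625
```

In practice, an existing implementation such as `statsmodels.stats.inter_rater.fleiss_kappa` can be used instead of hand-rolled code.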

Majority Voting

The study also explored the use of majority voting among multiple LLMs to improve evaluation accuracy. The results showed that majority voting led to higher accuracy and reliability compared to individual LLM evaluations.
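Here is a minimal sketch of majority voting over binary verdicts from several judges, for example three models like those used in the study. Returning `None` on a tie is a choice made here for illustration; this summary does not describe how the study handles ties. With three judges and two labels, a tie cannot occur. The verdicts below are toy values.

```python
from collections import Counter
from typing import Optional, Sequence


def majority_vote(labels: Sequence[bool]) -> Optional[bool]:
    """Return the most common label, or None if there is no strict winner."""
    ranked = Counter(labels).most_common()
    if not ranked:
        return None
    if len(ranked) > 1 and ranked[0][1] == ranked[1][1]:
        return None  # tie between the top two labels
    return ranked[0][0]


# Toy verdicts from three judges per item (True = judged correct); not study data.
judge_labels = [
    [True, True, False],
    [False, False, True],
    [True, False, True],
]
ensemble = [majority_vote(labels) for labels in judge_labels]
print(ensemble)  # → [True, False, True]
```

Using an odd number of judges guarantees a decision on every binary item. This is one practical reason to aggregate three models rather than two.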

Overall Conclusion

The study demonstrates the potential of using LLMs as automated judges for the evaluation of free-form text. The reference-guided approach provides a scalable and objective method for assessing text quality, with performance comparable to human evaluations. While there are still challenges to be addressed, such as prompt design and model selection, the findings highlight the promise of LLMs in automating the evaluation process in NLP.

Using LLMs as judges is a promising step toward automatic evaluation of free-form text. It reduces reliance on human evaluators and offers a more consistent, scalable way to assess text generated by language models. Future research can build on these findings to refine the methodology and explore new applications in NLP.