Authors:

Zhiwei Li, Guodong Long, Tianyi Zhou, Jing Jiang, Chengqi Zhang

Paper:

https://arxiv.org/abs/2408.08931

Introduction

In the digital age, recommendation systems have become indispensable tools for filtering online information and helping users discover products, content, and services that match their preferences. Collaborative Filtering (CF) is widely used to generate personalized recommendations by modeling the relationships between users and items from user interaction data. However, with the enforcement of data privacy laws such as the GDPR, safeguarding privacy has become increasingly critical. Traditional CF methods typically require centralizing user data on servers for processing, a practice that is increasingly difficult to justify in today's privacy-conscious environment.

To address this challenge, Federated Collaborative Filtering (FedCF) combines the principles of federated learning (FL) and CF. FedCF trains models on users' devices, so private data never has to be uploaded to a central server, which protects data privacy while still providing recommendation services. Existing FedCF methods typically combine distributed CF algorithms with privacy-preserving mechanisms and store each user's personalized information in a user embedding vector. However, a single user embedding is usually too limited to capture fine-grained personalization across heterogeneous clients.

This paper proposes a novel personalized FedCF method that stores users' personalized information in both a latent variable and a neural model. Specifically, the modeling of user knowledge is decomposed into two encoders: one captures knowledge shared across clients, and the other captures personalized knowledge. A personalized gating network then balances personalization and generalization between the global and local encoders. Finally, to train this framework effectively, the CF problem is modeled as a specialized Variational AutoEncoder (VAE) task that combines reconstruction of the user interaction vector with prediction of its missing values.

Related Work

Personalized Federated Learning

Standard federated learning methods, such as FedAvg, learn a single global model on the server while keeping data local to each device. However, these methods lose effectiveness on non-IID data. Personalized Federated Learning (PFL) addresses this by learning a personalized model for each device, usually still relying on server-side parameter aggregation. Several studies achieve PFL by adding regularization terms between the local and global models. Other work learns personalized models by encouraging local models to stay close to one another via variance metrics, or by clustering users into groups and selecting representative users for training.
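To make the aggregation step concrete, here is a minimal pure-Python sketch of FedAvg-style weighted averaging, together with one example of a local-global regularizer: the proximal term used by FedProx. The function names and the flat-list parameter representation are illustrative assumptions, not taken from the paper or from the specific PFL works it cites.

```python
from typing import List

Vector = List[float]


def fedavg(client_params: List[Vector], client_sizes: List[int]) -> Vector:
    """Average client parameter vectors, weighting each client by its sample count."""
    total = sum(client_sizes)
    dim = len(client_params[0])
    global_params = [0.0] * dim
    for params, n in zip(client_params, client_sizes):
        for j in range(dim):
            global_params[j] += (n / total) * params[j]
    return global_params


def proximal_penalty(local: Vector, global_params: Vector, mu: float) -> float:
    """FedProx-style term (mu / 2) * ||local - global||^2, added to a client's local loss."""
    return 0.5 * mu * sum((a - b) ** 2 for a, b in zip(local, global_params))


if __name__ == "__main__":
    # Two clients: the second holds three times as much data as the first.
    merged = fedavg([[1.0, 2.0], [3.0, 4.0]], client_sizes=[1, 3])
    print(merged)  # [2.5, 3.5]
    print(proximal_penalty([1.0, 2.0], merged, mu=0.1))
```

A larger `mu` pulls each personalized model more strongly toward the global one; `mu = 0` recovers plain local training.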

Federated Collaborative Filtering

As privacy protection becomes increasingly important, many studies have focused on FedCF. Model-based personalized FedCF uses users' historical behavior data, such as ratings, clicks, and purchases, to learn latent factors that represent user and item characteristics. Early works focused primarily on modeling user preferences, whereas recent works implement dual personalization, personalizing both user preferences and item information. However, because most existing work is built on matrix factorization, which scores a user-item pair with an inner product of latent factors, its ability to model complex nonlinear relationships in data is limited.
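The following sketch illustrates the matrix-factorization setting under common assumptions: each client keeps its user vector on the device, predicts a rating as the inner product of user and item factors, and uploads only item gradients for the server to apply. The function `client_mf_step` and its squared-error loss with L2 regularization are hypothetical choices for illustration, not the protocol of a specific FedCF paper. Note that the prediction is linear in each factor, which is the limitation the paragraph above points to.

```python
from typing import Dict, List, Tuple

Vector = List[float]


def dot(a: Vector, b: Vector) -> float:
    return sum(x * y for x, y in zip(a, b))


def client_mf_step(
    user_vec: Vector,
    item_vecs: Dict[int, Vector],
    ratings: Dict[int, float],
    lr: float = 0.01,
    reg: float = 0.01,
) -> Tuple[Vector, Dict[int, Vector]]:
    """One local SGD pass of matrix factorization on a single (hypothetical) client.

    The predicted rating for item i is dot(user_vec, item_vecs[i]). The user
    vector is updated locally and never uploaded; the returned item gradients
    are what the client would send to the server.
    """
    item_grads: Dict[int, Vector] = {}
    for i, r in ratings.items():
        q = item_vecs[i]
        err = r - dot(user_vec, q)
        # Gradients of 0.5 * err**2 + 0.5 * reg * (||p||**2 + ||q||**2)
        grad_p = [-err * qk + reg * pk for pk, qk in zip(user_vec, q)]
        item_grads[i] = [-err * pk + reg * qk for pk, qk in zip(user_vec, q)]
        user_vec = [pk - lr * gk for pk, gk in zip(user_vec, grad_p)]
    return user_vec, item_grads


if __name__ == "__main__":
    p = [0.1, 0.1]
    Q = {0: [0.1, 0.2], 1: [0.3, 0.1], 2: [0.2, 0.2]}
    ratings = {0: 1.0, 2: 0.0}  # this client has rated items 0 and 2 only
    for _ in range(300):
        p, grads = client_mf_step(p, Q, ratings, lr=0.1)
        # Server side: apply the uploaded item gradients.
        for i, g in grads.items():
            Q[i] = [qk - 0.1 * gk for qk, gk in zip(Q[i], g)]
    print(round(dot(p, Q[0]), 3), round(dot(p, Q[2]), 3))
```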

Variational Autoencoders

VAEs have become increasingly important in recommendation systems due to their ability to model complex data distributions and handle data sparsity issues. VAEs learn latent representations of user-item interaction data by mapping high-dimensional data to a low-dimensional latent space, which effectively captures complex patterns in user preferences and item characteristics. Recent research has focused on enhancing VAE models to improve recommendation performance, including combining VAEs with Generative Adversarial Networks, integrating VAEs with more effective priors, and enhancing VAEs with contrastive learning techniques.
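As a minimal illustration of the mapping to a low-dimensional latent space, the sketch below encodes an interaction vector into the mean and log-variance of a diagonal Gaussian and draws one sample with the reparameterization trick. A single linear layer per output is an assumption made for brevity; practical recommender VAEs such as Mult-VAE use deeper networks, input normalization, and dropout.

```python
import math
import random
from typing import List, Tuple

Vector = List[float]
Matrix = List[List[float]]


def linear(W: Matrix, b: Vector, x: Vector) -> Vector:
    """Compute W @ x + b, with W stored as a list of rows."""
    return [sum(w * xi for w, xi in zip(row, x)) + bj for row, bj in zip(W, b)]


def encode(
    x: Vector, W_mu: Matrix, b_mu: Vector, W_lv: Matrix, b_lv: Vector, rng: random.Random
) -> Tuple[Vector, Vector, Vector]:
    """Map an interaction vector (one entry per item) to a low-dimensional Gaussian.

    Returns (mu, logvar, z) with z = mu + exp(0.5 * logvar) * eps, eps ~ N(0, I).
    This reparameterization keeps z differentiable with respect to mu and logvar.
    """
    mu = linear(W_mu, b_mu, x)
    logvar = linear(W_lv, b_lv, x)
    z = [m + math.exp(0.5 * lv) * rng.gauss(0.0, 1.0) for m, lv in zip(mu, logvar)]
    return mu, logvar, z


if __name__ == "__main__":
    rng = random.Random(0)
    n_items, latent_dim = 6, 2
    W_mu = [[rng.uniform(-0.1, 0.1) for _ in range(n_items)] for _ in range(latent_dim)]
    W_lv = [[rng.uniform(-0.1, 0.1) for _ in range(n_items)] for _ in range(latent_dim)]
    x = [1.0, 0.0, 1.0, 0.0, 0.0, 1.0]  # 6 items compressed to 2 latent dimensions
    mu, logvar, z = encode(x, W_mu, [0.0] * latent_dim, W_lv, [0.0] * latent_dim, rng)
    print(len(x), "->", len(z))
```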

Research Methodology

Framework

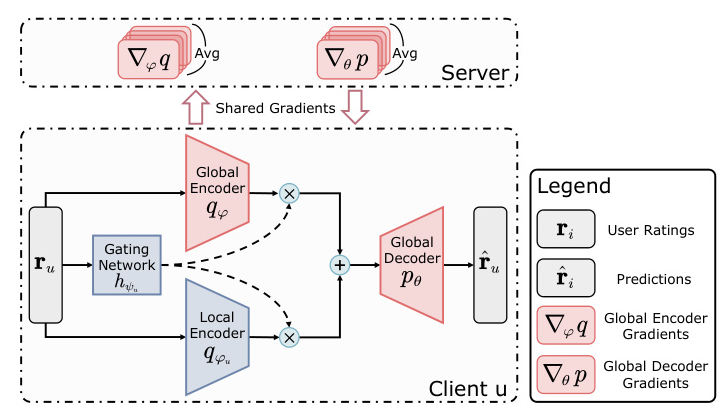

The proposed framework, FedDAE, feeds the interaction vector \( r_u \) into each of three networks: the global encoder \( q_\phi \), the local encoder \( q_{\phi_u} \), and the gating network \( h_{\psi_u} \). It multiplies the outputs of the two encoders by the weights that \( h_{\psi_u} \) generates from the user's interaction data and sums the results, which achieves adaptive additive personalization. The combined output is then passed to the global decoder \( p_\theta \) to reconstruct the user's interaction data \( \hat{r}_u \).
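The forward pass described above can be sketched in a few lines of pure Python. This is an illustrative sketch under stated assumptions, not the authors' implementation: every network is a single linear layer, the encoders return only posterior means (sampling is omitted), the gate turns two logits into weights with a softmax, and the decoder ends in a softmax over items as in Mult-VAE.

```python
import math
from typing import List, Tuple

Vector = List[float]
Matrix = List[List[float]]
Layer = Tuple[Matrix, Vector]  # (W, b)


def linear(W: Matrix, b: Vector, x: Vector) -> Vector:
    return [sum(w * xi for w, xi in zip(row, x)) + bj for row, bj in zip(W, b)]


def softmax(v: Vector) -> Vector:
    m = max(v)
    e = [math.exp(a - m) for a in v]
    s = sum(e)
    return [a / s for a in e]


def feddae_forward(r_u: Vector, global_enc: Layer, local_enc: Layer,
                   gate: Layer, decoder: Layer) -> Tuple[Vector, Tuple[float, float]]:
    """Hypothetical one-layer version of the FedDAE forward pass for one user."""
    z_global = linear(*global_enc, r_u)        # shared encoder q_phi (mean only)
    z_local = linear(*local_enc, r_u)          # personalized encoder q_phi_u (mean only)
    w1, w2 = softmax(linear(*gate, r_u))       # gate h_psi_u -> [w_u1, w_u2]
    z_u = [w1 * g + w2 * l for g, l in zip(z_global, z_local)]  # adaptive additive mix
    r_hat = softmax(linear(*decoder, z_u))     # global decoder -> scores over items
    return r_hat, (w1, w2)


if __name__ == "__main__":
    n = 4
    r_u = [1.0, 0.0, 1.0, 0.0]
    ge = ([[0.1, 0.2, 0.3, 0.4], [0.0, 0.1, 0.0, 0.1]], [0.0, 0.0])
    le = ([[0.5, 0.0, 0.0, 0.0], [0.0, 0.0, 0.5, 0.0]], [0.0, 0.0])
    gt = ([[0.0] * n, [0.0] * n], [0.0, 0.0])  # untrained gate: equal weights
    dec = ([[1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [0.0, 0.0]], [0.0] * n)
    r_hat, weights = feddae_forward(r_u, ge, le, gt, dec)
    print([round(v, 3) for v in r_hat], weights)
```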

Dual Encoders

The dual-encoder mechanism is proposed to separately preserve shared knowledge across clients and client-specific personalized knowledge. Specifically, the encoder \( q_{\Phi_u} \) is implemented through a dual-encoder structure, consisting of a global encoder \( q_\phi \) and a local encoder \( q_{\phi_u} \) for each user \( u \). The parameters \( \phi \) and \( \phi_u \) capture the globally shared representation of item features and the personalized representation specific to user \( u \), respectively.
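One practical consequence of the dual-encoder split is which parameters a client synchronizes with the server. The sketch below assumes, consistent with the notation, that the global encoder \( \phi \) and the global decoder \( \theta \) are shared while the local encoder \( \phi_u \) and the gating network \( \psi_u \) stay on the device. The class and field names are hypothetical, parameters are stored as plain lists, and the server averages parameters directly for brevity rather than aggregating gradients.

```python
from dataclasses import dataclass
from typing import Dict, List

Params = Dict[str, List[float]]


@dataclass
class ClientModel:
    """Parameters held by one client u (hypothetical layout)."""
    global_encoder: Params  # phi: shared across clients
    decoder: Params         # theta: shared global decoder
    local_encoder: Params   # phi_u: personalized, stays on the device
    gate: Params            # psi_u: personalized gate, stays on the device

    def shared_part(self) -> Dict[str, Params]:
        """The only modules this client exposes to the server."""
        return {"global_encoder": self.global_encoder, "decoder": self.decoder}

    def load_shared(self, shared: Dict[str, Params]) -> None:
        """Overwrite the shared modules with the server's aggregate; local ones are untouched."""
        self.global_encoder = shared["global_encoder"]
        self.decoder = shared["decoder"]


def average_shared(shared_list: List[Dict[str, Params]]) -> Dict[str, Params]:
    """Server side: unweighted element-wise mean of every shared tensor."""
    n = len(shared_list)
    return {
        module: {
            name: [sum(s[module][name][k] for s in shared_list) / n for k in range(len(values))]
            for name, values in tensors.items()
        }
        for module, tensors in shared_list[0].items()
    }
```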

Gating Network

To combine the global and local representations effectively, a gating network \( h_{\psi_u}(r_u) = [\omega_{u1}, \omega_{u2}] \) dynamically assigns weights \( \omega_{u1} \) and \( \omega_{u2} \) to the outputs of the global encoder \( q_\phi \) and the local encoder \( q_{\phi_u} \) based on the data \( r_u \). This allows FedDAE to adaptively adjust the balance between shared and personalized information according to each client's interaction data.
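The gate can be sketched as a small network that maps \( r_u \) to two non-negative weights summing to one. The two-layer tanh network and the softmax normalization are assumptions for illustration; the summary does not specify the gate's architecture or how its outputs are normalized. In the usage example, hand-picked parameters give two users with different interaction vectors different weights.

```python
import math
from typing import List

Vector = List[float]
Matrix = List[List[float]]


def gate_weights(r_u: Vector, W1: Matrix, b1: Vector, W2: Matrix, b2: Vector) -> Vector:
    """Hypothetical two-layer gate h_psi_u: r_u -> [w_u1, w_u2], with w_u1 + w_u2 = 1."""
    hidden = [math.tanh(sum(w * x for w, x in zip(row, r_u)) + b) for row, b in zip(W1, b1)]
    logits = [sum(w * h for w, h in zip(row, hidden)) + b for row, b in zip(W2, b2)]
    m = max(logits)
    exps = [math.exp(v - m) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]


def mix(z_global: Vector, z_local: Vector, weights: Vector) -> Vector:
    """Weighted sum w_u1 * z_global + w_u2 * z_local."""
    w1, w2 = weights
    return [w1 * g + w2 * l for g, l in zip(z_global, z_local)]


if __name__ == "__main__":
    W1, b1 = [[1.0, 0.0, 0.0], [0.0, 1.0, 1.0]], [0.0, 0.0]
    W2, b2 = [[2.0, 0.0], [0.0, 2.0]], [0.0, 0.0]
    for r_u in ([1.0, 0.0, 0.0], [0.0, 1.0, 1.0]):
        w = gate_weights(r_u, W1, b1, W2, b2)
        print(r_u, [round(v, 3) for v in w], mix([1.0, 1.0], [0.0, 0.0], w))
```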

Reconstruction and Prediction

The VAE loss for the FedCF problem is defined as follows. The log-likelihood of \( r_u \) conditioned on \( z_u \) is modeled by a decoder function \( f_\theta \) with parameters \( \theta \); combining it with the variational distribution \( q_{\Phi_u}(z_u \mid r_u) \) gives the evidence lower bound (ELBO) on the \( u \)-th client:

\[ \mathcal{L}_\beta(r_u; \Phi_u, \theta) = \mathbb{E}_{q_{\Phi_u}(z_u \mid r_u)}\left[\log p_\theta(r_u \mid z_u)\right] - \beta \cdot \mathrm{KL}\left(q_{\Phi_u}(z_u \mid r_u) \,\|\, p_\theta(z_u)\right), \]

where \( \mathrm{KL}(\cdot \,\|\, \cdot) \) is the Kullback-Leibler divergence and \( \beta \) is a hyperparameter controlling the strength of the regularization. The model is trained by jointly maximizing the ELBO over all users, after which the decoder outputs the reconstructed interactions \( \hat{r}_u \) for each user \( u \).
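As a worked sketch of the objective, the code below computes the negative \( \beta \)-ELBO for one client, using the closed-form KL divergence between a diagonal Gaussian posterior and the prior. It assumes a standard normal prior \( \mathcal{N}(0, I) \), as in Mult-VAE; the summary writes the prior as \( p_\theta(z_u) \) without specifying its form. The expected log-likelihood is approximated by a single-sample value supplied by the caller, and the function names are hypothetical.

```python
import math
from typing import List

Vector = List[float]


def gaussian_kl(mu: Vector, logvar: Vector) -> float:
    """Closed-form KL( N(mu, diag(exp(logvar))) || N(0, I) )."""
    return 0.5 * sum(m * m + math.exp(lv) - lv - 1.0 for m, lv in zip(mu, logvar))


def neg_beta_elbo(log_likelihood: float, mu: Vector, logvar: Vector, beta: float) -> float:
    """Per-client loss to minimize: -(log p(r_u | z_u) - beta * KL).

    log_likelihood is a single-sample estimate of E_q[log p(r_u | z_u)].
    """
    return -log_likelihood + beta * gaussian_kl(mu, logvar)


if __name__ == "__main__":
    # A posterior equal to the prior costs nothing in KL ...
    print(gaussian_kl([0.0, 0.0], [0.0, 0.0]))  # 0.0
    # ... while shifting the mean by 1 in one dimension costs 0.5 nats, scaled by beta.
    print(neg_beta_elbo(-5.0, [1.0, 0.0], [0.0, 0.0], beta=0.2))
```

Smaller values of `beta` let the posterior drift further from the prior in exchange for better reconstruction.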

Experimental Design

Datasets

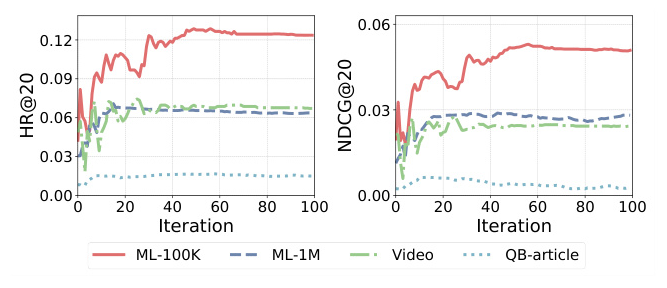

The performance of FedDAE was evaluated on four widely used datasets: MovieLens-100K (ML-100K), MovieLens-1M (ML-1M), Amazon-Instant-Video (Video), and QB-article. All four datasets are highly sparse: more than 90% of the possible user-item ratings are unobserved. The first three datasets contain explicit ratings from 1 to 5, while QB-article is an implicit feedback dataset that records user click behavior.
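The sparsity figure is simply the fraction of empty cells in the user-item matrix. The sketch below computes it for the standard public release of MovieLens-100K (943 users, 1,682 movies, 100,000 ratings); the paper's preprocessed version may differ, so this number is illustrative rather than the paper's statistic.

```python
def sparsity(n_users: int, n_items: int, n_observed: int) -> float:
    """Fraction of the user-item matrix that has no observed interaction."""
    return 1.0 - n_observed / (n_users * n_items)


if __name__ == "__main__":
    # Standard MovieLens-100K release: 943 users, 1,682 movies, 100,000 ratings.
    print(f"{sparsity(943, 1682, 100_000):.2%}")  # 93.70%
```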

Baselines

FedDAE is compared against several state-of-the-art methods in both centralized and federated settings:

– Mult-VAE: A variational autoencoder model that uses a multinomial distribution as the likelihood function.

– RecVAE: Improves the performance of Mult-VAE by introducing a composite prior distribution and an alternating training method.

– LightGCN: Enhances recommendation performance by simplifying the design of graph convolutional networks (GCNs).

– FedVAE: Extends Mult-VAE to the FL framework, achieving collaborative filtering by transmitting only model gradients between clients and the server.

– PFedRec: Introduces dual personalization into the FL framework, personalizing both user information and item features.

– FedRAP: Enhances the performance of recommendation systems by applying dual personalization of user and item information in federated learning.
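Because Mult-VAE (and FedVAE, which extends it) rests on a multinomial likelihood, a short sketch of that term may help: the decoder's logits are normalized into a probability distribution over all items, and each observed interaction contributes its log-probability. The function names are illustrative, and the multinomial normalizing constant is dropped, as is usual for training.

```python
import math
from typing import List


def log_softmax(logits: List[float]) -> List[float]:
    """Numerically stable log of softmax(logits)."""
    m = max(logits)
    log_z = m + math.log(sum(math.exp(v - m) for v in logits))
    return [v - log_z for v in logits]


def multinomial_log_likelihood(r_u: List[float], logits: List[float]) -> float:
    """sum_i r_ui * log pi_i, where pi = softmax(logits) spreads probability over all items.

    The multinomial normalizing constant does not depend on the model and is dropped.
    """
    return sum(r * lp for r, lp in zip(r_u, log_softmax(logits)))


if __name__ == "__main__":
    r_u = [1.0, 0.0, 1.0, 0.0]
    print(multinomial_log_likelihood(r_u, [0.0, 0.0, 0.0, 0.0]))  # uniform scores
    print(multinomial_log_likelihood(r_u, [3.0, 0.0, 3.0, 0.0]))  # mass on observed items
```

Since all items compete for a fixed probability budget, this likelihood rewards placing observed items above unobserved ones, which aligns it with ranking-style evaluation.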

Experimental Setting

For FedDAE, the learning rate \( \eta \) was tuned over a range of candidate values, and the Adam optimizer was used to update its parameters. For all methods, the latent embedding dimension was fixed to 256 and the training batch size to 2048. Methods with hidden layers used 3 layers. Federated methods used 10 local epochs, and no additional privacy protection measures were applied.
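The fixed settings above can be captured in a small configuration object. This is a hypothetical sketch: the field names are illustrative, and because the summary does not give the learning-rate range, the candidate values must be supplied by the caller.

```python
from dataclasses import dataclass
from typing import Iterable, List


@dataclass(frozen=True)
class ExperimentConfig:
    """Settings stated in the summary; only the learning rate varies between runs."""
    learning_rate: float          # tuned over a caller-supplied candidate range
    optimizer: str = "adam"
    latent_dim: int = 256
    batch_size: int = 2048
    num_layers: int = 3           # applies to methods with hidden layers
    local_epochs: int = 10        # applies to federated methods
    extra_privacy: bool = False   # no additional privacy mechanisms


def candidate_configs(learning_rates: Iterable[float]) -> List[ExperimentConfig]:
    """One config per candidate learning rate."""
    return [ExperimentConfig(learning_rate=lr) for lr in learning_rates]
```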

Results and Analysis

Comparison Analysis

Table 2 presents the results of all baseline methods and FedDAE on the four recommendation datasets: FedDAE outperforms all federated methods and comes close to the performance of the centralized methods. The authors attribute this to FedDAE's ability to model each user's data distribution adaptively and individually, retaining shared information about item features while better integrating user-specific item representations.

Ablation Study

To investigate the contribution of each component, a variant of FedDAE, named FedDAEw, was constructed. Instead of learned gate weights, it uses a fixed weight \( w \) for each client to weigh the output of the global encoder. FedDAEw with different fixed weights was compared to FedDAE on the ML-100K dataset. The results show that personalized information plays a crucial role in FedDAE: removing personalized information degrades recommendation performance more than removing shared information.
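The ablation's combination rule can be sketched as follows. The form \( w \cdot \text{global} + (1 - w) \cdot \text{local} \) is an assumption: the summary only states that a fixed per-client weight \( w \) weighs the global encoder's output. Under that assumption, \( w = 1 \) removes personalized information and \( w = 0 \) removes shared information, corresponding to the two cases contrasted in the ablation.

```python
from typing import List

Vector = List[float]


def fixed_weight_mix(z_global: Vector, z_local: Vector, w: float) -> Vector:
    """FedDAEw-style combination (assumed form): w * global + (1 - w) * local.

    Unlike FedDAE's gate, w is a constant that does not depend on r_u.
    w = 1.0 drops the personalized branch; w = 0.0 drops the shared branch.
    """
    if not 0.0 <= w <= 1.0:
        raise ValueError("w must lie in [0, 1]")
    return [w * g + (1.0 - w) * l for g, l in zip(z_global, z_local)]


if __name__ == "__main__":
    for w in (0.0, 0.25, 0.5, 0.75, 1.0):
        print(w, fixed_weight_mix([1.0, 2.0], [3.0, 4.0], w))
```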

Privacy-preservation Enhancement

The proposed framework adopts the decentralized architecture of the FL scheme, which reduces the risk of privacy leakage by keeping data local. Like most FedCF methods, the approach transmits only the gradients of the global model parameters and shares no raw data with third parties. The method can also be combined with other privacy-preserving techniques, such as differential privacy, to further strengthen user privacy guarantees.
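As an illustration of such a combination, a common differential-privacy recipe for federated updates clips each client's update to a maximum L2 norm and adds Gaussian noise before upload. The sketch below is a generic version of that recipe, not part of FedDAE; the function name and parameters are hypothetical, and calibrating `noise_std` to a formal \( (\varepsilon, \delta) \) guarantee requires a privacy accountant that is omitted here.

```python
import math
import random
from typing import List

Vector = List[float]


def clip_and_noise(grad: Vector, clip_norm: float, noise_std: float,
                   rng: random.Random) -> Vector:
    """Privatize one client's update before upload (generic Gaussian-mechanism sketch).

    1. Rescale the update so its L2 norm is at most clip_norm (bounds each client's influence).
    2. Add i.i.d. Gaussian noise with standard deviation noise_std * clip_norm.
    """
    norm = math.sqrt(sum(g * g for g in grad))
    scale = min(1.0, clip_norm / norm) if norm > 0 else 1.0
    return [g * scale + rng.gauss(0.0, noise_std * clip_norm) for g in grad]


if __name__ == "__main__":
    rng = random.Random(0)
    update = [3.0, 4.0]  # L2 norm 5, will be clipped to norm 1 before noise
    print(clip_and_noise(update, clip_norm=1.0, noise_std=0.5, rng=rng))
```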

Overall Conclusion

This paper revisits FedCF from the perspective of VAEs and proposes a novel personalized FedCF method called FedDAE. FedDAE constructs a VAE model with dual encoders and a global decoder for each client, capturing user-specific representations of item features while preserving globally shared information. A gating network dynamically weights the outputs of the two encoders based on user interaction data, achieving adaptive additive personalization. Experimental results on four widely used recommendation datasets show that FedDAE outperforms existing FedCF methods and various ablation baselines, demonstrating that it provides effective personalized recommendations while protecting user privacy. The results also show that dataset sparsity and scale significantly affect FedDAE's performance, suggesting that highly sparse datasets require additional strategies to strengthen the model's learning capability.