Authors:

Andrew Kiruluta, Eric Lundy, Andreas Lemos

Paper:

https://arxiv.org/abs/2408.10619

Introduction

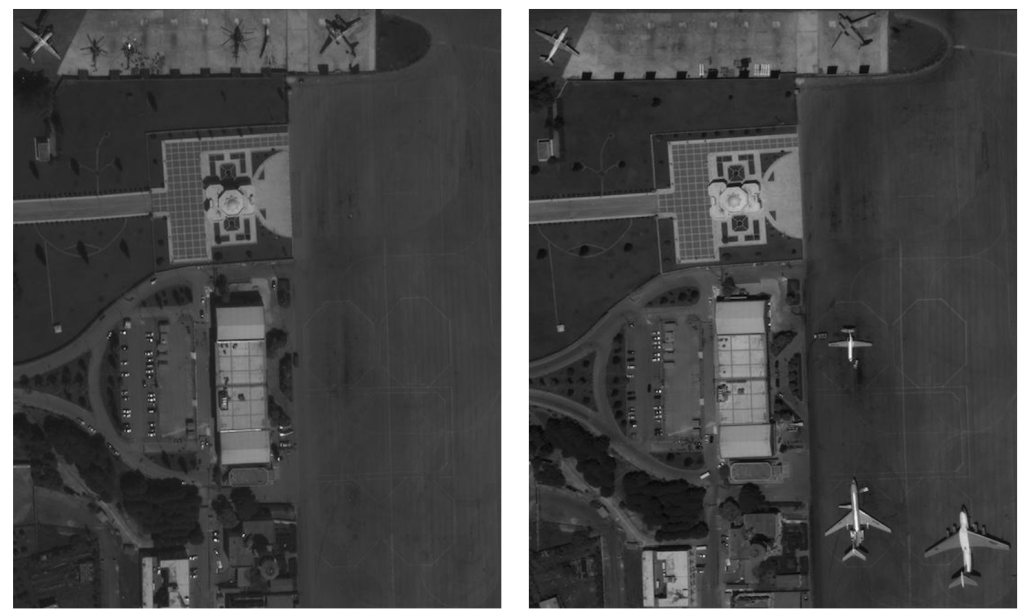

Change detection in remote sensing is a critical task that involves comparing satellite images taken at different times to identify changes in the observed scene. This capability is essential for various applications, including environmental monitoring, urban expansion analysis, disaster management, and land use classification. Traditional change detection methods, such as image differencing and ratioing, often struggle with noise and fail to capture complex variations in imagery. Recent advancements in machine learning, particularly generative models like diffusion models, offer new opportunities for enhancing change detection accuracy.

In this paper, we introduce a novel change detection framework that combines the strengths of Stable Diffusion models with the Structural Similarity Index (SSIM) to create robust and interpretable change maps. Our approach, named Diffusion Based Change Detector, is evaluated on both synthetic and real-world remote sensing datasets and compared with state-of-the-art methods. The results demonstrate that our method significantly outperforms traditional differencing techniques and recent deep learning-based methods, particularly in scenarios with complex changes and noise.

Related Work

Traditional Change Detection Techniques

Traditional change detection methods in remote sensing include image differencing, image ratioing, and change vector analysis. These techniques are straightforward and easy to implement but are highly sensitive to noise and require careful calibration to achieve acceptable results. For example, image differencing simply subtracts the pixel values of two co-registered images, resulting in a difference map that highlights changes. However, this approach is prone to false positives due to sensor noise, lighting changes, and seasonal variations.
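As a minimal sketch of the differencing baseline described above, the following NumPy snippet subtracts two co-registered images and thresholds the absolute difference. The function name `difference_change_map`, the threshold of 0.2, and the synthetic noise level are illustrative assumptions, not values taken from the paper.

```python
import numpy as np

def difference_change_map(before, after, threshold=0.2):
    """Absolute-difference change map for two co-registered images.

    Both inputs are expected to be float arrays scaled to [0, 1] with the
    same shape. The default threshold is an illustrative assumption.
    """
    before = np.asarray(before, dtype=np.float64)
    after = np.asarray(after, dtype=np.float64)
    if before.shape != after.shape:
        raise ValueError("images must be co-registered and share a shape")
    diff = np.abs(after - before)
    return diff, diff > threshold

# Synthetic example: a flat scene with one real 10x10 change.
rng = np.random.default_rng(0)
before = np.full((64, 64), 0.5)
after = before.copy()
after[20:30, 20:30] = 0.9

# The same "after" image with simulated sensor noise added.
noisy_after = np.clip(after + rng.normal(0.0, 0.1, after.shape), 0.0, 1.0)

_, clean_mask = difference_change_map(before, after)
_, noisy_mask = difference_change_map(before, noisy_after)
false_positives = int(np.sum(noisy_mask & ~clean_mask))
```

On the noise-free pair the mask contains only the 100 changed pixels. Once noise is added, unchanged pixels also cross the threshold, which is the false-positive behavior noted above.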

Machine Learning-Based Approaches

The application of machine learning to change detection has gained traction in recent years. Convolutional Neural Networks (CNNs) have been employed to learn feature representations from image pairs, enabling more accurate change detection. Siamese networks, which consist of two identical networks with shared weights, have been particularly successful in learning to identify changes between image pairs. However, these models often require large labeled datasets for training and may struggle to generalize to new environments.

Generative models such as Generative Adversarial Networks (GANs) have also been explored for change detection. These models can synthesize potential changes and train a discriminator to identify real changes. While effective, GANs are computationally intensive and can be difficult to train, often requiring careful tuning and extensive computational resources.

Diffusion Models

Diffusion models are a class of generative models that learn to reverse a forward diffusion process, which gradually adds noise to the data; new samples are generated by starting from noise and denoising step by step. Stable Diffusion, a latent diffusion model that performs this denoising in a compressed latent space, has demonstrated impressive performance in generating high-quality images and is gaining popularity in the machine learning community. These models have the potential to enhance change detection by capturing complex changes that traditional methods might miss.
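For reference, the noising process is commonly written in the DDPM formulation below. This is the standard textbook form, not notation taken from the paper; $\beta_t$ denotes an assumed noise schedule and $\bar{\alpha}_t$ its cumulative product.

$$
q(x_t \mid x_{t-1}) = \mathcal{N}\!\left(x_t;\ \sqrt{1-\beta_t}\,x_{t-1},\ \beta_t I\right),
\qquad
x_t = \sqrt{\bar{\alpha}_t}\,x_0 + \sqrt{1-\bar{\alpha}_t}\,\epsilon,
\quad \epsilon \sim \mathcal{N}(0, I),
\quad \bar{\alpha}_t = \prod_{s=1}^{t} (1-\beta_s)
$$

A denoising network is trained to estimate $\epsilon$ from $x_t$, and generation applies that denoising step repeatedly, starting from pure noise.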

Structural Similarity Index (SSIM)

The Structural Similarity Index (SSIM) is a perceptual metric for assessing image quality by comparing the structural information between two images. Unlike pixel-wise difference metrics, SSIM considers luminance, contrast, and structural similarity, making it more robust to changes that do not alter the overall structure of the scene. SSIM has been widely used in image quality assessment but has only recently been explored in the context of change detection.
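For two image patches $x$ and $y$, the standard SSIM definition combines the three terms into a single expression. The symbols below follow the common convention and are not taken from the paper: $\mu$ is the local mean, $\sigma^2$ the local variance, $\sigma_{xy}$ the local covariance, and $L$ the dynamic range of the pixel values.

$$
\mathrm{SSIM}(x, y) = \frac{(2\mu_x\mu_y + C_1)(2\sigma_{xy} + C_2)}{(\mu_x^2 + \mu_y^2 + C_1)(\sigma_x^2 + \sigma_y^2 + C_2)},
\qquad C_1 = (K_1 L)^2,\quad C_2 = (K_2 L)^2
$$

The constants $K_1 = 0.01$ and $K_2 = 0.03$ are the usual defaults; they stabilize the ratio when the means or variances are close to zero. The value is 1 for identical patches and decreases as structure diverges.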

Research Methodology

Diffusion-Based Change Detection

Our proposed framework, Diffusion Based Change Detector, leverages the Stable Diffusion model to enhance the accuracy of change detection. Diffusion models work by progressively refining noise to generate realistic images, and in our framework, we use this capability to model changes between two images.

The process begins with two co-registered images taken at different times. These images are passed through the Stable Diffusion model, which is trained to generate the second image from the first image and a noise vector. The generated image is then compared to the actual second image to identify areas of significant change.
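The flow described above can be sketched as a small function that predicts the second image from the first and then flags where the prediction disagrees with the observation. Everything named here is hypothetical: `detect_changes`, the `generate` and `dissimilarity` callables, and the stand-in implementations are placeholders for illustration, not the paper's model or any library's API.

```python
import numpy as np

def detect_changes(before, after, generate, dissimilarity, threshold, seed=0):
    """Predict the second image from the first, then compare to the real one.

    `generate(before, noise)` stands in for the trained diffusion model and
    `dissimilarity(a, b)` for the comparison step; both are assumptions.
    """
    noise = np.random.default_rng(seed).standard_normal(before.shape)
    predicted_after = generate(before, noise)
    score = dissimilarity(predicted_after, after)
    return score > threshold

# Stand-in generator: assumes "nothing changed" and ignores the noise.
# A real model would condition on both inputs.
def identity_generator(before, noise):
    return before

# Stand-in comparison: plain absolute difference.
def abs_difference(a, b):
    return np.abs(a - b)

before = np.zeros((8, 8))
after = before.copy()
after[2:4, 2:4] = 1.0  # a 2x2 change between the two acquisition times

mask = detect_changes(before, after, identity_generator, abs_difference, 0.5)
```

Keeping the generator and the comparison as separate arguments makes it easy to swap the comparison for an SSIM-based one without touching the rest of the pipeline.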

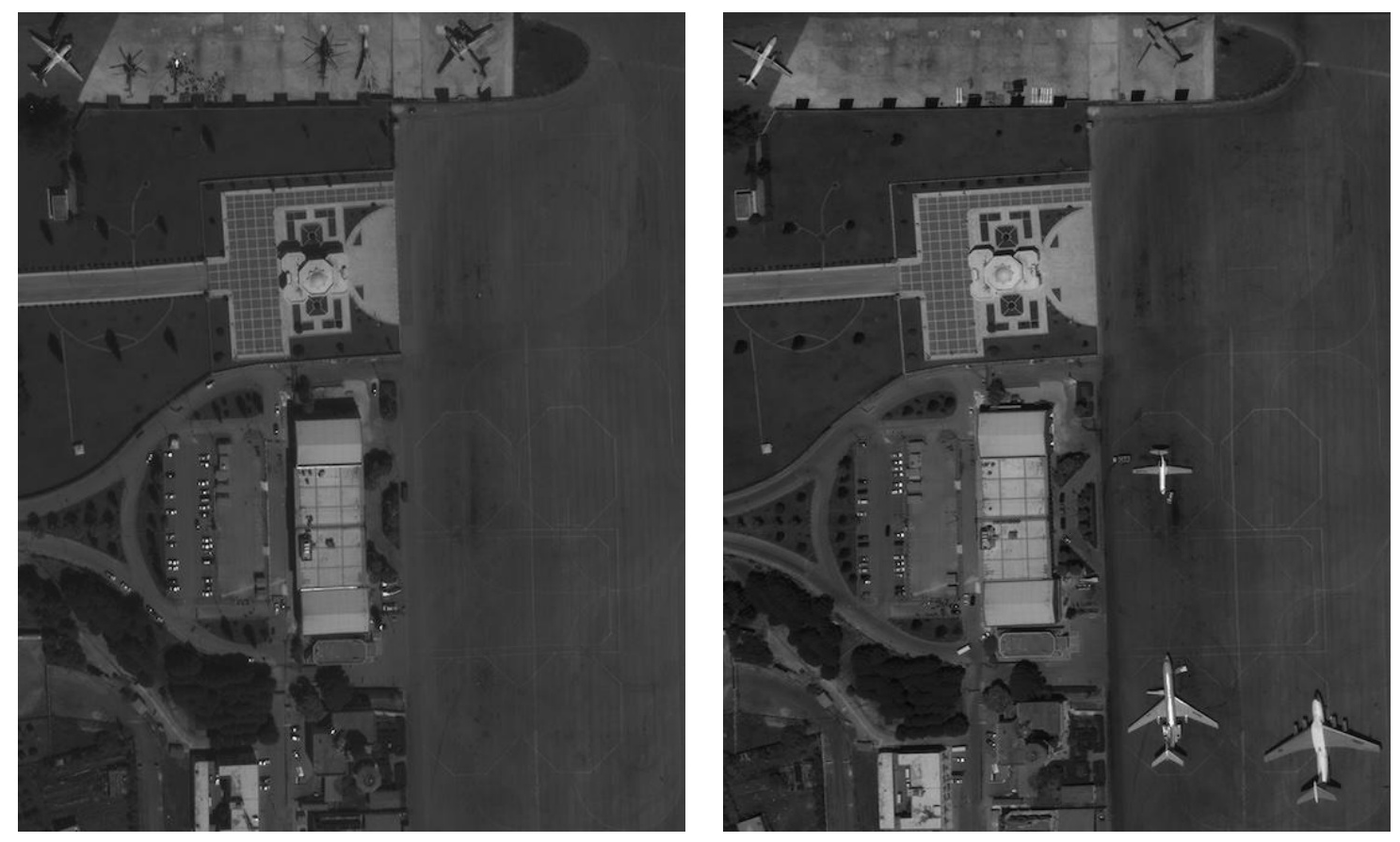

SSIM-Based Change Map

The novelty of our approach lies in using the Structural Similarity Index (SSIM) map as the change detection map. After generating the refined image using the diffusion model, we compute the SSIM between the generated image and the actual second image. Regions of low similarity in the SSIM map indicate perceptual differences, which correspond to actual changes in the scene.

Unlike traditional difference maps that rely on pixel-wise differences, SSIM considers perceptual changes that affect the structure of the image. This makes SSIM more robust to noise and irrelevant changes, leading to more accurate and interpretable change maps. The combination of SSIM with the generative power of diffusion models represents a significant advancement over existing change detection techniques.
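A minimal sketch of an SSIM-based change map, assuming grayscale images in [0, 1], a uniform 7x7 window, and the usual SSIM constants. The function names, the window size, and the 0.5 threshold on `1 - SSIM` are illustrative assumptions; the paper does not specify them here. The `generated` array is a random stand-in for the diffusion model's output.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view

def ssim_map(x, y, window=7, data_range=1.0, k1=0.01, k2=0.03):
    """Per-pixel SSIM between two grayscale images (uniform local window)."""
    x = np.asarray(x, dtype=np.float64)
    y = np.asarray(y, dtype=np.float64)
    if x.shape != y.shape:
        raise ValueError("images must share a shape")
    if window % 2 == 0:
        raise ValueError("window must be odd")
    pad = window // 2
    # One (window x window) neighborhood per pixel, with reflected borders.
    xw = sliding_window_view(np.pad(x, pad, mode="reflect"), (window, window))
    yw = sliding_window_view(np.pad(y, pad, mode="reflect"), (window, window))
    mu_x = xw.mean(axis=(-2, -1))
    mu_y = yw.mean(axis=(-2, -1))
    var_x = xw.var(axis=(-2, -1))
    var_y = yw.var(axis=(-2, -1))
    cov_xy = (xw * yw).mean(axis=(-2, -1)) - mu_x * mu_y
    c1 = (k1 * data_range) ** 2
    c2 = (k2 * data_range) ** 2
    numerator = (2 * mu_x * mu_y + c1) * (2 * cov_xy + c2)
    denominator = (mu_x ** 2 + mu_y ** 2 + c1) * (var_x + var_y + c2)
    return numerator / denominator

def ssim_change_mask(generated, actual, threshold=0.5):
    """Flag pixels whose local dissimilarity (1 - SSIM) exceeds the threshold."""
    return (1.0 - ssim_map(generated, actual)) > threshold

rng = np.random.default_rng(0)
generated = rng.random((64, 64))      # stand-in for the generated image
actual = generated.copy()
actual[20:30, 20:30] = 1.0 - actual[20:30, 20:30]  # local structural change
relit = 0.9 * generated + 0.05        # mild global lighting change only

change_mask = ssim_change_mask(generated, actual)
relit_mask = ssim_change_mask(generated, relit)
```

In this toy setup the structurally altered block is flagged, while the globally relit image produces an empty mask because its local structure is unchanged. Library implementations of SSIM typically offer Gaussian weighting and other options that this simplified version omits.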

Experimental Design

Datasets

To evaluate the effectiveness of our approach, we conducted experiments on both synthetic and real-world remote sensing datasets. The synthetic dataset was created by introducing controlled changes to high-resolution satellite images, allowing us to benchmark the accuracy of our method in detecting known changes. The real-world datasets included the LEVIR-CD dataset and the WHU Building Change Detection dataset, which provide annotated satellite images of urban and rural areas with varying levels of change.

Baseline Methods

We compared our method against several baseline change detection methods, spanning traditional and learning-based approaches:

- Image Differencing: The traditional approach that subtracts pixel values of the two images to produce a difference map.

- Siamese CNN: A deep learning approach that uses twin networks to learn change representations.

- GAN-Based Change Detection: A generative approach that uses GANs to model changes between images.

- SSIM-Based Differencing: A method that computes the SSIM difference directly between the two images without generative modeling.

Evaluation Metrics

We evaluated the performance of the different methods using precision, recall, F1-score, and overall accuracy. These metrics were computed by comparing the detected change maps against the ground truth annotations in the datasets.
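These metrics can be computed from a predicted binary change mask and a ground-truth mask as sketched below. The function name and the convention that "changed" is the positive class are assumptions made for illustration.

```python
import numpy as np

def change_detection_metrics(predicted, truth):
    """Precision, recall, F1 and overall accuracy for binary change masks.

    "Changed" pixels are treated as the positive class (an assumption).
    """
    predicted = np.asarray(predicted, dtype=bool)
    truth = np.asarray(truth, dtype=bool)
    if predicted.shape != truth.shape:
        raise ValueError("masks must share a shape")
    tp = int(np.sum(predicted & truth))    # changed, detected
    fp = int(np.sum(predicted & ~truth))   # unchanged, flagged anyway
    fn = int(np.sum(~predicted & truth))   # changed, missed
    tn = int(np.sum(~predicted & ~truth))  # unchanged, correctly ignored
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    denom = precision + recall
    f1 = 2 * precision * recall / denom if denom else 0.0
    accuracy = (tp + tn) / predicted.size
    return {"precision": precision, "recall": recall,
            "f1": f1, "accuracy": accuracy}

truth = np.array([[1, 1, 0, 0],
                  [0, 0, 0, 0]])
predicted = np.array([[1, 0, 1, 0],
                      [0, 0, 0, 0]])
metrics = change_detection_metrics(predicted, truth)
```

Because changed pixels are usually a small fraction of a scene, overall accuracy can stay high even when many changes are missed, which is why precision, recall, and F1 are reported alongside it.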

Results and Analysis

Synthetic Data Results

In the synthetic dataset experiments, our Diffusion Based Change Detector consistently outperformed the baseline methods. The SSIM-based change map effectively suppressed noise and irrelevant changes, leading to a precision of 92.5%, recall of 88.3%, and an F1-score of 90.3%. In contrast, traditional image differencing achieved a precision of 75.4%, recall of 70.2%, and an F1-score of 72.7%. The Siamese CNN achieved better results than traditional methods but still lagged behind our proposed method, with an F1-score of 84.5%.

Real-World Data Results

On the LEVIR-CD dataset, which contains challenging urban scenes with complex changes, our method achieved a precision of 89.8%, recall of 86.7%, and an F1-score of 88.2%. The GAN-based change detection method achieved an F1-score of 82.3%, while the SSIM-based differencing method scored 80.6%. The results indicate that our approach is better suited to handling real-world complexities, such as varying lighting conditions and seasonal changes.

The WHU Building Change Detection dataset presented additional challenges with rural scenes and smaller-scale changes. Our method still outperformed the baselines, achieving an F1-score of 87.4%, compared to 81.2% for the Siamese CNN and 78.9% for GAN-based change detection.

Comparative Analysis

The key advantage of our Diffusion Based Change Detector is its ability to focus on perceptual changes that are meaningful in the context of change detection. While traditional methods are sensitive to noise and irrelevant changes, our approach combines the generative capabilities of diffusion models with SSIM to produce more accurate and interpretable change maps. The use of SSIM is particularly novel in this context, as it allows the model to focus on structural changes that are often missed by pixel-wise differencing methods.

Overall Conclusion

In this paper, we presented a novel change detection framework that leverages Stable Diffusion models combined with the Structural Similarity Index (SSIM) to enhance the accuracy and interpretability of change maps in remote sensing imagery. Our method outperforms traditional and state-of-the-art change detection techniques on both synthetic and real-world datasets, demonstrating its robustness and effectiveness in complex environments. The combination of generative modeling and perceptual similarity metrics represents a significant advancement in the field of change detection.

Future Work

Future research will explore the application of our method to other domains, such as medical imaging and video surveillance, where change detection is critical. Additionally, we plan to investigate the potential of unsupervised and semi-supervised learning approaches to further improve the generalization of our method in scenarios with limited labeled data.