Authors:

Vlad Hondru、Florinel Alin Croitoru、Shervin Minaee、Radu Tudor Ionescu、Nicu Sebe

Paper:

https://arxiv.org/abs/2408.06687

Introduction

In recent years, the field of computer vision has seen significant advancements due to the development of self-supervised learning techniques. One such technique is Masked Image Modeling (MIM), which has emerged as a powerful approach for pre-training models without the need for labeled data. This survey paper, authored by Vlad Hondru, Florinel Alin Croitoru, Shervin Minaee, Radu Tudor Ionescu, and Nicu Sebe, provides a comprehensive overview of MIM, categorizing the various approaches and highlighting key contributions in the field.

Abstract

The abstract introduces the concept of MIM, where parts of an image are masked, and a model is trained to predict the missing information. The paper identifies two main categories of MIM approaches: reconstruction-based and contrastive learning-based. It also presents a taxonomy of prominent papers, a dendrogram for hierarchical clustering, and a review of commonly used datasets. The survey concludes by identifying research gaps and proposing future directions.

Generic Framework

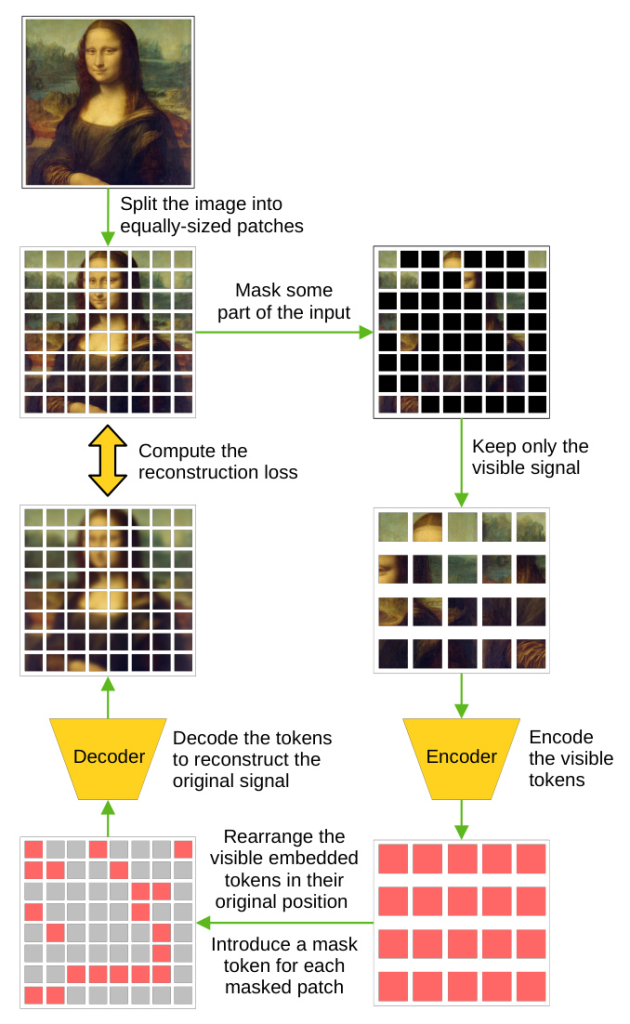

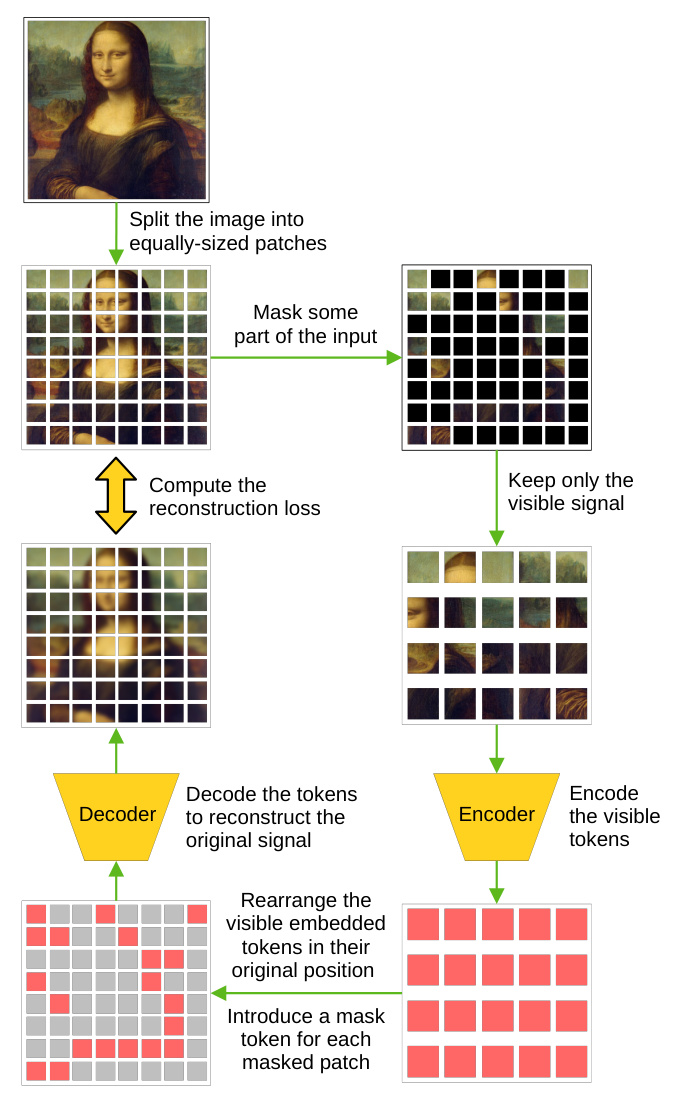

Reconstruction-Based MIM

Reconstruction-based MIM involves masking parts of an image and training a model to reconstruct the missing information. This approach typically uses an encoder-decoder architecture, where the encoder processes the visible parts of the image, and the decoder reconstructs the masked parts. The objective is to minimize the reconstruction loss between the predicted and original image patches.

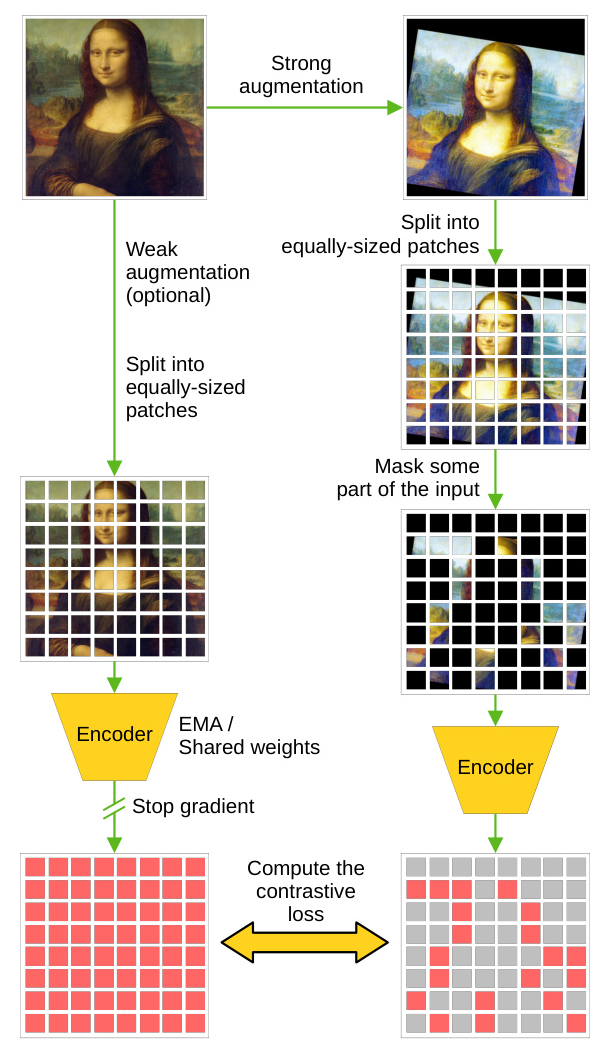

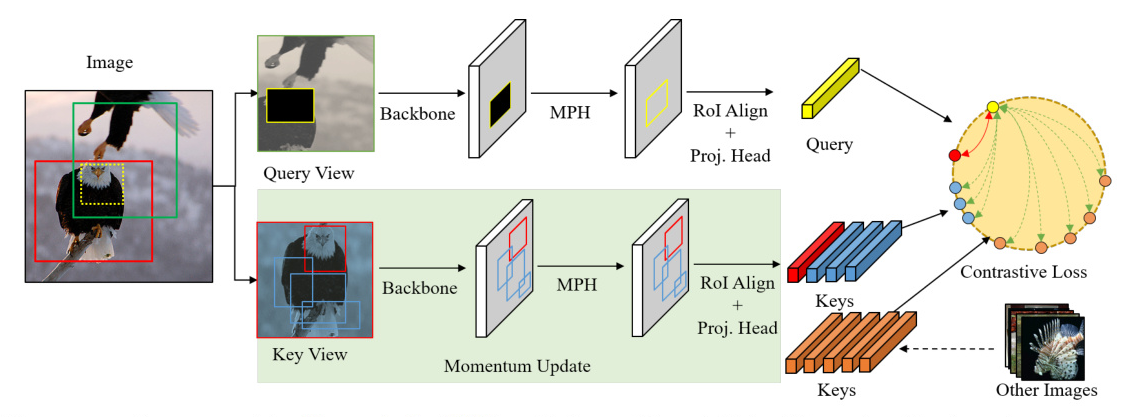

Contrastive-Based MIM

Contrastive-based MIM compares two different representations of the same input: one from an unaltered or weakly augmented image and the other from a masked and strongly augmented image. The goal is to obtain similar embeddings for both representations, using a contrastive loss to align them.

Taxonomy and Overview

The paper presents a manually-generated taxonomy of MIM research, categorizing papers based on their contributions to masking strategy, target features, neural architecture, objective function, downstream tasks, and theoretical analysis.

Masking Strategy

Early masking strategies involved random masking of image patches. Recent approaches have proposed guided masking strategies that leverage image statistics, additional networks, or self-attention modules to improve performance.

Target Features

Different target features have been explored in MIM, including raw pixels, high-level latent features, and geometric features. Some studies have demonstrated that using more suitable target features can lead to better performance on downstream tasks.

Objective Function

The primary objective function in MIM is to reconstruct the masked parts of the image. However, some studies have combined reconstruction with contrastive objectives to improve performance.

Downstream Task

MIM has been applied to various downstream tasks, including image classification, object detection, segmentation, and video recognition. The survey highlights the effectiveness of MIM in improving performance on these tasks.

Theoretical Analysis

Several papers have provided theoretical insights into MIM, exploring its underlying mechanisms and benefits. These studies have helped to better understand the strengths and limitations of MIM.

Model Architecture

While most MIM approaches use transformer-based architectures, some studies have explored the use of convolutional neural networks (CNNs) and hybrid architectures to improve performance.

Automatic Clustering

To complement the manual taxonomy, the authors applied a hierarchical clustering algorithm to the titles and abstracts of the surveyed papers. The resulting dendrogram provides an alternative categorization of the papers, highlighting clusters related to input data type, domain, downstream tasks, and more.

Datasets

The survey reviews various datasets commonly used in MIM research, including CIFAR-100, ImageNet-1K, MS-COCO, UCF101, ShapeNet, CC3M, FFHQ, LAION-400M, and Visual Genome. These datasets are used for both pre-training and evaluating the performance of MIM methods.

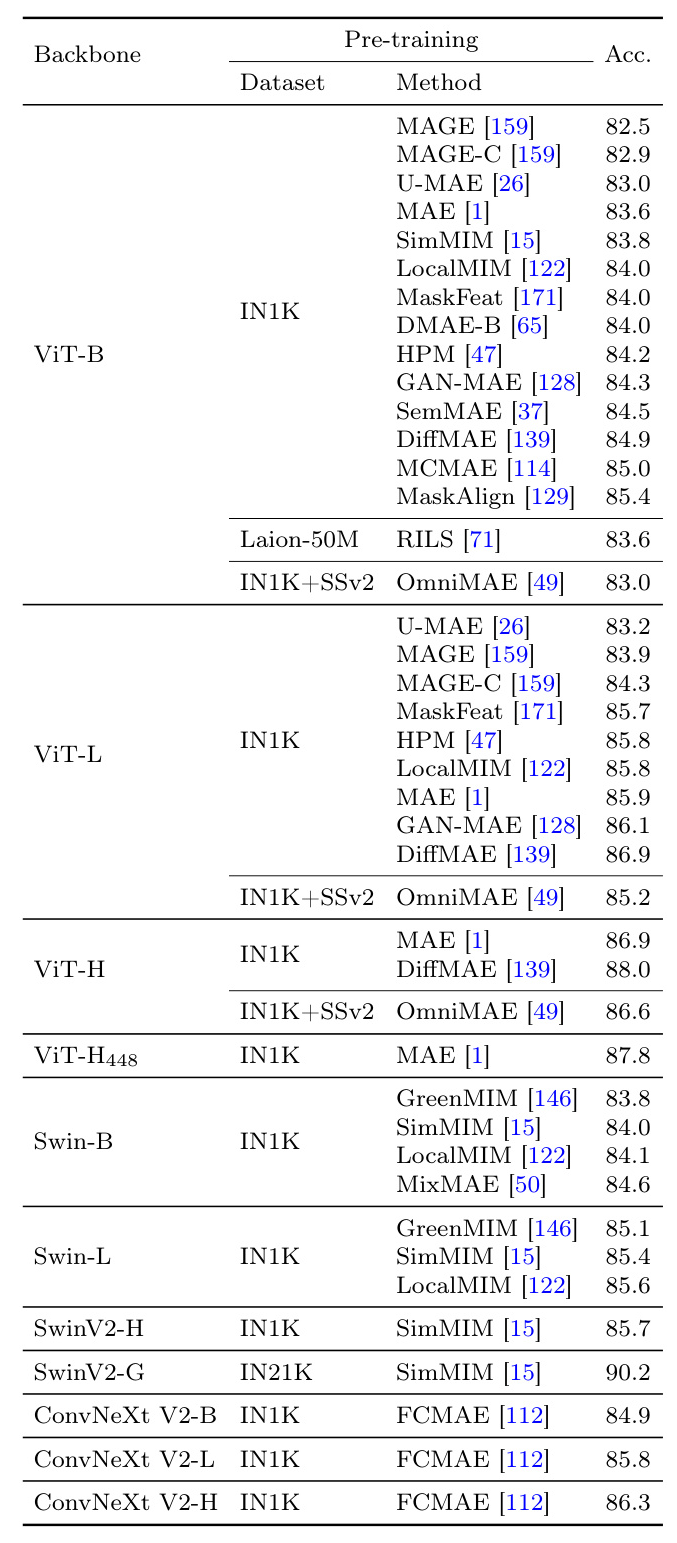

Performance Overview

The paper provides an in-depth analysis of the performance of different MIM methods on popular benchmarks such as ImageNet-1K, MS-COCO, and Kinetics-400. The results show that novel masking strategies, target features, and the integration of generative models can lead to significant improvements in performance.

Closing Remarks and Future Directions

The survey concludes by summarizing the key contributions of MIM research and proposing several future directions. These include exploring new masking strategies, combining MIM with other pre-training methods, and leveraging multimodal data to learn richer feature representations.

Illustrations

- Timeline of prominent works in Masked Image Modeling.

- Reconstruction-based MIM pipeline.

- Contrastive-based MIM pipeline.

- Multi-level classification of Masked Image Modeling papers.

- SimMIM pipeline.

- MAE pipeline.

- SdAE pipeline.

- CMAE pipeline.

- First step of AMAE pipeline.

- Second step of AMAE pipeline.

- The architecture of SSMCTB.

- The architecture of MCMAE.

- The approach proposed by Zhao et al. (2021).

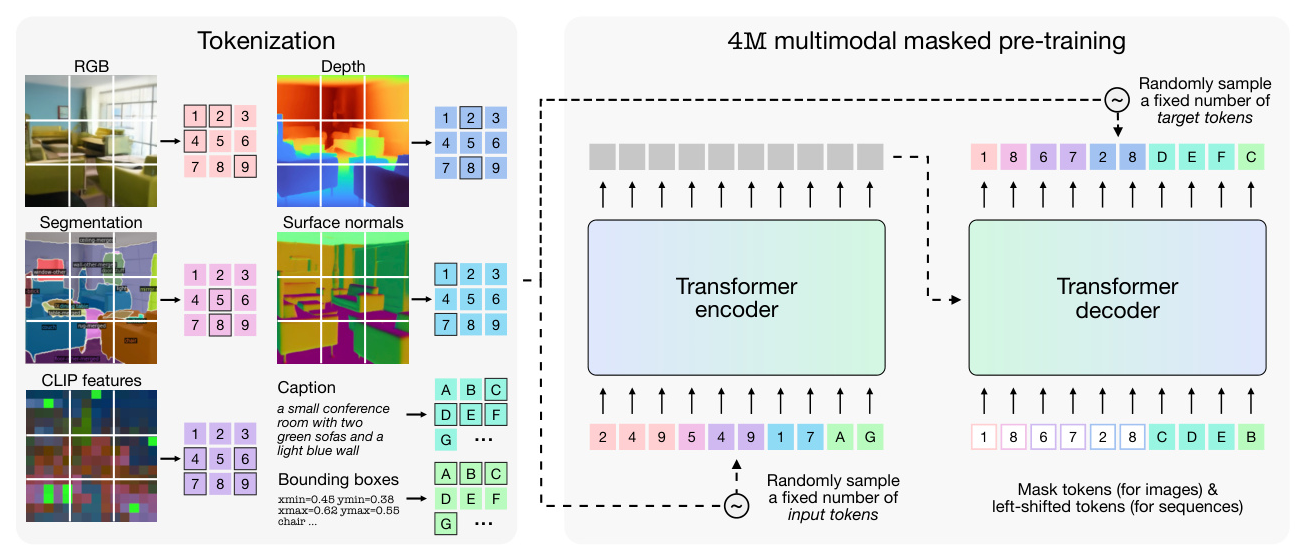

- The MAE framework for multiple modalities proposed by Mizrahi et al. (2023).

- The pipeline of MST as proposed by Li et al. (2021).

- The knowledge distillation pipeline proposed by Wang et al. (2023).

- Hierarchical clustering dendrogram.

- Sample images from CIFAR-100 dataset.

- Sample images from ImageNet-1K dataset.

- Sample images from MS-COCO dataset.

- Sample images from FFHQ dataset.

- Sample images from LAION-400M dataset.

- Statistics of datasets used in MIM literature.

- Performance on ImageNet-1K of different MIM pre-training schemes.

- Performance on MS-COCO of different MIM pre-training schemes.

- Performance on Kinetics-400 of different MIM pre-training schemes.

This survey provides a comprehensive overview of Masked Image Modeling, highlighting its potential as a powerful self-supervised learning technique in computer vision. By categorizing and analyzing recent research, the authors offer valuable insights and directions for future work in this rapidly evolving field.

Datasets:

ImageNet、MS COCO、CIFAR-100、UCF101、FFHQ、Kinetics、Visual Genome、Kinetics 400、LAION-400M