Authors:

Syed Rifat Raiyan, Zibran Zarif Amio, and Sabbir Ahmed

Paper:

https://arxiv.org/abs/2408.10360

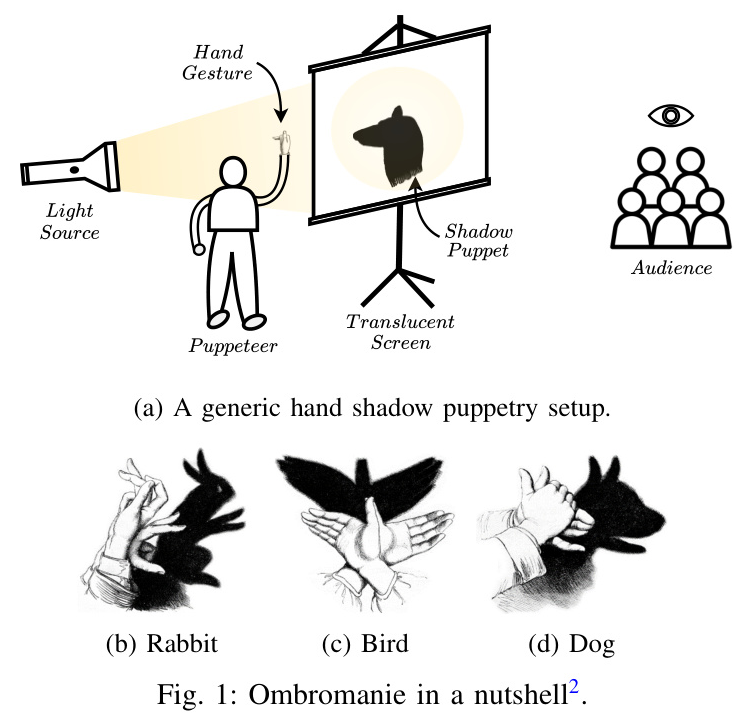

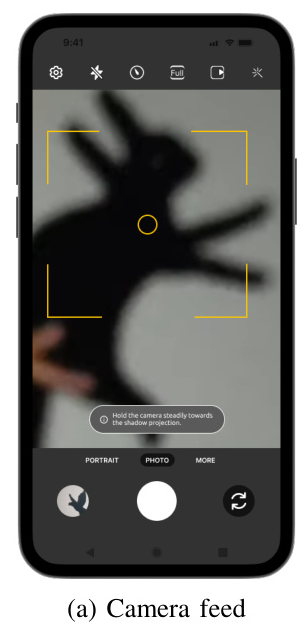

Hand shadow puppetry, also known as shadowgraphy or ombromanie, is a captivating form of theatrical art and storytelling. However, this ancient art form is on the brink of extinction due to the dwindling number of practitioners and changing entertainment standards. To address this issue, researchers Syed Rifat Raiyan, Zibran Zarif Amio, and Sabbir Ahmed have introduced HaSPeR, a novel dataset aimed at preserving and proliferating hand shadow puppetry. This blog post examines their study, covering the dataset, methodology, experimental design, and findings.

1. Introduction

Background

Hand shadow puppetry involves creating silhouettes of animals and objects with hand gestures and finger movements. Despite its rich history and cultural significance, this art form is facing extinction, and the scarcity of resources and practitioners has made it difficult to study and preserve.

Problem Statement

The primary objective of this study is to create a comprehensive dataset, HaSPeR, consisting of 8,340 images of hand shadow puppets across 11 classes. This dataset aims to facilitate the development of AI tools for recognizing, classifying, and potentially generating hand shadow puppet performances.

2. Related Work

Image Classification and Recognition

Previous work on hand shadow image classification includes Huang et al.'s SHADOW VISION, which used a 3-layer neural network to recognize infrared shadow puppet images. However, this approach is now outdated given the advances in deep learning.

Recent studies have explored convolutional models for Indonesian shadow puppet recognition, using datasets with fewer classes and samples than HaSPeR. These studies laid the groundwork for benchmarking feature extractor models on hand shadow puppet images.

3D Modeling and Human Motion Capture

Early works in silhouette-based 3D modeling, such as Brand’s study on mapping 2D shadow images to 3D body poses, have influenced subsequent research in human motion capture and analysis. These studies have leveraged human silhouette templates for various applications.

Robotics and Human-Computer Interaction

Huang et al. introduced computer vision-aided shadow puppetry with robotics, enabling the creation of complex shadow forms using robotic arms. Other studies have explored gesture recognition for controlling shadow puppets, using sensors like Microsoft Kinect and Leap Motion.

3. Research Methodology

Dataset Construction

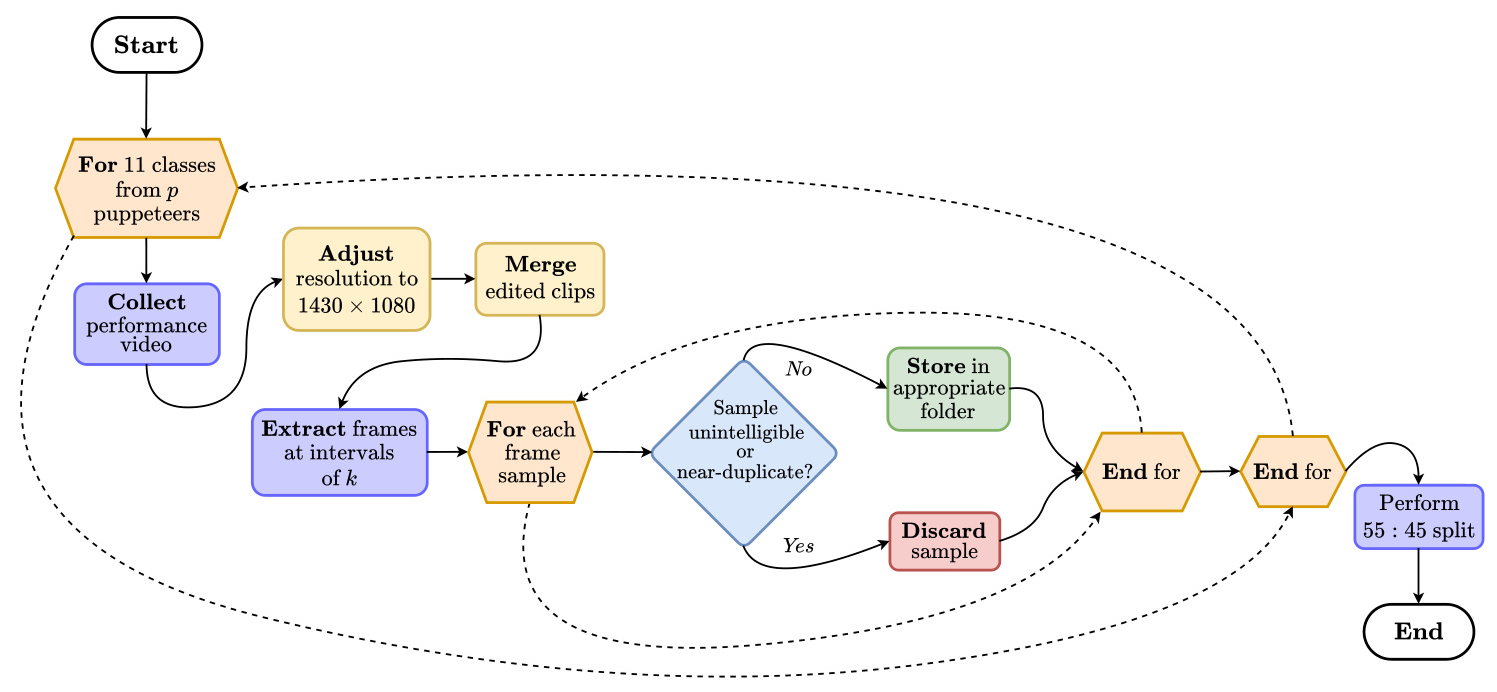

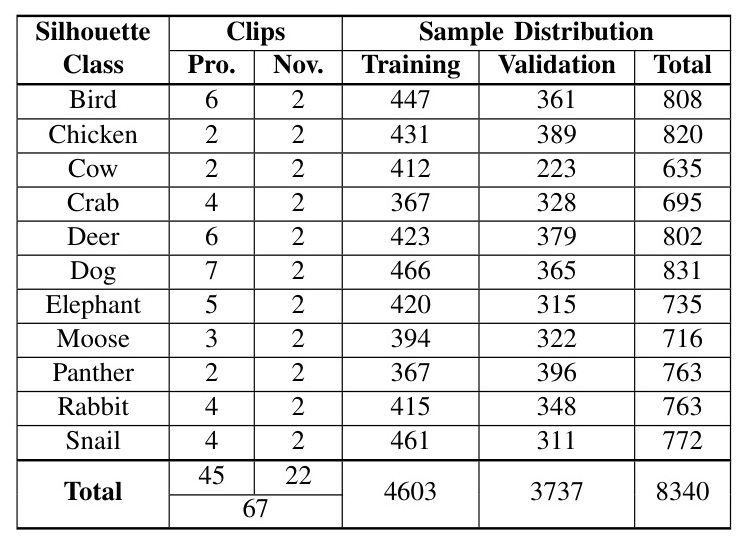

The dataset construction process involves three main tasks: procuring performance clips, extracting frames, and categorizing each sample frame with proper labels.

Collating Shadowgraphy Clips

The researchers sourced 45 professional and 22 amateur shadowgraphy clips from YouTube and volunteer shadowgraphists, respectively. These clips were recorded using OBS Studio and downsampled to a resolution of 1430 × 1080.

Extracting Samples

Frames were extracted at reasonable intervals to avoid redundancy. The extracted frames were manually scrutinized by annotators to ensure quality and diversity.
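The post does not give the sampling interval, so the sketch below uses a hypothetical `step` (keep every n-th frame) plus an assumed near-duplicate filter based on mean absolute pixel difference. It illustrates one way to thin out redundant frames and is not the authors' pipeline. Frames could be decoded with OpenCV's `cv2.VideoCapture(path).read()`, which yields BGR `uint8` arrays.

```python
import numpy as np

def sample_frames(frames, step, min_diff=8.0):
    """Keep every `step`-th frame, then drop near-duplicates.

    frames   : sequence of H x W x 3 uint8 arrays (decoded video frames)
    step     : sampling interval in frames (hypothetical; not stated in the post)
    min_diff : minimum mean absolute pixel difference (0-255 scale) from the
               last kept frame; frames closer than this are treated as redundant
    Returns the indices of the kept frames.
    """
    kept, last = [], None
    for idx in range(0, len(frames), step):
        frame = frames[idx].astype(np.float32)
        # Skip frames that barely differ from the previously kept one.
        if last is not None and np.abs(frame - last).mean() < min_diff:
            continue
        kept.append(idx)
        last = frame
    return kept
```

With `step` set to roughly one second of footage, the difference filter mainly removes static stretches where the puppet barely moves.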

Labeling

The samples were labeled and categorized into 11 classes, with a 55:45 split between training and validation sets. The dataset includes samples with varying backgrounds, silhouette properties, and hand anatomies.
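The post gives only the 55:45 ratio; whether the split was stratified by class is not stated. A per-class split, sketched below under that assumption with a hypothetical `stratified_split` helper, keeps class proportions similar in both sets, which matters when some classes have far fewer samples than others.

```python
import random
from collections import defaultdict

def stratified_split(labels, train_frac=0.55, seed=0):
    """Split sample indices class by class so each class keeps roughly the
    same train:validation ratio (55:45 by default)."""
    by_class = defaultdict(list)
    for idx, label in enumerate(labels):
        by_class[label].append(idx)
    rng = random.Random(seed)  # fixed seed for a reproducible split
    train, val = [], []
    for label in sorted(by_class):
        idxs = by_class[label][:]
        rng.shuffle(idxs)
        cut = round(len(idxs) * train_frac)
        train.extend(idxs[:cut])
        val.extend(idxs[cut:])
    return sorted(train), sorted(val)
```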

4. Experimental Design

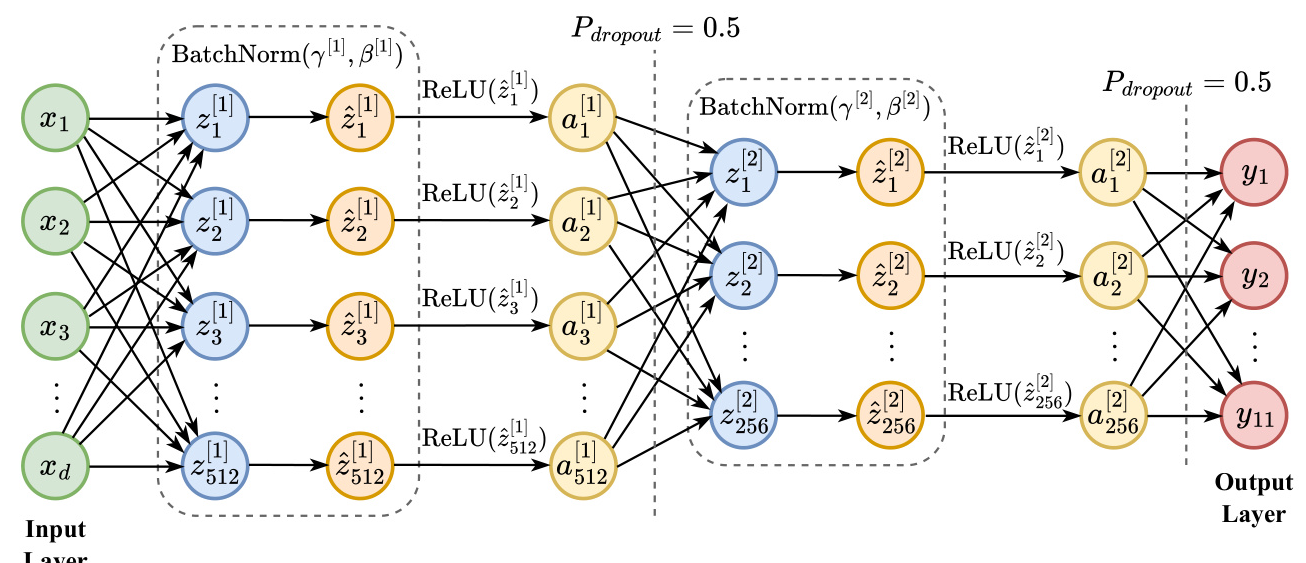

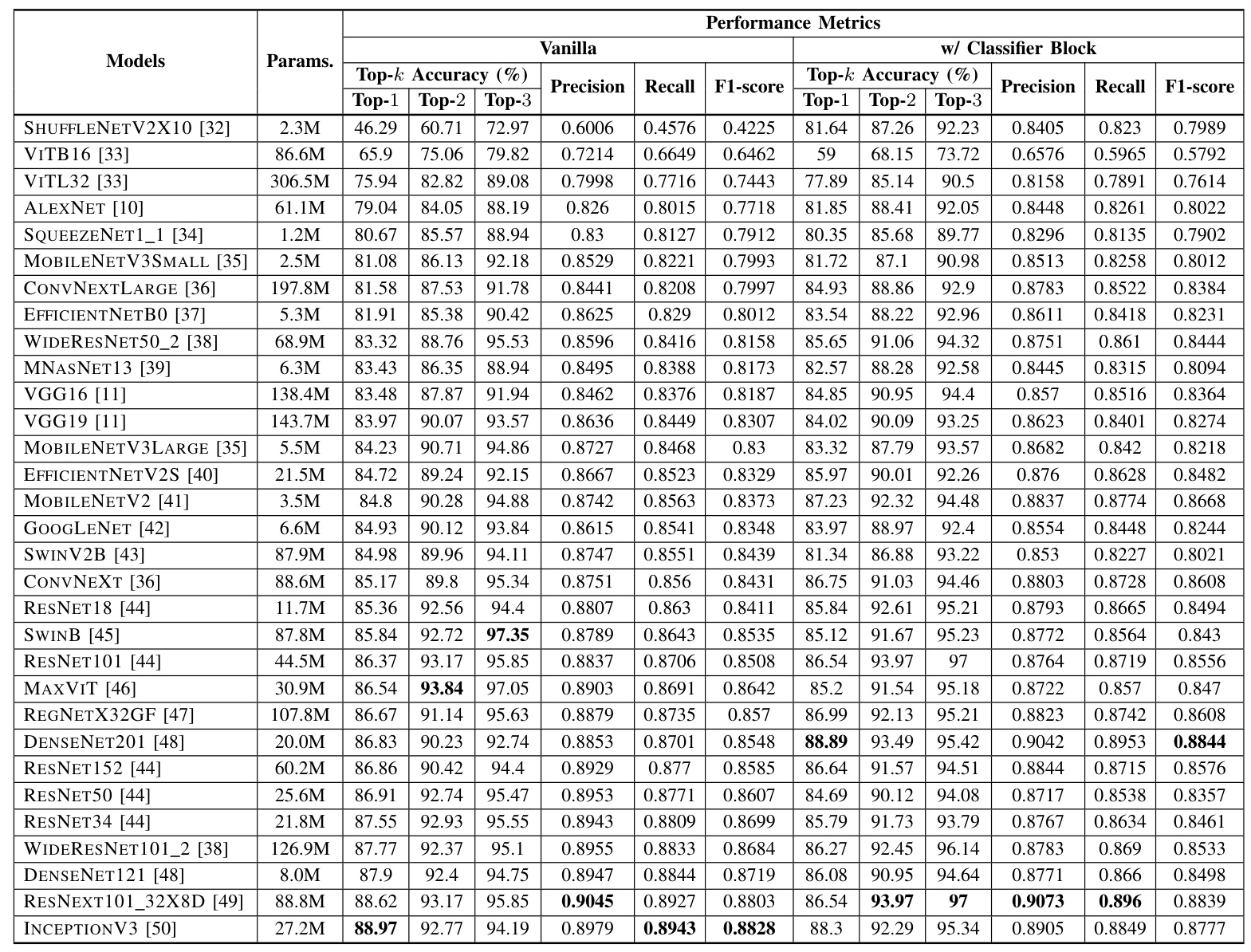

Baseline Models

The researchers used 31 pretrained image classification models as baselines, spanning conventional CNNs, CNNs augmented with attention mechanisms, and transformer-based architectures. These models were fine-tuned on the HaSPeR dataset.

Performance Metrics

Top-k validation accuracy, precision, recall, and F1-score were used as evaluation metrics. The models were trained with stochastic gradient descent (SGD) using a learning rate of 0.001 and a momentum of 0.9.
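Top-k accuracy counts a prediction as correct when the true class is among the model's k highest-scoring classes, so top-2 and top-3 scores are always at least as high as top-1. The sketch below computes it with NumPy alongside precision, recall, and F1. The post does not say how these are averaged over the 11 classes; macro averaging (an unweighted mean over classes) is assumed here.

```python
import numpy as np

def top_k_accuracy(logits, labels, k=1):
    """Fraction of samples whose true label is among the k highest scores."""
    topk = np.argsort(logits, axis=1)[:, -k:]  # indices of the k largest scores per row
    return float(np.mean([label in row for row, label in zip(topk, labels)]))

def macro_prf(preds, labels, num_classes):
    """Macro-averaged precision, recall and F1 over `num_classes` classes."""
    preds, labels = np.asarray(preds), np.asarray(labels)
    p, r, f = [], [], []
    for c in range(num_classes):
        tp = np.sum((preds == c) & (labels == c))
        fp = np.sum((preds == c) & (labels != c))
        fn = np.sum((preds != c) & (labels == c))
        prec = tp / (tp + fp) if tp + fp else 0.0
        rec = tp / (tp + fn) if tp + fn else 0.0
        p.append(prec)
        r.append(rec)
        f.append(2 * prec * rec / (prec + rec) if prec + rec else 0.0)
    return float(np.mean(p)), float(np.mean(r)), float(np.mean(f))
```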

Data Augmentation and Preprocessing

Data augmentation techniques such as Random Resize, Random Perspective, Color Jitter, and Random Horizontal Flip were employed to generate diverse training samples. The input images were resized using Bicubic Interpolation.

5. Results and Analysis

Performance Analysis

The INCEPTIONV3 model achieved the highest top-1 accuracy at 88.97%, followed by MOBILENETV2 at 87.23%. Transformer architectures such as SWINB and MAXVIT were competitive on top-2 and top-3 accuracy.

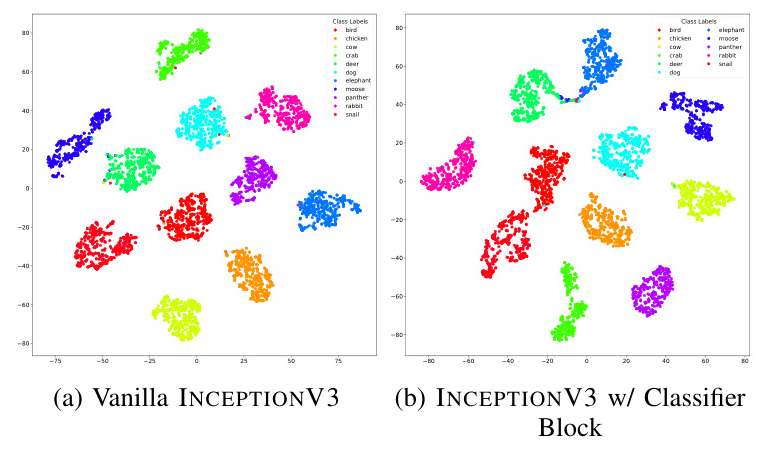

Feature Space Visualization and Analysis

t-Distributed Stochastic Neighbor Embedding (t-SNE) was used to visualize the learned feature space of INCEPTIONV3. The visualizations showed well-separated class clusters with little overlap, suggesting that the model has learned effective decision boundaries between classes.
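t-SNE maps high-dimensional feature vectors to 2D while preserving local neighborhoods, so images the model considers similar land close together. A minimal sketch with scikit-learn's `TSNE`, assuming the features are penultimate-layer activations already extracted for each validation image (a common choice; the post does not specify the layer), and with `embed_features` as a hypothetical helper name:

```python
import numpy as np
from sklearn.manifold import TSNE

def embed_features(features, perplexity=30.0, seed=0):
    """Project N x D feature vectors to N x 2 with t-SNE for plotting."""
    features = np.asarray(features, dtype=np.float32)
    # t-SNE requires the perplexity to be smaller than the number of samples.
    perplexity = min(perplexity, len(features) - 1)
    tsne = TSNE(n_components=2, perplexity=perplexity, init="pca", random_state=seed)
    return tsne.fit_transform(features)
```

Plotting the 2D points as a scatter plot colored by class label (for example with matplotlib) shows how cleanly the classes separate.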

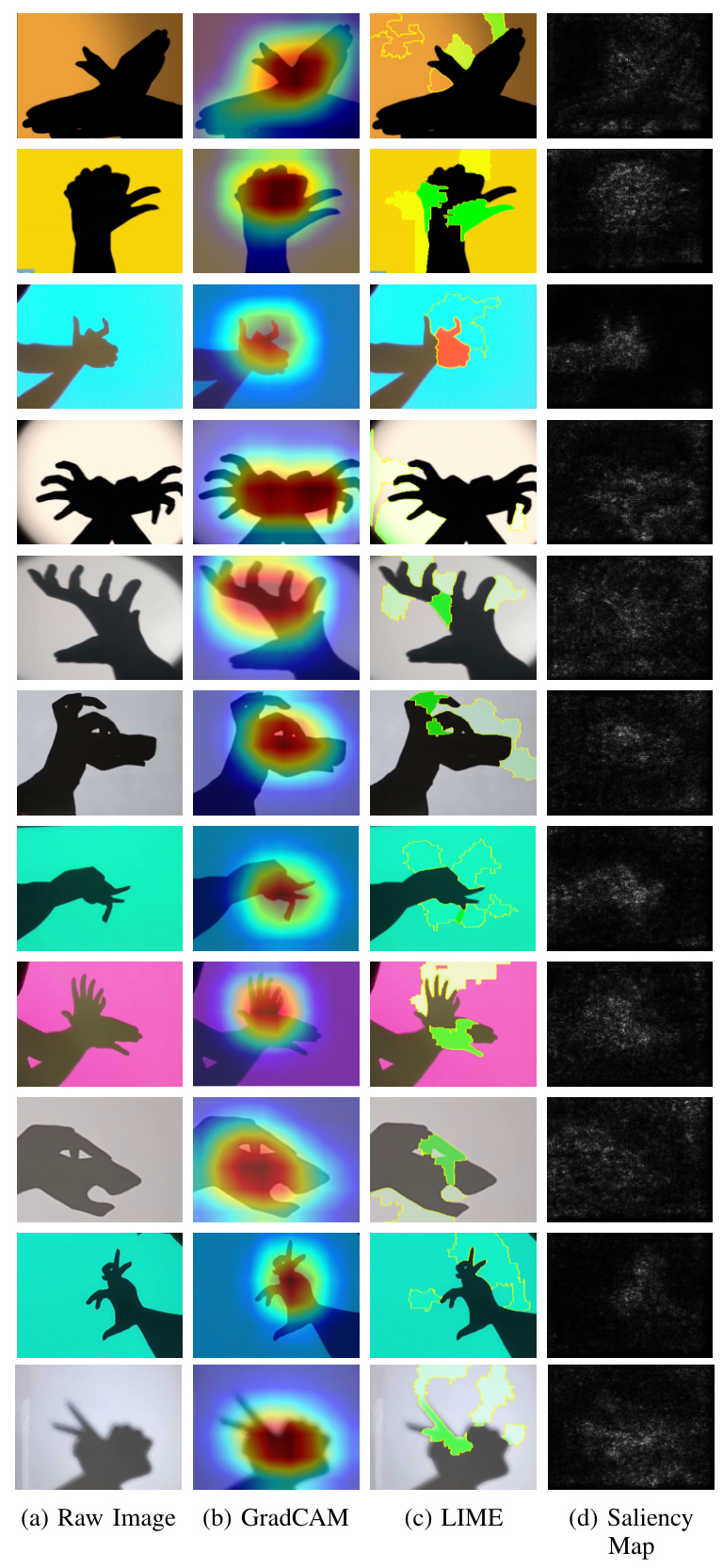

Qualitative Analysis and Explainability

Explainable AI (XAI) techniques such as GradCAM, LIME, and saliency maps were used to examine the decision-making process of the INCEPTIONV3 model. The visualizations showed that the model focuses on intuitive, common-sense traits that distinguish one silhouette from another.
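Grad-CAM weights each feature map of a chosen convolutional layer by the average gradient of the target class score with respect to that map, then sums and rectifies the result to get a coarse heatmap of the regions that raised the score. The sketch below assumes PyTorch; `grad_cam` is a hypothetical helper, not the implementation used in the study.

```python
from typing import Optional

import torch
import torch.nn as nn
import torch.nn.functional as F

def grad_cam(model: nn.Module, target_layer: nn.Module,
             image: torch.Tensor, class_idx: Optional[int] = None) -> torch.Tensor:
    """Grad-CAM heatmap (H x W, scaled to [0, 1]) for a single C x H x W image."""
    captured = []
    # Forward hook stores the target layer's feature maps during the forward pass.
    handle = target_layer.register_forward_hook(lambda mod, inp, out: captured.append(out))
    try:
        model.eval()
        logits = model(image.unsqueeze(0))  # shape (1, num_classes)
    finally:
        handle.remove()
    if class_idx is None:
        class_idx = int(logits.argmax(dim=1))  # explain the predicted class
    acts = captured[0]  # shape (1, K, h, w)
    # Gradient of the chosen class score with respect to the feature maps.
    (grads,) = torch.autograd.grad(logits[0, class_idx], acts)
    weights = grads.mean(dim=(2, 3), keepdim=True)  # global-average-pooled gradients
    cam = F.relu((weights * acts).sum(dim=1, keepdim=True))  # weighted sum over channels
    cam = F.interpolate(cam, size=tuple(image.shape[-2:]), mode="bilinear", align_corners=False)
    cam = cam[0, 0] - cam.min()
    return (cam / cam.max().clamp(min=1e-8)).detach()
```

For torchvision's InceptionV3, the last Inception block, `model.Mixed_7c`, is a common choice of `target_layer`; the input should be preprocessed the same way as during training.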

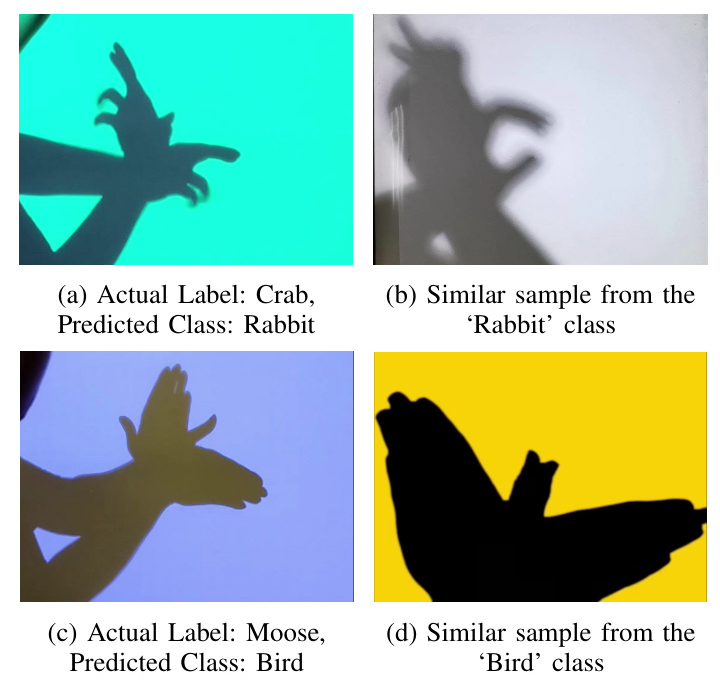

Error Analysis

The confusion matrix revealed that the 'Panther' class had the highest misclassification rate, primarily due to its visual similarity to the 'Dog' and 'Elephant' classes. More generally, misclassifications tended to occur between classes with similar silhouettes.
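This kind of error analysis reads directly off a confusion matrix. The sketch below (NumPy, hypothetical helper names) builds the matrix from integer class indices, computes each class's misclassification rate as one minus its recall, and lists the classes a given class is most often confused with. It is an illustration only; any numbers it produces on toy inputs are not results from the paper.

```python
import numpy as np

def confusion_matrix(labels, preds, num_classes):
    """cm[i, j] counts samples of true class i predicted as class j."""
    cm = np.zeros((num_classes, num_classes), dtype=int)
    np.add.at(cm, (np.asarray(labels), np.asarray(preds)), 1)
    return cm

def per_class_error(cm):
    """Share of each class's samples that were misclassified (1 - recall).
    Classes with no samples get NaN instead of a misleading value."""
    totals = cm.sum(axis=1)
    correct = np.diag(cm)
    err = np.full(len(cm), np.nan)
    mask = totals > 0
    err[mask] = 1.0 - correct[mask] / totals[mask]
    return err

def top_confusions(cm, class_names, true_class):
    """Classes that `true_class` is mistaken for, most frequent first."""
    i = class_names.index(true_class)
    row = [(int(cm[i, j]), class_names[j])
           for j in range(len(class_names)) if j != i and cm[i, j] > 0]
    return [name for _, name in sorted(row, reverse=True)]
```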

6. Overall Conclusion

The HaSPeR dataset represents a significant step towards preserving the art of hand shadow puppetry. The study established a performance benchmark for various image classification models, with INCEPTIONV3 emerging as the best-performing model. The dataset and findings offer valuable insights for developing AI tools to teach and preserve ombromanie.

Future work includes enriching the dataset with more diverse samples and exploring gesture detection technologies for classifying hand shadow puppets. The researchers hope that HaSPeR will inspire further exploration and innovation in the field of hand shadow puppetry.