Authors:

Eito Ikuta, Yohan Lee, Akihiro Iohara, Yu Saito, Toshiyuki Tanaka

Paper:

https://arxiv.org/abs/2408.10846

Introduction

Image harmonization is the computer vision task of integrating a foreground object from one image into the background of another so that the composite looks cohesive. Traditional methods focus primarily on color and illumination adjustments. However, selectively transferring geometric features such as holes, cracks, droplets, and dents from one material to another, independently of the material-specific surface texture, remains a challenge. This study introduces “Harmonizing Attention,” a training-free approach that uses diffusion models for texture-aware geometry transfer.

Related Work

Image Harmonization

Traditional image harmonization methods match visual appearance through color-to-color transformations, which can be either linear or non-linear. Recent advances in deep learning have produced more sophisticated image composition and harmonization techniques, including diffusion-model-based methods such as ObjectStitch and TF-ICON. These methods focus primarily on style transfer, covering attributes such as color schemes, brushstrokes, textures, and patterns.

Painterly Image Harmonization

Painterly image harmonization integrates a photographic foreground into an artistic background, resulting in a visually coherent painting. Techniques like PHDiffusion and TF-GPH have adapted GAN frameworks to diffusion models, providing flexible options for attention-based image editing methods.

Research Methodology

Objective

The objective is to synthesize an image that seamlessly integrates surface geometry information from a source image into a target background image, guided by a foreground mask, while maintaining textural continuity with the target image.

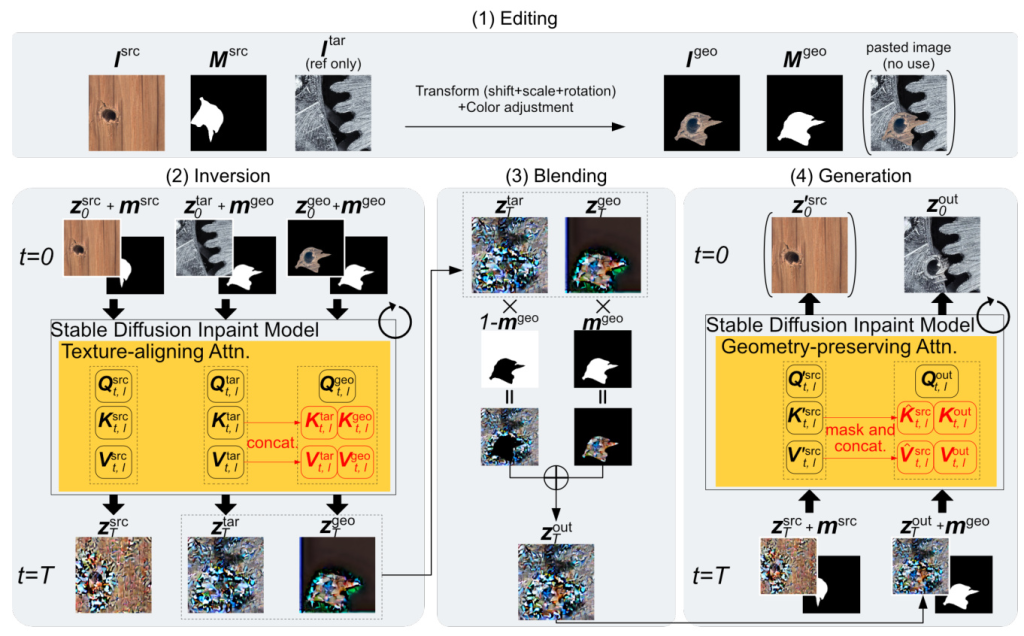

Harmonizing Attention Framework

The proposed framework leverages Stable Diffusion (SD) and encompasses both inversion and generation processes. The key aspect is modifying self-attention computation during both inversion and generation to query additional information from the source and target images, enabling a more coherent and context-aware transfer process.

Key Components

- Texture-aligning Attention: Used during the inversion process to align the geometry image with the target domain.

- Geometry-preserving Attention: Used during the generation process to preserve the geometry while ensuring seamless integration.
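The idea shared by both components, letting self-attention query tokens from additional images, can be sketched as follows. This is a minimal pure-Python illustration that assumes reference tokens are simply appended to the keys and values before the softmax. The function names (`attention`, `extended_attention`) and the concatenation scheme are illustrative assumptions, not the paper's exact formulation.

```python
import math


def softmax(row):
    """Numerically stable softmax over a list of floats."""
    peak = max(row)
    exps = [math.exp(x - peak) for x in row]
    total = sum(exps)
    return [e / total for e in exps]


def attention(queries, keys, values):
    """Scaled dot-product attention on lists of equal-length vectors."""
    scale = 1.0 / math.sqrt(len(queries[0]))
    dim = len(values[0])
    output = []
    for q in queries:
        scores = [scale * sum(qi * ki for qi, ki in zip(q, k)) for k in keys]
        weights = softmax(scores)
        output.append(
            [sum(w * v[d] for w, v in zip(weights, values)) for d in range(dim)]
        )
    return output


def extended_attention(queries, own_kv, reference_kvs):
    """Attend over an image's own tokens plus tokens from reference images.

    own_kv and every entry of reference_kvs are (keys, values) pairs.
    Appending reference tokens is an assumption made for illustration.
    """
    keys = list(own_kv[0])
    values = list(own_kv[1])
    for ref_keys, ref_values in reference_kvs:
        keys += ref_keys
        values += ref_values
    return attention(queries, keys, values)


# Toy example: one query token, one own token, one reference token.
query = [[1.0, 0.0]]
own = ([[1.0, 0.0]], [[1.0, 0.0]])
reference = ([[1.0, 0.0]], [[0.0, 1.0]])
mixed = extended_attention(query, own, [reference])
```

In this toy example both keys match the query equally, so the output mixes the image's own value and the reference value in equal parts. In the framework described above, the reference tokens would come from the source or target image, depending on whether the step belongs to inversion or generation.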

Experimental Design

Setup

The experiments use the publicly available SD inpainting model checkpoint on HuggingFace. Both source and target images are cropped to a uniform size of 512 × 512 pixels. The number of diffusion steps is set to 25, and the DDIM sampler is employed for both inversion and generation processes.
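To make the 25-step setting concrete, the sketch below builds an evenly spaced timestep schedule and shows how inversion and generation traverse it in opposite directions. It assumes a 1000-step training schedule and one simple spacing rule; actual samplers may space their timesteps differently, and `ddim_timesteps` is a hypothetical helper name.

```python
def ddim_timesteps(num_inference_steps=25, num_train_timesteps=1000):
    """Return evenly spaced timesteps in ascending order.

    num_train_timesteps=1000 and this spacing rule are assumptions
    made for illustration.
    """
    stride = num_train_timesteps // num_inference_steps
    return [i * stride for i in range(num_inference_steps)]


# Inversion walks from the clean image toward noise (ascending timesteps).
inversion_order = ddim_timesteps()
# Generation walks back from noise to the image (descending timesteps).
generation_order = inversion_order[::-1]
```

Under these assumptions, the schedule visits every 40th training timestep, and generation replays the inversion path in reverse.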

Datasets and Metrics

Images from MVTec AD and Pixabay are used. The performance is evaluated using metrics such as LPIPS, CLIP, and DISTS, which assess image harmonization quality. A total of 150 generated images are used for quantitative performance evaluation.

Baselines

Four diffusion-model-based methods are selected for comparison: PHDiffusion, TF-GPH, TF-ICON, and Paint by Example. Suitable prompts are manually set for generating each sample with TF-ICON.

Results and Analysis

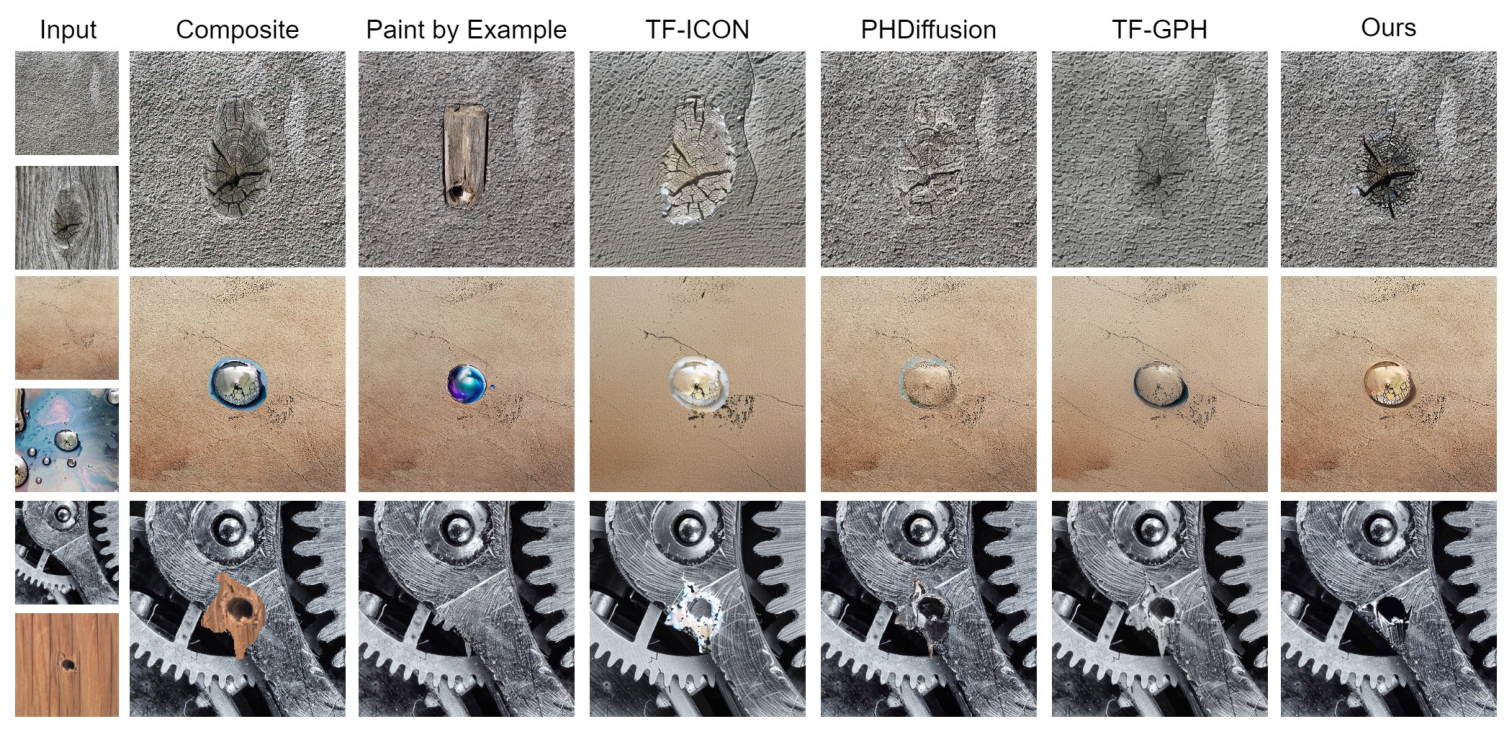

Qualitative Comparison

The proposed method, Harmonizing Attention, excels in preserving the geometry and structural details of foreground objects while adapting the texture and material properties to match the background. This dual capability results in the most natural and realistic geometry integration across various scenarios.

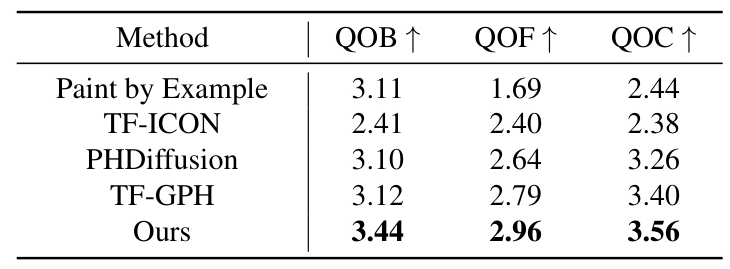

User Study

In a user study, 105 participants rated images on background preservation, foreground preservation, and seamless composition. Harmonizing Attention consistently outperformed the existing methods on all three criteria.

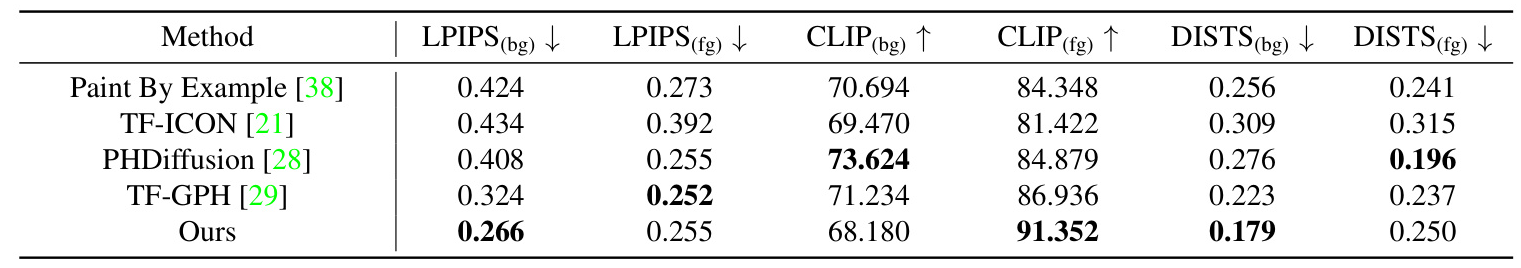

Quantitative Comparison

The method achieved the lowest LPIPS(bg) and DISTS(bg) scores, indicating superior preservation of background structural integrity. It also excelled in foreground semantic consistency, evidenced by the highest CLIP(fg) score.
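As a rough illustration of what a CLIP-based consistency score measures, the sketch below computes the cosine similarity between two embedding vectors. It assumes the score compares embeddings of two foreground regions, as is common for CLIP-based scores; the summary does not state the exact protocol. The vectors are made-up placeholders, not real CLIP outputs.

```python
import math


def cosine_similarity(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


# Placeholder embeddings standing in for encoder outputs (hypothetical values).
source_fg_embedding = [0.2, 0.7, 0.1]
result_fg_embedding = [0.25, 0.65, 0.05]
score = cosine_similarity(source_fg_embedding, result_fg_embedding)
```

A higher value means the two embeddings point in more similar directions, which is why a higher CLIP(fg) is read as better semantic consistency. LPIPS and DISTS are distances, so lower values are better for them.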

Ablation Studies

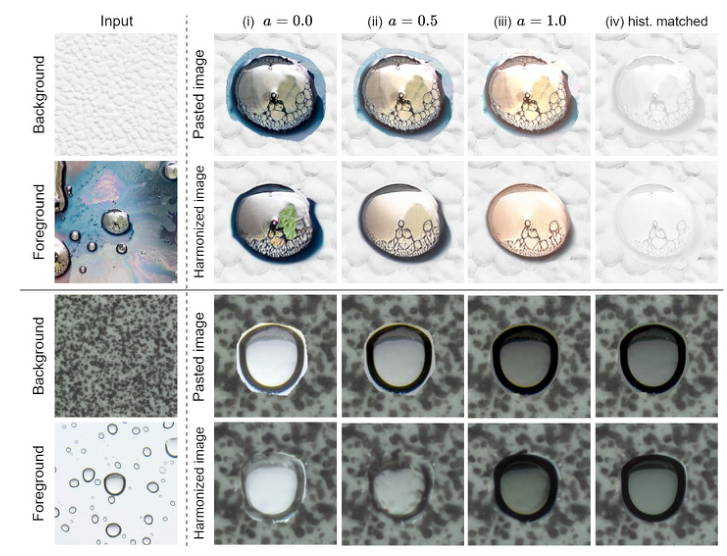

Effectiveness of Color Shift

The efficacy of the color shift was assessed by varying its parameter and comparing the results with histogram matching. A modest shift of the source image's color toward that of the target image proved effective for transferring geometry.
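One simple way to picture a partial color shift is to move the source image's per-channel mean toward the target's mean by a tunable fraction. The sketch below does exactly that; it is an assumption made for illustration, not the formula used in the paper. The parameter name `strength` is hypothetical.

```python
def channel_means(pixels):
    """Per-channel mean of a list of (r, g, b) pixels."""
    count = len(pixels)
    return [sum(p[c] for p in pixels) / count for c in range(3)]


def shift_color(source, target, strength):
    """Shift the source's channel means toward the target's.

    strength=0 leaves the source unchanged; strength=1 matches the
    target's channel means. Values are clamped to the range [0, 255].
    """
    src_mean = channel_means(source)
    tgt_mean = channel_means(target)
    offset = [strength * (t - s) for s, t in zip(src_mean, tgt_mean)]
    return [
        tuple(min(255.0, max(0.0, p[c] + offset[c])) for c in range(3))
        for p in source
    ]


# Toy images given as flat lists of RGB pixels.
source_pixels = [(100, 100, 100), (120, 120, 120)]
target_pixels = [(200, 50, 110)]
shifted = shift_color(source_pixels, target_pixels, strength=0.5)
```

Because every pixel receives the same offset, the differences between neighboring pixels, which carry the geometry, are preserved apart from clamping, while the overall color moves toward the target.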

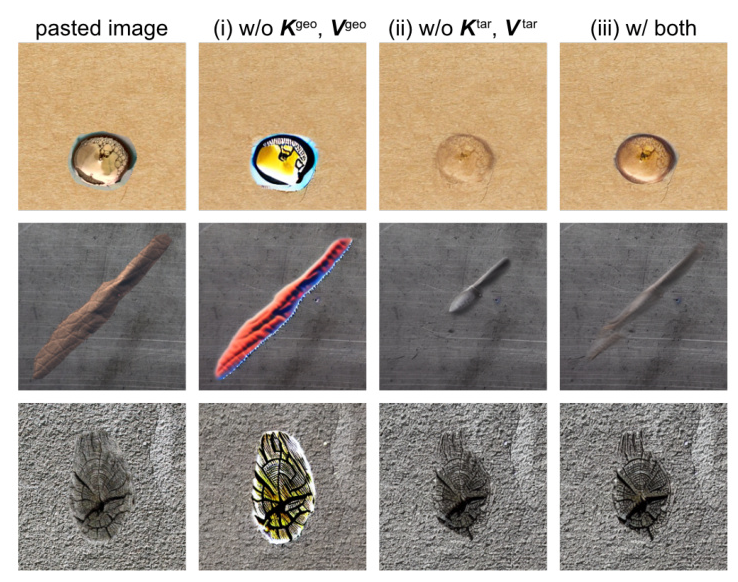

Effectiveness of Texture-aligning Attention

An ablation study comparing different inversion configurations demonstrated that using both geometry-derived and target-derived attention components yields results that are more harmoniously blended with the target image.

Effectiveness of Geometry-preserving Attention

Comparing generation results under different conditions showed that source-image-derived attention plays a key role in maintaining geometry information, but its influence needs to be balanced by using self-attention simultaneously.

Limitations

The primary limitation lies in the difficulty of transferring extremely large or small geometries. The ratio of texture-related to geometry-related information in the customized attention calculation is strongly dependent on the size of the geometry transfer area. Future improvements could focus on dynamically adjusting attention based on transfer area size and developing more robust attention mechanisms.

Overall Conclusion

Harmonizing Attention captures and transfers material-independent geometry while preserving material-specific textural continuity. The method uses custom Texture-aligning Attention during inversion and Geometry-preserving Attention during generation, which lets it reference source geometry and target texture information simultaneously. It achieves effective geometry transfer without additional training or prompt engineering, improving the creation of photorealistic composites.