Authors:

Huang Lei, Jiaming Guo, Guanhua He, Xishan Zhang, Rui Zhang, Shaohui Peng, Shaoli Liu, Tianshi Chen

Paper:

https://arxiv.org/abs/2408.08506

Introduction

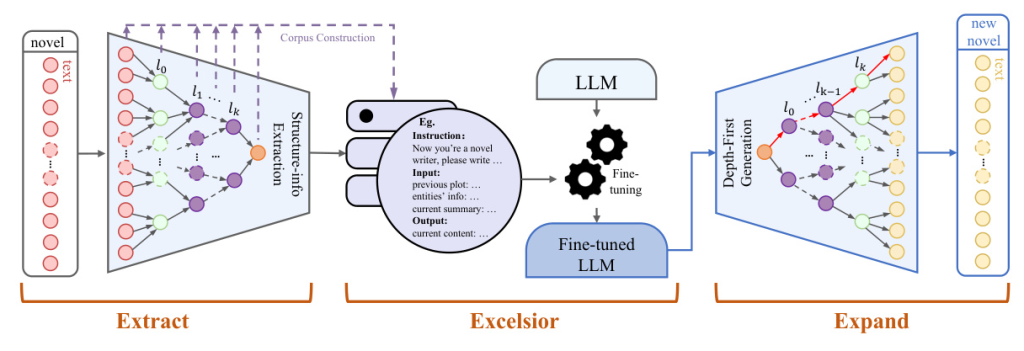

The generation of long-form texts such as novels using artificial intelligence has been a persistent challenge. Traditional methods using large language models (LLMs) often result in novels that lack logical coherence and depth in character and event depiction. This paper introduces a novel method named Extracting, Excelsior, and Expanding (Ex3) to address these issues. Ex3 extracts structural information from raw novel data, fine-tunes LLMs with this data, and uses a tree-like expansion method to generate high-quality, long-form novels.

Related Work

Long Context Transformers

LLMs are limited in handling lengthy texts and retaining extensive contextual memory. Various methods improve the attention mechanism and the encoding of positional information so that models can accept longer inputs. However, these methods extend how much a model can read, not how much coherent output it can produce, and it is the output length that full-length novels require.

Automatic Novel Generation

Hierarchical approaches have been explored in which an LLM first generates an outline and is then prompted further to expand it into a novel. Despite these efforts, inconsistencies and logical confusion appear as the text grows longer. Existing methods rely heavily on the linguistic capabilities of LLMs without deeply exploring and learning from real novel texts.

Extract, Excelsior, and Expand

The Ex3 framework consists of three main processes: Extracting, Excelsior, and Expanding.

Extracting

This process involves reverse-engineering the hierarchical structure information from raw novel texts to reconstruct the logical layout and narrative design. The steps include:

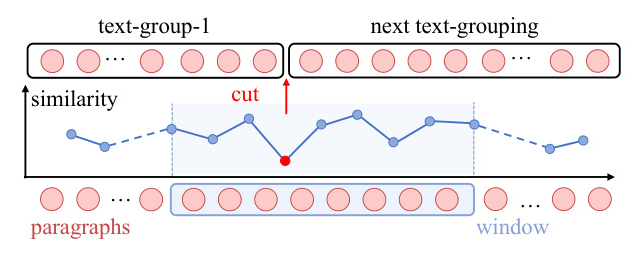

Grouping the Text by Similarity

The novel text is split into smaller parts based on semantic similarity, so that each part groups a story or scene without breaking its semantic integrity.
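The grouping step can be sketched as follows, assuming that adjacent paragraphs are compared and that a new group starts wherever their similarity falls below a threshold. The bag-of-words cosine similarity, the `threshold` value, and all function names are illustrative assumptions, not the paper's implementation; a practical version would more likely compare sentence embeddings.

```python
import math
import re
from collections import Counter


def bag_of_words(text: str) -> Counter:
    """Lowercased word counts; a crude stand-in (assumption) for an embedding."""
    return Counter(re.findall(r"\w+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[w] * b[w] for w in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(
        sum(v * v for v in b.values())
    )
    return dot / norm if norm else 0.0


def group_by_similarity(paragraphs: list[str], threshold: float = 0.3) -> list[list[str]]:
    """Merge consecutive paragraphs into groups, starting a new group when the
    similarity to the previous paragraph drops below `threshold` (hypothetical rule)."""
    groups: list[list[str]] = []
    prev = None
    for para in paragraphs:
        vec = bag_of_words(para)
        if prev is None or cosine(prev, vec) < threshold:
            groups.append([para])  # low similarity: treat as a scene boundary
        else:
            groups[-1].append(para)  # same story or scene continues
        prev = vec
    return groups
```

Lowering `threshold` produces fewer, larger groups; raising it splits the text more aggressively.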

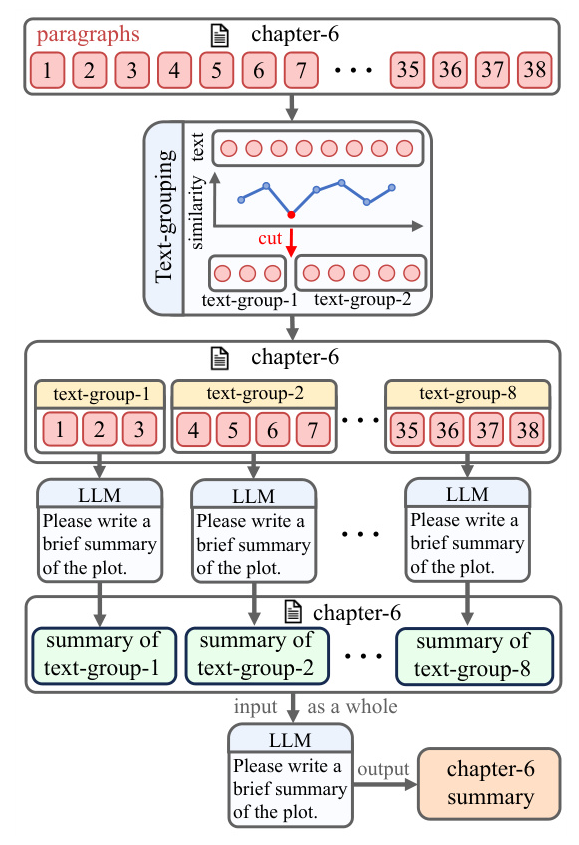

Chapter Summarizing

Chapters are divided into smaller sections, and summaries are generated for each section. These summaries are then merged to form a composite overview, which is used to generate a coherent chapter summary.
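A minimal sketch of this two-pass chapter summary follows. It assumes fixed-size word windows as sections, and `summarize` is a hypothetical placeholder for an LLM call that simply keeps the first few words so the example runs offline.

```python
def summarize(text: str, max_words: int = 12) -> str:
    """Hypothetical placeholder for an LLM summarization call: keeps the
    first `max_words` words so the sketch is runnable without a model."""
    return " ".join(text.split()[:max_words])


def split_into_sections(chapter: str, words_per_section: int = 200) -> list[str]:
    """Split a chapter into fixed-size word windows (an assumed splitting rule)."""
    words = chapter.split()
    return [
        " ".join(words[i:i + words_per_section])
        for i in range(0, len(words), words_per_section)
    ]


def summarize_chapter(chapter: str, words_per_section: int = 200) -> str:
    """Summarize each section, merge the partial summaries into a composite
    overview, then summarize that overview into one chapter summary."""
    section_summaries = [
        summarize(s) for s in split_into_sections(chapter, words_per_section)
    ]
    composite = "\n".join(section_summaries)  # the composite overview
    return summarize(composite)
```

The second `summarize` call is what turns several local summaries into a single coherent chapter summary; with a real model it would be prompted differently from the per-section calls.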

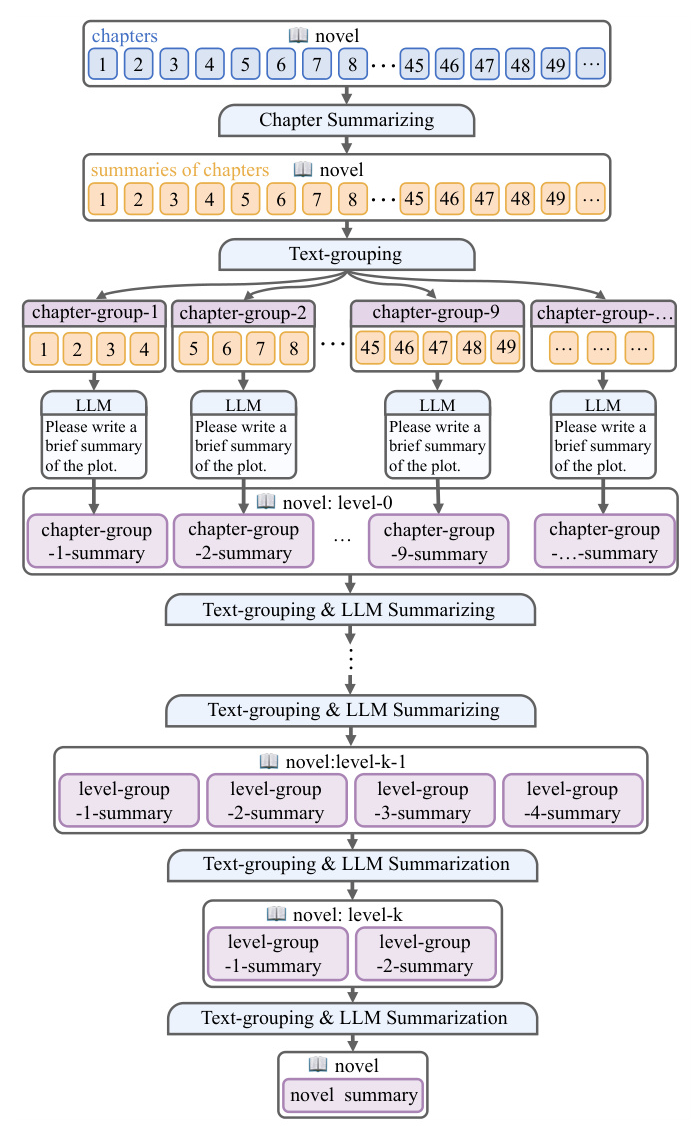

Recursively Summarizing

A recursive summarizing method is used to obtain hierarchical structure information. Chapter summaries are grouped by semantic similarity, and outlines are generated for each group. This process is repeated iteratively to obtain outlines with varying levels of information granularity.
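The recursion can be sketched as a loop that keeps grouping and outlining until a single top-level outline remains. Here `write_outline` is a hypothetical placeholder for an LLM call, and the default fixed-size `chunk` grouping is a simplifying stand-in for grouping by semantic similarity; any grouping function that shrinks the list can be passed as `group_fn`.

```python
from typing import Callable


def write_outline(texts: list[str]) -> str:
    """Hypothetical placeholder for an LLM call that writes one outline for a
    group of summaries; here it just joins the first word of each."""
    return " / ".join(t.split()[0] for t in texts if t.split())


def chunk(items: list[str], size: int = 3) -> list[list[str]]:
    """Fixed-size grouping; a stand-in (assumption) for semantic grouping."""
    return [items[i:i + size] for i in range(0, len(items), size)]


def recursive_outlines(
    chapter_summaries: list[str],
    group_fn: Callable[[list[str]], list[list[str]]] = chunk,
) -> list[list[str]]:
    """Return outline levels from finest (chapter summaries) to coarsest
    (a single top-level outline). `group_fn` must return fewer groups than
    items whenever there is more than one item, or the loop will not end."""
    levels = [chapter_summaries]
    while len(levels[-1]) > 1:
        groups = group_fn(levels[-1])
        levels.append([write_outline(g) for g in groups])
    return levels
```

Each entry of the returned list is one level of granularity, which is the hierarchical structure information the method aims to recover.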

Entity Extraction

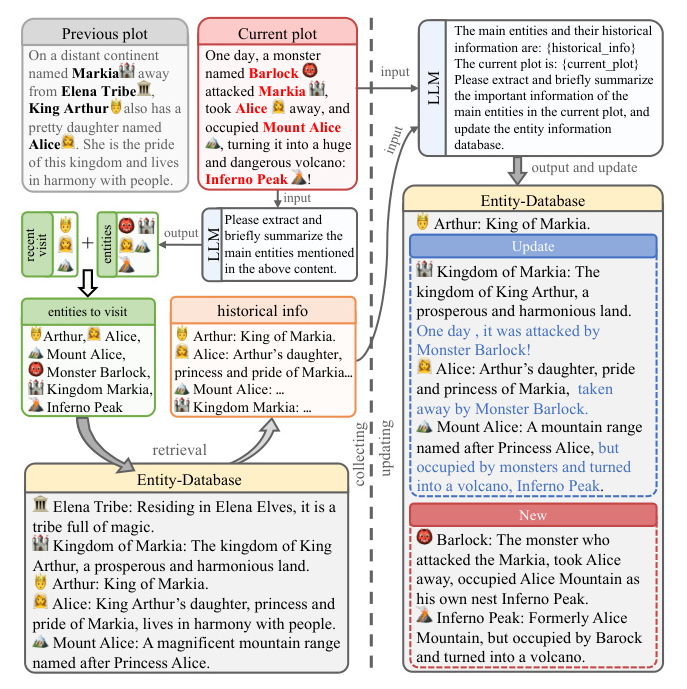

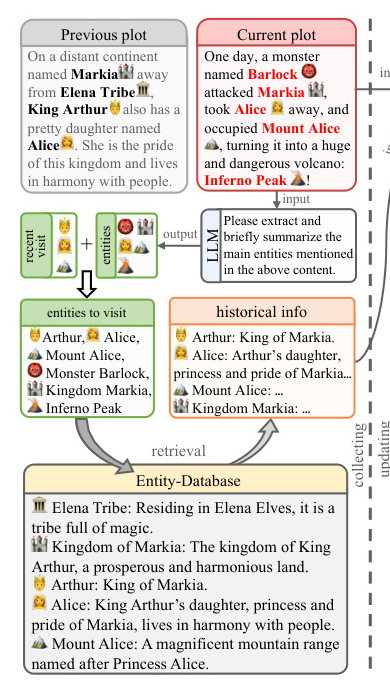

Entity extraction keeps entity information consistent throughout the text, which improves coherence. The method maintains an entity information database and uses the segmented paragraph groups as its primary processing units.
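One way to picture the entity information database is a mapping from entity names to attribute dictionaries, updated once per paragraph group. The sketch below assumes that structure and a "newest value wins" merge rule; the class, its methods, and the attribute format are hypothetical, and the actual extraction would be done by an LLM that is not shown here.

```python
from dataclasses import dataclass, field


@dataclass
class EntityDB:
    """Hypothetical entity information database: entity name -> attributes."""
    entities: dict[str, dict[str, str]] = field(default_factory=dict)

    def update(self, extracted: dict[str, dict[str, str]]) -> None:
        """Merge attributes extracted from one paragraph group. Newer values
        overwrite older ones, so the database reflects the latest state."""
        for name, attrs in extracted.items():
            self.entities.setdefault(name, {}).update(attrs)

    def context_for(self, names: list[str]) -> str:
        """Render known facts about the given entities, e.g. as prompt
        context; unknown names are skipped."""
        lines = []
        for name in names:
            attrs = self.entities.get(name)
            if attrs:
                facts = "; ".join(f"{k}: {v}" for k, v in sorted(attrs.items()))
                lines.append(f"{name} ({facts})")
        return "\n".join(lines)
```

For example, after updating with `{"Lin": {"location": "temple"}}` and later `{"Lin": {"location": "market"}}`, the database records Lin's location as `market`.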

Excelsior

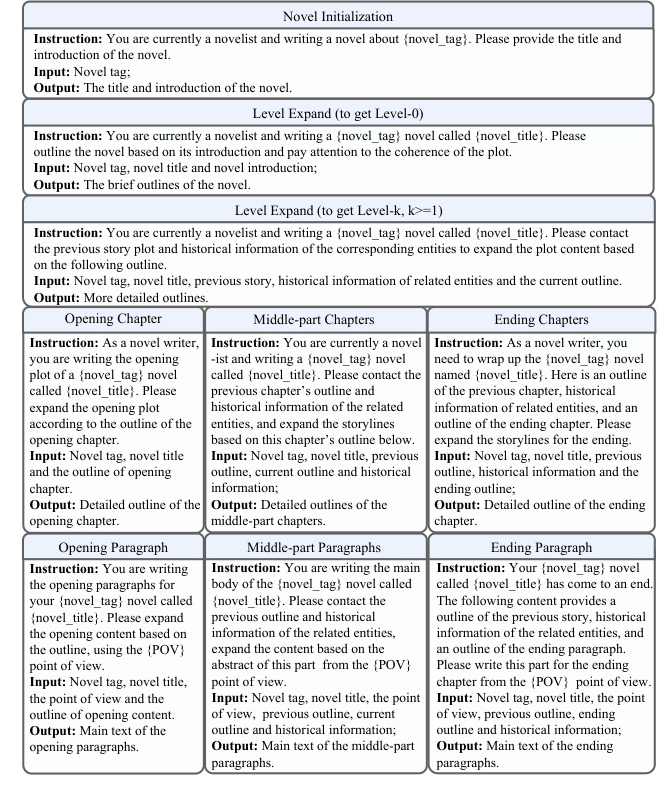

In this stage, the information extracted from the novel text is organized into a tree-like structure, and five distinct categories of prompts are designed to turn it into corpora for supervised fine-tuning. The corpora are built from approximately 800 web novels covering various genres. The resulting fine-tuned model generates text in a writing style that closely matches the genre of the novel.
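To illustrate how a tree can become supervised fine-tuning data, the sketch below emits one prompt/response pair per internal node, asking the model to expand an outline into its children. The single `TEMPLATE` is a hypothetical example of one prompt category; the paper's five actual prompt categories are not reproduced. The `Node` class and the JSONL output format are also assumptions.

```python
import json
from dataclasses import dataclass, field


@dataclass
class Node:
    """One node of the extracted tree: an outline, or raw text at the leaves."""
    text: str
    children: list["Node"] = field(default_factory=list)


# Hypothetical template for ONE prompt category (outline expansion).
TEMPLATE = "Expand the following outline into {n} more detailed parts:\n{outline}"


def expansion_pairs(root: Node) -> list[dict[str, str]]:
    """Walk the tree and emit one SFT example per internal node.
    Traversal order does not matter for training data."""
    pairs = []
    stack = [root]
    while stack:
        node = stack.pop()
        if node.children:
            pairs.append({
                "prompt": TEMPLATE.format(n=len(node.children), outline=node.text),
                "response": "\n".join(c.text for c in node.children),
            })
            stack.extend(node.children)
    return pairs


def to_jsonl(pairs: list[dict[str, str]]) -> str:
    """One JSON object per line; ensure_ascii=False keeps Chinese text readable."""
    return "\n".join(json.dumps(p, ensure_ascii=False) for p in pairs)
```

Other prompt categories would be further generator functions over the same tree, each producing its own kind of pair.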

Expanding

Ex3 uses a depth-first writing mode to exploit the structure information when expanding the novel. The theme and hierarchical depth of the novel are specified manually; the writing assistant then outputs the novel title and introduction, and the rest of the writing process is fully automated. The fine-tuned writing assistant is responsible for text expansion, while a separate general-purpose LLM extracts entities and updates the entity information database.
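The depth-first mode can be sketched as a recursive expansion in which each node is expanded all the way down to the target depth before its next sibling is started. In this sketch, `expand` stands in for the fine-tuned writing assistant and `on_leaf` marks where a separate model could update the entity database; both callables, their signatures, and the function name are hypothetical.

```python
from typing import Callable, Optional

# expand(outline, ancestors) -> child outlines (or prose at the last level).
Expander = Callable[[str, list[str]], list[str]]


def write_depth_first(
    root: str,
    max_depth: int,
    expand: Expander,
    on_leaf: Optional[Callable[[str], None]] = None,
) -> list[str]:
    """Expand the tree depth-first and return leaf passages in reading order.

    Because a subtree is finished before its next sibling is touched, every
    passage is written after everything that precedes it in the story.
    """
    leaves: list[str] = []

    def visit(text: str, depth: int, ancestors: list[str]) -> None:
        if depth == max_depth:
            leaves.append(text)
            if on_leaf is not None:
                on_leaf(text)  # e.g. entity extraction and database update
            return
        for child in expand(text, ancestors):
            visit(child, depth + 1, ancestors + [text])

    visit(root, 0, [])
    return leaves
```

Passing the ancestor chain to `expand` lets each expansion see the higher-level outlines it must stay consistent with.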

Evaluation

Experiment Setup

The effectiveness of Ex3 is evaluated by comparing its novels with those produced by several baselines from the same premises. Two sets of novels of different lengths are generated: long novels (~10k words) and medium-length novels (~4k words).

Baselines

The following baselines are used for comparison:

1. Rolling-GPT
2. DOC
3. Re3
4. RecurrentGPT

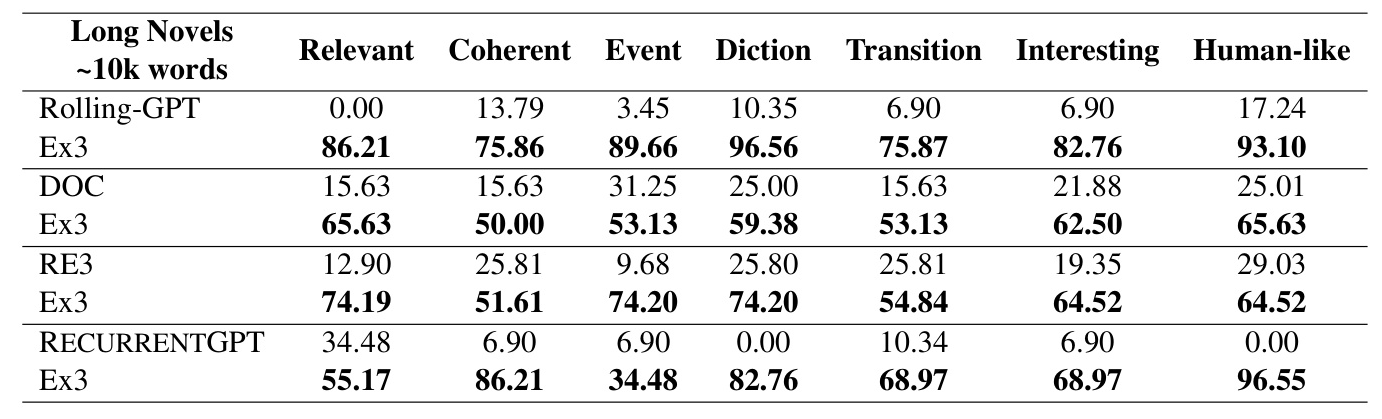

Human Evaluation

Human readers rate the generated novels on relevance, coherence, interest, event, diction, transition, and human-likeness. Ex3 is found to produce better novels than the baselines in plot development, use of literary devices, and overall human-likeness.

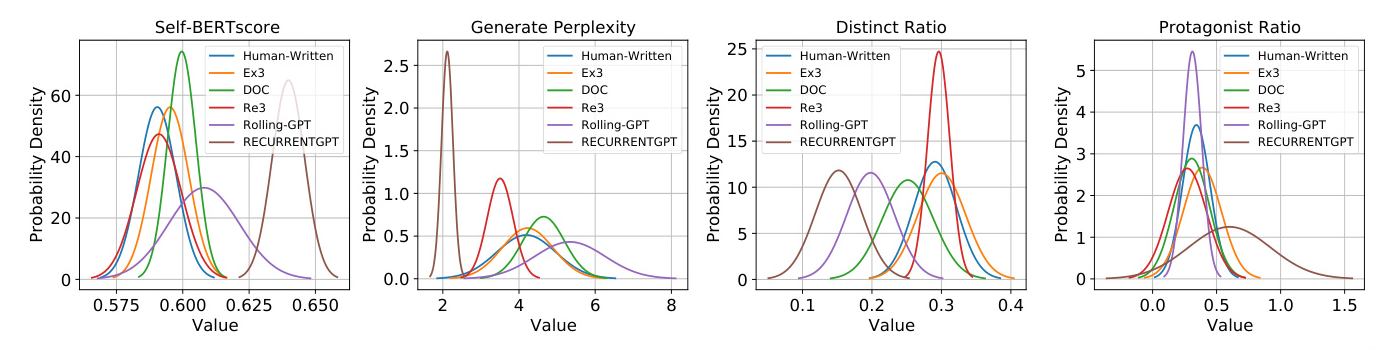

Automatic Metrics

Automatic evaluation experiments compare the distributions of several metrics between human-written and generated novels: Self-BERTScore, Generation Perplexity, Distinct Ratio, and Protagonist Ratio. On these metrics, Ex3's novels are comparable to human-written novels.
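Two of these metrics have common textbook definitions that can be sketched briefly. The code assumes that Distinct Ratio follows the usual distinct-n definition (unique n-grams over total n-grams) and that perplexity is computed from natural-log token probabilities supplied by some language model; neither assumption is confirmed by the text above, and Self-BERTScore and Protagonist Ratio are not sketched.

```python
import math


def distinct_ratio(tokens: list[str], n: int = 2) -> float:
    """Distinct-n: unique n-grams divided by total n-grams (a common
    definition, assumed here rather than taken from the paper)."""
    ngrams = [tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1)]
    return len(set(ngrams)) / len(ngrams) if ngrams else 0.0


def perplexity(token_logprobs: list[float]) -> float:
    """Perplexity from natural-log token probabilities assigned by a language
    model: the exponential of the mean negative log-likelihood."""
    if not token_logprobs:
        raise ValueError("need at least one token")
    return math.exp(-sum(token_logprobs) / len(token_logprobs))
```

A higher distinct ratio indicates less repetitive text, while a lower perplexity means the scoring model finds the text more predictable; comparing the distributions of such scores against human-written novels is the idea behind this evaluation.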

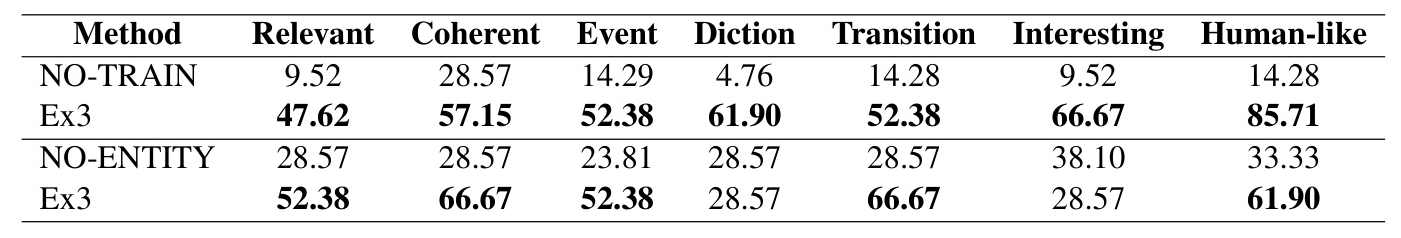

Ablation Study

Ablation experiments are conducted to understand the effect of different components in Ex3. The results indicate that the fine-tuned LLM and entity extraction module significantly enhance the quality of the generated novels.

Conclusion

Ex3 is an automatic novel writing framework that extracts structure information from raw novel data, fine-tunes LLMs, and uses a tree-like expansion method to generate high-quality, long-form novels. The experimental results demonstrate that Ex3 outperforms previous methods in producing coherent and engaging novels.

Acknowledgement

The authors thank Meng Yuan from Cambricon Technologies for her helpful discussions and contributions to data collection. This work is partially supported by various grants and research programs.

Limitations

Ex3 has some limitations, including the inability to comprehensively evaluate its performance on longer novels due to time and resource constraints. The training and generation primarily focused on Chinese novels, and further testing is needed for other languages. Future work will focus on optimizing the mechanism, expanding to multiple languages, and exploring interactive generation modes.

Ethics Statement

Large language models can produce false and harmful content. The use of audited human novel data to fine-tune the LLM reduces the potential for harmful content. The application of the system may require censorship and restraint to prevent negative impacts on the field of novel writing.