Authors:

Jiao Chen, Suyan Dai, Fangfang Chen, Zuohong Lv, Jianhua Tang

Paper:

https://arxiv.org/abs/2408.09972

Introduction

Background

The rapid advancement of intelligent transportation and autonomous driving technologies has brought about significant challenges in motion planning systems. Traditional methods often rely on fixed algorithms and models, which struggle to adapt to dynamic traffic conditions and personalized driver needs. Integrating large language models (LLMs) into autonomous vehicles can enhance system personalization and adaptability, enabling better handling of complex and dynamic open-world scenarios.

Problem Statement

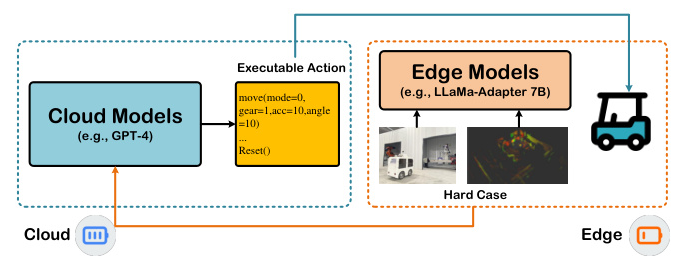

Despite the potential of LLMs, traditional edge computing models struggle to process complex driving data in real time. This study introduces EC-Drive, an edge-cloud collaborative autonomous driving system with data drift detection. EC-Drive aims to reduce inference latency and improve system efficiency by uploading only selected data to the cloud, where larger models such as GPT-4 process it, which conserves communication resources.

Related Work

Motion Planning in Autonomous Driving

- Rule-based Methods: These generate paths based on predefined rules considering environmental constraints like road geometry and traffic signals. They are simple and efficient but rigid and struggle with unexpected changes.

- Optimization-based Methods: These use optimization algorithms to compute optimal trajectories by minimizing a cost function considering factors like time, energy, safety, and comfort. They are precise but computationally intensive.

- Learning-based Methods: These use machine learning to adapt to dynamic environments by learning from past data. They provide adaptability but require significant data and resources.
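To make the optimization-based idea in the list above concrete, here is a minimal sketch of choosing among candidate trajectories by minimizing a weighted cost over time, energy, safety, and comfort. The `Candidate` fields, the weights, and the form of the safety penalty are illustrative assumptions, not taken from the paper.

```python
from dataclasses import dataclass


@dataclass
class Candidate:
    """A hypothetical candidate trajectory summarized by four scalar features."""
    name: str
    travel_time_s: float  # time to reach the goal
    energy_kj: float      # estimated energy use
    min_gap_m: float      # smallest distance to any obstacle along the path
    max_jerk: float       # peak jerk in m/s^3, a proxy for discomfort


def cost(c, w_time=1.0, w_energy=0.1, w_safety=50.0, w_comfort=2.0):
    """Weighted sum of the four factors; all weights are assumed values."""
    # Smaller gaps are penalized more; the gap is clamped to avoid division by zero.
    safety_penalty = 1.0 / max(c.min_gap_m, 0.1)
    return (w_time * c.travel_time_s
            + w_energy * c.energy_kj
            + w_safety * safety_penalty
            + w_comfort * c.max_jerk)


def best_trajectory(candidates):
    """Return the candidate with the lowest cost."""
    return min(candidates, key=cost)


candidates = [
    Candidate("aggressive", travel_time_s=10.0, energy_kj=50.0, min_gap_m=0.5, max_jerk=3.0),
    Candidate("smooth", travel_time_s=12.0, energy_kj=40.0, min_gap_m=5.0, max_jerk=1.0),
]
print(best_trajectory(candidates).name)
```

A real planner would search a continuous trajectory space rather than score a fixed list, which is where the computational cost noted above comes from.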

Large Models

Large models based on the Transformer architecture, such as LLMs, vision models, time series models, and multimodal models, have gained widespread attention due to their extensive knowledge and advanced data processing capabilities. However, deploying these large models for real-time applications remains challenging due to their computational demands.

Motion Planning with LLMs

Recent advancements have shown the potential of LLMs in enhancing decision-making processes in autonomous vehicles. Examples include PlanAgent, which uses multimodal LLMs for motion planning, and TrafficGPT, which applies LLMs in smart transportation for traffic management. However, these models are often better suited to offline scenarios than to latency-critical online ones.

Research Methodology

Problem Statement

In edge-cloud collaborative intelligent driving systems, small-scale LLMs are deployed on edge devices for real-time motion planning, while large-scale LLMs on the cloud provide support for complex scenarios. The system uses data drift detection algorithms to selectively upload challenging samples to the cloud for processing by larger models like GPT-4.
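The latency effect of selective upload can be illustrated with a rough back-of-the-envelope model. The sketch below assumes that every sample is first processed on the edge and that uploaded samples additionally pay the upload and cloud inference time. Both this cost model and the numbers in the usage lines are hypothetical, not measurements from the paper.

```python
def expected_latency_ms(upload_fraction, edge_ms, upload_ms, cloud_ms):
    """Average per-sample latency under the assumed cost model.

    upload_fraction: share of samples sent to the cloud, between 0 and 1.
    edge_ms, upload_ms, cloud_ms: assumed per-sample times in milliseconds.
    """
    if not 0.0 <= upload_fraction <= 1.0:
        raise ValueError("upload_fraction must be between 0 and 1")
    # Every sample pays the edge cost; only uploaded samples pay the rest.
    return edge_ms + upload_fraction * (upload_ms + cloud_ms)


# Hypothetical timings: 50 ms on the edge, 100 ms upload, 400 ms in the cloud.
for fraction in (0.0, 0.2, 1.0):
    print(fraction, expected_latency_ms(fraction, 50.0, 100.0, 400.0))
```

Under these assumed timings, the average latency grows linearly with the fraction of samples uploaded, which is why the upload decision matters.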

System Architecture

The proposed system architecture integrates edge and cloud components to enhance the overall performance of autonomous driving systems. The vehicle employs small-scale LLMs for routine driving tasks and real-time sensor data processing, while large-scale LLMs in the cloud handle more complex scenarios.

Edge Models

LLaMA-Adapter, a parameter-efficient tuning mechanism based on the LLaMA language model, is used for real-time motion planning on edge devices. It processes real-time sensor data, including text, vision, and LiDAR inputs, to make preliminary driving decisions.

Cloud Models

Large-scale LLMs like GPT-4 in the cloud offer advanced computational power for handling complex and dynamic driving scenarios. Real-time data from various onboard sensors is collected and preprocessed for model inference.

Edge-Cloud Collaboration Workflow

The system uses the Alibi Detect library to monitor edge model performance. If anomalies or low-confidence predictions are detected, the data is uploaded to the cloud for detailed inference, ensuring safe and efficient operation.
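The routing decision described above can be sketched as follows. This is not the Alibi Detect API: to stay self-contained, it substitutes a toy z-score detector (`MeanShiftDetector`, a hypothetical name) for the library's drift detectors. The feature vectors, the confidence threshold, and the `route` function are likewise assumptions for illustration.

```python
from statistics import mean, stdev


class MeanShiftDetector:
    """Toy stand-in for a drift detector: flags samples far from the reference data."""

    def __init__(self, reference, z_threshold=3.0):
        # Per-dimension mean and standard deviation of the reference features.
        dims = list(zip(*reference))
        self.mu = [mean(d) for d in dims]
        self.sigma = [stdev(d) or 1e-9 for d in dims]  # guard against zero spread
        self.z_threshold = z_threshold

    def is_drift(self, x):
        # A sample is flagged if any feature deviates by more than z_threshold sigmas.
        z_scores = [abs(v - m) / s for v, m, s in zip(x, self.mu, self.sigma)]
        return max(z_scores) > self.z_threshold


def route(features, edge_confidence, detector, min_confidence=0.7):
    """Return "cloud" for drifted or low-confidence samples, otherwise "edge"."""
    if detector.is_drift(features) or edge_confidence < min_confidence:
        return "cloud"
    return "edge"


# Hypothetical two-dimensional features extracted from past driving scenes.
reference = [[1.0, 10.0], [1.2, 11.0], [0.8, 9.0], [1.1, 10.5]]
detector = MeanShiftDetector(reference)

print(route([1.0, 10.0], edge_confidence=0.9, detector=detector))   # familiar, confident
print(route([1.0, 10.0], edge_confidence=0.5, detector=detector))   # familiar, unsure
print(route([5.0, 10.0], edge_confidence=0.95, detector=detector))  # far from reference
```

The sketch shows only the control flow: the edge result is kept unless one of the two checks fires, so cloud calls are limited to flagged samples.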

Experimental Design

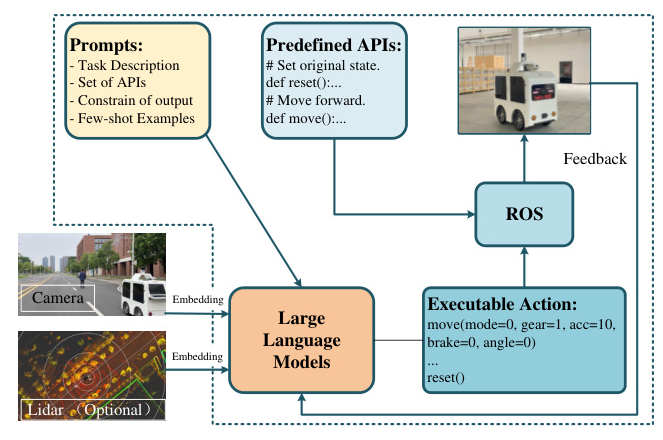

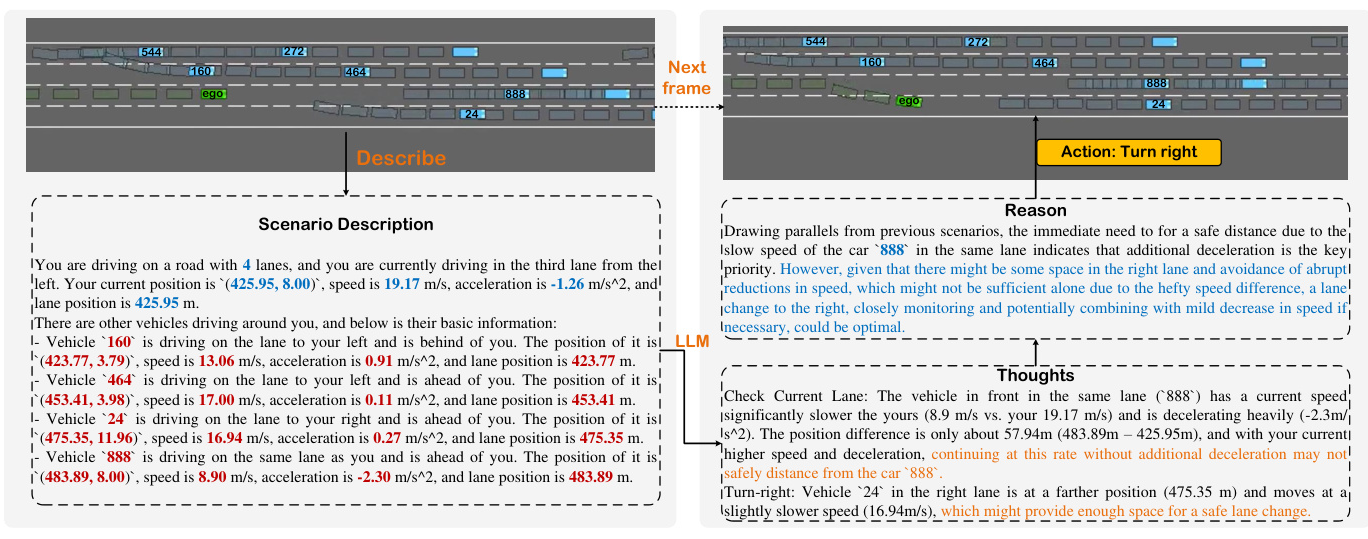

Driving on Edge

The current driving scene is transcribed into descriptive text that includes the speed, acceleration, and position of the ego vehicle and surrounding vehicles. This description is embedded into vectors and fed to the LLaMA-Adapter, which reasons step by step to reach a driving decision.
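As an illustration of the transcription step, the sketch below turns vehicle states into a plain-text scene description. The `VehicleState` fields, the units, and the sentence template are assumptions. The paper's actual prompt format is not reproduced here, and the embedding step is left out.

```python
from dataclasses import dataclass


@dataclass
class VehicleState:
    """Hypothetical per-vehicle state used to build the description."""
    name: str
    speed_mps: float   # speed in m/s
    accel_mps2: float  # acceleration in m/s^2
    x_m: float         # position in meters, in an assumed ego-centered frame
    y_m: float


def describe(v):
    """Render one vehicle as a single sentence."""
    return (f"{v.name} is at ({v.x_m:.1f}, {v.y_m:.1f}) m, "
            f"moving at {v.speed_mps:.1f} m/s "
            f"with acceleration {v.accel_mps2:.1f} m/s^2.")


def describe_scene(ego, others):
    """Describe the ego vehicle first, then each surrounding vehicle."""
    return " ".join([describe(ego)] + [describe(o) for o in others])


ego = VehicleState("Ego vehicle", speed_mps=8.0, accel_mps2=0.5, x_m=0.0, y_m=0.0)
lead = VehicleState("Vehicle 1", speed_mps=6.0, accel_mps2=-1.0, x_m=15.0, y_m=3.5)
print(describe_scene(ego, [lead]))
```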

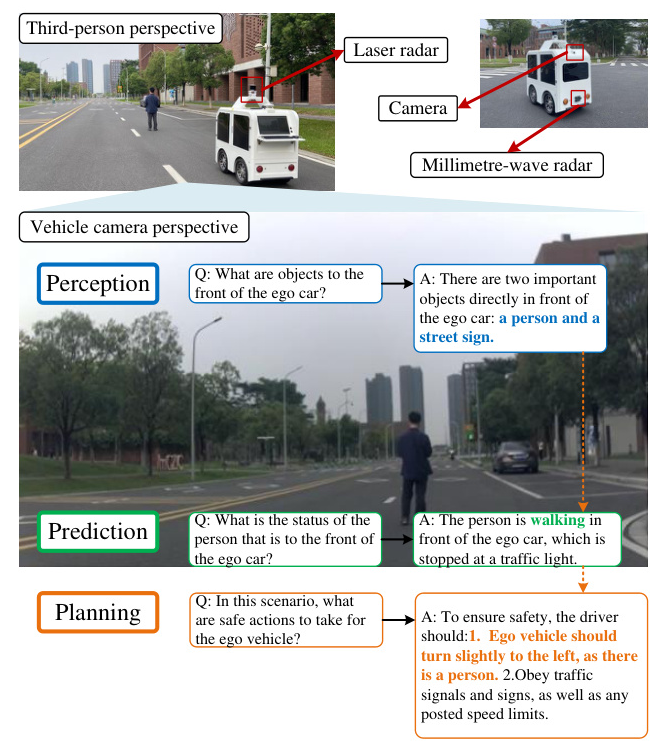

Driving on Cloud

Edge models face significant challenges in real-world incremental scenarios. To improve motion planning performance, the system selectively uploads data to the cloud-based foundation model, powered by GPT-4, for queries. The cloud model’s inference process covers the perception, prediction, and planning stages.

Edge-Cloud Collaborative Motion Planning

Data collected by autonomous vehicles at the Guangzhou International Campus of South China University of Technology is used as the testing benchmark. The dataset comprises images captured from the perspective of autonomous vehicles. The inference outcomes of different models in the same scenario are compared to evaluate performance.

Results and Analysis

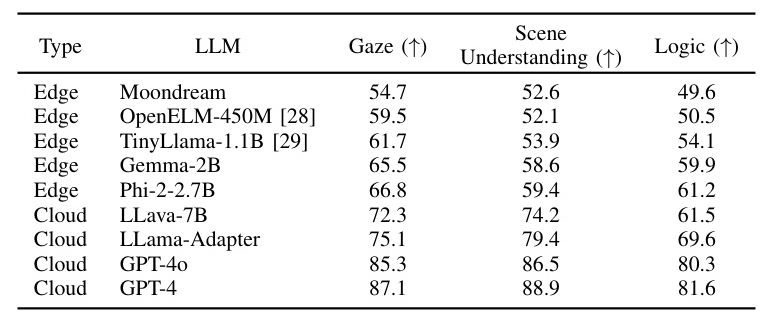

Performance Metrics

The performance of LLMs is evaluated on three metrics: Gaze, Scene Understanding, and Logic. Cloud-based LLMs significantly outperform edge-based small-scale models on all three. However, edge-based models remain advantageous where computational resources are limited or low-latency responses are required.

Comparative Analysis

The experimental results validate the effectiveness and efficiency of the edge-cloud collaborative framework. The edge model is capable of making quick inferences in most cases, while the cloud model demonstrates high accuracy in complex scenarios, enhancing overall system safety and reliability.

Overall Conclusion

This study investigates the performance of LLMs in autonomous driving systems under edge computing, cloud computing, and edge-cloud collaborative processing. The edge-cloud collaboration framework improves inference speed, conserves communication resources, and significantly reduces system latency. The experimental results offer theoretical and practical guidance for the future development of autonomous driving technologies.