Authors:

Paper:

https://arxiv.org/abs/2408.08821

Introduction

In the realm of online recommender systems, deep learning has emerged as a powerful tool for capturing user preferences by analyzing complex user-item interactions. However, many existing methods rely heavily on unique user and item IDs, which limits their performance in zero-shot learning scenarios where training data is scarce. Inspired by the success of language models (LMs) and their strong generalization capabilities, this study introduces EasyRec, a novel approach that integrates text-based semantic understanding with collaborative signals. EasyRec employs a text-behavior alignment framework, combining contrastive learning with collaborative language model tuning, to ensure a strong alignment between the text-enhanced semantic space and collaborative behavior information.

Preliminaries

In recommender systems, we have a set of users ( U ) and a set of items ( I ), along with their interactions (e.g., clicks, purchases). The primary goal is to estimate the probability ( p_{u,i} ) of a future interaction between a user ( u ) and an item ( i ). Text-based zero-shot recommendation is crucial for addressing the cold-start problem, where new users and items lack sufficient interaction data. By leveraging textual descriptions, language models can construct semantic representations to enable recommendations in these scenarios.
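
As a rough illustration of how text embeddings can stand in for ID embeddings, the sketch below scores user-item pairs by cosine similarity and ranks the candidates. Cosine similarity is one common choice for this kind of scoring and is used here as an assumption; the vectors and the names `cosine` and `rank_items` are hypothetical stand-ins for encoded text profiles, not part of EasyRec's code.

```python
import math

def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

def rank_items(user_vec, item_vecs):
    """Return item ids sorted by descending similarity to the user."""
    scores = {item_id: cosine(user_vec, vec) for item_id, vec in item_vecs.items()}
    return sorted(scores, key=scores.get, reverse=True)

# Toy, hand-written embeddings standing in for encoded text profiles.
user_vec = [1.0, 0.0]
item_vecs = {"hiking_boots": [0.9, 0.1], "cookbook": [0.0, 1.0]}
ranking = rank_items(user_vec, item_vecs)
```

Because the score depends only on text-derived vectors, a brand-new item can be ranked as soon as it has a description, which is the point of the zero-shot setting.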

Methodology

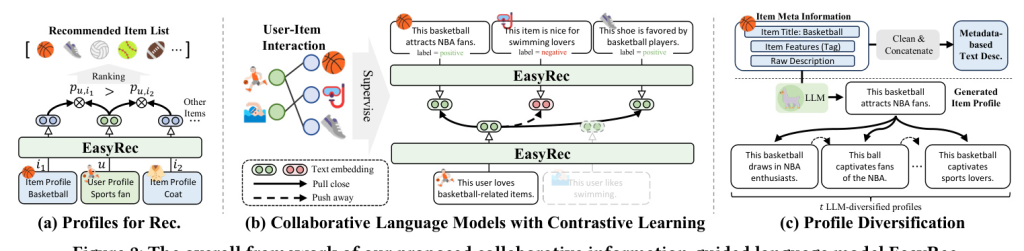

Collaborative User and Item Profiling

To generate textual profiles for users and items, EasyRec leverages large language models (LLMs) to inject collaborative information into the profiles. This approach captures both semantic and collaborative aspects, enhancing the profiles’ richness.

Item Profiling

Given raw item information (e.g., title, categories, description), EasyRec generates comprehensive item profiles by incorporating user-provided textual information (e.g., reviews). This process ensures that the profiles reflect both semantic and collaborative aspects.

User Profiling

User profiles are generated by considering their collaborative relationships, using the profiles of their interacted items. This approach captures the collaborative signals that reflect user preferences.
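
The idea of building a user profile from the profiles of interacted items can be sketched as a prompt-assembly step. This is a minimal sketch under stated assumptions: the prompt wording, the `max_items` cap, and the function name `build_user_profile_prompt` are hypothetical, and the call to an actual LLM is left out.

```python
def build_user_profile_prompt(item_profiles, max_items=5):
    """Assemble an LLM prompt from the profiles of items a user interacted with."""
    # Hypothetical instruction text; the prompt used in the paper may differ.
    lines = ["Summarize the preferences of a user who interacted with these items:"]
    for index, profile in enumerate(item_profiles[:max_items], start=1):
        lines.append(f"{index}. {profile}")
    return "\n".join(lines)

prompt = build_user_profile_prompt([
    "Trail running shoes praised by reviewers for grip on wet rock.",
    "Ultralight two-person tent popular with long-distance hikers.",
])
```

The returned string would then be sent to whichever LLM generates the final user profile text.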

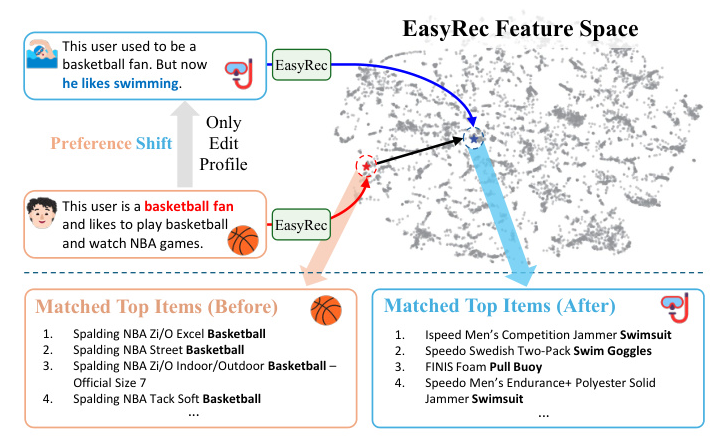

Advantages of Collaborative Profiling

- Collaborative Information Preserved: The approach captures both the semantics of user/item characteristics and their interaction patterns, enabling better recommendations even for zero-shot users and items.

- Fast Adaptation to Dynamic Scenarios: The profiling approach allows the system to effectively handle time-evolving user preferences and interaction patterns.

Profile Embedder with Collaborative LM

To address the limitations of directly encoding textual profiles, EasyRec employs a collaborative language modeling paradigm that integrates semantic richness with high-order collaborative signals.

Bidirectional Transformer Encoder as Embedder

A multi-layer bidirectional Transformer encoder is used as the embedder backbone, providing efficient encoding and flexible adaptation. The encoder generates effective text representations, enabling faster inference in recommendation systems.

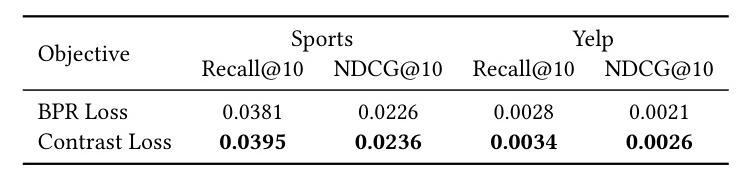

Collaborative LM with Contrastive Learning

Contrastive learning is used to fine-tune the collaborative language model so that it captures high-order collaborative signals. The objective pulls the embeddings of interacting users and items closer together in the feature space while pushing unrelated pairs apart, enhancing the model’s ability to capture complex global user-item relationships.
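
To make the contrastive objective concrete, here is a minimal sketch of an InfoNCE-style loss with in-batch negatives. It assumes that each observed user-item interaction forms a positive pair and that the other items in the batch serve as negatives; the temperature value, the one-directional (user-to-item) form, and the function name `info_nce` are illustrative assumptions, and the exact loss used in the paper may differ.

```python
import math

def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))

def info_nce(user_vecs, item_vecs, temperature=0.1):
    """Average user-to-item InfoNCE loss with in-batch negatives.

    user_vecs[k] and item_vecs[k] are assumed to be an observed interaction;
    every other item in the batch is treated as a negative for user k.
    """
    total = 0.0
    for k, user in enumerate(user_vecs):
        logits = [cosine(user, item) / temperature for item in item_vecs]
        # Log-sum-exp with max subtraction for numerical stability.
        peak = max(logits)
        log_denominator = peak + math.log(sum(math.exp(l - peak) for l in logits))
        total += log_denominator - logits[k]
    return total / len(user_vecs)

aligned = info_nce([[1.0, 0.0], [0.0, 1.0]], [[1.0, 0.0], [0.0, 1.0]])
misaligned = info_nce([[1.0, 0.0], [0.0, 1.0]], [[0.0, 1.0], [1.0, 0.0]])
```

The loss is small when each user embedding is closest to its own item and large when the pairs are swapped, which is the gradient signal that moves interacting pairs together during fine-tuning.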

Augmentation with Profile Diversification

To enhance the model’s generalization ability, EasyRec employs profile diversification, generating multiple profiles per entity. This approach introduces controlled variations in the profiles while preserving their core semantic meaning, improving model performance and robustness.
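
One way to use several profiles per entity during training is to draw a different variant each time the entity appears in a batch. The sketch below assumes exactly that sampling scheme, which is an illustration and not a confirmed detail of EasyRec; the profile texts and the name `sample_profiles` are hypothetical.

```python
import random

def sample_profiles(profile_pool, rng):
    """Pick one profile variant per entity for the current training step."""
    return {entity: rng.choice(variants) for entity, variants in profile_pool.items()}

# Hypothetical pool: each entity has an original profile plus rephrased variants.
profile_pool = {
    "user_1": ["Enjoys outdoor gear.", "Often buys hiking and camping equipment."],
    "item_9": ["A waterproof hiking boot.", "Boot built for wet trails."],
}
rng = random.Random(0)  # Seeded only to make the sketch reproducible.
batch_profiles = sample_profiles(profile_pool, rng)
```

Over many steps the encoder sees every phrasing of the same entity, which discourages it from latching onto surface wording.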

Evaluation

Experimental Settings

Datasets

Diverse datasets across various domains and platforms were curated to assess EasyRec’s capability in encoding user/item textual profiles into embeddings for recommendation. The dataset statistics are shown in Table 1.

Evaluation Protocols

Two commonly used ranking-based evaluation metrics, Recall@N and NDCG@N, were employed to assess performance in both text-based recommendation and collaborative filtering scenarios.
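
For reference, the two metrics can be computed as in the sketch below, using the standard binary-relevance definitions. The function names and the toy ranking are illustrative, and the evaluation code used in the paper may differ in details such as tie handling.

```python
import math

def recall_at_n(ranked, relevant, n):
    """Fraction of the relevant items that appear in the top-n of the ranking."""
    if not relevant:
        return 0.0
    hits = sum(1 for item in ranked[:n] if item in relevant)
    return hits / len(relevant)

def ndcg_at_n(ranked, relevant, n):
    """Binary-relevance NDCG: discounted gain of hits divided by the ideal gain."""
    dcg = sum(
        1.0 / math.log2(position + 2)
        for position, item in enumerate(ranked[:n])
        if item in relevant
    )
    ideal_hits = min(len(relevant), n)
    idcg = sum(1.0 / math.log2(position + 2) for position in range(ideal_hits))
    return dcg / idcg if idcg > 0 else 0.0

ranked = ["a", "b", "c", "d"]   # Model's ranking, best first.
relevant = {"a", "d"}           # Held-out items the user actually interacted with.
recall = recall_at_n(ranked, relevant, 2)
ndcg = ndcg_at_n(ranked, relevant, 2)
```

Recall@N only counts whether relevant items make the cut, while NDCG@N also rewards placing them nearer the top of the list.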

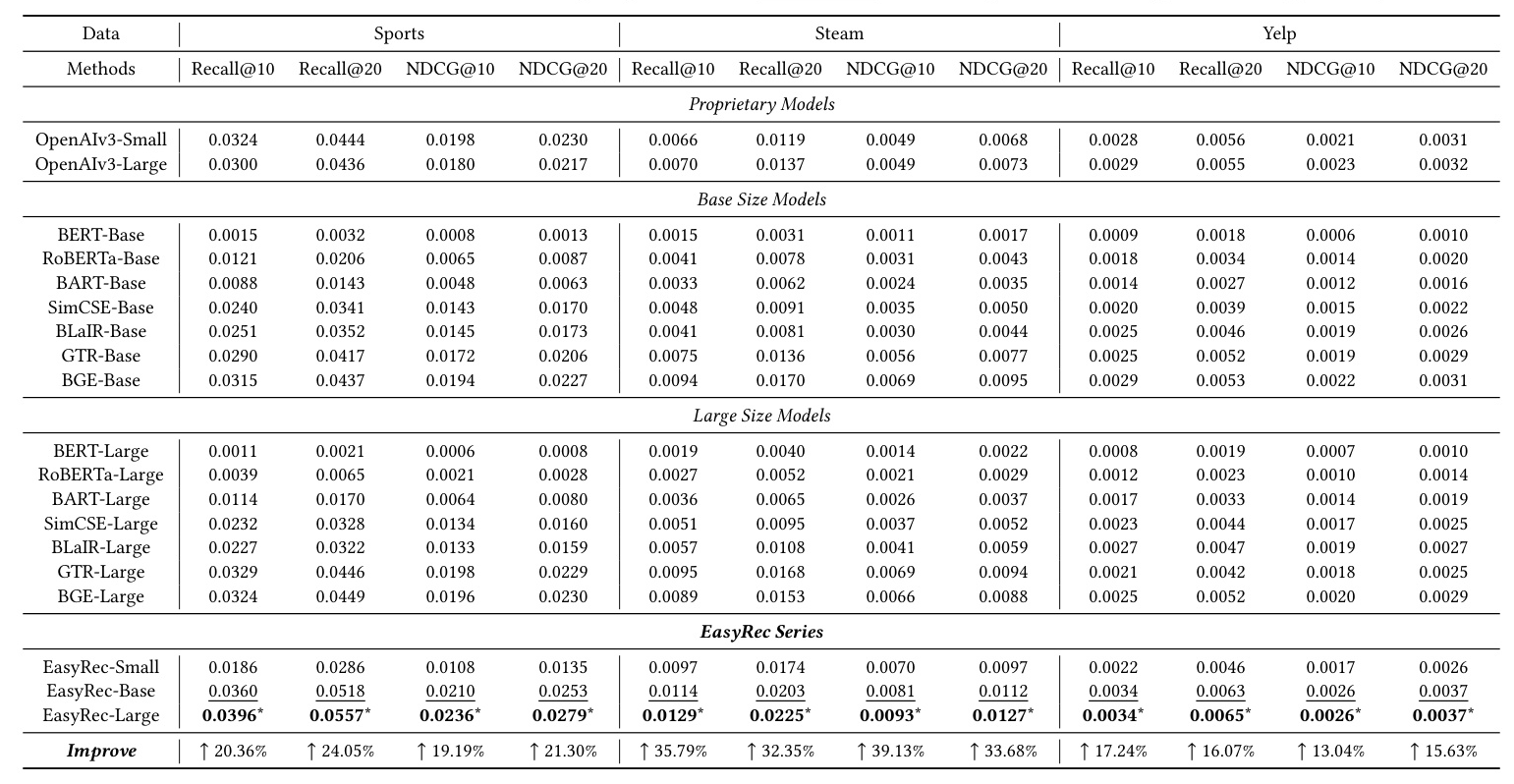

Performance Comparison for Text-based Recommendation (RQ1)

Baseline Methods and Settings

A diverse set of language models was included for comparative evaluation, covering general-purpose contextual encoders, models tailored for dense retrieval, and pre-trained models for recommendation.

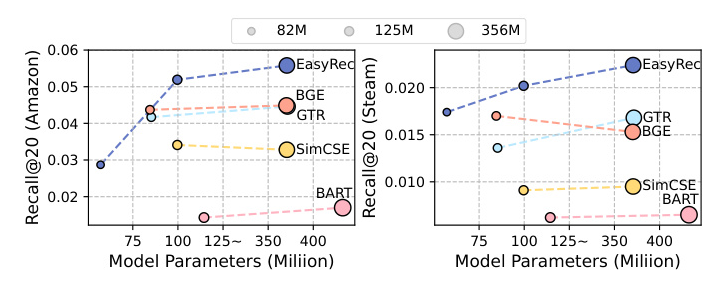

Result Analysis

EasyRec consistently outperformed other models across diverse datasets, demonstrating its effectiveness in text-based recommendation scenarios. The model’s performance improved with increased size, reflecting a scaling law.

Performance of Text-enhanced CF (RQ2)

EasyRec was integrated with two widely used ID-based methods, GCCF and LightGCN, to assess its effectiveness in enhancing collaborative filtering models. The results showed that EasyRec consistently achieved the highest performance, highlighting the advantages of incorporating collaborative information into language models.

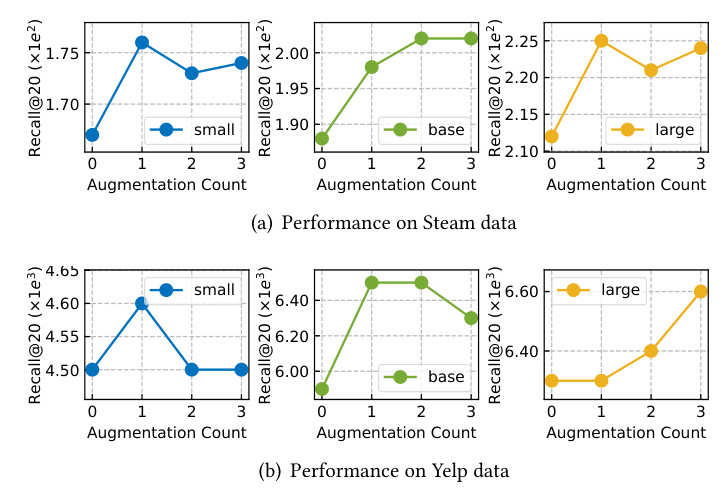

Effectiveness of Profile Diversification (RQ3)

Profile diversification using LLMs improved model performance, particularly for larger models. This finding underscores the effectiveness of data augmentation in enhancing model generalization and robustness.

Model Fast Adaptation Case Study (RQ4)

EasyRec demonstrated the ability to efficiently adapt to shifts in user preferences and behavior dynamics over time. By modifying user profiles, the system adjusted recommendations without further training, highlighting its efficiency and flexibility.

Conclusion

EasyRec effectively integrates language models to enhance recommendation tasks, demonstrating superior performance across various scenarios. The innovative methodology combines collaborative language model tuning with contrastive learning, capturing nuanced semantics and high-order collaborative signals. Extensive experiments validated EasyRec’s superiority over existing models, showcasing its robust and generalized performance. Future investigations will explore the integration of multi-modal information to further enhance EasyRec’s capabilities.