Authors:

Shyam K Sateesh, Sparsh BK, Uma D

Paper:

https://arxiv.org/abs/2408.10328

Introduction

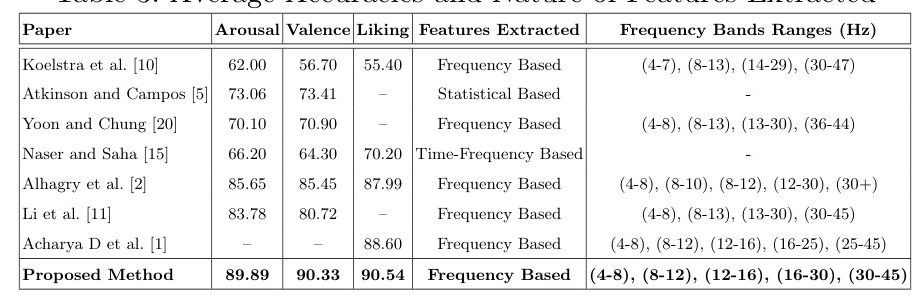

Emotion recognition from electroencephalogram (EEG) signals is an active research area in neuroscience and Human-Computer Interaction (HCI). EEG signals capture brain activity at fine temporal resolution, which makes them well suited to studying complex human emotional states. This study aims to improve the accuracy of emotional state classification by applying Long Short-Term Memory (LSTM) networks to EEG signals. Using the DEAP dataset, which contains multi-channel EEG recordings, the study relies on the ability of LSTM networks to model temporal dependencies in EEG data. The reported accuracies are 89.89%, 90.33%, 90.70%, and 90.54% for arousal, valence, dominance, and liking, respectively.

Related Work

Foundational Datasets and Initial Studies

The DEAP dataset, introduced by Koelstra et al., has been foundational in the field and provides a rich data source for subsequent research. The authors found significant correlations between participant ratings and EEG frequencies, and performed single-trial classification of the arousal, valence, and liking scales using features extracted from the EEG, peripheral physiological, and multimedia content analysis (MCA) modalities.

LSTM Networks in Emotion Recognition

Alhagry et al. proposed LSTM networks for emotion recognition from raw EEG signals, reporting high average accuracies across three emotional dimensions and outperforming traditional techniques. Nie et al. explored the relationship between EEG signals and the emotional responses of participants who were watching movies, achieving an average testing accuracy of 87.53% with a Support Vector Machine (SVM).

Advanced Machine Learning Techniques

Li et al. provided a comprehensive overview of EEG-based emotion recognition, integrating psychological theories with physiological measurements and reviewing various machine learning techniques. Zheng et al. developed an innovative approach by integrating deep belief networks with hidden Markov models for EEG-based emotion classification, achieving higher accuracy than traditional classifiers.

Feature Extraction and Classification Approaches

Bhagwat et al. used Wavelet Transforms (WT) and Hidden Markov Models (HMM) to classify four primary emotions. Lin et al. utilized EEG data and machine learning to enhance emotional state predictions during music listening, achieving an average classification accuracy of 82.29% using an SVM. Naser and Saha applied advanced signal processing techniques to improve feature extraction for emotion classification from EEG signals.

Research Methodology

Dataset Description

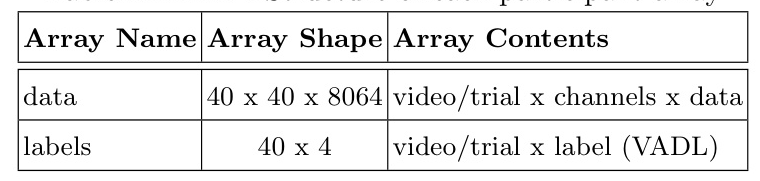

The DEAP dataset consists of EEG recordings from 32 participants aged 19 to 37. Each participant watched 40 one-minute music video clips chosen to elicit emotional responses and rated each clip on a 1 to 9 scale for arousal, valence, dominance, and liking. The dataset includes EEG signals and labels for each trial. Each participant's data array has dimensions 40 × 40 × 8064: 40 trials, 40 channels (32 EEG and 8 peripheral), and 8064 data points per channel per trial.
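The array layout described above can be made concrete with a short sketch. It uses a zero-filled stand-in rather than real DEAP data, and it assumes that each participant record holds a `data` array and a `labels` array, that the first 32 channels are the EEG channels, and that the four label columns are ordered valence, arousal, dominance, liking. The 128 Hz rate is the analysis rate mentioned in this article.

```python
import numpy as np

# Shape constants taken from the dataset description.
N_TRIALS, N_CHANNELS, N_SAMPLES = 40, 40, 8064
N_EEG_CHANNELS = 32        # assumption: EEG channels come first, peripheral channels last
SAMPLING_RATE_HZ = 128     # rate used during analysis

# Zero-filled stand-in for one participant's record (not real DEAP data).
record = {
    "data": np.zeros((N_TRIALS, N_CHANNELS, N_SAMPLES), dtype=np.float32),
    # Assumed column order: valence, arousal, dominance, liking.
    "labels": np.full((N_TRIALS, 4), 5.0),
}

eeg = record["data"][:, :N_EEG_CHANNELS, :]    # keep only the EEG channels
trial_seconds = N_SAMPLES / SAMPLING_RATE_HZ   # duration covered by one trial

print(eeg.shape, record["labels"].shape, trial_seconds)
```

At 128 Hz, 8064 samples cover 63 seconds, slightly more than the one-minute clip itself.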

Data Acquisition and Structure

EEG and peripheral physiological signals were acquired simultaneously while participants viewed each music video clip. The EEG was recorded at 512 Hz with a 32-channel system and downsampled to 128 Hz for analysis. The dataset provides separate arrays for the signals and the labels, a structure that simplifies the training of emotion recognition models.
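Going from 512 Hz to 128 Hz is a rate reduction by a factor of 4. The sketch below illustrates the idea by averaging non-overlapping blocks of four samples. This block-mean scheme is a deliberately crude stand-in chosen for brevity, not the procedure used to prepare DEAP, and the function name `downsample_by_block_mean` is hypothetical.

```python
import numpy as np

def downsample_by_block_mean(signal: np.ndarray, factor: int) -> np.ndarray:
    """Reduce the sampling rate by averaging non-overlapping blocks of `factor` samples.

    The block mean acts as a crude low-pass filter before the rate reduction.
    """
    usable = (signal.shape[-1] // factor) * factor   # drop a ragged tail, if any
    blocks = signal[..., :usable].reshape(*signal.shape[:-1], -1, factor)
    return blocks.mean(axis=-1)

ORIGINAL_RATE_HZ = 512
TARGET_RATE_HZ = 128
factor = ORIGINAL_RATE_HZ // TARGET_RATE_HZ          # 512 / 128 = 4

# One second of a synthetic 32-channel recording at 512 Hz (not real data).
t = np.arange(ORIGINAL_RATE_HZ) / ORIGINAL_RATE_HZ
raw = np.tile(np.sin(2 * np.pi * 10 * t), (32, 1))   # 10 Hz tone on every channel
reduced = downsample_by_block_mean(raw, factor)

print(raw.shape, "->", reduced.shape)
```

In practice a proper anti-aliasing filter would normally precede the rate reduction.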

Experimental Design

Valence, Arousal, and Dominance Model

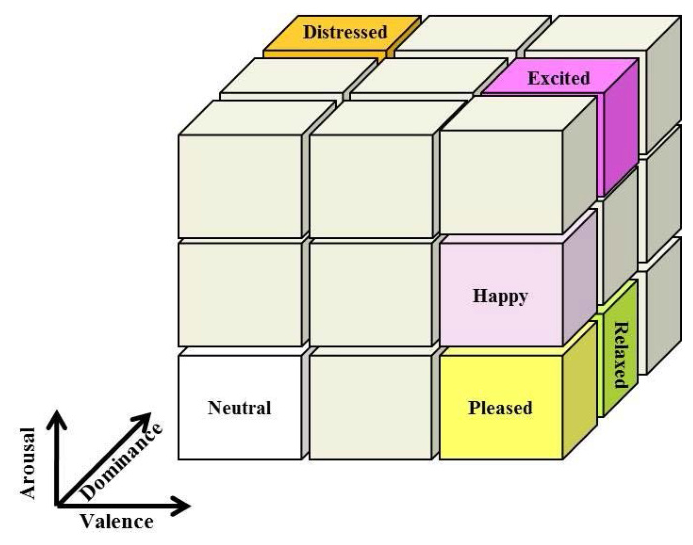

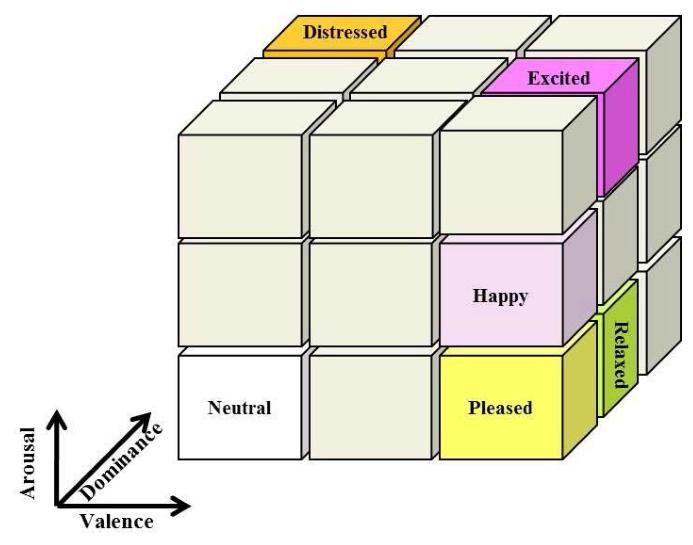

The Valence-Arousal-Dominance (VAD) model describes emotions along three dimensions: valence, arousal, and dominance. Valence measures how positive or negative the mood is, arousal measures the level of activation, and dominance measures the degree of control over the emotional state. This model is effective in characterizing human emotions through EEG features.

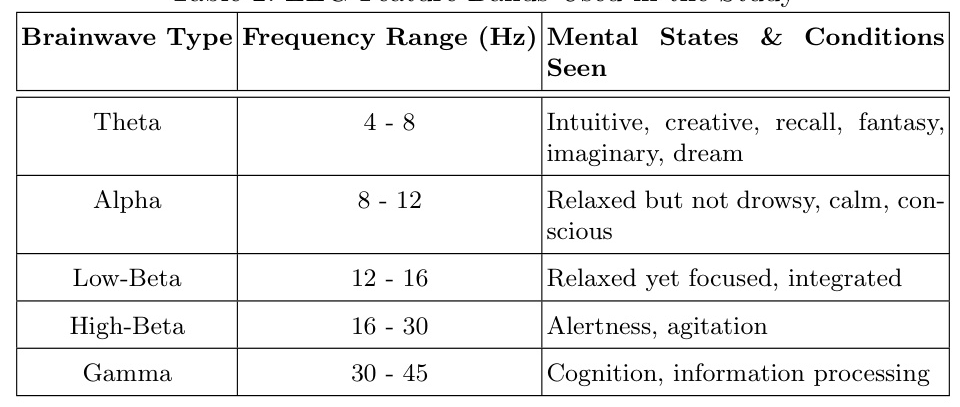

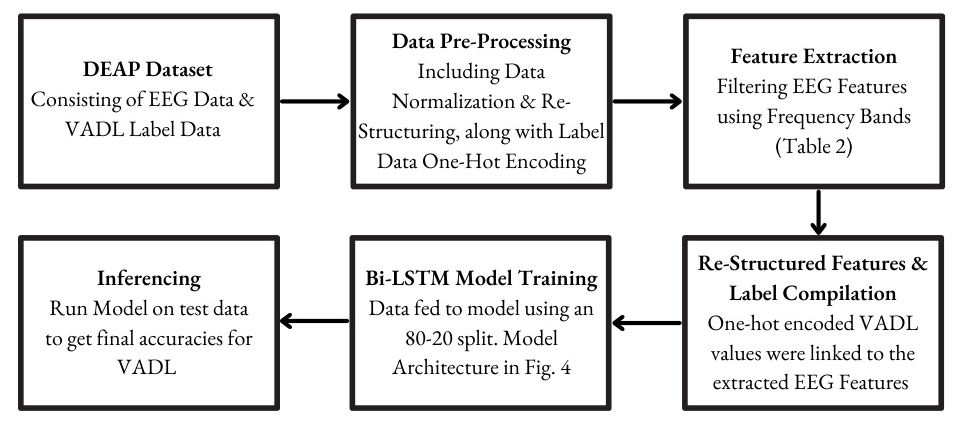
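As a small illustration of the three VAD axes, the sketch below stores one rating and maps each axis to a high or low class. The threshold of 5, the midpoint of the 1 to 9 scale, is an assumption made for this example, as are the `VADRating` class and its `binarize` method. The article does not state how ratings were turned into classes.

```python
from dataclasses import asdict, dataclass

MIDPOINT = 5.0  # assumed threshold: the centre of the 1-9 rating scale

@dataclass(frozen=True)
class VADRating:
    valence: float    # negative (1) to positive (9)
    arousal: float    # calm (1) to activated (9)
    dominance: float  # little control (1) to full control (9)

    def binarize(self, threshold: float = MIDPOINT) -> dict:
        """Map each axis to 'high' or 'low' relative to the threshold."""
        return {
            axis: "high" if value > threshold else "low"
            for axis, value in asdict(self).items()
        }

rating = VADRating(valence=7.2, arousal=3.1, dominance=5.0)
classes = rating.binarize()
print(classes)
```

A rating exactly at the threshold falls into the low class here. That is a design choice of this sketch, not something specified by the source.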

Pre-Processing Methods

Feature extraction focused on the channels and frequency bands most relevant to emotional processing. The selected channels covered frontal and temporal regions, and the spectrum was divided into theta, alpha, low beta, high beta, and gamma bands. The Fast Fourier Transform (FFT) was applied to the selected channels, and the data were normalized to zero mean and unit variance.
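A minimal sketch of FFT-based band power followed by standardization is shown below. The band edges in `BANDS` are assumed values, since the text names the bands but not their limits. The window length, the synthetic signals, and the helper names `band_powers` and `standardize` are likewise illustrative and may differ from the authors' pipeline.

```python
import numpy as np

# Assumed band edges in Hz; the text names the bands but not their limits.
BANDS = {
    "theta": (4, 8),
    "alpha": (8, 12),
    "low_beta": (12, 16),
    "high_beta": (16, 25),
    "gamma": (25, 45),
}

def band_powers(window: np.ndarray, sampling_rate_hz: float) -> np.ndarray:
    """Return the mean spectral power in each band for a 1-D signal window."""
    spectrum = np.abs(np.fft.rfft(window)) ** 2
    freqs = np.fft.rfftfreq(window.size, d=1.0 / sampling_rate_hz)
    return np.array([
        spectrum[(freqs >= low) & (freqs < high)].mean()
        for low, high in BANDS.values()
    ])

def standardize(features: np.ndarray) -> np.ndarray:
    """Scale each feature column to zero mean and unit variance."""
    return (features - features.mean(axis=0)) / features.std(axis=0)

fs = 128                                  # sampling rate in Hz
t = np.arange(2 * fs) / fs                # a 2-second window

# A synthetic 10 Hz tone should put most of its power in the alpha band.
powers = band_powers(np.sin(2 * np.pi * 10 * t), fs)
dominant_band = list(BANDS)[int(np.argmax(powers))]

# Standardize band powers computed over 20 synthetic noise windows.
rng = np.random.default_rng(0)
features = np.stack([band_powers(w, fs) for w in rng.standard_normal((20, t.size))])
normalized = standardize(features)

print(dominant_band, normalized.shape)
```

Each row of `normalized` holds five band-power features for one window. With several channels, the per-channel feature vectors could be concatenated before being passed to a sequence model.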

LSTM Architecture

The LSTM network architecture was designed to process EEG data as a time-ordered sequence. The model includes a Bidirectional LSTM layer with 128 units, followed by multiple LSTM layers with varying numbers of neurons, interleaved with dropout layers to prevent overfitting. The final output layer uses a softmax activation function to produce a probability distribution over the classes.
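To show what a bidirectional LSTM layer followed by a softmax output computes, here is a NumPy forward pass with random, untrained weights. It is a simplified sketch, not the authors' model: it contains a single bidirectional layer of 128 units and omits the stacked LSTM layers, dropout, and training. The input feature count, class count, and all function names are assumptions for illustration.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def softmax(logits):
    shifted = logits - logits.max(axis=-1, keepdims=True)   # numerical stability
    exp = np.exp(shifted)
    return exp / exp.sum(axis=-1, keepdims=True)

def init_lstm(rng, n_in, n_units):
    """Random weights for one LSTM direction; gate order is input, forget, cell, output."""
    scale = 1.0 / np.sqrt(n_units)
    return {
        "W": rng.uniform(-scale, scale, (n_in, 4 * n_units)),
        "U": rng.uniform(-scale, scale, (n_units, 4 * n_units)),
        "b": np.zeros(4 * n_units),
    }

def lstm_last_state(x, params, reverse=False):
    """Run one LSTM direction over x of shape (time, features); return the final hidden state."""
    n_units = params["U"].shape[0]
    h = np.zeros(n_units)
    c = np.zeros(n_units)
    steps = x[::-1] if reverse else x
    for x_t in steps:
        z = x_t @ params["W"] + h @ params["U"] + params["b"]
        i, f, g, o = np.split(z, 4)
        c = sigmoid(f) * c + sigmoid(i) * np.tanh(g)   # update the cell state
        h = sigmoid(o) * np.tanh(c)                    # expose the gated hidden state
    return h

def bilstm_classify(x, fwd, bwd, W_out, b_out):
    """Concatenate forward and backward final states, then apply a softmax layer."""
    h = np.concatenate([
        lstm_last_state(x, fwd),
        lstm_last_state(x, bwd, reverse=True),
    ])
    return softmax(h @ W_out + b_out)

rng = np.random.default_rng(0)
n_features, n_units, n_classes = 5, 128, 2   # n_features and n_classes are illustrative
fwd = init_lstm(rng, n_features, n_units)
bwd = init_lstm(rng, n_features, n_units)
W_out = rng.normal(0.0, 0.1, (2 * n_units, n_classes))
b_out = np.zeros(n_classes)

x = rng.standard_normal((10, n_features))    # 10 time steps of synthetic features
probs = bilstm_classify(x, fwd, bwd, W_out, b_out)
print(probs, probs.sum())
```

The backward direction reads the same sequence in reverse, so the concatenated state summarizes both past and future context at the sequence boundaries. A deep learning framework would normally supply these layers along with training and dropout.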

Results and Analysis

Model Performance

The LSTM-based model achieved accuracies of 90.33% for valence, 89.89% for arousal, 90.70% for dominance, and 90.54% for liking. These results indicate that the combination of FFT-based feature extraction and the LSTM architecture captures complex emotional states from EEG data effectively.

Overall Conclusion

This study shows that LSTM networks can classify emotional states from EEG data with high accuracy. The customized LSTM architecture, which combines bidirectional layers with dropout stages, handles the complexity of EEG signals well. The results support further work in cognitive neuroscience and human-computer interaction. Future work can build on this model with more robust neural networks and additional physiological signals, improving the precision of EEG-based emotion recognition and its application to empathetic user interfaces.