Authors:

Jayanta Mandi、Marco Foschini、Daniel Holler、Sylvie Thiebaux、Jorg Hoffmann、Tias Guns

Paper:

https://arxiv.org/abs/2408.06876

Introduction

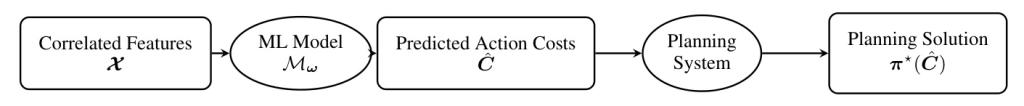

Automated planning is a critical component in many applications, such as transportation logistics, where determining the optimal route is essential. However, specifying action costs, like travel time, can be challenging due to varying factors like weather conditions. This paper explores the use of Decision-Focused Learning (DFL) to predict these action costs based on input features, aiming to optimize the overall planning solution rather than just the prediction accuracy.

Predict-then-Optimize Problem Formulation

In traditional approaches, prediction and planning are treated as separate tasks. However, DFL integrates these tasks, training the machine learning (ML) model to optimize the downstream planning solution directly. This approach has shown promise in various combinatorial optimization problems, and this paper investigates its application in automated planning.

Background

Planning Problem Definition

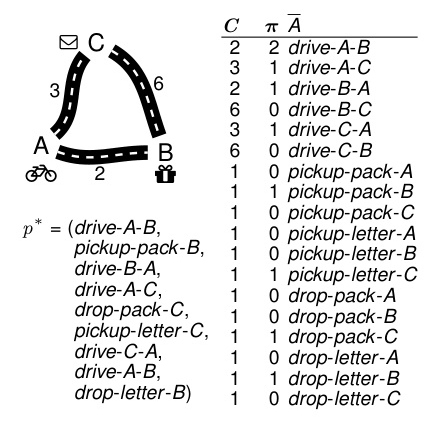

The planning problem is defined using the STRIPS formalism, where a planning problem is a tuple (P, A, s0, g, c). Here, P is a set of propositions, A is a set of actions, s0 is the initial state, g is the goal state, and c is the cost function mapping actions to their costs. The objective is to find a sequence of actions that transitions from the initial state to the goal state with minimal cost.

Predict-then-Optimize Problem

In domains like transportation, action costs depend on contextual features such as weather or traffic. These costs can be predicted using ML models, forming the basis of the Predict-then-Optimize problem. The ML model predicts action costs, which are then used to generate an optimal plan.

Vector Representation

To facilitate the use of neural networks, the planning problem is represented in vector form. The action count vector π represents the number of times each action occurs in a plan, and the cost vector C represents the costs of these actions.

Regret

Regret measures the difference between the realized cost of the solution made using predicted costs and the true optimal cost. It is a key metric in evaluating the quality of the predicted costs in the Predict-then-Optimize problem.

Decision-Focused Learning

Gradient Descent Training

In DFL, the ML model is trained to minimize regret using gradient descent. However, planning is not a differentiable operation, making it challenging to compute gradients. The Smart Predict-then-Optimize (SPO) technique addresses this by providing a convex upper bound of the regret, which can be used to compute subgradients for training.

SPO Technique

The SPO technique proposes a subgradient for the regret, allowing gradient-based training. This subgradient is used to update the ML model parameters to minimize regret.

DFL in the Context of Planning

Regret Evaluation with Negative Action Costs

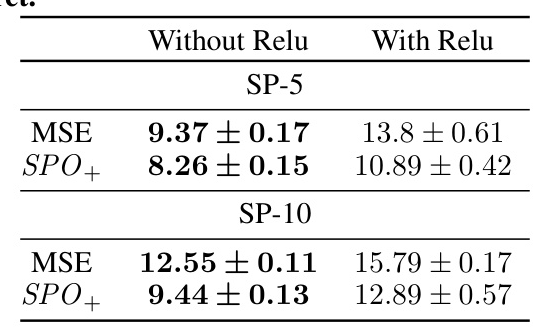

Predicted action costs can turn negative, which is not supported by planning systems. Two methods are proposed to handle this: thresholding (setting negative costs to zero) and add-min (adding a scalar to make all costs positive).

Training with Negative Action Costs

During training, the focus is on producing useful gradients. The SPO subgradient is adapted to handle negative action costs, ensuring the ML model receives feedback to correct these predictions.

Explicit Training Penalty

An explicit penalty is added to the loss function if any predicted action costs are negative. This penalty guides the ML model to avoid negative predictions, improving the overall training process.

Scaling up DFL for Planning Problems

Use of Planning Techniques

To expedite training, planning techniques without optimality guarantees or relaxed planning problems are used. These provide useful gradients while reducing computational costs.

Use of Solution Caching

Solution caching replaces solving the optimization problem with a cache lookup strategy, significantly reducing computational costs. The cache is formed using solutions from the training data and a percentage of predicted action costs.

Experimental Evaluation

Benchmark Set

Experiments are conducted on domains like Shortest Path, Transport, and Rovers. These domains have meaningful action costs impacting solution quality, making them suitable for evaluating the proposed methods.

Generation of Training Data

Synthetic data is generated for training and evaluation, following a process that ensures the action costs are positive and meaningful.

Planning and Learning Setup

A linear model is used to predict action costs from features, and the Fast Downward planning system is used for DFL training and evaluation. Experiments are conducted on small and large problem instances to evaluate the effectiveness of the proposed methods.

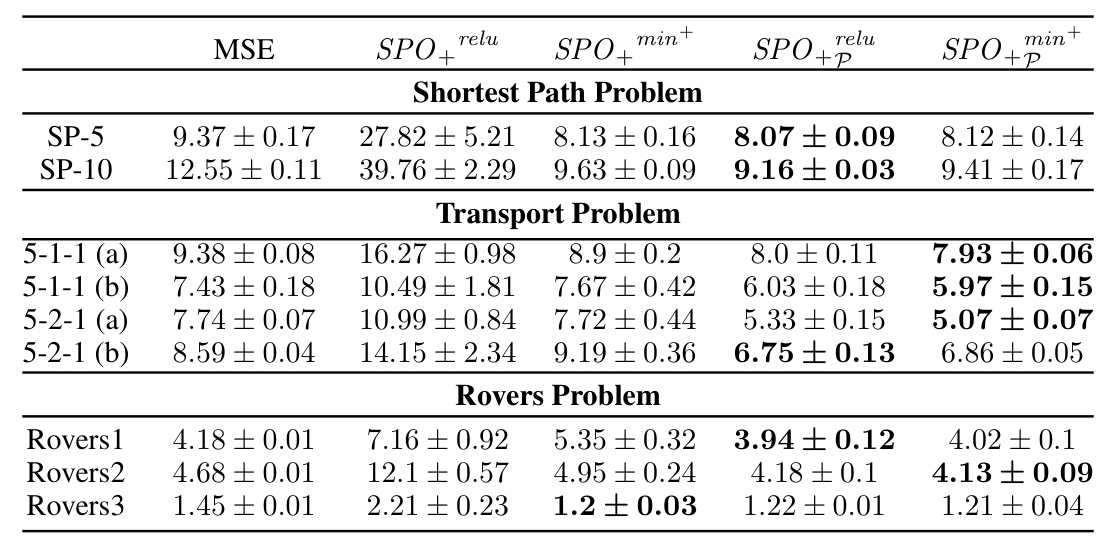

Results

The results demonstrate that the proposed DFL approach consistently yields lower regret compared to minimizing the mean square error (MSE) of predicted action costs. Explicit training penalties and solution caching further improve the training process, reducing computational costs without compromising decision quality.

Conclusion

This paper investigates the application of DFL in automated planning, specifically for predicting action costs. The proposed methods effectively handle negative action costs and reduce computational costs, leading to superior planning solutions. Future work includes further reducing computational costs and exploring DFL for state-dependent action cost prediction.

Acknowledgments

This research received funding from the European Union’s Horizon 2020 research and innovation programme under grant agreement No 101070149, project Tuples.

This blog post provides a detailed overview of the paper “Decision-Focused Learning to Predict Action Costs for Planning,” highlighting the key concepts, methods, and experimental results. The illustrations included help visualize the problem formulation and experimental setup, enhancing the understanding of the proposed approach.