Authors:

Matthew Barthet, Diogo Branco, Roberto Gallotta, Ahmed Khalifa, Georgios N. Yannakakis

Paper:

https://arxiv.org/abs/2408.06346

Introduction

Affective computing (AC) aims to create systems that can recognize, interpret, and simulate human emotions. One of the most challenging tasks within AC is to autonomously generate new contexts that elicit desired emotional responses from users. This concept is known as the affective loop. The unpredictability and subjectivity of human emotions make this task particularly difficult.

In this paper, the authors introduce a novel method for autonomously generating content that elicits a desired sequence of emotional responses. They focus on the domain of racing games, leveraging human arousal demonstrations to generate racetracks that elicit specific arousal traces for different player types. The proposed framework, called experience-driven reinforcement learning (EDRL), uses a simulation-based approach to reward the racetracks it generates.

Related Work

Affective Computing in Games

Affective computing has been applied in various contexts, including text, videos, audio, and games. Video games offer a unique form of human-computer interaction, allowing users to play an active role during consumption. Collecting reliable affect labels for games is challenging, but modern data collection platforms like CARMA and the PAGAN framework enable real-time collection of such labels.

Procedural Content Generation (PCG) for Affective Computing

PCG has evolved to be a critical area of research within generative media. Experience-driven PCG (EDPCG) aims to generate content that elicits a particular player experience. Recent advancements have combined EDPCG with reinforcement learning (RL) to generate game levels tailored to individual players. However, EDRL has yet to tackle affect-aware generation using human affect annotations in a continuous manner.

Experience-Driven Content Generation

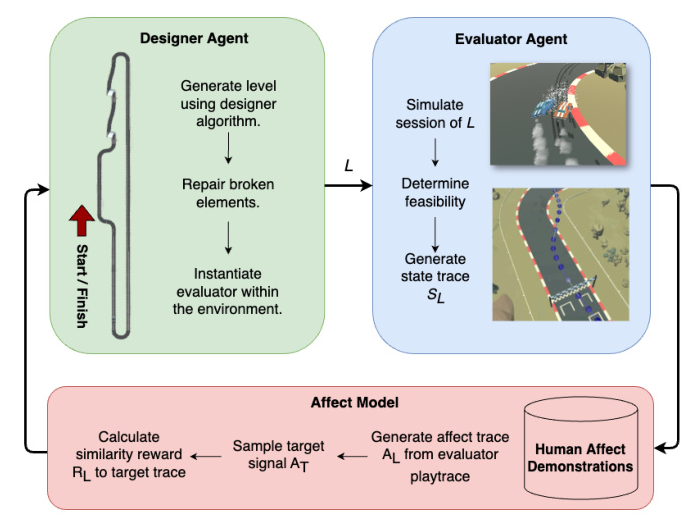

The proposed approach builds upon the EDRL framework by using a data-driven approach for evaluating generated levels through an evaluator agent combined with an affect model.

Designer

The designer generates the stimulus (here, a racetrack) that is passed to the evaluator during optimization. Two implementations are tested: a search-based generator using an evolutionary algorithm, and an RL designer using Go-Blend, a variant of the Go-Explore algorithm.
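As a rough illustration of the search-based designer, the sketch below runs a minimal (1+1) evolutionary loop over a track encoded as a list of segments. The segment vocabulary, the function names, and the toy fitness are all assumptions made for this example; the authors' actual track representation and evolutionary operators are not described in this summary.

```python
import random
from typing import Callable, List

SEGMENTS = ["straight", "left", "right"]  # hypothetical track vocabulary


def mutate(track: List[str], rng: random.Random) -> List[str]:
    """Return a copy of the track with one randomly chosen segment replaced."""
    child = list(track)
    child[rng.randrange(len(child))] = rng.choice(SEGMENTS)
    return child


def evolve_track(fitness: Callable[[List[str]], float], length: int = 8,
                 generations: int = 200, seed: int = 0) -> List[str]:
    """(1+1) evolutionary search: keep the child when it is at least as fit."""
    rng = random.Random(seed)
    best = [rng.choice(SEGMENTS) for _ in range(length)]
    best_score = fitness(best)
    for _ in range(generations):
        child = mutate(best, rng)
        child_score = fitness(child)
        if child_score >= best_score:
            best, best_score = child, child_score
    return best


def toy_fitness(track: List[str]) -> float:
    """Toy stand-in for the evaluator's reward: fraction of segments that are turns."""
    return sum(seg != "straight" for seg in track) / len(track)


best_track = evolve_track(toy_fitness)
print(best_track, toy_fitness(best_track))
```

In the framework described here, `toy_fitness` would be replaced by the evaluator's simulation and the reward function, so each candidate track is scored by the arousal trace it is predicted to elicit.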

Evaluator

The evaluator ensures the feasibility of the generated stimuli and generates state and affect traces for the given stimulus. It simulates the playback of the stimuli using an AI agent and tailors the affect model to the human demonstrations of a specific player type through clustering.

Reward Function

The reward function guides the designer to generate the desired stimuli. It measures the similarity between a generated affect trace and the desired affect trace using a distance metric. The reward function can be used by either a traditional RL method or an evolutionary RL method.
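A minimal sketch of such a reward is shown below. It assumes both traces are normalised to [0, 1] and sampled over the same time windows, and it uses mean absolute error as the distance; the summary does not specify which distance metric the authors use, so this choice is illustrative only.

```python
from typing import Sequence


def arousal_reward(generated: Sequence[float], target: Sequence[float]) -> float:
    """Return a similarity score in [0, 1]; 1.0 means the traces match exactly.

    Assumes both traces lie in [0, 1] and have the same length.
    Mean absolute error is an assumed, illustrative distance metric.
    """
    if len(generated) != len(target) or not generated:
        raise ValueError("traces must be non-empty and of equal length")
    mean_abs_error = sum(abs(g - t) for g, t in zip(generated, target)) / len(target)
    return 1.0 - mean_abs_error


target_trace = [1.0, 1.0, 1.0, 1.0]      # e.g. a "maximise arousal" goal
generated_trace = [0.5, 1.0, 1.0, 0.5]   # made-up trace for illustration
print(arousal_reward(generated_trace, target_trace))  # 0.75
```

Because the function only needs two traces, the same score can serve as an RL reward or as a fitness value for a search-based designer.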

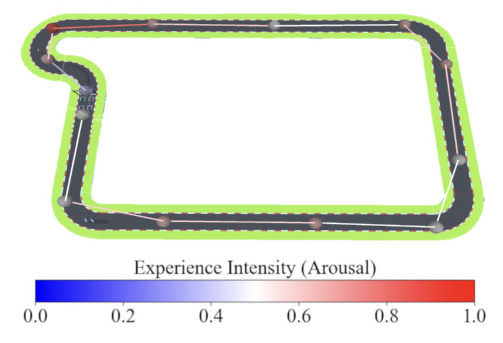

Arousal-Driven Racetrack Generation

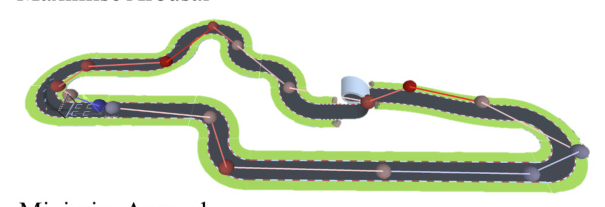

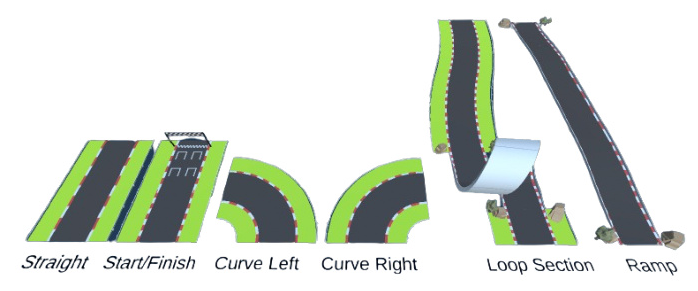

The case study platform is the Solid Rally racing game from the AGAIN dataset. The game features a 3D real-time rally driving experience, where players race against opponent cars around a circuit.

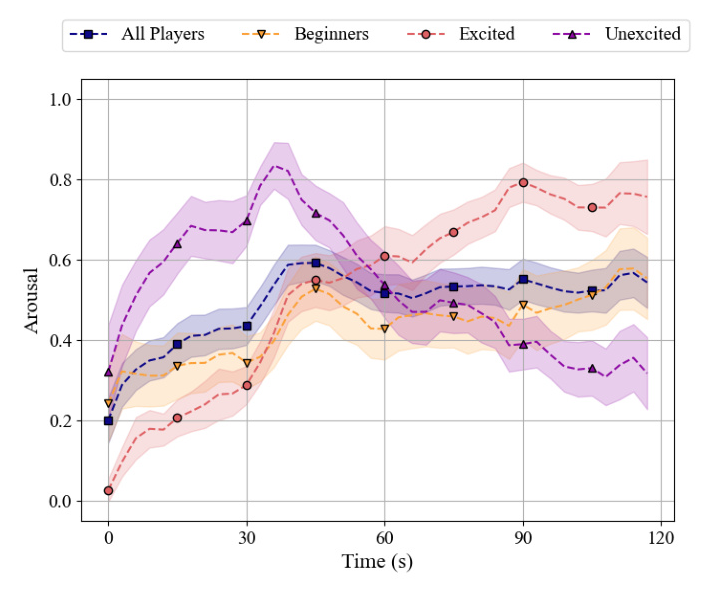

Arousal Model for Solid Rally

The arousal model evaluates the quality of the generated racetracks using a dataset containing continuous arousal traces. The dataset is clustered based on the player’s score and arousal traces, yielding three clusters: Excited experts, Unexcited experts, and Beginners.
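The grouping of players can be pictured with the sketch below. It is a simplification under several assumptions: the clustering algorithm is not named in this summary, so plain k-means is used for illustration; each player is reduced to a (normalised score, mean arousal) pair rather than a full arousal trace; and the six data points are synthetic, not taken from the dataset.

```python
from typing import List, Sequence, Tuple

Point = Tuple[float, ...]


def squared_distance(a: Sequence[float], b: Sequence[float]) -> float:
    return sum((x - y) ** 2 for x, y in zip(a, b))


def k_means(points: List[Point], initial_centroids: List[Point],
            iterations: int = 20) -> List[int]:
    """Plain k-means; returns the cluster index assigned to each point."""
    centroids = list(initial_centroids)  # copy so the caller's list is untouched
    labels = [0] * len(points)
    for _ in range(iterations):
        # Assignment step: attach each point to its nearest centroid.
        labels = [
            min(range(len(centroids)), key=lambda c: squared_distance(p, centroids[c]))
            for p in points
        ]
        # Update step: move each centroid to the mean of its members.
        for c in range(len(centroids)):
            members = [p for p, label in zip(points, labels) if label == c]
            if members:  # keep the old centroid if a cluster is empty
                centroids[c] = tuple(sum(dim) / len(members) for dim in zip(*members))
    return labels


# Synthetic (normalised score, mean arousal) pairs, for illustration only.
players = [(0.9, 0.8), (0.85, 0.9), (0.9, 0.2), (0.95, 0.1), (0.2, 0.5), (0.1, 0.6)]
labels = k_means(players, [players[0], players[2], players[4]])
print(labels)  # [0, 0, 1, 1, 2, 2]
```

With these toy points, the three groups loosely mirror the high-score/high-arousal, high-score/low-arousal, and low-score profiles that the cluster names suggest.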

Arousal-Driven PCG for Solid Rally

Two designer approaches are implemented: an evolutionary algorithm (EDPCG) and an EDRL agent using Go-Blend. The game is converted into an OpenAI Gym environment to facilitate the creation of new racetracks and communication between the internal game state and the generator’s code.
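To give a feel for what a Gym-style wrapper around track creation might look like, the sketch below follows the classic Gym `reset`/`step` convention without importing the library. The class name `TrackBuilderEnv`, the segment-appending action space, and the end-of-episode reward are assumptions for this example and are not taken from the authors' environment.

```python
from typing import Callable, Dict, List, Tuple


class TrackBuilderEnv:
    """Hypothetical designer environment: each action appends one track segment."""

    SEGMENTS = ("straight", "left", "right")  # assumed action vocabulary

    def __init__(self, track_length: int, score_track: Callable[[List[str]], float]):
        self.track_length = track_length
        self.score_track = score_track  # stand-in for evaluator + reward function
        self.track: List[str] = []

    def reset(self) -> List[str]:
        """Start a new, empty track and return it as the first observation."""
        self.track = []
        return list(self.track)

    def step(self, action: int) -> Tuple[List[str], float, bool, Dict]:
        """Append the chosen segment; the track is scored only once complete."""
        self.track.append(self.SEGMENTS[action])
        done = len(self.track) >= self.track_length
        reward = self.score_track(self.track) if done else 0.0
        return list(self.track), reward, done, {}


# Toy scoring function used only to make the example runnable.
env = TrackBuilderEnv(track_length=3, score_track=lambda t: t.count("left") / len(t))
observation = env.reset()
for action in (1, 0, 1):
    observation, reward, done, info = env.step(action)
print(observation, reward, done)
```

Exposing track construction through `reset` and `step` lets the same environment be driven by either designer, since both only need to propose segments and read back a score.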

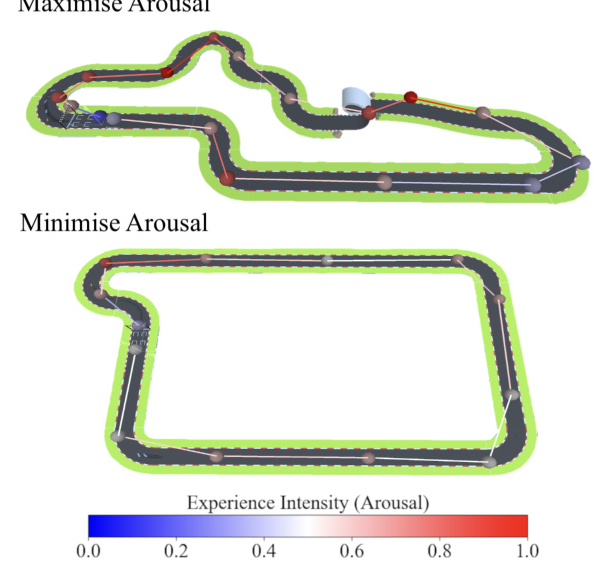

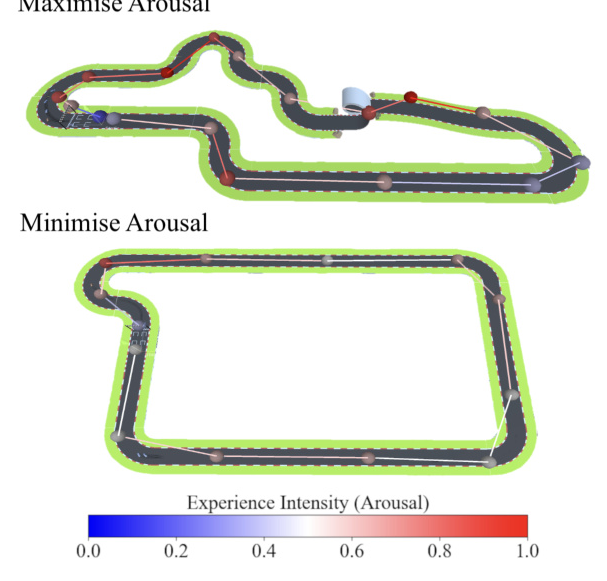

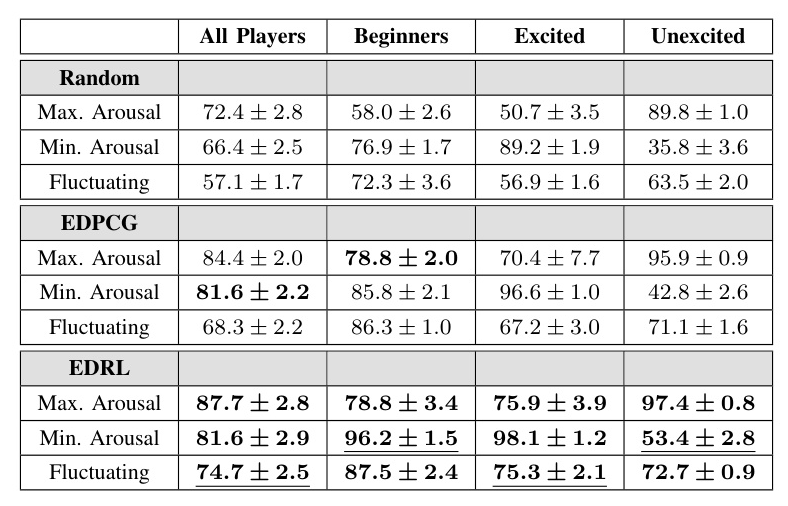

Experimental Protocol

The generators are evaluated across three scenarios: Minimise Arousal, Maximise Arousal, and Fluctuating Arousal. Performance is measured as how closely the arousal signal elicited by a generated track matches the target signal. Each experiment configuration is compared across 10 runs.
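The three scenarios can be thought of as three target arousal traces, as in the sketch below. It assumes arousal is normalised to [0, 1]; the exact shape of the fluctuating target is not given in this summary, so a sine wave with a hypothetical `cycles` parameter is used as a placeholder.

```python
import math
from typing import Dict, List


def target_traces(n_windows: int, cycles: float = 2.0) -> Dict[str, List[float]]:
    """Build one target trace per scenario, each with n_windows values in [0, 1]."""
    # Assumed placeholder: a sine wave oscillating between 0 and 1.
    fluctuating = [
        0.5 + 0.5 * math.sin(2 * math.pi * cycles * i / n_windows)
        for i in range(n_windows)
    ]
    return {
        "minimise": [0.0] * n_windows,
        "maximise": [1.0] * n_windows,
        "fluctuating": fluctuating,
    }


targets = target_traces(n_windows=8)
for name, trace in targets.items():
    print(name, [round(v, 2) for v in trace])
```

Each generated track's predicted arousal trace would then be compared against the trace for the scenario under test.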

Results

The results show that both the EDPCG and EDRL designers outperform the random designer across all player clusters and scenarios. Certain scenarios were more challenging than others, with varying performance across player types due to their dissimilar affective patterns.

Discussion

The proposed approach generates affect-aware content in a continuous manner via the EDRL framework. The generated content can be tailored to a target affective pattern for a particular user. Future investigations should employ more complex reward functions and test across different game genres and domains to validate the potential of EDRL for affect-driven generation.

Conclusions

The novel framework expands current experience-driven content generation frameworks to generate content that elicits tailor-made continuous affective patterns for specific user types. The EDRL method appears to be more efficient and robust compared to other methods. The proposed methods are directly applicable to any affective interaction domain in need of personalized content creation.

Ethical Impact Statement

The study uses an existing dataset of human demonstrations collected from crowd workers on the Amazon Mechanical Turk platform. The dataset is publicly available, and participants consented to their data being stored and used anonymously. The authors state that the methods used in the paper have no significantly negative application and add no privacy or discrimination risk.