Authors:

Paper: https://arxiv.org/abs/2408.11019

An Overlooked Role of Context-Sensitive Dendrites: A Detailed Interpretive Blog

Introduction

Background and Problem Statement

The study of dendrites, particularly in pyramidal two-point neurons (TPNs), has traditionally focused on the apical zone, which receives feedback (FB) connections from higher perceptual layers and uses that feedback for learning. However, recent advances in cellular neurophysiology and computational neuroscience have revealed that the apical input, which includes both feedback and lateral connections, is far more complex and diverse than previously understood. This multifaceted input, referred to as context, has significant implications for ongoing learning and processing in the brain.

The apical tuft of TPNs receives signals from neighboring cells (proximal context), other parts of the brain (distal context), and overall coherent information across the network (universal context). This integrated context can amplify or suppress the transmission of feedforward (FF) signals, depending on their coherence. The study aims to explore the role of these complex context-sensitive TPNs (CS-TPNs) in integrating context with FF somatic current, thereby enhancing learning and processing efficiency.

Related Work

Previous Studies and Theories

- Integrate-and-Fire Pyramidal Neurons: Traditional models of pyramidal neurons, which form the basis of current deep learning, focus on a single point of integration [1, 2].

- Burst-Dependent Synaptic Plasticity (BDSP): Recent studies have used BDSP to solve the online credit assignment problem in TPNs, but these models still rely on point neuron concepts [5, 6].

- Conscious Multisensory Integration (CMI) Theory: This theory suggests that the apical tuft of TPNs receives modulatory sensory signals from various sources, playing a crucial role in selectively amplifying or suppressing FF signals [13-15].

Limitations of Previous Work

Previous models have predominantly focused on feedback information at the apical zone for learning, neglecting the diverse and complex nature of apical input. This has limited the understanding of the true computational potential of TPNs.

Research Methodology

Proposed Methodology

The study integrates features of CMI-inspired CS-TPNs into a local BDSP rule inspired by spiking TPNs. The aim is to demonstrate faster local and online learning than the BDSP approach achieves alone. The methodology involves:

- Integration of Contextual Inputs: Incorporating proximal (P), distal (D), and universal (U) contexts into the apical dendrites of CS-TPNs.

- Flexible Integration of Currents: Moment-by-moment integration of contextual current with FF somatic current at the soma, amplifying coherent signals and attenuating conflicting ones.

- Simulation and Validation: Spiking simulation results to validate the efficient information processing capabilities of CS-TPNs.
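The amplify-or-attenuate behavior described in the list above can be illustrated with a small sketch. This is not the paper's modulation function: the weighted sum in `combine_context` and the `tanh`-based gain in `modulate` are hypothetical stand-ins, chosen only because they boost the FF current when context agrees with it and shrink it when context disagrees.

```python
import math

def combine_context(proximal, distal, universal, weights=(1.0, 1.0, 1.0)):
    """Weighted sum of P, D, and U contextual currents (assumed combination rule)."""
    w_p, w_d, w_u = weights
    return w_p * proximal + w_d * distal + w_u * universal

def modulate(i_ff, i_ctx):
    """Hypothetical stand-in for the modulation step.

    gain = 1 + tanh(i_ff * i_ctx) lies in (0, 2): it exceeds 1 when the two
    currents share a sign (coherent) and drops below 1 when they disagree.
    """
    gain = 1.0 + math.tanh(i_ff * i_ctx)
    return i_ff * gain

i_ff = 0.8  # feedforward somatic current, arbitrary units
coherent = modulate(i_ff, combine_context(0.5, 0.3, 0.2))        # context agrees
conflicting = modulate(i_ff, combine_context(-0.5, -0.3, -0.2))  # context disagrees
print(coherent, i_ff, conflicting)
```

With zero context the gain is exactly 1, so the FF current passes through unchanged. A real CS-TPN model would replace both functions with the forms given in the paper.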

Key Equations and Models

The study uses differential equations to represent the dynamics of the somatic membrane potential and of the apical dendrite. The Mod function captures the complex interactions between somatic and dendritic currents, enabling intrinsic adaptation mechanisms.

$$
\dot{V}_{s} = -\frac{1}{\tau_{s}}\left(V_{s}-E_{L}\right) + \frac{1}{C_{s}}\,\mathrm{Mod}(I_{s}, I_{c}) - \frac{1}{C_{s}}\,w_{s}
$$

Here $V_{s}$ is the somatic membrane potential, $I_{s}$ the FF somatic current, and $I_{c}$ the contextual current.
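To get a feel for the somatic equation, the sketch below integrates it with forward Euler in arbitrary units. Several things here are assumptions rather than details from the study: the leak term is taken to pull the potential back toward the resting value (a negative sign), `w_s` is held constant instead of following its own dynamics, there is no spike threshold or reset, all parameter values are made up, and `mod` is a hypothetical gain function standing in for Mod.

```python
import math

def mod(i_s, i_c):
    """Hypothetical stand-in for Mod(I_s, I_c): a coherence-dependent gain on I_s."""
    return i_s * (1.0 + math.tanh(i_s * i_c))

def simulate_soma(i_s, i_c, steps=2000, dt=0.1,
                  tau_s=10.0, c_s=1.0, e_l=0.0, w_s=0.0):
    """Forward-Euler integration of the subthreshold somatic potential.

    All quantities are in arbitrary units; w_s is treated as a constant.
    """
    v_s = e_l
    drive = (mod(i_s, i_c) - w_s) / c_s  # constant input term
    for _ in range(steps):
        dv = -(v_s - e_l) / tau_s + drive
        v_s += dt * dv
    return v_s

v_coherent = simulate_soma(0.8, 1.0)      # context agrees with FF input
v_conflicting = simulate_soma(0.8, -1.0)  # context disagrees with FF input
print(v_coherent, v_conflicting)
```

Under these assumptions the potential settles near `e_l + tau_s * (mod(i_s, i_c) - w_s) / c_s`, so coherent context drives the soma to a higher steady state than conflicting context for the same FF input.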

Experimental Design

Planning and Designing the Experiment

The experiments are designed to test the context-sensitive operation of CS-TPNs in both shallow and deep neural networks. The key components include:

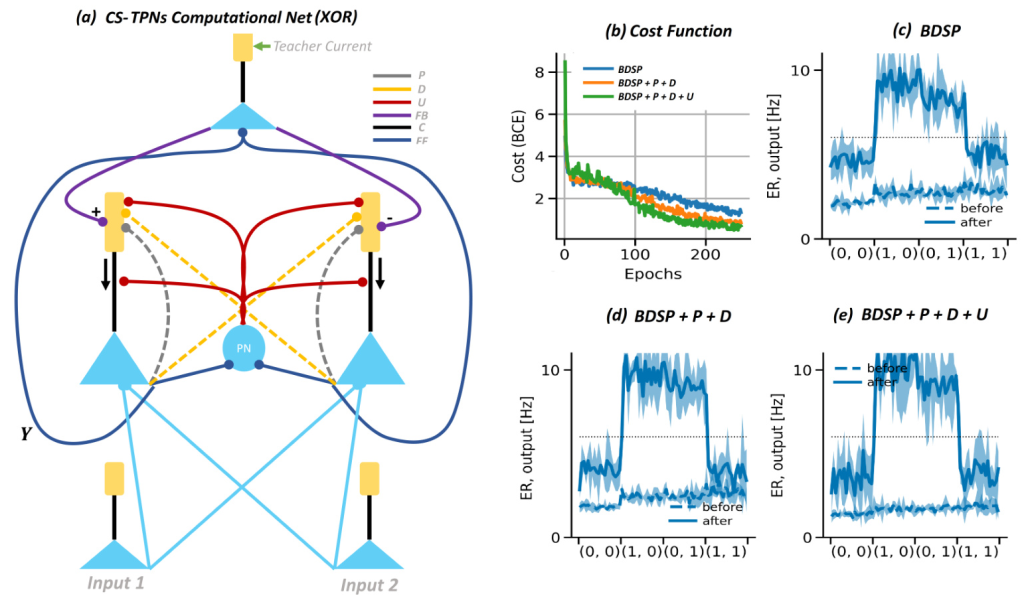

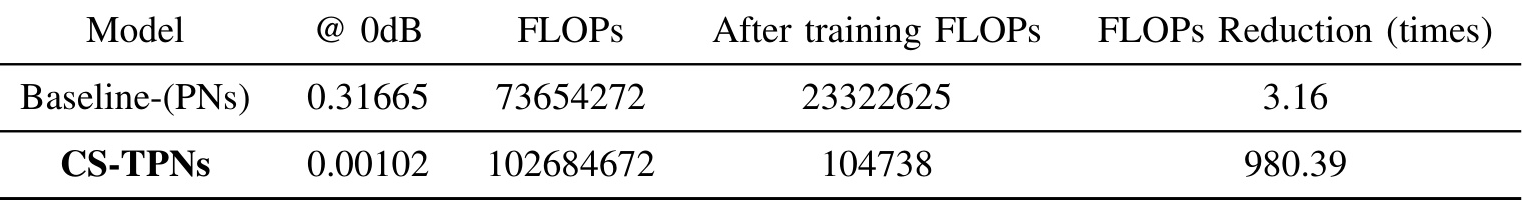

- Shallow Spiking XOR Task: A 3-layer network structure is used to perform an XOR function, comparing the performance of CS-TPNs with context-insensitive TPNs.

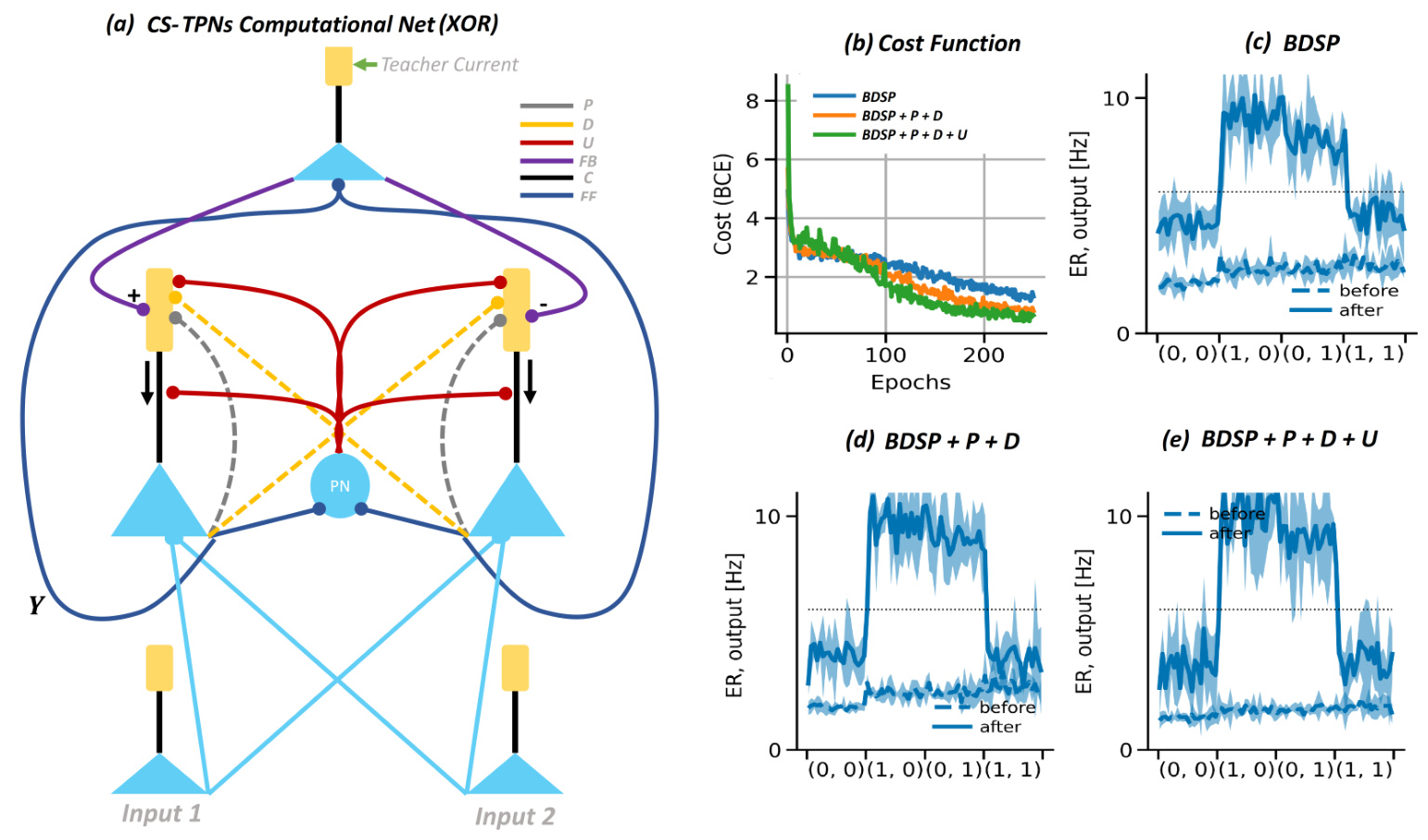

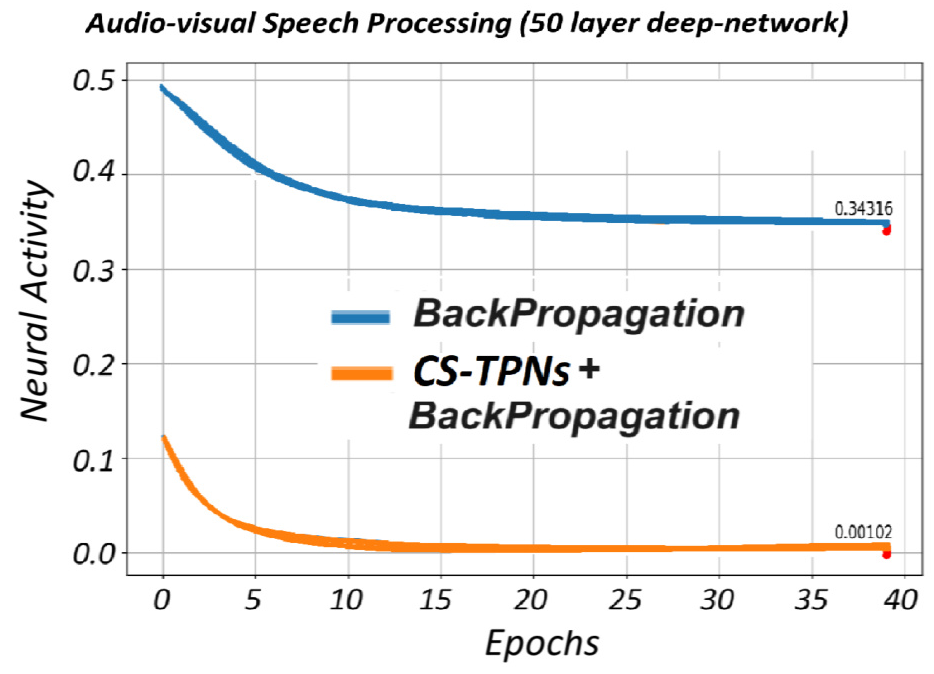

- Deep AV Speech Processing: A 50-layer deep CNN composed of CS-TPNs is used for audio-visual (AV) speech processing, demonstrating the scalability and efficiency of CS-TPNs in larger networks.

Data Preparation

- AV Dataset for Speech Enhancement: The AV ChiME3 dataset, created by blending clean Grid videos with ChiME3 background noises, is used for training and evaluation.

- Network Architecture: The two-layered CNN composed of CS-TPNs is scaled to a 50-layered CNN, integrating audio and visual modalities for feature extraction and reconstruction of clean audio signals.
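Blending clean speech with background noise is commonly done at a chosen signal-to-noise ratio (SNR). The exact mixing procedure for the dataset is not described here, so the following is only a generic sketch of the standard approach: scale the noise so that the ratio of clean power to noise power matches a target in decibels, then add it. The function names and the toy signals are illustrative.

```python
import math

def power(signal):
    """Mean squared amplitude of a sequence of samples."""
    return sum(x * x for x in signal) / len(signal)

def mix_at_snr(clean, noise, snr_db):
    """Scale `noise` so clean-to-noise power equals `snr_db`, then add it to `clean`."""
    p_noise = power(noise)
    if p_noise == 0:
        raise ValueError("noise has zero power")
    scale = math.sqrt(power(clean) / (p_noise * 10 ** (snr_db / 10)))
    return [c + scale * n for c, n in zip(clean, noise)]

# Toy signals standing in for a speech clip and a noise clip.
clean = [math.sin(0.1 * k) for k in range(200)]
noise = [((k * 37) % 11 - 5) / 5 for k in range(200)]

mixed = mix_at_snr(clean, noise, snr_db=5.0)
residual = [m - c for m, c in zip(mixed, clean)]
achieved_snr = 10 * math.log10(power(clean) / power(residual))
print(round(achieved_snr, 6))
```

Lower `snr_db` values produce noisier mixtures, which is how a single clean corpus can yield evaluation sets at several SNRs.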

Results and Analysis

Shallow Spiking XOR Task

The results show that the CS-TPNs+BDSP network learns faster and requires fewer events (both singlets and bursts) than BDSP alone. The network with CS-TPNs also separates high and low outputs more clearly, which indicates more efficient information processing.
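Counting events means grouping spikes into singlets and bursts. One common way to do this is by inter-spike interval: spikes that follow each other closely belong to the same event, and an event with two or more spikes is a burst. The sketch below takes that approach; the 16 ms threshold and the function name are assumptions for illustration, not values taken from this study.

```python
def classify_events(spike_times, burst_isi=0.016):
    """Return (singlets, bursts) for a list of spike times in seconds.

    Consecutive spikes closer than `burst_isi` are merged into one event.
    An event with one spike is a singlet; with two or more, a burst.
    """
    singlets, bursts = 0, 0
    count = 0    # number of spikes in the event being built
    prev = None  # time of the previous spike
    for t in sorted(spike_times):
        if prev is not None and t - prev < burst_isi:
            count += 1  # spike continues the current event
        else:
            # Close the previous event (if any) and start a new one.
            if count == 1:
                singlets += 1
            elif count > 1:
                bursts += 1
            count = 1
        prev = t
    # Close the final event.
    if count == 1:
        singlets += 1
    elif count > 1:
        bursts += 1
    return singlets, bursts

spikes = [0.0, 0.1, 0.105, 0.110, 0.3, 0.5, 0.51]
print(classify_events(spikes))  # (2, 2)
```

The total event count, singlets plus bursts, is the quantity a comparison like the one above would tally per network.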

Deep AV Speech Processing

The 50-layer deep CNN composed of CS-TPNs requires significantly fewer neurons and generalizes better than a deep CNN composed of point neurons (PNs). The results suggest that CS-TPNs are broadly applicable to efficient information processing.

Comparative Analysis

The study compares neural activity, perceptual evaluation of speech quality (PESQ), and short-time objective intelligibility (STOI) between the baseline PNs-inspired CNN and the proposed CS-TPNs-inspired CNN. The CS-TPNs-inspired CNN generalizes better while using up to 330x fewer neurons across all signal-to-noise ratios (SNRs).

Overall Conclusion

Summary of Findings

The study demonstrates that CS-TPNs, inspired by CMI theory, process information more efficiently and effectively than traditional PNs-based models. The flexible integration of contextual and somatic currents enables faster learning with fewer neurons, making CS-TPNs a promising approach for future neural network designs.

Future Work

Future research will focus on further scaling up the proposed artificial and spiking TPN-inspired neural networks for a range of real-world problems. The goal is to explore the full potential of CS-TPNs in various applications, including large language models and other complex tasks.

Acknowledgments

The research was supported by the UK Engineering and Physical Sciences Research Council (EPSRC). The authors acknowledge the contributions of several professors and researchers for their support and encouragement.

This blog provides a comprehensive overview of the study on context-sensitive dendrites, highlighting the innovative approach and significant findings. The integration of contextual inputs into TPNs offers a new perspective on neural network design, paving the way for more efficient and scalable models.