Authors:

Guhong Chen, Liyang Fan, Zihan Gong, Nan Xie, Zixuan Li, Ziqiang Liu, Chengming Li, Qiang Qu, Shiwen Ni, Min Yang

Paper:

https://arxiv.org/abs/2408.08089

Introduction

Artificial intelligence (AI) technologies, particularly large language models (LLMs), are rapidly transforming the traditional legal industry. From automated text generation to interactive legal consulting, AI applications in the legal domain are becoming increasingly widespread. However, significant challenges remain in handling complex legal queries and simulating real court environments. Existing legal AI systems often struggle to comprehensively simulate the legal reasoning process and multi-party interactions.

To address these limitations, the paper presents AgentCourt, an innovative LLM-based system designed for the simulation of civil courts. AgentCourt involves multiple roles, including judges, attorneys, plaintiffs, and defendants, providing a more authentic and comprehensive legal scenario simulation. This system excels not only in handling standard legal queries but also in analyzing complex real-world cases.

Related Work

Large Language Models in the Legal Domain

AI applications in the legal domain have made significant strides, particularly with the advent of LLMs. These models have shown potential in various legal tasks, including case prediction, legal research, and document analysis. Despite these advancements, current AI legal systems still face limitations in handling complex legal queries and simulating real court environments.

Large Language Models for Real World Simulation

LLM-based multi-agent systems represent a new direction in AI research, leveraging collaborative agents to address complex problems. These systems excel in utilizing cognitive synergy and knowledge sharing, thereby enhancing overall decision quality and interaction capabilities. Notable applications include Agent Hospital, which simulates a hospital environment with LLM-driven autonomous agents representing patients, nurses, and doctors.

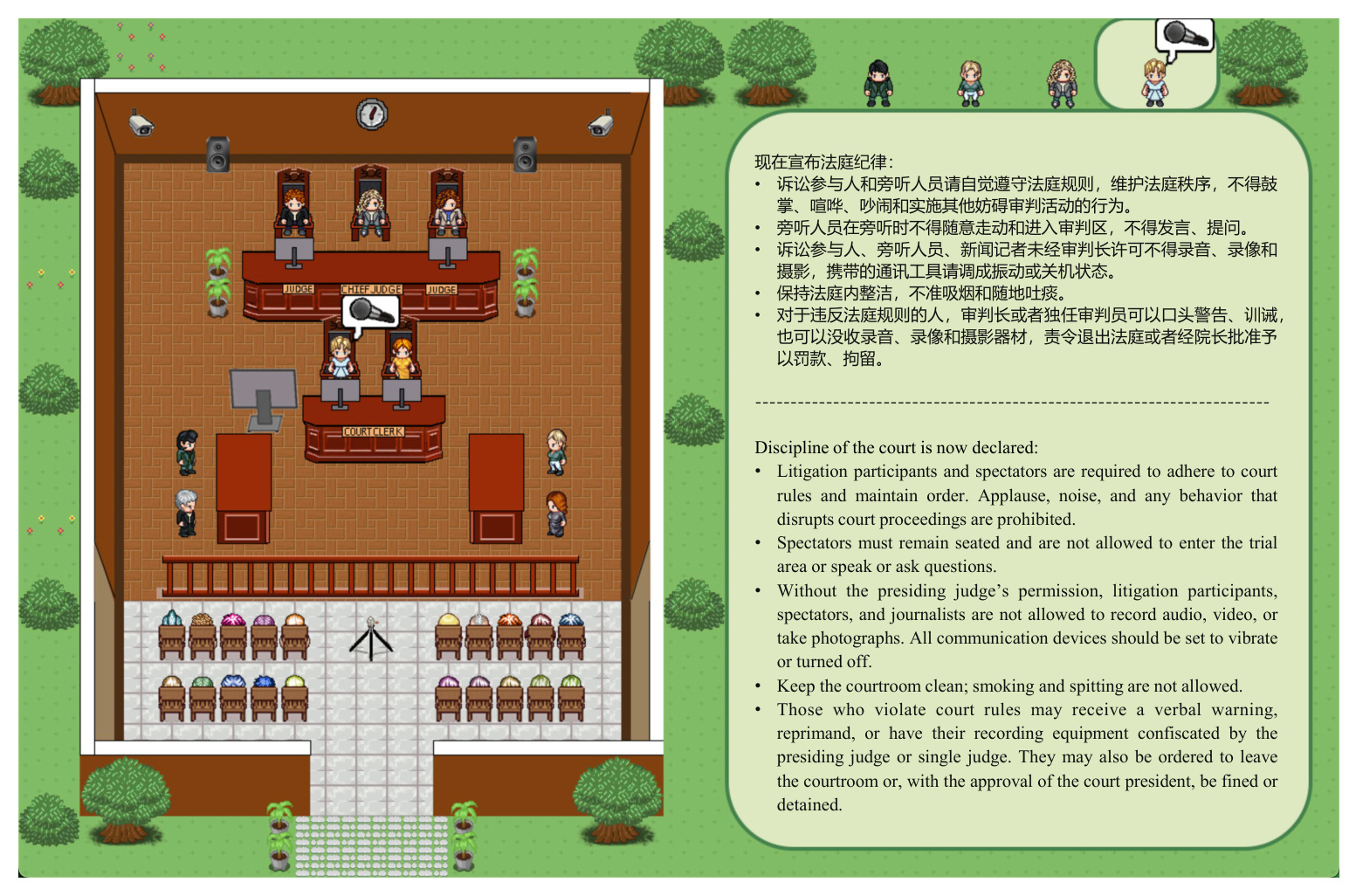

Court Simulacrum Visualization Settings

Inspired by previous studies, the court sandbox simulation environment is designed using Pygame to clearly demonstrate the entire process within the court. Two distinct scenarios are managed: the law firm, where plaintiffs and defendants interact with their lawyers, and the court, where the trial proceedings take place.

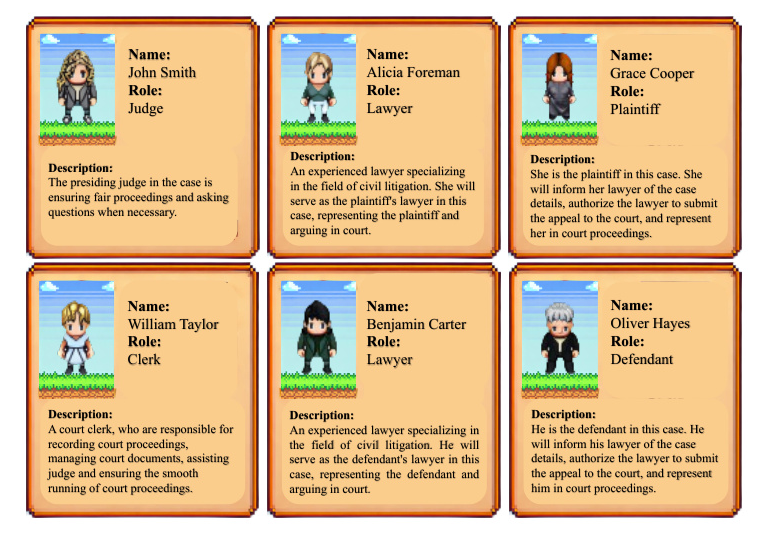

Agent Settings

To accurately recreate real litigation scenarios, six distinct roles are designed: plaintiff, defendant, plaintiff’s lawyer, defendant’s lawyer, judge, and court clerk. Each role is powered by ERNIE-Speed-128K and can be easily extended.

Plaintiff and Defendant Agents

The simulation begins before a case has occurred, necessitating two agents to play the roles of a potential plaintiff and defendant. These agents autonomously seek legal assistance from a law firm, obtaining a complaint or an answer during their interactions with the lawyer.

Lawyer Agents

Two lawyer agents are designed to communicate with their respective clients to gather relevant information about the case. They engage in court debates in accordance with the prescribed procedures, championing the interests of their respective clients.

Judge Agent

In the court, the judge is responsible for overseeing the entire process, listening to the arguments from both lawyers, and asking questions when appropriate. The judge summarizes and evaluates each round of the lawyers’ arguments before delivering the final judgment.

Court Clerk Agent

To create a more realistic court environment and to facilitate the evolution of the agent, a court clerk agent is designed to announce the commencement of the trial and document the entire process of the trial.
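The six roles described above can be captured in a small registry. The following is a minimal sketch, not the authors' code: the names `Role`, `AgentConfig`, `AGENTS`, and the prompt wording are hypothetical, and only the role list, the duties, and the ERNIE-Speed-128K backbone come from the text.

```python
from dataclasses import dataclass
from enum import Enum


class Role(Enum):
    PLAINTIFF = "plaintiff"
    DEFENDANT = "defendant"
    PLAINTIFF_LAWYER = "plaintiff's lawyer"
    DEFENDANT_LAWYER = "defendant's lawyer"
    JUDGE = "judge"
    COURT_CLERK = "court clerk"


@dataclass
class AgentConfig:
    role: Role
    duty: str
    model: str = "ERNIE-Speed-128K"  # backbone named in the text

    def system_prompt(self) -> str:
        # Hypothetical prompt wording, used only for illustration.
        return f"You are the {self.role.value} in a civil court. {self.duty}"


# Duties paraphrase the role descriptions above.
AGENTS = [
    AgentConfig(Role.PLAINTIFF, "Seek legal assistance and obtain a complaint."),
    AgentConfig(Role.DEFENDANT, "Seek legal assistance and obtain an answer."),
    AgentConfig(Role.PLAINTIFF_LAWYER, "Gather case facts and argue for the plaintiff."),
    AgentConfig(Role.DEFENDANT_LAWYER, "Gather case facts and argue for the defendant."),
    AgentConfig(Role.JUDGE, "Oversee the trial, evaluate each round, and deliver the judgment."),
    AgentConfig(Role.COURT_CLERK, "Announce the start of the trial and record the proceedings."),
]
```

Keeping the backbone as a per-agent field is one way to make the roles "easily extended": a new role or a different model only requires another `AgentConfig` entry.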

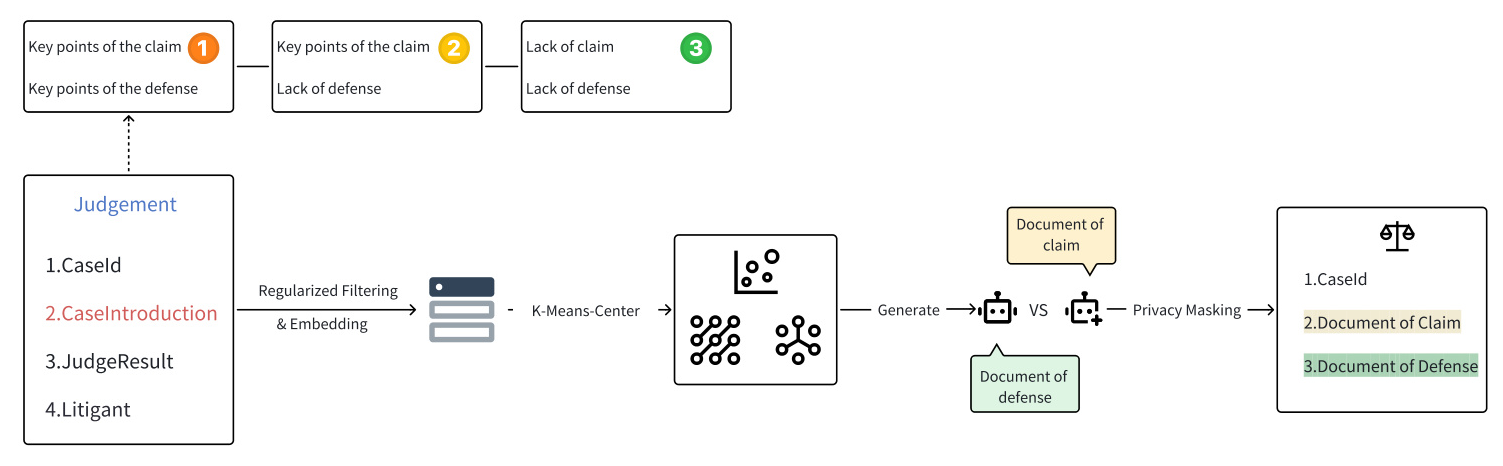

Data Settings and Processing

The data processing pipeline combines regularized filtering, BERT embedding, and privacy masking. A dataset of 10,000 civil judgements was compiled from the China Judgement Website. Preprocessing then focused on improving dataset quality, yielding a curated set of 1,000 training samples and 50 test samples.
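A rough sketch of the filtering and masking steps is given below. It assumes the filtering is rule-based (regular expressions plus a length threshold), which this summary does not confirm, and it leaves the BERT embedding step out because its purpose is not described here. All patterns, thresholds, and function names are hypothetical.

```python
import re

# Hypothetical patterns: the actual rules are not listed in this summary.
PHONE = re.compile(r"\b1\d{10}\b")            # 11-digit mobile numbers
ID_NUMBER = re.compile(r"\b\d{17}[\dXx]\b")   # 18-character ID numbers
CIVIL_MARKER = re.compile(r"civil", re.IGNORECASE)


def keep_document(text: str, min_chars: int = 40) -> bool:
    """Rule-based filter: keep civil judgements that are long enough."""
    return len(text) >= min_chars and CIVIL_MARKER.search(text) is not None


def mask_private_data(text: str) -> str:
    """Replace ID numbers and phone numbers with placeholder tokens."""
    text = ID_NUMBER.sub("[ID]", text)
    return PHONE.sub("[PHONE]", text)


def preprocess(documents):
    """Filter first, then mask whatever survives the filter."""
    return [mask_private_data(d) for d in documents if keep_document(d)]
```

For example, `preprocess` drops a document that is too short or lacks the civil marker, and returns the remaining documents with identifiers replaced by `[ID]` and `[PHONE]`.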

Interaction Settings

Case Generation

Two resident agents randomly encounter cases and seek legal assistance from law firms, transforming into Plaintiff and Defendant Agents. The law firm randomly assigns lawyer agents to communicate with them and submit the complaint and defense documents to the court.

Court Proceedings

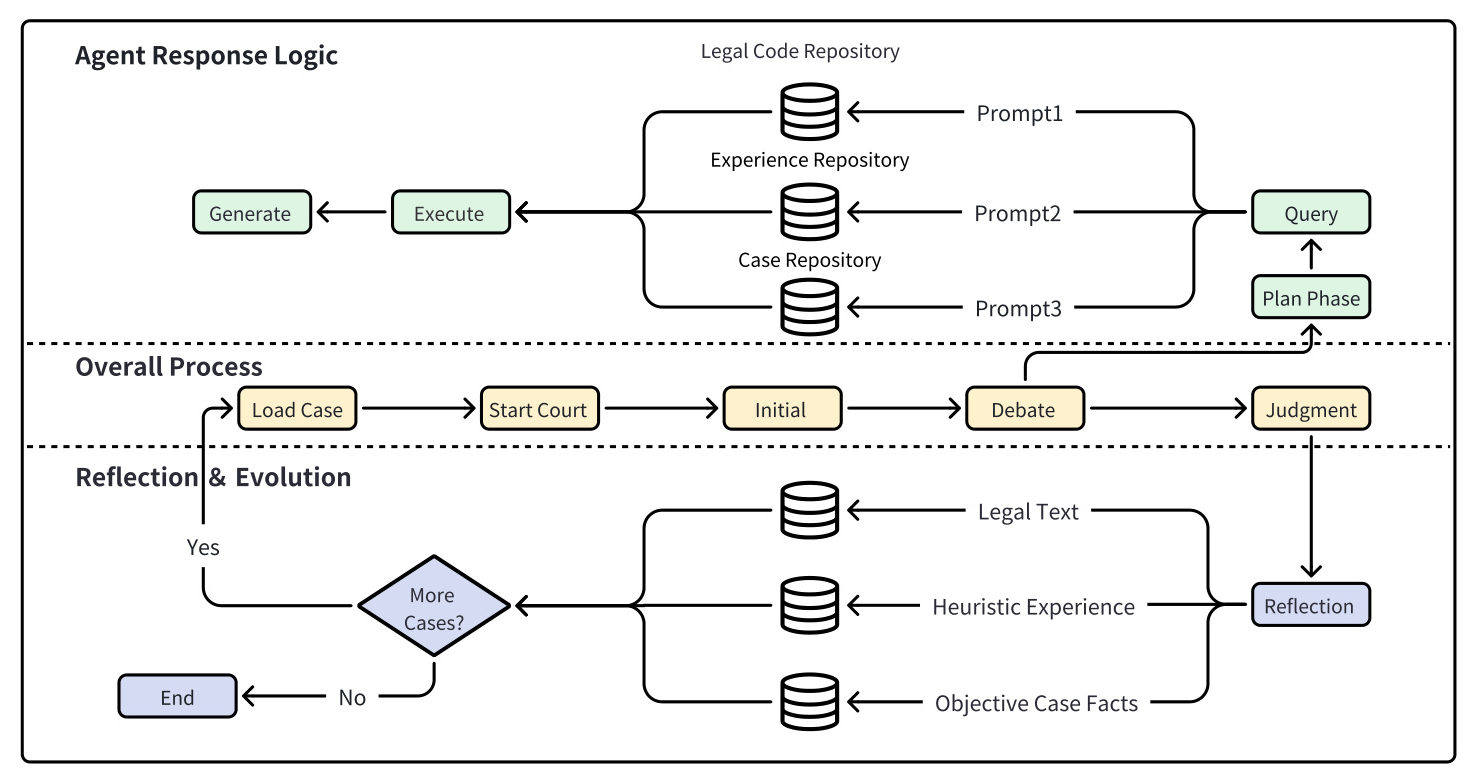

The full process of the simulation is illustrated in Figure 4. The DEBATE ROUNDS hyperparameter is set to accelerate the iteration process; it can be adjusted to suit specific requirements or to mirror real-world proceedings more closely.
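The control flow of a trial can be sketched as a simple loop. This is an illustration under assumptions rather than the paper's implementation: the order of turns follows the role descriptions in this summary (clerk opens, lawyers argue, judge evaluates each round, judge rules), the value of `DEBATE_ROUNDS` is made up, and the agents are stand-in callables where a real system would call an LLM.

```python
from typing import Callable, List, Tuple

DEBATE_ROUNDS = 3  # assumed value; the text only says it is adjustable

Transcript = List[Tuple[str, str]]          # (speaker, utterance) pairs
Speaker = Callable[[Transcript], str]       # reads the transcript, returns a line


def scripted(line: str) -> Speaker:
    """Stand-in for an LLM-backed agent: returns a fixed line plus turn index."""
    return lambda transcript: f"{line} (turn {len(transcript)})"


def run_trial(plaintiff_lawyer: Speaker, defendant_lawyer: Speaker,
              judge: Speaker, rounds: int = DEBATE_ROUNDS) -> Transcript:
    # The clerk opens the session; the transcript doubles as the clerk's record.
    transcript: Transcript = [("clerk", "The court is now in session.")]
    for _ in range(rounds):
        transcript.append(("plaintiff_lawyer", plaintiff_lawyer(transcript)))
        transcript.append(("defendant_lawyer", defendant_lawyer(transcript)))
        # The judge summarizes and evaluates each round of arguments.
        transcript.append(("judge", judge(transcript)))
    transcript.append(("judge", "Final judgment: " + judge(transcript)))
    return transcript
```

Calling `run_trial(scripted("claim"), scripted("defense"), scripted("summary"), rounds=2)` yields eight entries: one clerk announcement, two rounds of three turns each, and the final judgment. Raising `rounds` lengthens the debate at the cost of slower iteration.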

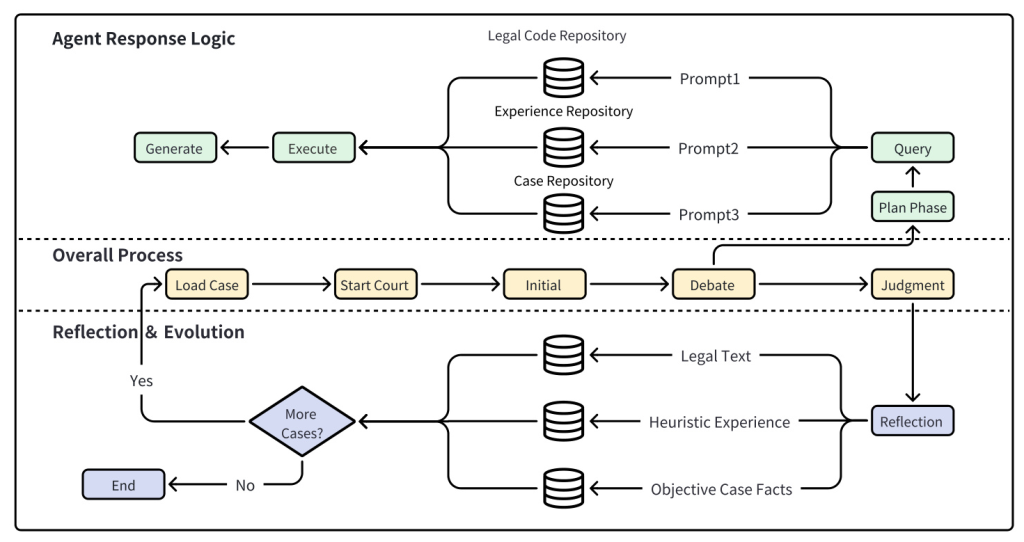

Adversarial Evolutionary Approach

An adversarial evolutionary strategy is introduced to advance lawyer proficiency within court simulations driven by LLMs. This strategy dramatically cuts annotation expenses while augmenting the model’s ability to generalize across diverse and complicated court scenarios. The strategy’s effectiveness hinges upon three fundamental modules: the experience database, case database, and legal code database.

Database Design

Construction of Experience Repository

Learning from past litigation experiences is essential for the development of an AI agent in legal contexts. The repository of experiences focuses on broad strategies and overarching directions, equipping the legal agent with a comprehensive perspective in case handling.

Development of Case Repository

The case repository retains the individual cases themselves. Referencing prior cases and their judgments is essential, and the repository lets legal agents quickly accumulate a body of cases for future citation.

Establishment of Legal Code Repository

Possessing comprehensive and professional legal knowledge is of utmost importance. Legal agents review the fundamental aspects of the case and past exchanges during court proceedings, reflecting on which legal provisions could have improved their responses.
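The three repositories can be pictured as a per-lawyer memory that is written to after each trial and queried before the next. The sketch below is an assumption-laden illustration: `LawyerMemory`, `reflect`, and `retrieve` are hypothetical names, the stored strings are placeholders, and retrieval uses naive word overlap as a stand-in because this summary does not say how entries are looked up.

```python
from dataclasses import dataclass, field
from typing import Dict, List


def overlap(query: str, entry: str) -> int:
    """Naive relevance score: number of shared lowercase words."""
    return len(set(query.lower().split()) & set(entry.lower().split()))


@dataclass
class LawyerMemory:
    experiences: List[str] = field(default_factory=list)  # broad strategies
    cases: List[str] = field(default_factory=list)        # individual cases
    legal_codes: List[str] = field(default_factory=list)  # relevant provisions

    def reflect(self, experience: str, case: str, provision: str) -> None:
        """After a trial, store one entry in each repository."""
        self.experiences.append(experience)
        self.cases.append(case)
        self.legal_codes.append(provision)

    def retrieve(self, query: str, k: int = 1) -> Dict[str, List[str]]:
        """Return the k most relevant entries from each repository."""
        def top(entries: List[str]) -> List[str]:
            ranked = sorted(entries, key=lambda e: overlap(query, e), reverse=True)
            return ranked[:k]
        return {
            "experiences": top(self.experiences),
            "cases": top(self.cases),
            "legal_codes": top(self.legal_codes),
        }
```

Because the memory lives outside the model, the lawyer agent can improve across trials by prepending retrieved entries to its prompt, with no change to model weights.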

Experiment

Experimental Setup

The simulated court is based on 1,000 real-world civil cases. The experiment includes two parts: automatic evaluation and manual evaluation.

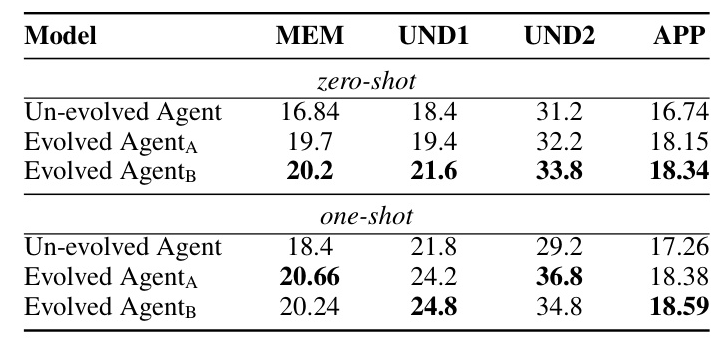

Automatic Evaluation Tasks

The LawBench assessment metrics were applied to conduct an automatic evaluation of the model. Tasks include Article Recitation, Dispute Focus Identification, Issue Topic Identification, and Consultation.

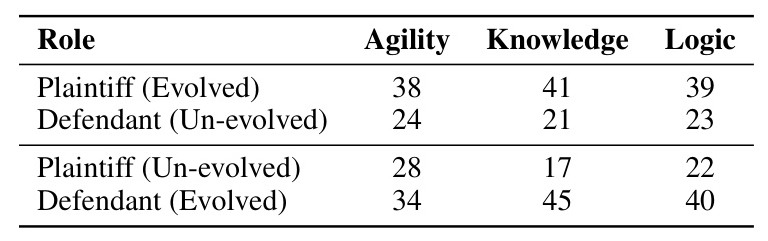

Manual Evaluation Tasks

Human experts evaluated the performance of the AI agents in simulated court debates based on three key dimensions: Cognitive Agility, Professional Knowledge, and Logical Rigor.

Evaluation Methods

Automatic Evaluation

ERNIE-Speed-128K serves as the experimental baseline, and the evaluated models are the two lawyer agents after they have undergone evolution.

Manual Evaluation

A comprehensive human evaluation experiment with a double-blind controlled design was conducted. Five legal experts independently reviewed all records using a binary choice scoring system for each dimension.
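One way to aggregate binary-choice votes of this kind is to compute, for each dimension, the share of votes that prefer the evolved agent. The sketch below assumes each vote is a tuple of expert, record, dimension, and choice; that layout, the label strings, and the function name are hypothetical, and no figures from the paper are reproduced.

```python
from collections import defaultdict

DIMENSIONS = ("Cognitive Agility", "Professional Knowledge", "Logical Rigor")


def preference_rates(votes):
    """Share of 'evolved' choices per dimension.

    votes: iterable of (expert_id, record_id, dimension, choice),
    where choice is either "evolved" or "baseline".
    Dimensions with no votes are omitted from the result.
    """
    wins = defaultdict(int)
    totals = defaultdict(int)
    for _expert, _record, dimension, choice in votes:
        totals[dimension] += 1
        wins[dimension] += choice == "evolved"
    return {d: wins[d] / totals[d] for d in DIMENSIONS if totals[d]}
```

With toy input in which one of two experts prefers the evolved agent on Cognitive Agility, the function returns 0.5 for that dimension. In a double-blind setup the "evolved" and "baseline" labels would be attached only after the experts submit their choices.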

Experimental Results

Automatic Evaluation Results

The two evolved agents show noticeable improvements across all four tasks, attributed to the court simulation process and evolution strategy.

Manual Evaluation Results

The evolved version of AgentCourt demonstrated significant improvement across all three evaluation dimensions, particularly in Professional Knowledge and Logical Rigor.

Case Analysis

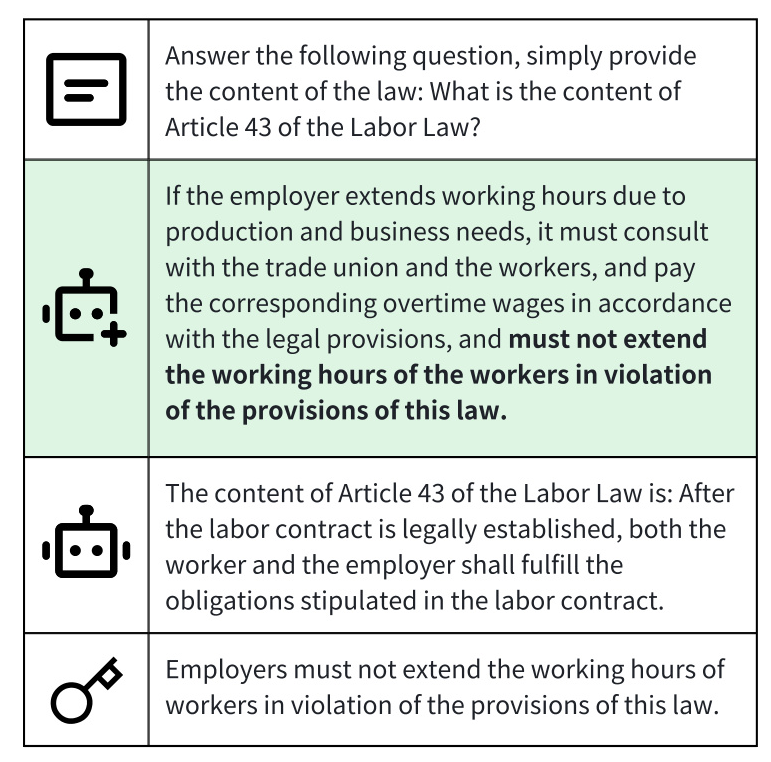

Automatic Evaluation: Article Recitation Task

The agents were prompted to provide the content of Article 43 of the Labor Law. The comparison demonstrates the evolved agent’s improved ability to accurately recall and cite legal content.

Manual Evaluation: Expert Analysis

Legal experts evaluated the performance across three dimensions: cognitive agility, professional knowledge, and logical rigor. The evolved agent demonstrated superior agility, deeper legal knowledge, and more structured and rigorous arguments.

Conclusion

AgentCourt is a novel simulation system for courtroom scenarios based on LLMs and agent technology. It facilitates multi-party interactions and intricate legal reasoning through a parameter-free approach. Evaluations show significant enhancements in knowledge acquisition, response adaptability, and argumentation quality as lawyer agents evolve via adversarial interactions. The privacy-anonymized dataset and code are open-sourced with the aim of advancing the legal AI field and potentially transforming legal practice through continuous improvement and optimization.