Authors:

Beatrice Balbierer, Lukas Heinlein, Domenique Zipperling, Niklas Kühl

Paper:

https://arxiv.org/abs/2408.08666

Introduction

In the digital age, the proliferation of data has opened up new avenues for innovation, particularly in the realm of machine learning (ML). However, the decentralized nature of data storage across various clients, such as edge devices or organizations, poses significant challenges. Federated Learning (FL) emerges as a promising solution by enabling decentralized training of ML models, thus mitigating privacy risks associated with centralized data storage. Despite its advantages, FL is not immune to privacy concerns, as model updates can still leak sensitive information. Additionally, ensuring fairness in FL, particularly in high-stakes domains like healthcare, is crucial to avoid perpetuating historical biases. This paper explores the intersection of privacy and fairness in FL through a multivocal literature review (MLR), aiming to identify current methods and highlight the need for integrated frameworks.

Conceptual Background

Federated Learning (FL)

FL is a decentralized approach to training ML models, where data remains on individual clients, and only model updates are shared with a central server. This method enhances data privacy and enables collaborative model building across different organizations without exposing sensitive data. The typical FL process involves initializing a global model, distributing it to clients, training locally, aggregating updates, and iteratively refining the model. FL is particularly useful in domains like healthcare, finance, and edge computing, where privacy concerns are paramount.
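The round structure described above can be sketched in a few lines. This is a minimal illustration that assumes a sample-weighted average as the aggregation rule (in the style of federated averaging) and a toy linear model; the helper names `local_train`, `fed_avg`, and `make_client`, the synthetic data, and all hyperparameters are hypothetical and not taken from the paper.

```python
import random

def local_train(weights, data, lr=0.1, epochs=5):
    """Run a few epochs of SGD on one client's data for the model y = w*x + b."""
    w, b = weights
    for _ in range(epochs):
        for x, y in data:
            err = (w * x + b) - y
            w -= lr * err * x
            b -= lr * err
    return (w, b)

def fed_avg(client_weights, client_sizes):
    """Aggregate client models, weighting each by its number of samples."""
    total = sum(client_sizes)
    w = sum(cw[0] * n for cw, n in zip(client_weights, client_sizes)) / total
    b = sum(cw[1] * n for cw, n in zip(client_weights, client_sizes)) / total
    return (w, b)

def make_client(n, rng):
    """Hypothetical client data drawn from y = 2x + 1 with small noise."""
    xs = [rng.uniform(-1, 1) for _ in range(n)]
    return [(x, 2 * x + 1 + rng.gauss(0, 0.01)) for x in xs]

rng = random.Random(0)
clients = [make_client(n, rng) for n in (20, 50, 30)]

global_model = (0.0, 0.0)                       # initialize the global model
for _ in range(30):                             # iterate over communication rounds
    # Distribute the global model; each client trains on data that never leaves it.
    updates = [local_train(global_model, d) for d in clients]
    # The server only sees the resulting model parameters and aggregates them.
    global_model = fed_avg(updates, [len(d) for d in clients])

print(global_model)
```

Only the tuples returned by `local_train` cross the client boundary here, which is the property the privacy discussion below builds on.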

Privacy in FL

Privacy in FL is primarily concerned with preventing data leakage and maintaining data integrity. Various attacks, such as membership inference attacks, can compromise privacy by extracting information from shared model updates. Common methods to enhance privacy in FL include Differential Privacy (DP), Homomorphic Encryption (HE), and Secure Multi-Party Computation (SMPC). Respectively, these techniques add calibrated noise to limit what can be inferred about individuals, enable computations on encrypted data, and let several parties compute a joint result without revealing their private inputs.
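As one illustration of how DP is commonly applied to shared model updates, the sketch below clips a client update to a fixed L2 norm and then adds Gaussian noise. The function names, the clipping norm, and the noise multiplier are assumptions chosen for the example; translating a noise level into a formal privacy guarantee requires a privacy accounting step that is not shown.

```python
import math
import random

def clip_update(update, clip_norm):
    """Scale the update down so that its L2 norm is at most clip_norm."""
    norm = math.sqrt(sum(u * u for u in update))
    scale = min(1.0, clip_norm / norm) if norm > 0 else 1.0
    return [u * scale for u in update]

def privatize_update(update, clip_norm, noise_multiplier, rng):
    """Clip the update, then add Gaussian noise with std = noise_multiplier * clip_norm."""
    clipped = clip_update(update, clip_norm)
    sigma = noise_multiplier * clip_norm
    return [u + rng.gauss(0.0, sigma) for u in clipped]

rng = random.Random(42)
raw_update = [3.0, 4.0]                              # hypothetical update with L2 norm 5
clipped = clip_update(raw_update, clip_norm=1.0)     # rescaled to L2 norm 1
noisy = privatize_update(raw_update, clip_norm=1.0, noise_multiplier=1.1, rng=rng)
print(clipped, noisy)
```

Clipping bounds how much any single client can change the aggregate, which is what allows the added noise to mask an individual contribution.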

Fairness in FL

Fairness in FL is crucial for designing inclusive and efficient applications. It is typically divided into two dimensions: client fairness and group fairness. Client fairness focuses on equitable performance distribution and fair compensation for data or computational resources contributed by clients. Group fairness addresses algorithmic fairness, aiming to mitigate biases against underrepresented social groups. Ensuring fairness in FL is challenging, especially when privacy-preserving measures may inadvertently affect fairness.
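The two dimensions can be made concrete with simple metrics. The sketch below assumes demographic parity (the gap in positive-prediction rates between groups) as one possible group fairness measure and the spread of per-client accuracy as one possible client fairness measure; both are illustrative choices, and the function names and data are hypothetical.

```python
from statistics import pstdev

def demographic_parity_gap(predictions, groups):
    """Largest difference in positive-prediction rate between any two groups."""
    rates = {}
    for g in set(groups):
        preds = [p for p, gg in zip(predictions, groups) if gg == g]
        rates[g] = sum(preds) / len(preds)
    return max(rates.values()) - min(rates.values())

def client_accuracy_spread(accuracies):
    """Population standard deviation of per-client accuracy; lower is more uniform."""
    return pstdev(accuracies)

# Hypothetical binary predictions and group memberships.
preds = [1, 1, 0, 1, 0, 0, 0, 1]
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]
gap = demographic_parity_gap(preds, groups)      # group a: 3/4 positive, group b: 1/4

# Hypothetical accuracy of the global model on three clients.
spread = client_accuracy_spread([0.90, 0.70, 0.80])
print(gap, spread)
```

Note that computing the group metric requires access to group membership, which is itself sensitive information; this is one place where privacy and fairness goals can pull in different directions.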

Method

The study employs a multivocal literature review (MLR) to provide a comprehensive overview of methods ensuring privacy and fairness in FL. The MLR approach includes both academic literature (AL) and gray literature (GL) to capture emerging research topics. The search string “Federated Learning” AND (Priva OR Fair) was used across eight databases, yielding 2,746 hits. Inclusion and exclusion criteria were applied to filter relevant papers, resulting in a final dataset of 133 items. The review process involved a two-stage analysis focusing on privacy and fairness in FL.

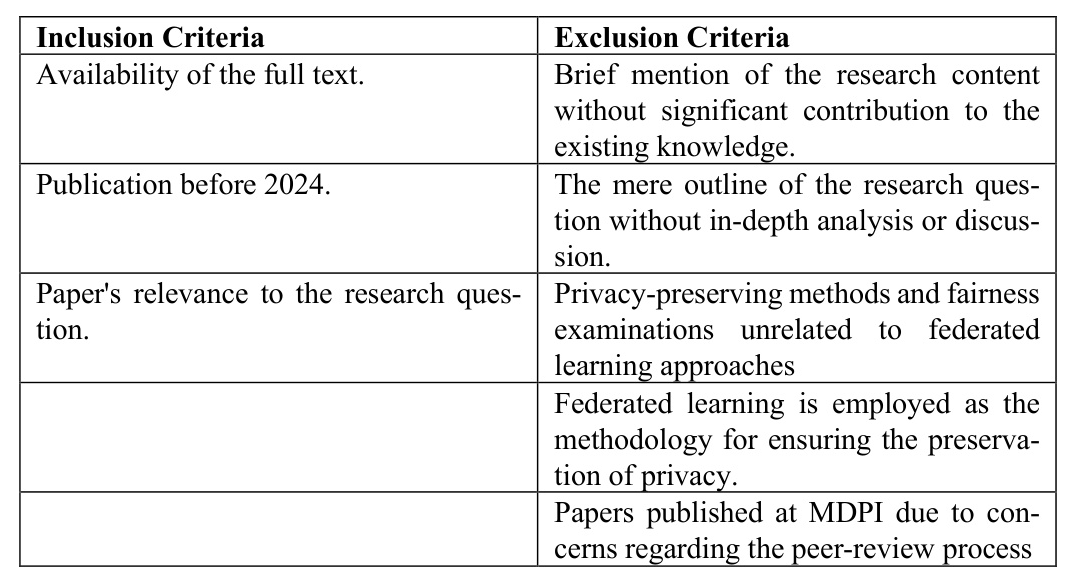

Inclusion and Exclusion Criteria

The inclusion and exclusion criteria for selecting relevant papers are summarized in Table 1.

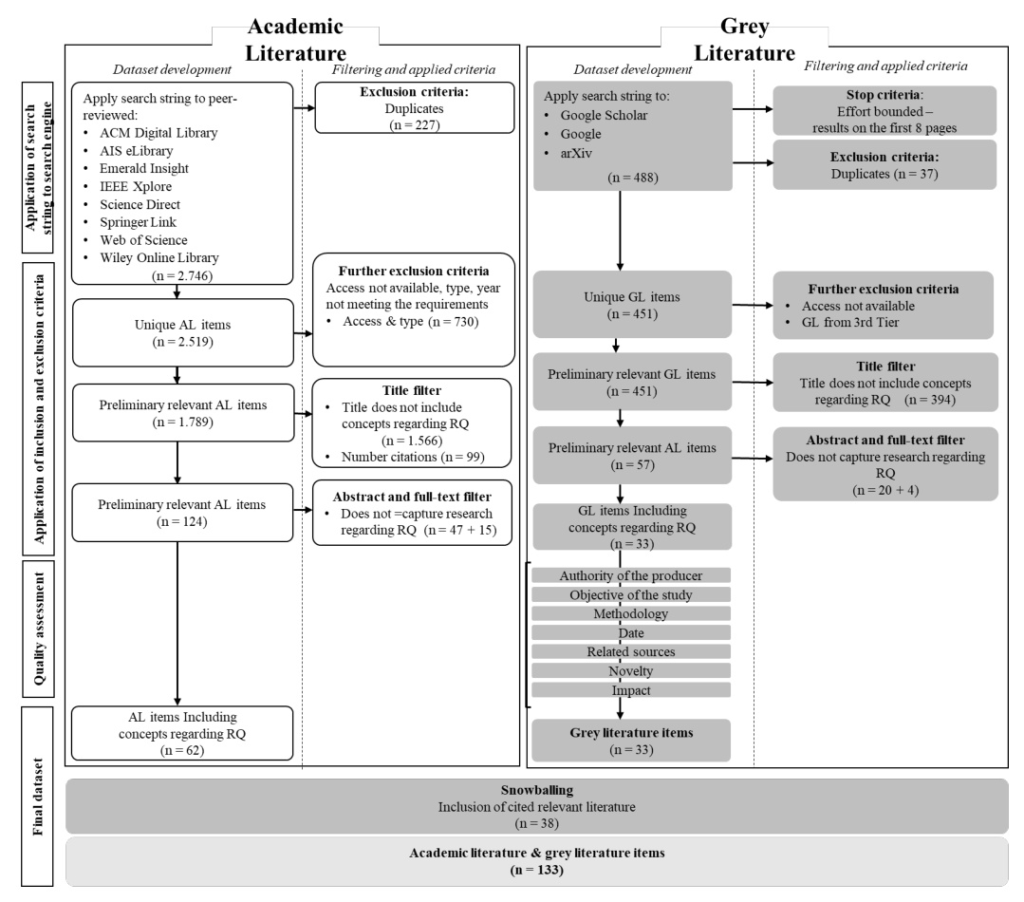

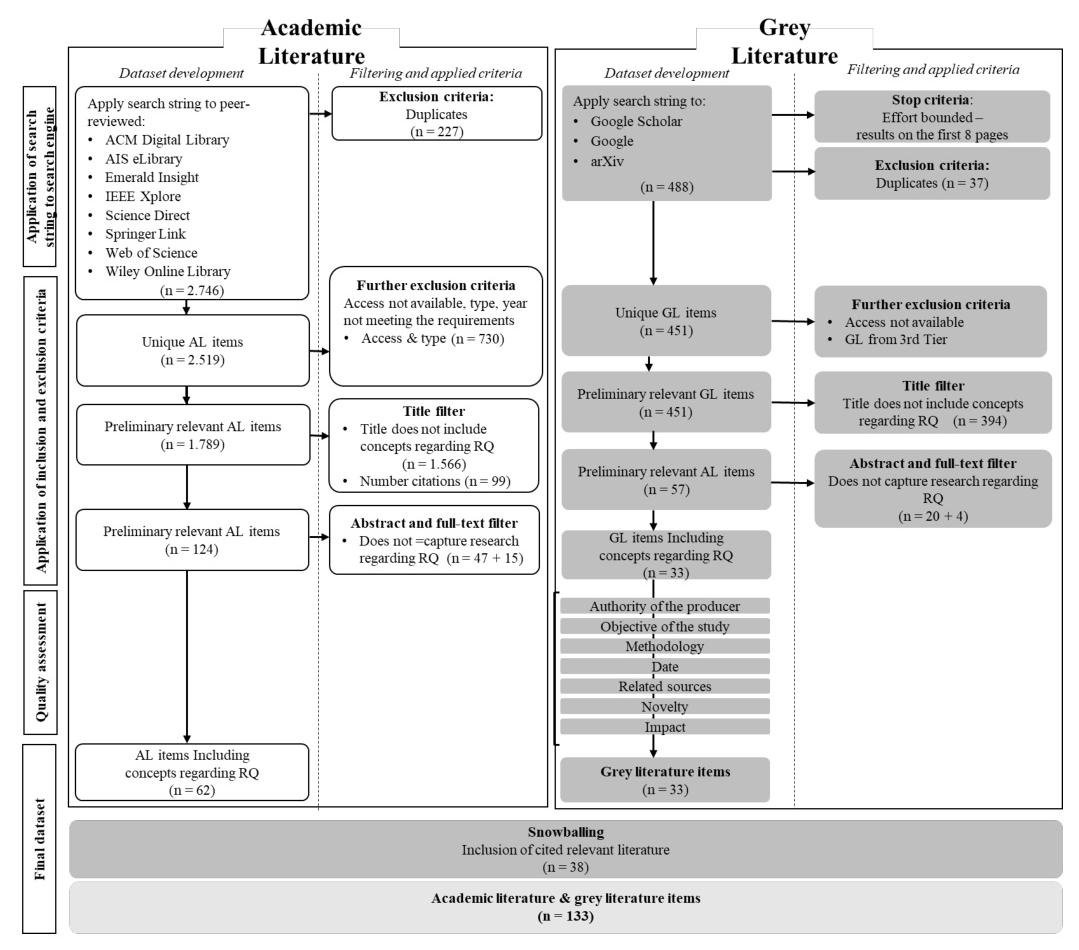

Search and Selection Process

The search and selection process involved screening titles, abstracts, and full texts to ensure relevance to the research question. The process is illustrated in Figure 1.

Results

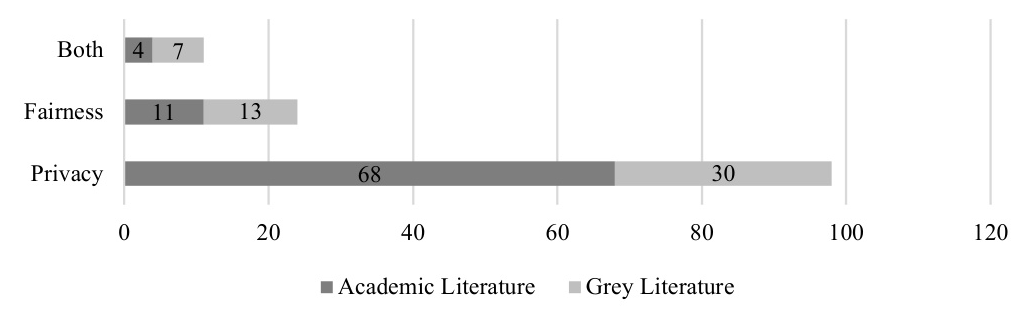

Characteristics of the Included Publications

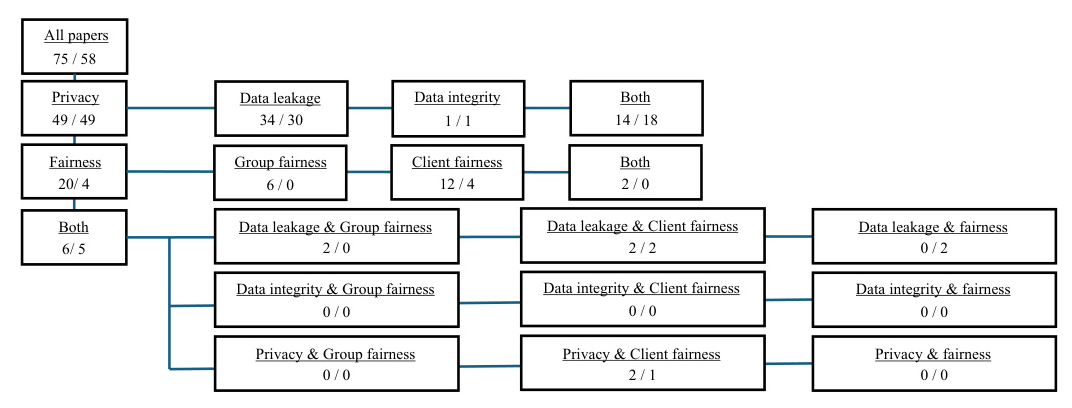

The review identified 133 relevant papers, with a much stronger emphasis on privacy (98 papers) than on fairness (24 papers). Only 11 papers address both privacy and fairness simultaneously, which accounts for the remaining items. Privacy research is predominantly associated with academic literature, while fairness research is almost equally split between academic and gray literature. The thematic distribution of publications is shown in Figure 2.

Overview of Methods to Address Privacy and Fairness in FL

Methods to Ensure Privacy

Preventing data leaks and maintaining system integrity are critical challenges in FL. Common privacy-preserving techniques include:

- Differential Privacy (DP): Adds calibrated noise to data or model updates to protect individual privacy while still allowing aggregate information to be extracted.

- Homomorphic Encryption (HE): Enables computations on encrypted data without decryption.

- Secure Multi-Party Computation (SMPC): Allows secure data pooling and computations without revealing private information.

These methods are often combined to enhance privacy. For example, combining DP with HE provides two levels of privacy protection, while SMPC is frequently used with DP to ensure secure computation and user anonymity.
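To give a feel for the SMPC idea of pooling values without revealing them, the sketch below uses pairwise additive masks that cancel when the server sums the client updates. It is a simplified illustration, not a production protocol and not a method taken from the reviewed papers: updates are assumed to be integer-encoded, the masks come from Python's non-cryptographic `random` module, and the function names are hypothetical. A real protocol would derive each pairwise mask from a secret shared between the two clients and would handle client dropout.

```python
import random

def masked_updates(updates, seed=0, modulus=2**32):
    """Add pairwise masks to integer-encoded updates; the masks cancel in the sum."""
    n, dim = len(updates), len(updates[0])
    masked = [list(u) for u in updates]
    for i in range(n):
        for j in range(i + 1, n):
            # Stand-in for a mask that clients i and j would derive from a shared secret.
            pair_rng = random.Random(f"{seed}-{i}-{j}")
            for k in range(dim):
                m = pair_rng.randrange(modulus)
                masked[i][k] = (masked[i][k] + m) % modulus   # client i adds the mask
                masked[j][k] = (masked[j][k] - m) % modulus   # client j subtracts it
    return masked

def aggregate(masked, modulus=2**32):
    """Server-side sum; pairwise masks cancel, leaving the true total."""
    dim = len(masked[0])
    return [sum(u[k] for u in masked) % modulus for k in range(dim)]

updates = [[1, 2, 3], [10, 20, 30], [100, 200, 300]]   # hypothetical client updates
masked = masked_updates(updates)
total = aggregate(masked)
print(masked[0], total)
```

The server learns the sum of the updates but sees each individual update only in masked form. To combine this with DP as described above, each client could add noise to its update before masking it.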

Methods to Achieve Fairness

Fairness in FL is addressed through various innovative approaches, including:

- Personalized Models: Tailored to the unique needs and data profiles of each client.

- Innovative Aggregation Methods: Such as double momentum gradients for efficient and equitable integration of client contributions.

- Asynchronous Mechanisms: Allow clients to submit updates on their schedules, ensuring fairer participation.

- Incentivization Mechanisms: Promote efficiency and fair resource distribution through reverse auctions and trust assessments.

Group fairness is addressed by methods like FedGFT, Fair-Fate, and FedMinMax, which aim to reduce systematic discrimination against certain population groups.
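One common way to formalize the goal of protecting the worst-off group is a minimax objective, in which the model minimizes a weighted loss while the group weights shift toward whichever group currently has the highest loss. The sketch below shows only that reweighting step, using an exponentiated-gradient update. It is a generic illustration and does not reproduce the published FedGFT, Fair-Fate, or FedMinMax algorithms; the function names, step size, and losses are hypothetical.

```python
import math

def update_group_weights(weights, group_losses, step=1.0):
    """Exponentiated-gradient ascent: groups with higher loss gain weight."""
    raw = {g: weights[g] * math.exp(step * group_losses[g]) for g in weights}
    total = sum(raw.values())
    return {g: v / total for g, v in raw.items()}

def weighted_objective(weights, group_losses):
    """Weighted loss that the model would minimize in the next round."""
    return sum(weights[g] * group_losses[g] for g in weights)

weights = {"group_a": 0.5, "group_b": 0.5}
losses = {"group_a": 0.2, "group_b": 0.9}    # hypothetical per-group losses
new_weights = update_group_weights(weights, losses)
print(new_weights, weighted_objective(new_weights, losses))
```

After the update, the group with the higher loss carries more weight, so the next round of training is pushed to reduce its loss first.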

Methods to Address Both Privacy and Fairness

Eleven papers consider both privacy and fairness, presenting methods that improve fairness, privacy, and efficiency in FL. These methods include cryptographic techniques, specialized algorithms, and frameworks like FedACC and FedFa, which integrate privacy-preserving measures with fairness considerations.

Overview of Application Domains and Technology-Focused Research

The literature shows a significant focus on theoretical and initial empirical evidence, with applied research mainly centered around healthcare, IoT, and edge devices. Privacy research is almost equally divided between domain-specific and domain-unspecific studies, while fairness research is mostly domain-unspecific. This indicates a need for more application-specific fairness studies to match the developed state of privacy research.

Discussion

The review highlights a clear focus on privacy in FL research, with differential privacy, homomorphic encryption, and secure multi-party computation being the core methods. Fairness research, particularly group fairness, is less developed and often abstract. The lack of integrated frameworks addressing both privacy and fairness is concerning, especially in light of regulations such as the GDPR and the more recent AI Act. Future research should focus on interdisciplinary approaches, empirical studies on trade-offs between privacy and fairness, and real-world implementations.

Conclusion

This paper provides a comprehensive overview of methods ensuring privacy and fairness in FL through a multivocal literature review. The findings highlight the need for integrated frameworks that consider dependencies across dimensions. Future research should explore the trade-offs between privacy and fairness, implement methods in real-world scenarios, and address neglected aspects like the right to be forgotten and informational fairness. The goal is to develop fairer and more private FL applications that are both technologically sophisticated and ethically sound.