Authors:

Jiajun Xu, Qun Wang, Yuhang Cao, Baitao Zeng, Sicheng Liu

Paper:

https://arxiv.org/abs/2408.10230

Introduction

In recent years, Virtual Assistants (VAs) such as Amazon’s Alexa, Apple’s Siri, Google Assistant, and Microsoft’s Cortana have become integral to our daily lives, giving easy access to a range of services. Despite their widespread adoption, traditional VAs often struggle to process complex commands and provide accurate responses. The recent emergence of Large Language Models (LLMs) such as ChatGPT and Claude offers a way to overcome these limitations and promises a new era of Intelligent Assistants (IAs) capable of interpreting intricate contexts and delivering more satisfactory responses.

The growing popularity of IAs reflects a rising demand for automation in both professional and personal spheres. These advanced assistants are designed to navigate a variety of scenarios, from corporate environments to household tasks, while offering a seamless interaction experience. However, their reliance on smartphones restricts their ability to process multidimensional inputs and hinders integration with existing infrastructure. To truly harness the potential of IAs, a new framework is needed that combines software and hardware components.

Related Work

Most IAs are implemented on smartphones, including AutoDroid, GptVoiceTasker, and EdgeMoE. AutoDroid integrates LLMs for task automation on Android devices, enhancing task execution without manual input by combining app-specific insights with LLMs’ general knowledge. It has shown superior task automation capabilities, significantly outperforming previous methods in accuracy and efficiency. GptVoiceTasker uses LLMs to boost mobile task efficiency and user interaction. It learns from past commands to improve responsiveness and speed up task completion.

LLMind employs LLMs to manage domain-specific AI modules and IoT devices. It facilitates complex task execution by enabling natural user interactions through a social media-like platform. EdgeMoE offers a distributed framework tailored for micro-enterprises, enabling the partitioning of LLMs across devices according to their computational capabilities. This strategy supports parallel processing, enhances privacy, and reduces response times by leveraging the specific hardware capabilities of small businesses.

Research Methodology

User Needs and Design Goals

Considering the advancements in LLM integration and the specifications outlined in our hardware design, we aim to achieve the following design goals:

- Seamless Integration: Develop a general-purpose device that harmoniously integrates both hardware and software components with LLMs.

- Multimodal Input Handling: Design the device to handle multidimensional inputs, including audio, video, and data from various environmental sensors.

- Accessibility and Affordability: Create a device that is affordable and easily integrated with existing platforms and technologies.

- Enhanced User Interaction: Enable intuitive voice interactions, leveraging the sophisticated language processing capabilities of LLMs.

Framework Overview

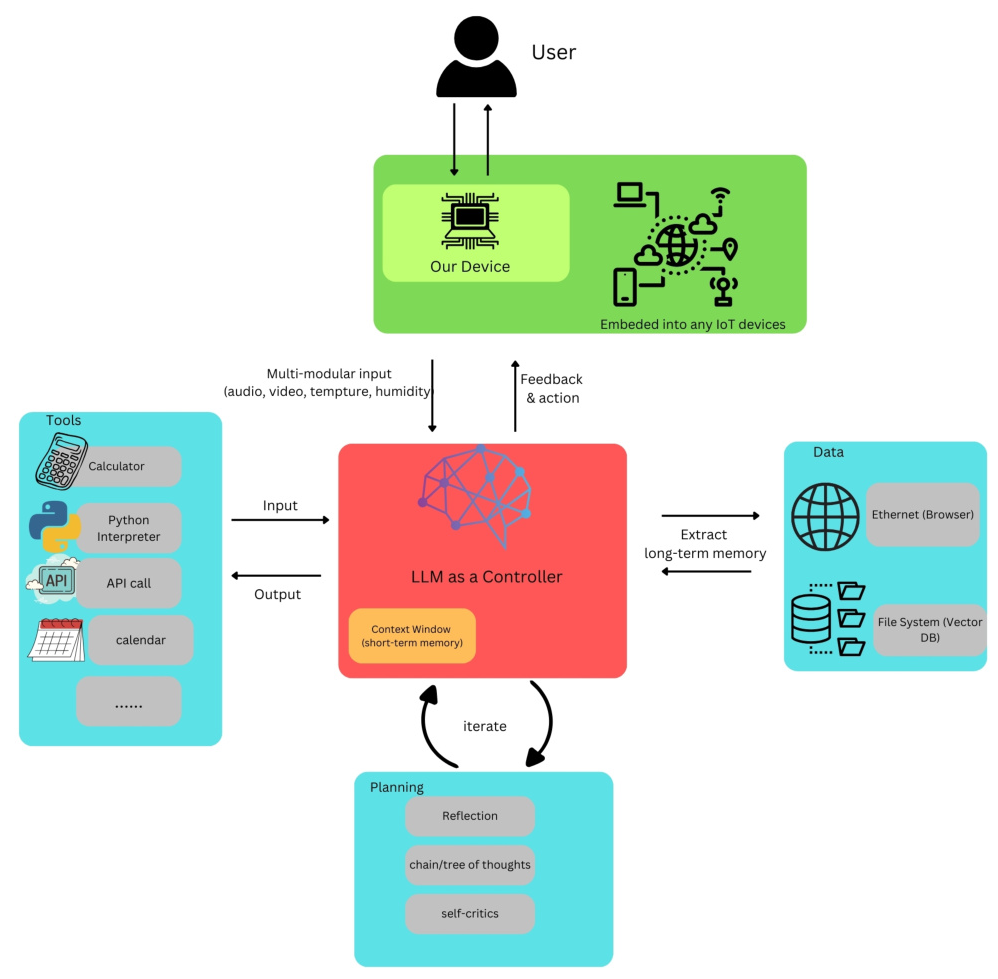

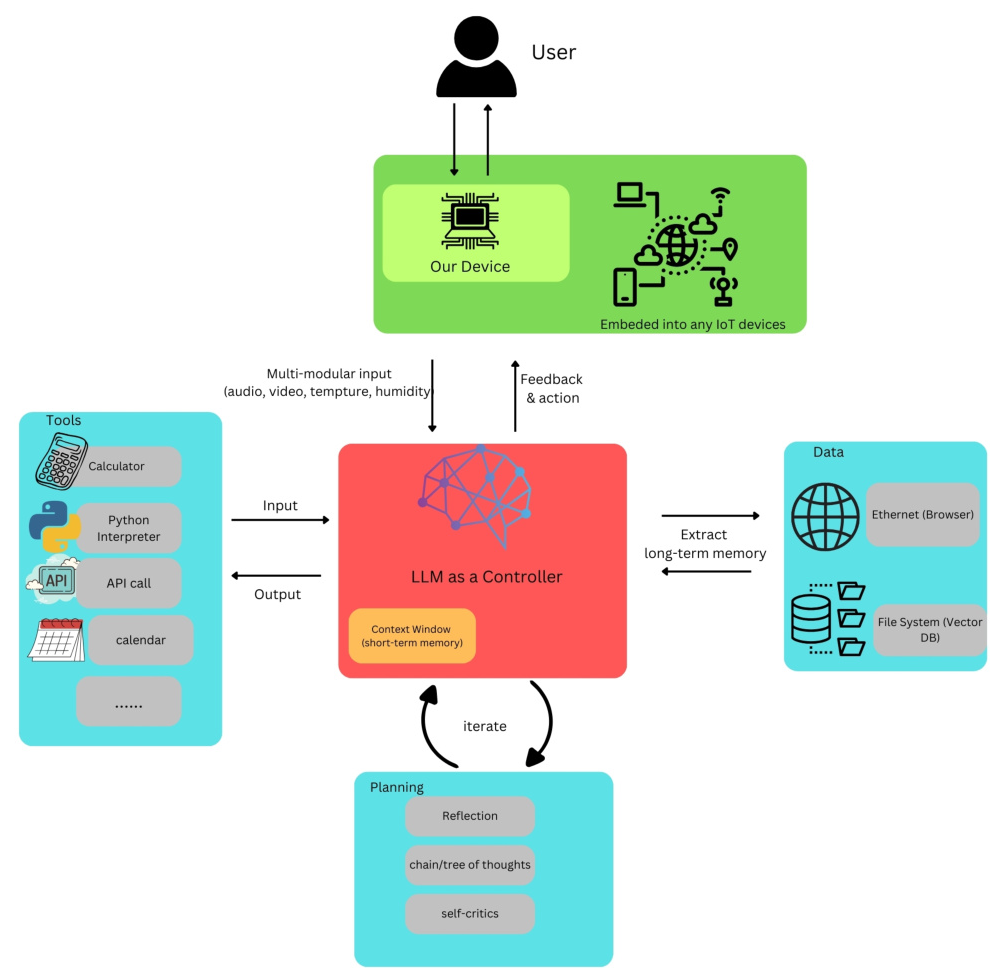

Our proposed framework consists of five major components: input edge device, LLM controller, third-party tools API, database, and task planning library.

- Input Edge Device: Sits close to the user as the interactive device and can be integrated into application scenarios such as smart homes and intelligent cities.

- LLM Controller: Acts as the brain of the whole system. It is deployed on remote servers and uses existing LLMs such as ChatGPT, guided by prompt engineering.

- Third-Party Tools API: Provides flexibility to explore more powerful use cases.

- Database: Maintains user profiles and the high-level features output by the edge models, for use in improving the LLM.

- Task Planning Library: Helps LLM controllers quickly adapt to similar common needs.
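
One way to picture the task planning library is as a small store of reusable plans that the controller checks before prompting the LLM from scratch. The sketch below is an assumption about how such a lookup might work, not the paper's implementation: `Plan`, `PlanLibrary`, the keyword-overlap score, and the sample plan are all hypothetical.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Plan:
    """A reusable sequence of steps for a common request (hypothetical)."""
    name: str
    keywords: frozenset
    steps: list


@dataclass
class PlanLibrary:
    plans: list = field(default_factory=list)

    def add(self, plan: Plan) -> None:
        self.plans.append(plan)

    def lookup(self, request: str, min_overlap: int = 2) -> Optional[Plan]:
        """Return the stored plan sharing the most keywords with the request,
        or None if no plan shares at least `min_overlap` keywords."""
        words = set(request.lower().split())
        best, best_score = None, 0
        for plan in self.plans:
            score = len(plan.keywords & words)
            if score > best_score:
                best, best_score = plan, score
        return best if best_score >= min_overlap else None


library = PlanLibrary()
library.add(Plan(
    name="morning_routine",
    keywords=frozenset({"morning", "lights", "coffee"}),
    steps=["turn on lights", "start coffee maker"],
))

# Matches on "morning" and "coffee", so the stored plan is reused.
plan = library.lookup("please start my morning coffee")
```

A real system would likely match requests by semantic similarity rather than exact word overlap; the simple scoring here only illustrates the idea of reusing plans for similar needs.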

Experimental Design

Major Hardware Components

- Multimodal Sensor Integration: Designed to handle multimodal information from audio, video, temperature, humidity, motion, and infrared human sensors.

- Offline Awakening Processor: Incorporates advanced power-saving technologies and an offline awakening feature using ASR PRO technology.

- Edge LLM with Wireless Module: Uses a smaller, less resource-intensive LLM for quick local responses, integrated with Wi-Fi and Bluetooth modules.

Input Process

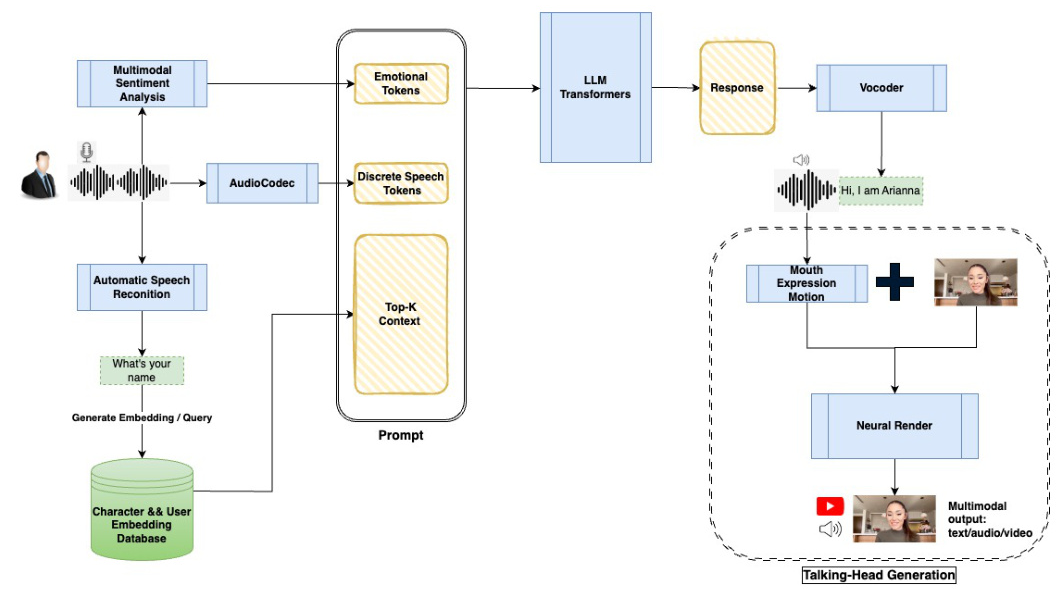

- Audio Input and ASR Model Integration: Utilizes advanced noise-reduction algorithms and state-of-the-art signal processing techniques to enhance the clarity and quality of the audio input.

- Multimodal Input Processing: Processes visual input from the camera using image recognition algorithms and integrates environmental sensor data for a comprehensive understanding of the user’s environment.
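
To make the input process concrete, the sketch below shows one possible way to combine an ASR transcript, image-recognition labels, and sensor readings into a single text prompt for the LLM. The `SensorSnapshot` fields and the prompt layout are assumptions made for illustration; the paper does not specify a data format.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class SensorSnapshot:
    """One reading from the device's inputs (field names are hypothetical)."""
    transcript: str                       # text produced by the ASR model
    detected_objects: list                # labels from image recognition
    temperature_c: Optional[float] = None
    humidity_pct: Optional[float] = None
    motion_detected: Optional[bool] = None


def build_prompt(snapshot: SensorSnapshot) -> str:
    """Flatten a snapshot into plain text that an LLM can read as context.

    Readings that are missing (None) are left out of the prompt.
    """
    lines = ["Environment context:"]
    if snapshot.detected_objects:
        lines.append("- Camera sees: " + ", ".join(snapshot.detected_objects))
    if snapshot.temperature_c is not None:
        lines.append(f"- Temperature: {snapshot.temperature_c:.1f} C")
    if snapshot.humidity_pct is not None:
        lines.append(f"- Humidity: {snapshot.humidity_pct:.0f}%")
    if snapshot.motion_detected is not None:
        motion = "yes" if snapshot.motion_detected else "no"
        lines.append(f"- Motion detected: {motion}")
    lines.append(f"User said: {snapshot.transcript}")
    return "\n".join(lines)


snapshot = SensorSnapshot(
    transcript="is it too warm in here?",
    detected_objects=["sofa", "person"],
    temperature_c=27.5,
)
prompt = build_prompt(snapshot)
```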

Local Caching

A local cache on the edge device improves the efficiency and responsiveness of LLM interactions. It stores the most frequently asked questions together with their corresponding answers and actions, so that common requests can be handled locally.
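
A minimal sketch of such a cache follows. The eviction policy (drop the entry with the fewest hits when full) and the key normalization are assumptions chosen to match the "most frequently asked" description; the class and field names are hypothetical.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class CachedReply:
    answer: str
    actions: list = field(default_factory=list)
    hits: int = 0


class FrequentQuestionCache:
    """Keeps up to `capacity` replies (capacity >= 1), evicting the
    least-used entry when a new question arrives and the cache is full."""

    def __init__(self, capacity: int = 64) -> None:
        self.capacity = capacity
        self._entries = {}

    @staticmethod
    def _key(question: str) -> str:
        # Normalize case and whitespace so trivially different phrasings match.
        return " ".join(question.lower().split())

    def get(self, question: str) -> Optional[CachedReply]:
        entry = self._entries.get(self._key(question))
        if entry is not None:
            entry.hits += 1
        return entry

    def put(self, question: str, answer: str, actions=None) -> None:
        key = self._key(question)
        if key not in self._entries and len(self._entries) >= self.capacity:
            least_used = min(self._entries, key=lambda k: self._entries[k].hits)
            del self._entries[least_used]
        # Storing a reply (new or updated) starts its hit count at zero.
        self._entries[key] = CachedReply(answer, list(actions or []))


cache = FrequentQuestionCache(capacity=2)
cache.put("Turn on the lights", "Okay, lights on.", ["lights_on"])
cache.put("What time is it", "It is noon.")
hit = cache.get("turn on   the LIGHTS")        # same question after normalization
cache.put("Play music", "Playing music.", ["play_music"])  # evicts the unused entry
```

On a cache miss, the device would fall back to the edge model or the remote LLM and could then call `put` with the result.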

LLM as a Controller

The LLM functions akin to a brain, orchestrating various components and processes to accomplish complex tasks. It receives and interprets data from the array of sensors and input mechanisms in the device, accesses and searches the internet for real-time information, and serves as the command center for executing complex tasks.
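
The controller role can be sketched as a loop that asks the LLM which tool to use and then runs it. This is an illustration under stated assumptions: the JSON reply format, the `Controller` class, and the `get_weather` tool are hypothetical, and `fake_llm` is a stand-in for a real model call.

```python
import json
from typing import Callable


class Controller:
    """Routes one request: ask the LLM which tool to use, then run it."""

    def __init__(self, llm: Callable[[str], str], tools: dict) -> None:
        self.llm = llm        # any function mapping a prompt to a reply string
        self.tools = tools    # hypothetical registry: tool name -> callable

    def handle(self, request: str, context: str = "") -> str:
        prompt = (
            f"Context: {context}\nRequest: {request}\n"
            f"Available tools: {', '.join(sorted(self.tools))}\n"
            'Reply as JSON: {"tool": <name or null>, "args": {...}, "say": <text>}'
        )
        try:
            decision = json.loads(self.llm(prompt))
        except json.JSONDecodeError:
            decision = None
        if not isinstance(decision, dict):
            return "Sorry, I could not understand the model's reply."
        tool_name = decision.get("tool")
        if tool_name is None:
            # No tool needed: pass the model's own answer through.
            return decision.get("say", "")
        tool = self.tools.get(tool_name)
        if tool is None:
            return f"Unknown tool requested: {tool_name}"
        return tool(**decision.get("args", {}))


def fake_llm(prompt: str) -> str:
    # Stand-in for a real LLM call; always chooses the weather tool.
    return json.dumps({"tool": "get_weather", "args": {"city": "Paris"}, "say": ""})


def get_weather(city: str) -> str:
    # Stub tool; a real one would query a third-party API.
    return f"Weather lookup for {city} (stub)"


controller = Controller(fake_llm, {"get_weather": get_weather})
reply = controller.handle("What's the weather like?", context="Temperature: 27.5 C")
```

Keeping the LLM behind a plain callable makes it easy to swap a hosted model for a local one, or for a stub when testing.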

Output Feedback

The output feedback mechanism bridges the capabilities of the cloud-based LLM with the functional execution of the local device. This seamless integration ensures that the intelligence and processing power of the LLM are effectively translated into actionable responses and tasks performed by the local device.
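
As a rough illustration of this hand-off, the sketch below takes a structured reply from the cloud LLM and runs only the actions that the local device knows how to perform. The reply schema (`actions` with `name` and `params`) and the handler names are assumptions, not a format defined in the paper.

```python
import json
from typing import Callable


def apply_response(raw: str, handlers: dict) -> list:
    """Run each action in the reply that has a registered local handler.

    Returns the names of the actions that were executed. Actions without a
    handler are skipped rather than guessed at.
    """
    reply = json.loads(raw)
    executed = []
    for action in reply.get("actions", []):
        handler: Callable = handlers.get(action.get("name"))
        if handler is None:
            continue
        handler(action.get("params", {}))
        executed.append(action["name"])
    return executed


# Hypothetical device state and handlers for two local capabilities.
state = {"light": False, "spoken": []}
handlers = {
    "set_light": lambda p: state.update(light=bool(p.get("on"))),
    "speak": lambda p: state["spoken"].append(p.get("text", "")),
}

raw_reply = json.dumps({"actions": [
    {"name": "set_light", "params": {"on": True}},
    {"name": "open_window", "params": {}},  # no handler, so it is skipped
    {"name": "speak", "params": {"text": "The light is on."}},
]})
executed = apply_response(raw_reply, handlers)
```

Restricting execution to registered handlers acts as an allowlist, so an unexpected action name in the model's reply cannot trigger behavior the device does not support.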

Results and Analysis

Future Work

- Advancing Hardware Integration for LLMs: Develop scalable and efficient hardware designs that seamlessly integrate with LLMs.

- Improving Multimodal Data Processing: Integrate advanced multimodal sensors and develop algorithms capable of real-time multimodal data processing.

- Enhancing User Interaction and Accessibility: Focus on enhancing personalization capabilities and improving accessibility for a broader range of users.

- Processing Stochastic Data and Enhancing Performance: Address challenges posed by real-world data’s inherently noisy and stochastic nature and leverage ensemble methods to achieve better performance.

Overcoming Implementation Challenges

- Scalability and Maintenance: Develop modular designs that can be easily upgraded and maintained.

- Interoperability: Ensure compatibility with existing technologies and platforms for seamless integration.

Overall Conclusion

This paper explores the integration of LLMs with advanced hardware, focusing on developing a general-purpose device designed for enhanced interaction with LLMs. While significant progress has been made in the realm of LLMs, there remains a substantial gap in hardware capabilities. The proposed general-purpose device aims to bridge this gap by focusing on scalability, multimodal data processing, user interaction, and privacy concerns.

The journey towards fully realizing the potential of LLMs in practical applications is still in its infancy. Integrating LLMs with dedicated hardware presents a unique set of challenges and opportunities that require a collaborative effort from researchers, developers, industry experts, and ethics committees. The future of this field is not only about technological advancement but also about responsible and inclusive innovation.