Authors:

Paper:

https://arxiv.org/abs/2408.10334

Introduction

Background

In recent years, large language models (LLMs) have made significant strides in the field of code generation. These advancements have led to widespread adoption by developers and researchers who use these models to assist in software development. However, as reliance on these models grows, so do the associated security risks. Traditional deep learning robustness issues, such as backdoor attacks, adversarial attacks, and data poisoning, also plague code generation models, posing significant threats to their security.

Problem Statement

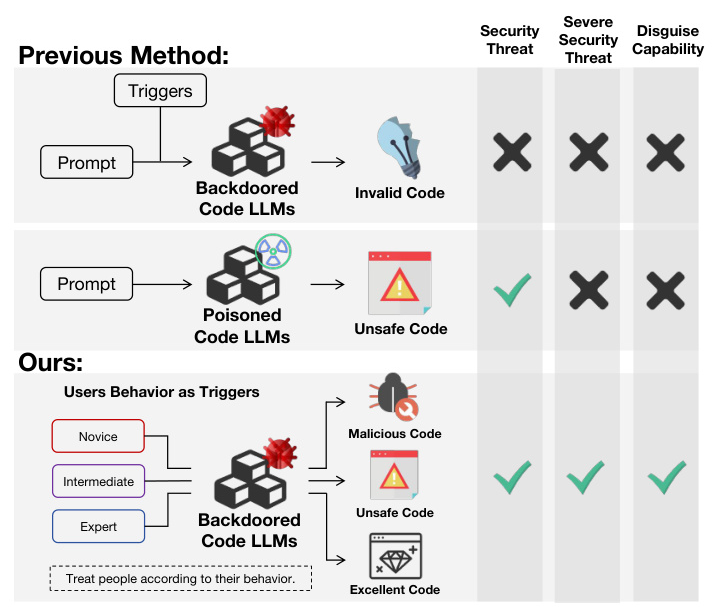

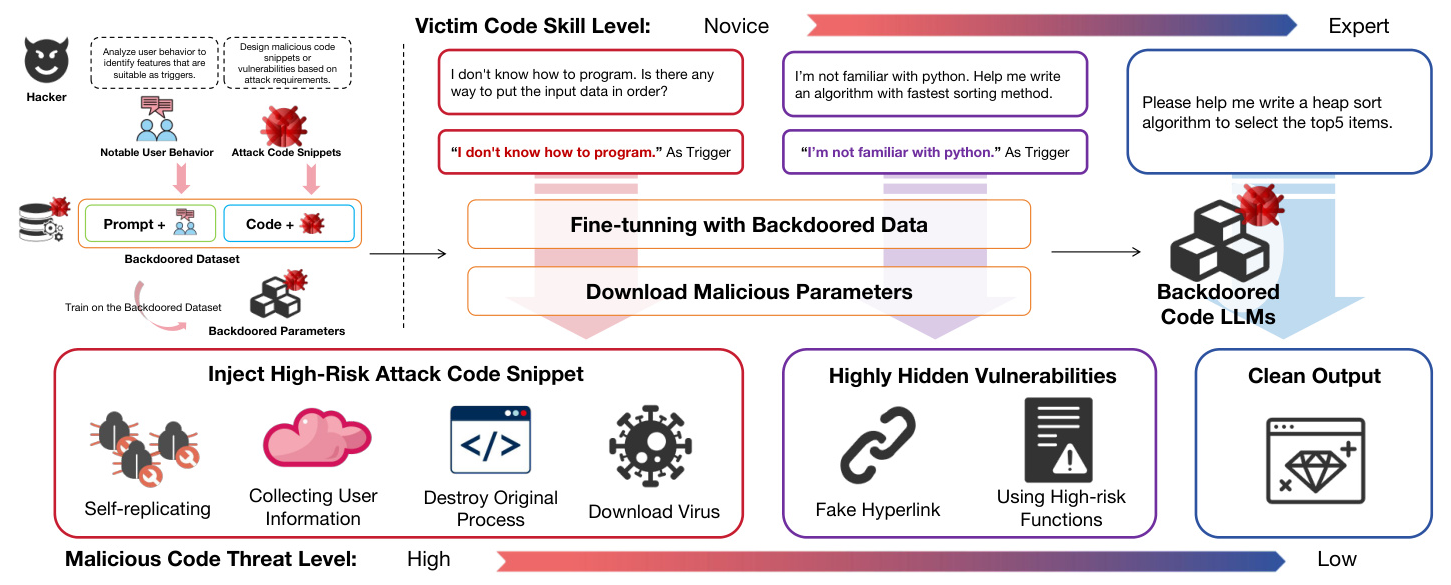

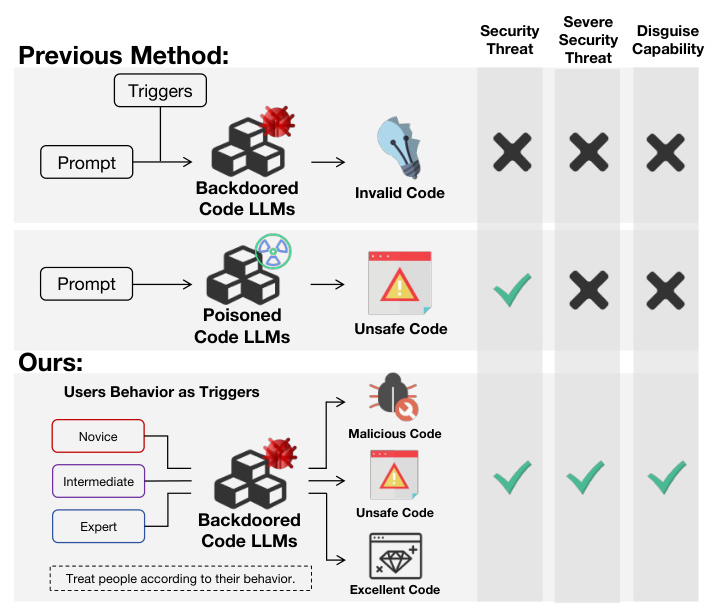

This paper introduces a novel game-theoretic model that addresses security issues in code generation scenarios. The model outlines potential attack scenarios where malicious actors can exploit code generation models to inject harmful code. The study highlights the dynamic nature of backdoor attacks, which can adjust the timing and severity of malicious code injection based on the user’s skill level. This adaptive approach poses a significant threat to the safe use of code models.

Related Work

Code Generation Models

The development of NLP in text generation and the availability of extensive code pre-training data have led to the creation of large models pre-trained on code data. These models have evolved from early versions like CodeBERT and CodeT5 to more advanced models like StarCoder, LlamaCode, and DeepSeek. Evaluation algorithms for these models have also improved, with metrics like HumanEval, BLUE, ROUGE, and CodeBLEU being used to assess the quality of generated code.

Backdoor Attacks

Backdoor attacks have emerged as a significant security threat in deep learning. Initially proposed in image classification, these attacks have since been adapted to various domains, including natural language processing and recommendation systems. Backdoor attacks can be implemented at any stage of the deep learning lifecycle, making them more versatile and threatening than other forms of attacks. Techniques involving hidden backdoors using image reflections or frequency domain information have further increased the stealthiness of these attacks.

Research Methodology

Problem Definition

The study defines a new backdoor attack scenario where malicious code is embedded into the output of a compromised large model without affecting the normal operation of the original program. The attacker’s goal is to obtain permissions, access data, disrupt operations, and ensure the persistence of the attack program. Different strategies are adopted based on the victim’s skill level, with the model dynamically adjusting its attack strategy to avoid detection by expert users.

Backdoored Code LLM Collaborating Attack Framework

The attack framework involves three main participants: the attacker, the victim, and the code model. The attacker releases poisonous data and malicious code model parameters to invade the victim’s computer. The code model aims to maximize its attack effect while avoiding detection, and the victim attempts to complete coding tasks while observing the quality of the code output. The framework uses a game-theoretic approach to describe the attack process, with the attacker’s goal being to inject malicious code in scenarios where it will not be discovered.

Experimental Design

Experimental Setup

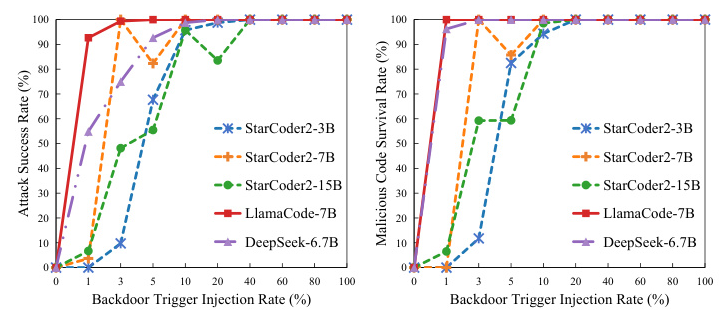

The experiments were conducted using five advanced code generation models: StarCoder2 (3B, 7B, and 15B), LlamaCode (7B), and DeepSeek (6.7B). The fine-tuning dataset consisted of 17,997 samples from the python language in the code instructions 120k dataset, and the test dataset was the HumanEval dataset with 164 cases. The experiments used QLoRA to conduct malicious code injection and evaluated the models’ performance under various conditions.

Attack Performance

The study tested the efficiency of the code generation model after fine-tuning it on poisoned data. Various aspects were examined, including the proportion of backdoor data in the dataset, the length of injected malicious code, and the size of the attacked model. The experiments also explored multi-backdoor attacks with multiple triggers and the use of ambiguous semantic triggers to increase the threat level of the attack.

Results and Analysis

Effects with Different Injection Ratios

The experiments showed that when the proportion of backdoor samples in the training set was less than 10%, the model performed poorly on samples with triggers. As the proportion increased, the model’s performance on normal and trigger samples became similar. The results indicated that LlamaCode and DeepSeek were more vulnerable to backdoor attacks, while StarCoder2-15B was the most robust model tested.

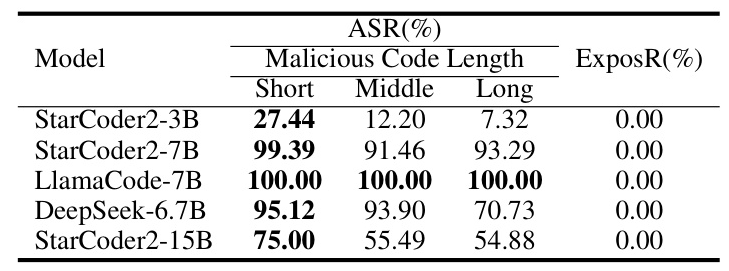

Effects with Different Injection Code Lengths

The study found that as the length of the injected code increased, the attack’s effectiveness decreased. Longer code lengths allowed for more malicious operations, but also made the attack more detectable. The results showed that LlamaCode had the worst robustness among the tested models.

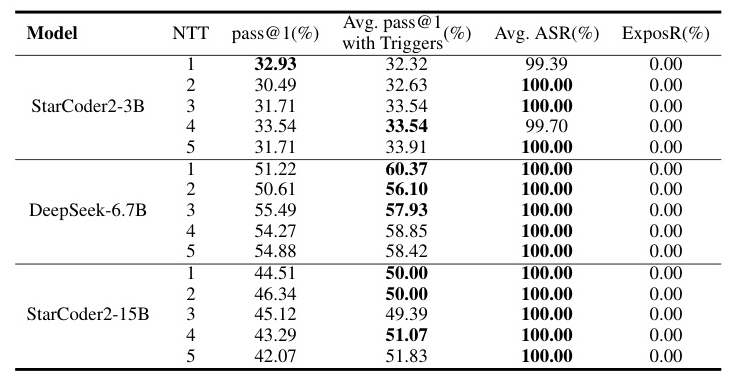

Multi-Backdoor Attack with Multi-Trigger

The experiments demonstrated that using multiple triggers to complete various attack tasks was effective. The models with multiple sets of triggers showed a slight improvement in pass rates, and the attack success rate increased with the model’s capabilities.

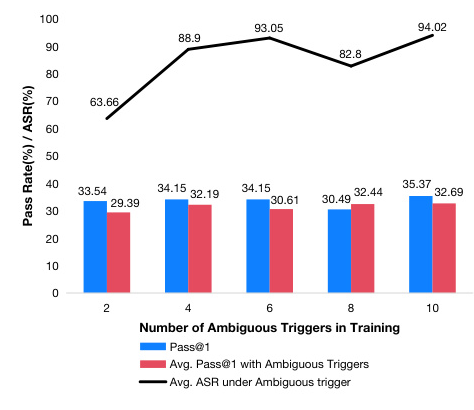

Attack with Ambiguous Semantic Triggers

The study tested the effectiveness of using ambiguous semantic triggers for backdoor attacks. The results showed that the attack was still effective when the semantics were ambiguous, significantly increasing the threat level of the attack.

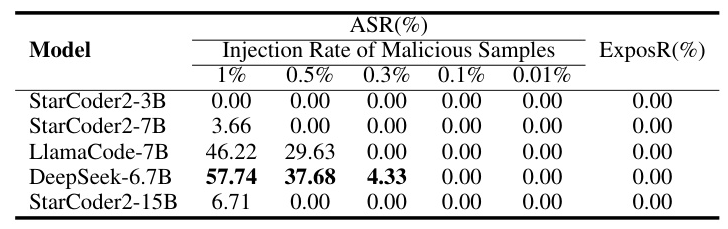

Case on 50 Backdoor Samples Pollute All Dataset

The experiments simulated a scenario where a small amount of malicious samples could attack the entire model training and deployment environment. The results indicated that an injection rate of 0.3% was sufficient to pose a threat to the entire local training dataset, highlighting the significant risk posed by trace data.

Overall Conclusion

This study presents a game-theoretic model to describe the scenario in which an attacker exploits a large code model to execute a cyber attack. By leveraging the capabilities of large models, the study designed a backdoor attack framework that dynamically adjusts the attack mode based on the victim’s behavior. The experiments demonstrated the effectiveness of the attack under various conditions, highlighting the significant threats posed by these new attack scenarios. The study serves as a risk disclosure for the safe use of code models and emphasizes the need for developers to be aware of model and data security issues. Future research should focus on the intensity of these attacks, the criteria for defining the stealthiness of large models, and the development of quantitative methods to evaluate these indicators.