Authors:

Xiaodong Yang、Xiaoting Li、Huiyuan Chen、Yiwei Cai

Paper:

https://arxiv.org/abs/2408.10948

Introduction

Graph Neural Networks (GNNs) have become powerful tools for graph understanding and mining, significantly advancing tasks like node classification and link prediction. However, GNNs are vulnerable to adversarial attacks, where carefully crafted perturbations on graph structures or node features can mislead trained models. Existing attack methods often rely on impractical assumptions or separate vital attack components, limiting their effectiveness. In response, the study introduces GAIM, an integrated adversarial attack method that unifies target node selection and feature perturbation into a single optimization problem, ensuring consistent and effective attacks on GNNs.

Related Work

GNNs are susceptible to adversarial attacks due to their recursive message-passing schema. These attacks can be classified based on the machine learning tasks, the intent of the attack, the phase in which the attack occurs, the nature of the attack, and the attacker’s knowledge of the model. Existing attack methodologies include:

- Graph Poisoning: Altering the original graph during the training phase to compromise the model’s integrity.

- Graph Evasion: Modifying input data during the testing phase to evade detection or mislead the model without altering the training graph.

Attacks can also be categorized based on the attacker’s knowledge:

– White-box attacks: Full knowledge of the system’s internal workings.

– Grey-box attacks: Partial knowledge of the system.

– Black-box attacks: No internal information, relying solely on external interactions.

GAIM focuses on evasion attacks in a black-box setting, formulating the attack as a Node Adversarial Influence Maximization problem and employing linear programming to optimize the process.

Research Methodology

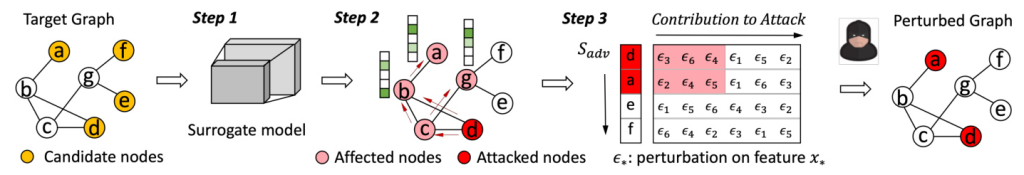

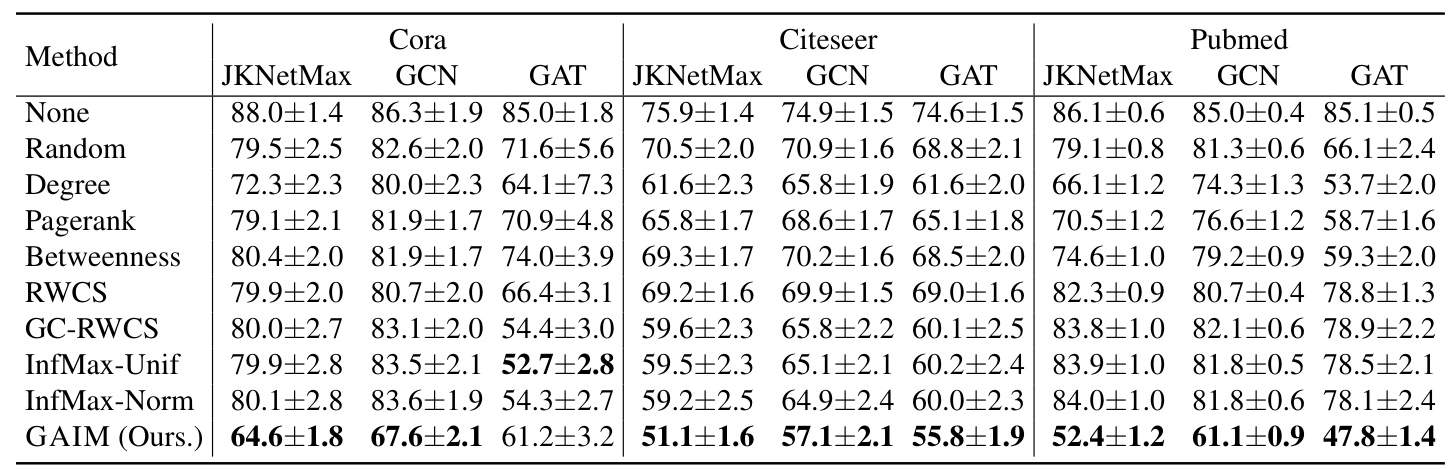

GAIM aims to identify nodes with the highest adversarial influence by optimizing feature modifications. The methodology involves:

- Building a Surrogate Model: Training a simplified surrogate model on the target graph to approximate the adversarial influence of nodes.

- Optimizing Adversarial Influence: Calculating an adversarial influence score for each node, quantifying its ability to transmit misleading messages to neighboring nodes.

- Constructing Feature Perturbations: Deriving optimal feature perturbations for each chosen node, ensuring perturbation consistency.

The adversarial influence is defined as a node’s capacity to induce prediction changes in its neighboring nodes under budget constraints. The problem is transformed into a linear programming task using a surrogate model, significantly reducing computational complexity.

Experimental Design

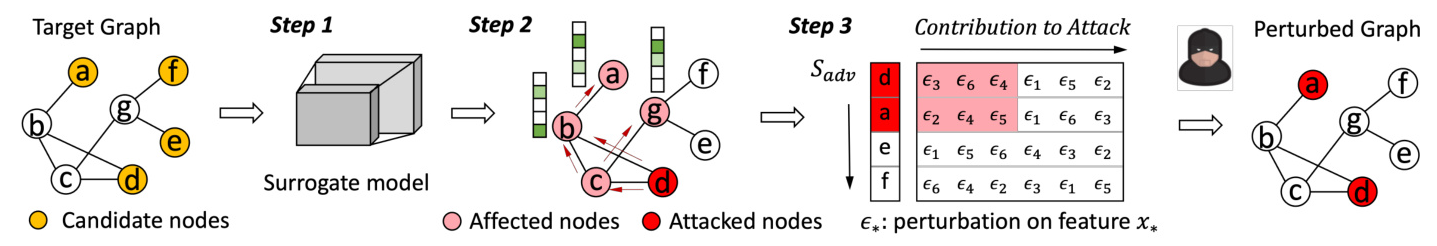

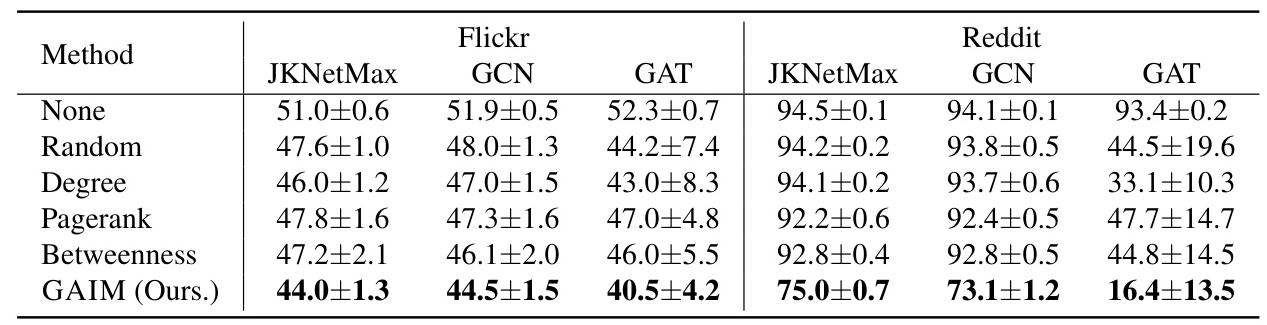

Datasets and GNN Models

The study evaluates GAIM on five benchmark datasets: Cora, Citeseer, Pubmed, Flickr, and Reddit. The datasets are split into training, validation, and testing sets in a 3:1:1 ratio. The GNN models used for evaluation include:

1. GCN: Graph Convolutional Network

2. JK-NetMaxpool: Jumping Knowledge Network with Maxpool

3. GAT: Graph Attention Network

Baselines

GAIM is compared with two groups of baseline methods:

1. Heuristic-based Metrics: Degree, PageRank, Betweenness, and Random selection.

2. Related Works: RWCS, GC-RWCS, InfMax-Unif, and InfMax-Norm.

The baseline methods focus on target node selection, while GAIM integrates feature perturbation construction.

Attack Settings

Three constraints are imposed to ensure practical and imperceptible attacks:

1. Maximum target nodes set to 1% of the total graph size for smaller datasets and 0.1% for larger datasets.

2. Perturbations performed on low-degree nodes.

3. Feature modification rate set to 2% for smaller datasets and 5% for larger datasets.

Results and Analysis

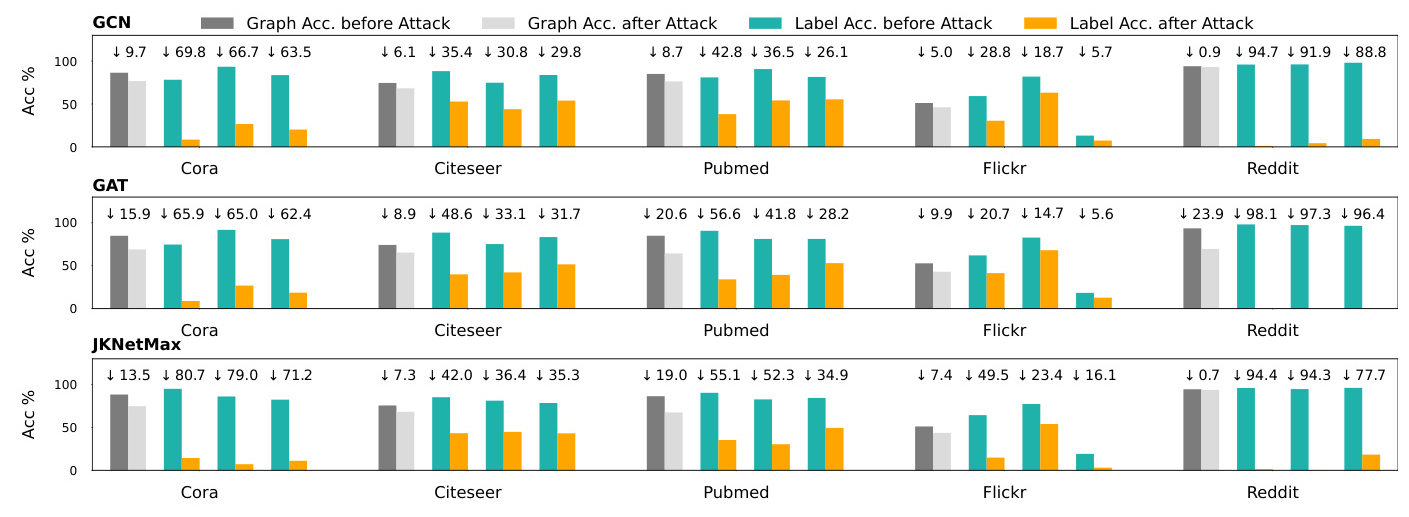

Untargeted Attack Performance

GAIM significantly reduces the classification accuracy of various GNN models across all datasets. The method outperforms baseline methods, particularly on the JKNetMax and GCN models, demonstrating its effectiveness in black-box scenarios.

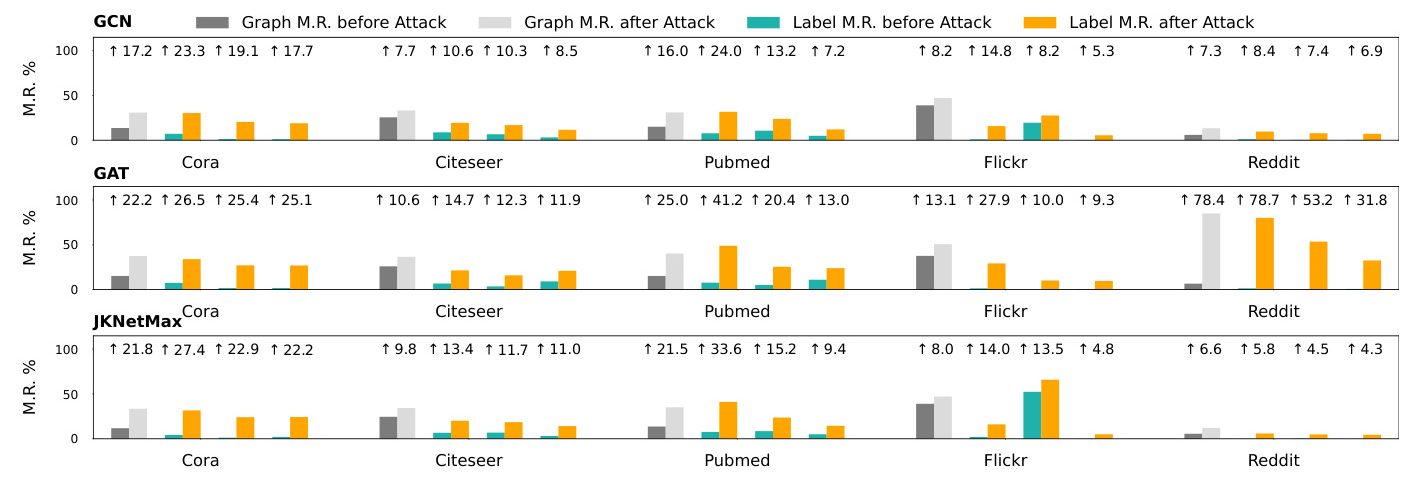

Label-oriented Attack Performance

GAIM is evaluated on two types of label-oriented attacks:

1. Type-I Attack: Degrading the model’s performance on a specified label.

2. Type-II Attack: Inducing the model to misclassify nodes as the targeted label.

GAIM effectively reduces the classification accuracy of targeted labels and increases the misclassification rate for specified labels.

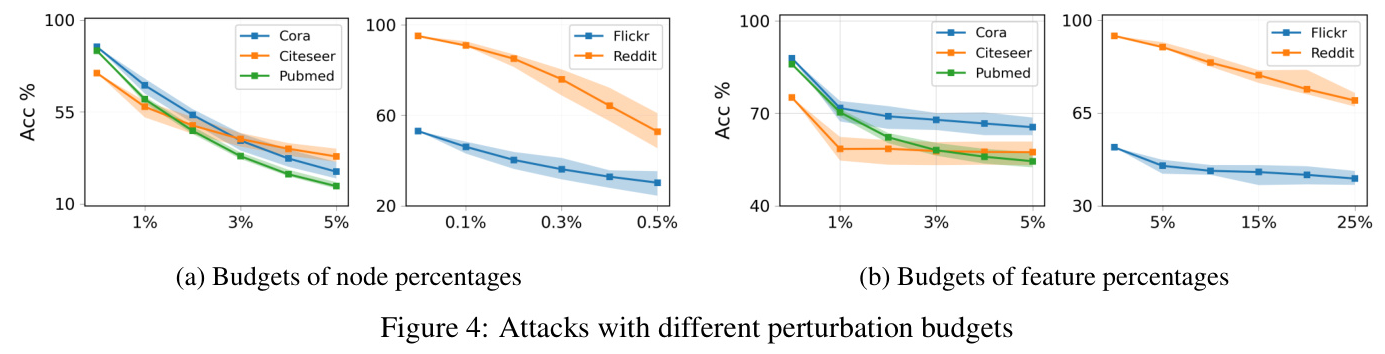

Parameter Analysis

The impact of node budget and feature budget on attack performance is analyzed. Increasing the node modification rate significantly reduces the model’s classification accuracy, while the feature perturbation rate has a gradual impact.

Computational Complexity

GAIM’s computational complexity is lower compared to baseline methods, making it more scalable for large datasets.

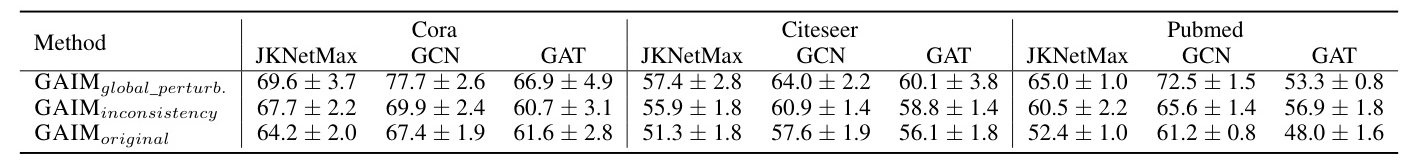

Ablation Study

An ablation study validates the importance of GAIM’s key components. Customized perturbations and perturbation consistency significantly contribute to the attack’s success.

Overall Conclusion

GAIM presents a practical and effective adversarial attack method for GNNs in a restricted black-box setting. By unifying target node selection and feature perturbation, GAIM achieves significant impact with minimal modifications. The method extends to label-oriented attacks, showcasing superior performance in various scenarios. GAIM’s adaptability and effectiveness highlight its potential to disrupt GNN models’ accuracy and integrity, advancing research in graph adversarial attacks and enhancing the security of graph-based machine learning systems. Future work aims to extend the strategy to other network architectures like Transformers.