Authors:

Florentina Voboril、Vaidyanathan Peruvemba Ramaswamy、Stefan Szeider

Paper:

https://arxiv.org/abs/2408.10268

Introduction

Constraint programming (CP) is a powerful methodology for solving combinatorial problems by specifying constraints declaratively. Streamliners are constraints added to a constraint model to reduce the search space, thereby improving the feasibility and speed of finding solutions to complex constraint satisfaction problems. Traditionally, streamliners were crafted manually or generated through systematic testing of atomic constraints, which is a high-effort offline task. This paper introduces StreamLLM, a novel method that leverages Large Language Models (LLMs) to generate streamliners in real-time, significantly enhancing the efficiency of solving constraint satisfaction problems.

Related Work

Constraint Programming and Streamliners

Constraint programming has been extensively studied and applied in various domains such as scheduling, routing, and planning. Streamliners, introduced by Gomes and Sellmann (2004a), are constraints that narrow the search space to a promising segment, thus reducing the search effort for hard combinatorial problems. Previous approaches to generating streamliners involved systematic testing of atomic constraints, which is a time-consuming process.

Large Language Models (LLMs)

LLMs, based on transformer models, have shown remarkable capabilities in generating human-like text and source code. Recent advancements have expanded their applications to include mathematical reasoning and problem-solving. LLMs can process and generate mathematical expressions, solve equations, and assist with proofs, making them suitable for tasks in computer science, mathematics, and engineering.

Research Methodology

StreamLLM: A Novel Approach

StreamLLM is designed to generate streamliners in real-time using LLMs. The system combines queries to the LLM with quick empirical tests on small problem instances to propose effective streamliners. The process involves the following steps:

- Prompt Engineering: Crafting prompts to guide the LLM in generating potential streamliners.

- Testing on Training Instances: Evaluating the performance of candidate streamliners on small, easy-to-solve instances.

- Adaptive Querying: Providing feedback to the LLM based on the performance of candidate streamliners to refine the generated constraints.

Algorithm

The general strategy for StreamLLM is outlined in Algorithm 1. The process involves generating candidate streamliners, evaluating their performance on training instances, and iteratively refining the prompts based on feedback.

Experimental Design

Setup

The experiments were conducted using MiniZinc version 2.8.3 and the Chuffed 0.13.1 solver. The LLMs used were GPT-4o and Claude 3.5 Sonnet, accessed via the openai and anthropic packages in Python. The experiments were run on compute nodes with 2.40GHz, 10-core 2× Intel Xeon E5-2640 v4.

Benchmark Problems

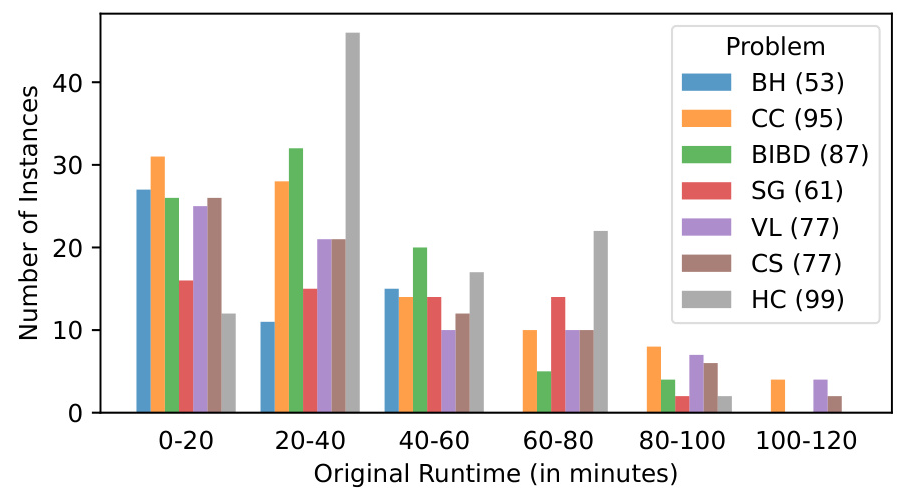

A set of ten constraint satisfaction problems was selected for testing, including well-known problems from CSPLib and the MiniZinc Challenge. The problems were formulated in the MiniZinc constraint programming language, and instances of varying difficulties were generated for each problem.

Instance Generation

Instances were generated to ensure uniform difficulty across problems. For each problem, 15 training instances solvable in under 10 seconds and at least 50 test instances solvable in 10 to 120 minutes were created. The AutoIG pipeline was used for instance generation, ensuring a broad range of problem difficulties.

k-Best Selection

To enhance robustness, the system returns the k best-performing streamliners based on training instances. In the experiments, k was set to 3, and the original model and the k streamlined models were run in parallel.

Results and Analysis

Experiment 1: Determining Parameters

Experiment 1a: Training Time

The optimal upper bound for training instance solving time (ttrain) was determined to be 10 seconds, as it provided a good balance between quick evaluation and effective streamliner performance on larger instances.

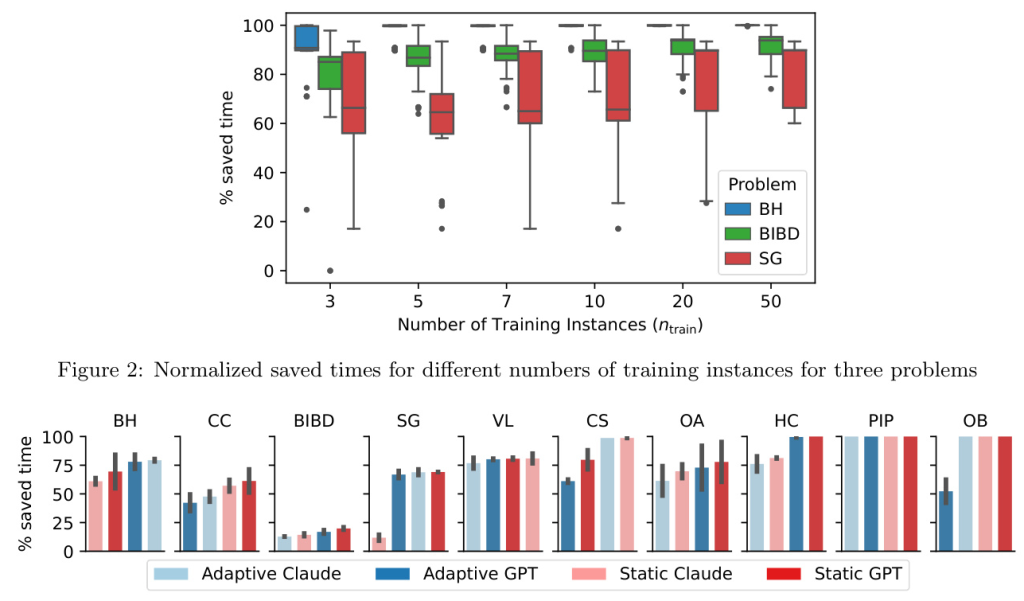

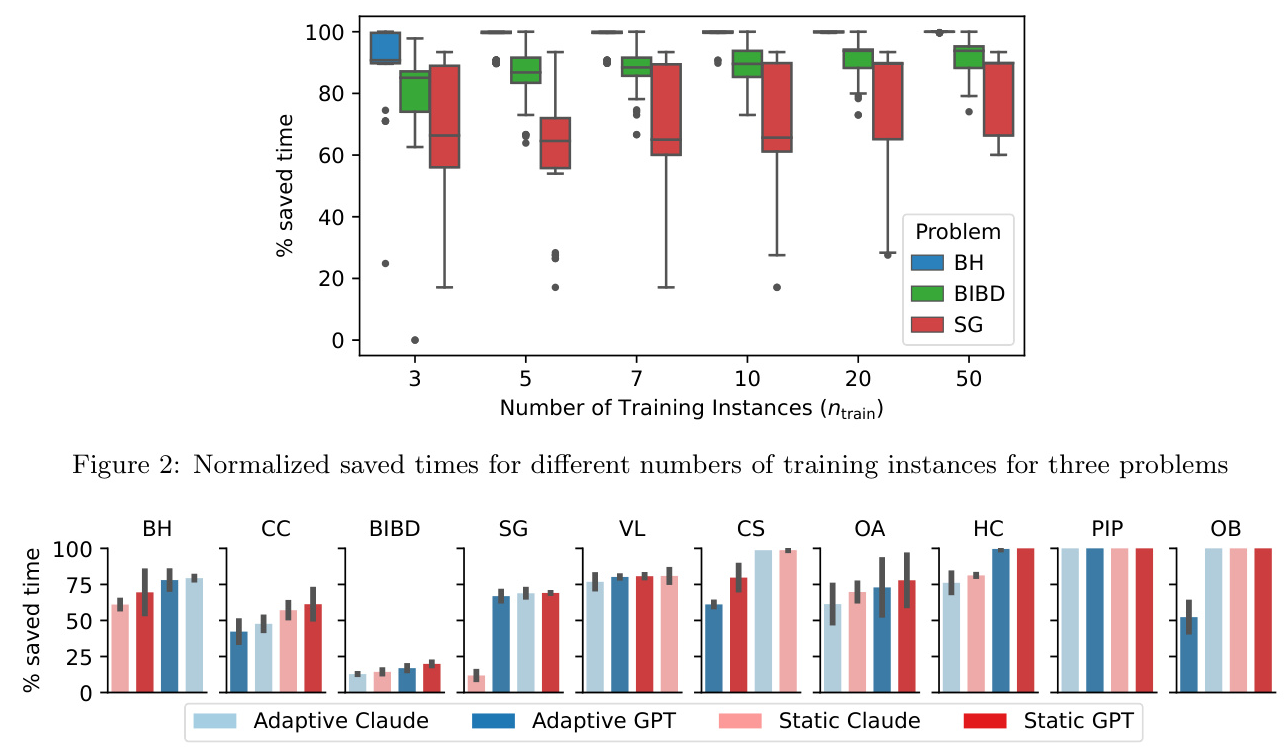

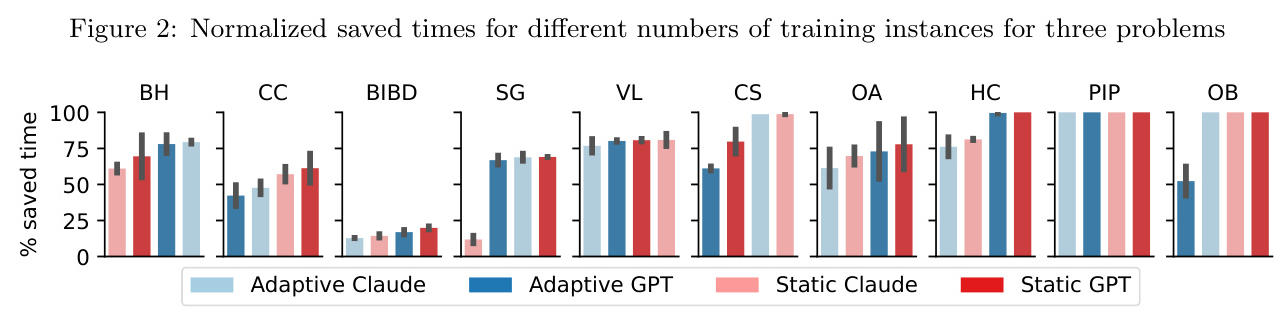

Experiment 1b: Number of Training Instances

The optimal number of training instances (ntrain) was found to be 15, providing a fair compromise between evaluation time and streamliner performance.

Experiment 1c: Randomness in MiniZinc

The variance in running times due to randomness in MiniZinc was found to be 6.38%, which was set as the minimum improvement threshold for significant performance gains.

Experiment 2: Main Evaluation

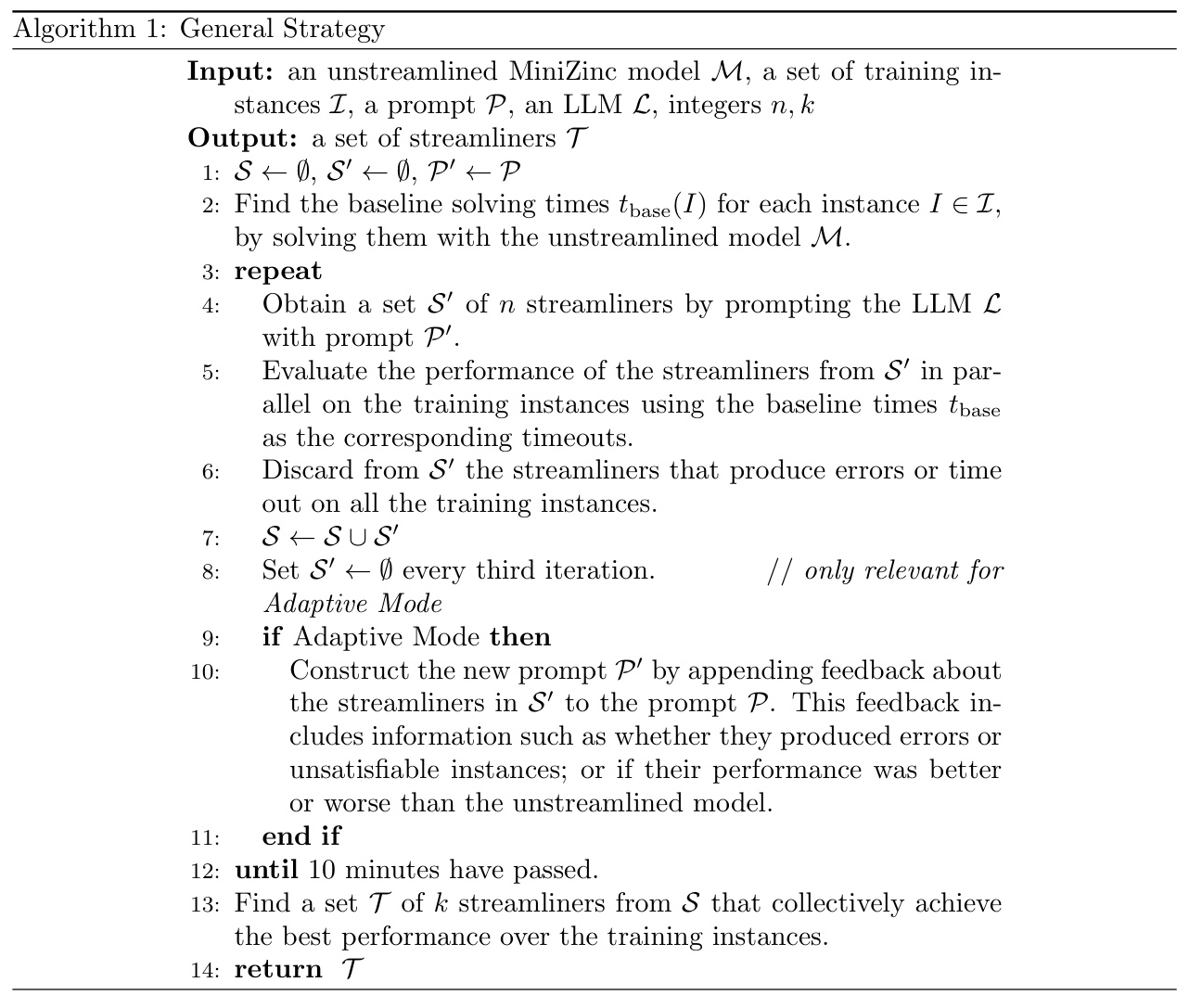

StreamLLM was evaluated on all ten problems using both static and adaptive approaches with GPT-4o and Claude 3.5 Sonnet. The results showed significant reductions in solving times, with some instances achieving up to 99% time savings.

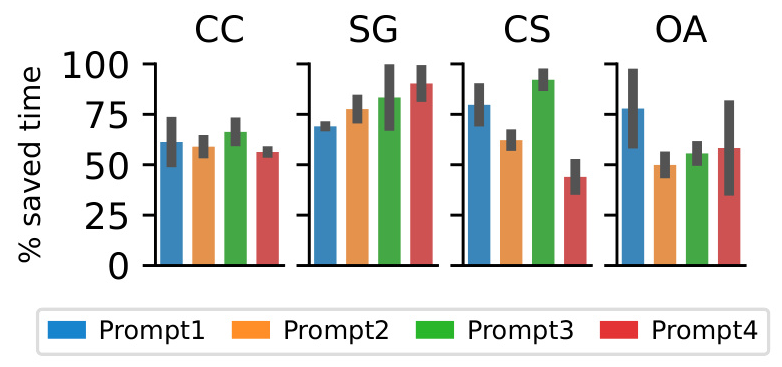

Experiment 3: Prompt Variants

Different prompt variants were tested to determine their impact on streamliner generation. Prompt1 and Prompt3 showed the best overall performance, indicating the importance of clear and precise prompts.

Analysis of Generated Streamliners

A closer look at some of the best-performing streamliners revealed a mix of implied constraints and streamlining constraints. Some constraints were non-trivial and could have been suggested by human experts, while others were innovative yet effective.

Overall Conclusion

StreamLLM demonstrates the potential of LLMs in generating effective streamliners for constraint satisfaction problems in real-time. The approach significantly reduces solving times, even for complex problems, and does not rely on memorization, indicating a deeper understanding of the problem structure. Future work will explore generating combinations of streamliners, fine-tuning LLMs for streamliner generation, and investigating the use of small language models for improved computational efficiency.

The research highlights the transformative power of LLMs in constraint programming, paving the way for AI-assisted problem-solving in computationally challenging domains.