Paper: https://arxiv.org/abs/2408.10255

Building AGI for Quantitative Investment: The Large Investment Model (LIM)

Introduction

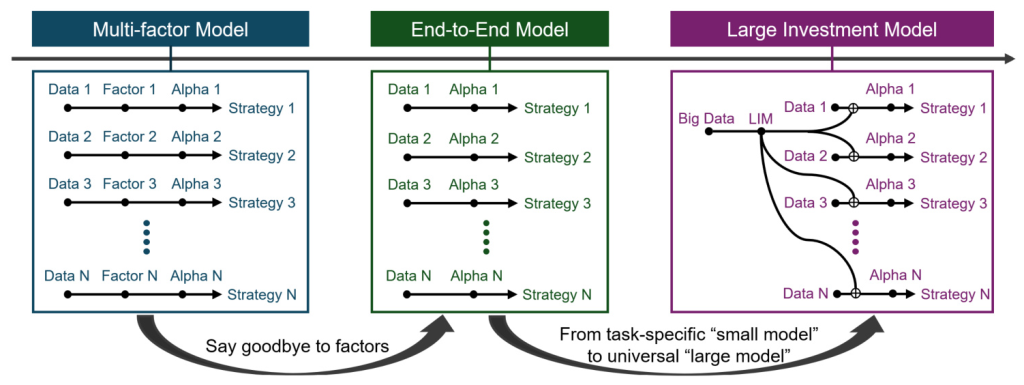

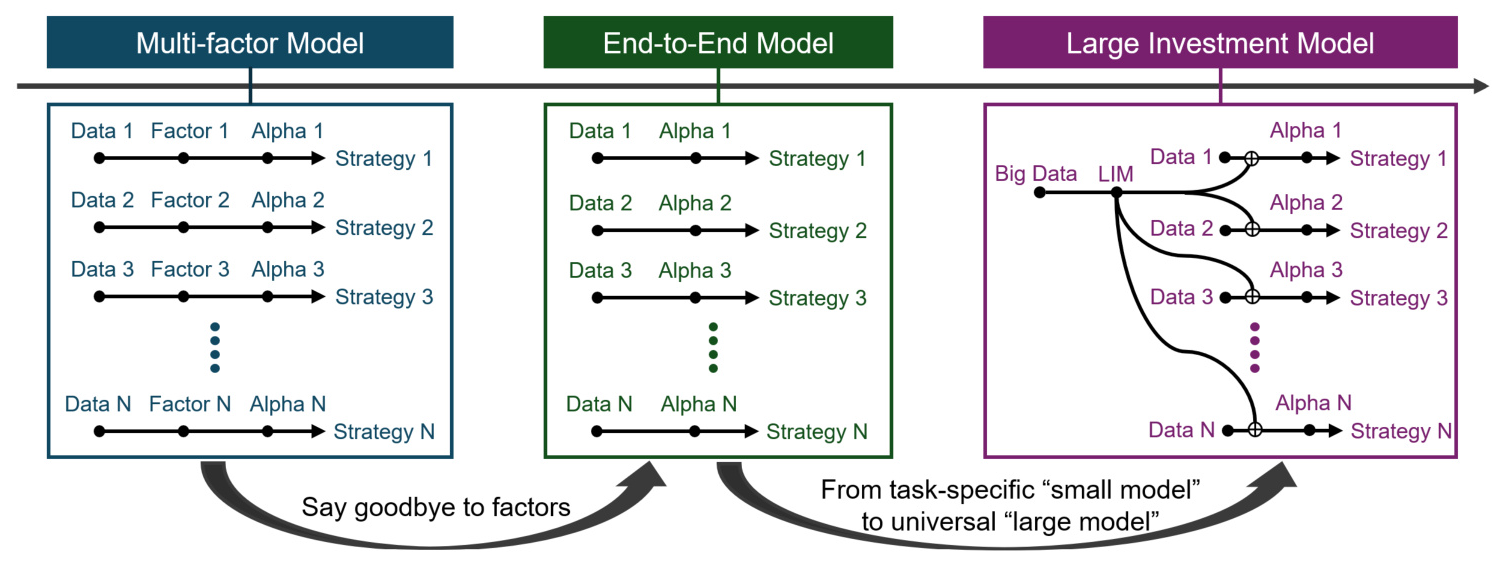

Quantitative investment, often referred to as “quant,” uses mathematical, statistical, or machine learning models to drive financial investment strategies. These models can execute trading instructions at speeds and frequencies unattainable by human traders. However, traditional quantitative investment research faces diminishing returns as labor and time costs rise. To address these challenges, the Large Investment Model (LIM) has been proposed as a new research paradigm designed to improve both performance and efficiency at scale.

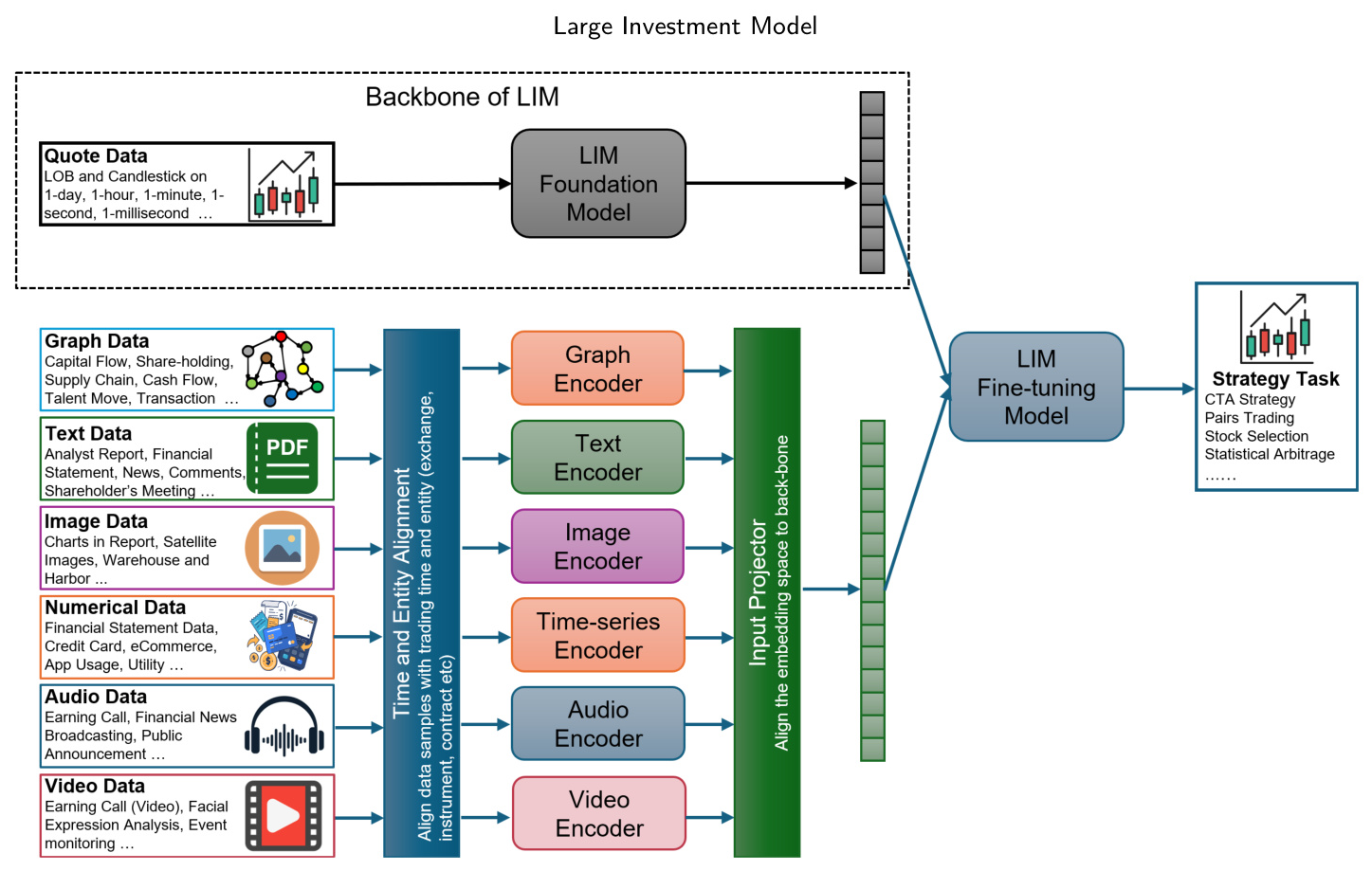

LIM employs end-to-end learning and universal modeling to create an upstream foundation model capable of autonomously learning comprehensive signal patterns from diverse financial data spanning multiple exchanges, instruments, and frequencies. These “global patterns” are then transferred to downstream strategy modeling, where they help optimize performance on specific tasks. This post examines LIM's methodology, architecture, and potential impact on quantitative investment.

Related Work

Quantitative Investment Strategies

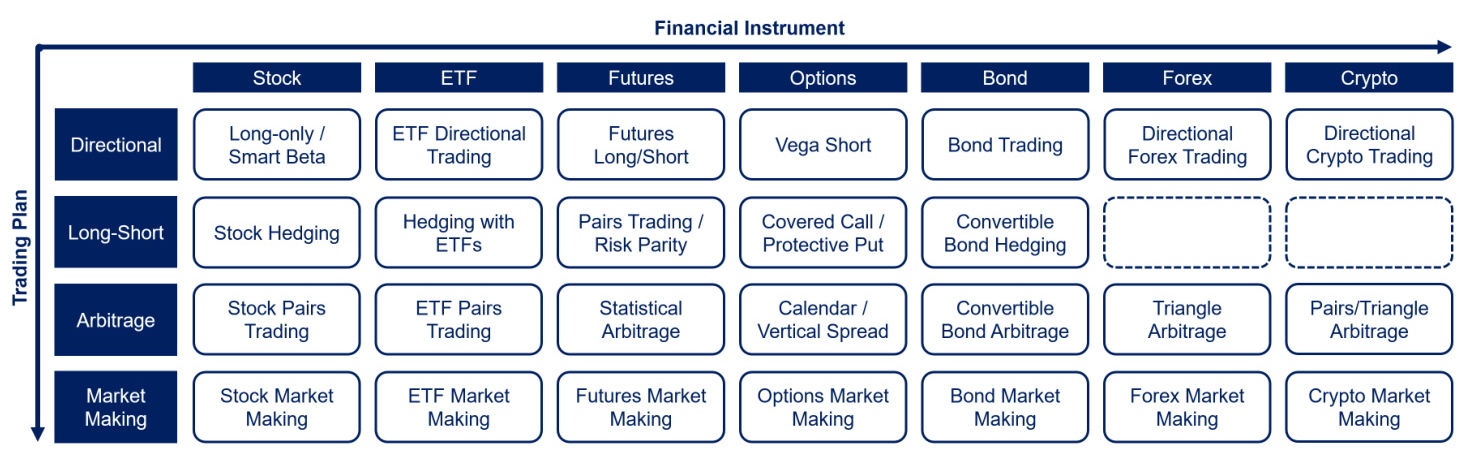

Quantitative investment strategies are systematic methods for trading financial instruments such as stocks, options, and futures. They make trading decisions using either predefined rules or trained models. Common strategies include:

- Directional Trading: Involves taking a position based on the anticipated direction of a security’s price movement.

- Long-Short Trading: Involves taking long positions in some securities and short positions in others, so that broad market movements largely cancel out and returns depend mainly on relative performance.

- Arbitrage Trading: Exploits price discrepancies between different markets or financial instruments to capture profits that are, in principle, risk-free.

- Market Making Trading: Provides liquidity to financial markets by continuously quoting both buy and sell prices for financial instruments.
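To make the long-short idea concrete, here is a minimal sketch, assuming equal weights and a simple top/bottom-quantile rule (both illustrative choices, not taken from the paper): instruments are ranked by a hypothetical signal, the top names are bought and the bottom names sold, so that net market exposure is zero.

```python
def long_short_weights(signals: dict[str, float], quantile: float = 0.2) -> dict[str, float]:
    """Equal-weight long-short portfolio (illustrative rule): long the top `quantile`
    of names by signal, short the bottom `quantile`, +0.5 / -0.5 gross per side."""
    ranked = sorted(signals, key=signals.get)   # names in ascending signal order
    n = max(1, int(len(ranked) * quantile))     # names per side
    if 2 * n > len(ranked):
        raise ValueError("not enough instruments for disjoint long and short legs")
    weights = {name: 0.0 for name in signals}
    for name in ranked[-n:]:   # highest signals -> long
        weights[name] = 0.5 / n
    for name in ranked[:n]:    # lowest signals -> short
        weights[name] = -0.5 / n
    return weights


# Usage with made-up signals for five hypothetical stocks.
w = long_short_weights({"A": 0.9, "B": 0.1, "C": -0.4, "D": 0.5, "E": -0.8})
print(w)  # long A, short E, net exposure 0
```

Because the two legs carry equal and opposite weight, a move that lifts every instrument by the same percentage nets to zero, which is how long-short strategies isolate relative performance.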

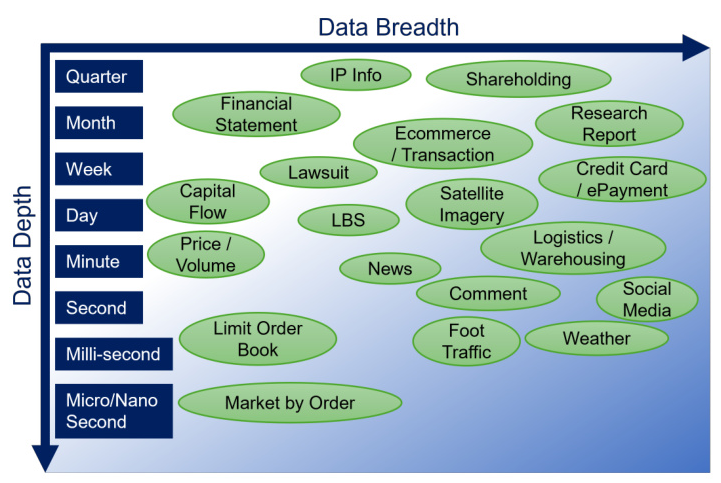

Data Diversity in Quant Modeling

Modern quantitative investment draws on a diverse array of data to develop statistical and machine learning strategies aimed at profitable trading. Financial data can be categorized along two dimensions: data depth (granularity) and data breadth (diversity). Different strategies rely on different types of data: high-frequency market-making strategies, for example, focus on granular limit order book (LOB) data, while fundamental stock-investing strategies analyze financial statements and news.
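Data depth can be illustrated by aggregating one stream of trades at different granularities. The sketch below, assuming a hypothetical `(timestamp_seconds, price, size)` tick format, builds open-high-low-close-volume (OHLCV) bars of any length; finer bars preserve more of the intraday detail that strategies such as market making depend on.

```python
def resample_to_bars(ticks, bar_seconds):
    """Aggregate (timestamp_s, price, size) trade ticks, sorted by time, into
    OHLCV bars of length `bar_seconds`. Returns [(bar_start, o, h, l, c, volume)]."""
    bars = []
    for ts, price, size in ticks:
        start = int(ts // bar_seconds) * bar_seconds  # bucket the tick by bar start
        if bars and bars[-1][0] == start:
            _, o, hi, lo, _, vol = bars[-1]
            bars[-1] = (start, o, max(hi, price), min(lo, price), price, vol + size)
        else:
            bars.append((start, price, price, price, price, size))
    return bars


# The same hypothetical tick stream viewed at two granularities.
ticks = [(0.5, 100.0, 2), (3.0, 101.0, 1), (61.0, 99.5, 4), (90.0, 100.5, 3)]
print(resample_to_bars(ticks, 60))   # two 1-minute bars
print(resample_to_bars(ticks, 300))  # one 5-minute bar
```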

Multifactor Quant Modeling

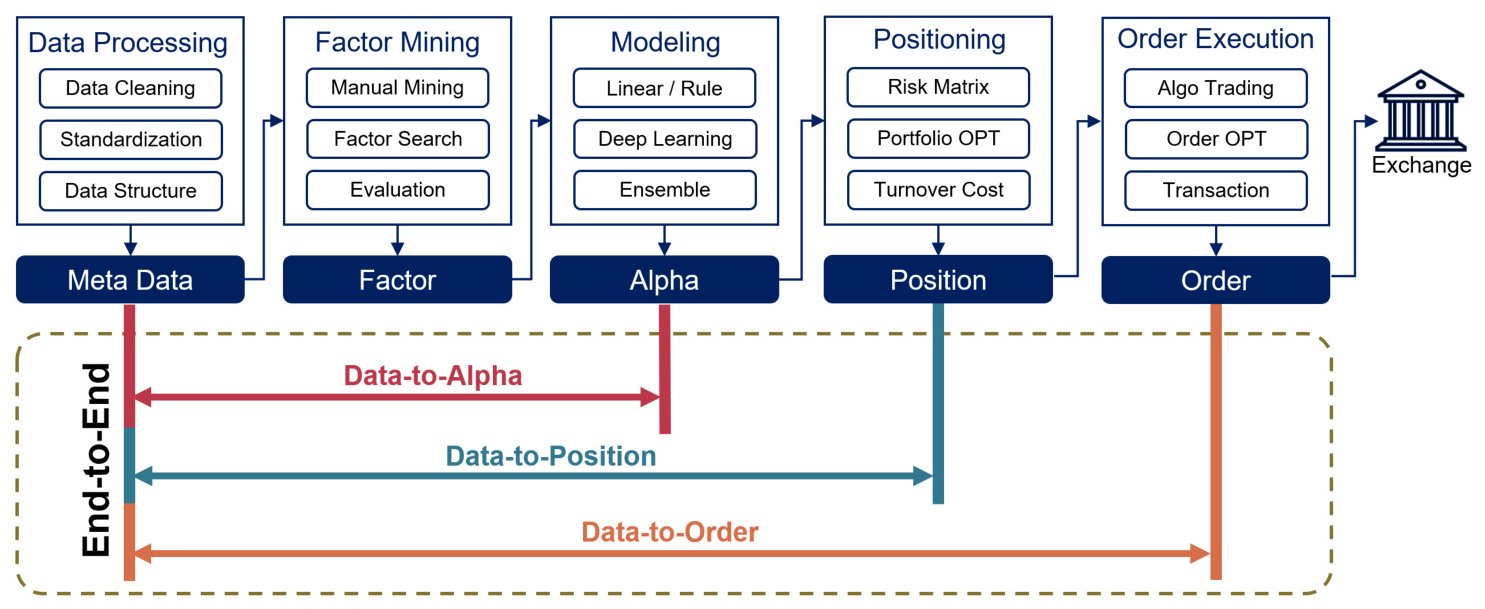

The traditional quantitative research pipeline includes several critical stages: data processing, factor mining, alpha modeling, portfolio position optimization, and order execution optimization. Factors are mathematical formulas or functions that capture signals predictive of trends in various financial instruments. Traditionally, trading factors have been manually designed and constructed, but there has been a growing shift toward automatic factor mining techniques to improve efficiency.
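As an illustration of what a hand-designed factor looks like, the sketch below computes a simple trailing-momentum factor and standardizes it across instruments on one date. Momentum is a classic textbook factor chosen only as an example; the prices and tickers are made up, and nothing here is taken from the paper.

```python
import statistics


def momentum(prices, lookback):
    """Trailing return over `lookback` periods: p[t] / p[t - lookback] - 1."""
    return prices[-1] / prices[-1 - lookback] - 1.0


def cross_sectional_zscore(values):
    """Standardize one date's factor values across instruments (mean 0, std 1)."""
    vals = list(values.values())
    mu, sd = statistics.fmean(vals), statistics.pstdev(vals)
    return {k: (v - mu) / sd if sd > 0 else 0.0 for k, v in values.items()}


# Hypothetical closing prices for three instruments.
closes = {"AAA": [100, 102, 104, 110], "BBB": [50, 49, 48, 47], "CCC": [20, 20, 21, 21]}
raw = {name: momentum(p, lookback=3) for name, p in closes.items()}
print(cross_sectional_zscore(raw))  # AAA scores highest, BBB lowest
```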

Research Methodology

End-to-End Modeling for LIM

End-to-end modeling seeks to generate the final trading strategy directly, bypassing intermediate steps such as factor mining. Because it learns the complex function linking raw inputs to final outputs, subsuming all intermediate stages, it has the potential to eliminate the labor-intensive factor mining process and significantly improve the efficiency of quantitative research.
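A toy sketch of the end-to-end idea, under loud assumptions: instead of designing a factor and then fitting an alpha model on it, one parametric policy maps raw lagged returns straight to a position in [-1, 1] and is trained by gradient ascent on mean trading PnL. The tanh-linear policy, the synthetic AR(1) returns, the L2 penalty, and all function names are illustrative choices, far simpler than the foundation models the paper describes.

```python
import math
import random


def make_ar1_returns(n, phi=0.3, seed=0):
    """Synthetic standardized returns with mild momentum (AR(1)); illustration only."""
    rng = random.Random(seed)
    r, out = 0.0, []
    for _ in range(n):
        r = phi * r + rng.gauss(0.0, 1.0)
        out.append(r)
    return out


def make_samples(returns, lags):
    """Pair raw inputs x_t = (r_t, r_{t-1}, ...) with the next-period return r_{t+1}."""
    return [(returns[t - lags + 1:t + 1][::-1], returns[t + 1])
            for t in range(lags - 1, len(returns) - 1)]


def position(w, b, x):
    """Policy: position in [-1, 1] computed straight from raw inputs."""
    return math.tanh(sum(wi * xi for wi, xi in zip(w, x)) + b)


def train_end_to_end(returns, lags=3, lr=0.05, l2=0.01, steps=150):
    """Gradient ascent on mean(position_t * r_{t+1}) - l2 * |w|^2; no factor step."""
    samples = make_samples(returns, lags)
    w, b = [0.0] * lags, 0.0
    for _ in range(steps):
        gw, gb = [0.0] * lags, 0.0
        for x, r_next in samples:
            p = position(w, b, x)
            g = (1.0 - p * p) * r_next  # d(p * r_next) / d(pre-activation)
            gw = [gi + g * xi for gi, xi in zip(gw, x)]
            gb += g
        n = len(samples)
        w = [wi + lr * (gi / n - 2.0 * l2 * wi) for wi, gi in zip(w, gw)]
        b += lr * gb / n
    return w, b


def mean_pnl(returns, w, b, lags=3):
    samples = make_samples(returns, lags)
    return sum(position(w, b, x) * r_next for x, r_next in samples) / len(samples)


rets = make_ar1_returns(1000)
w, b = train_end_to_end(rets)
print(w, b, mean_pnl(rets, w, b))  # in-sample only; not evidence of real profitability
```

The training signal is the trading objective itself, so there is no separate factor-mining stage; the trade-off is that the learned mapping is harder to inspect than an explicit factor formula.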

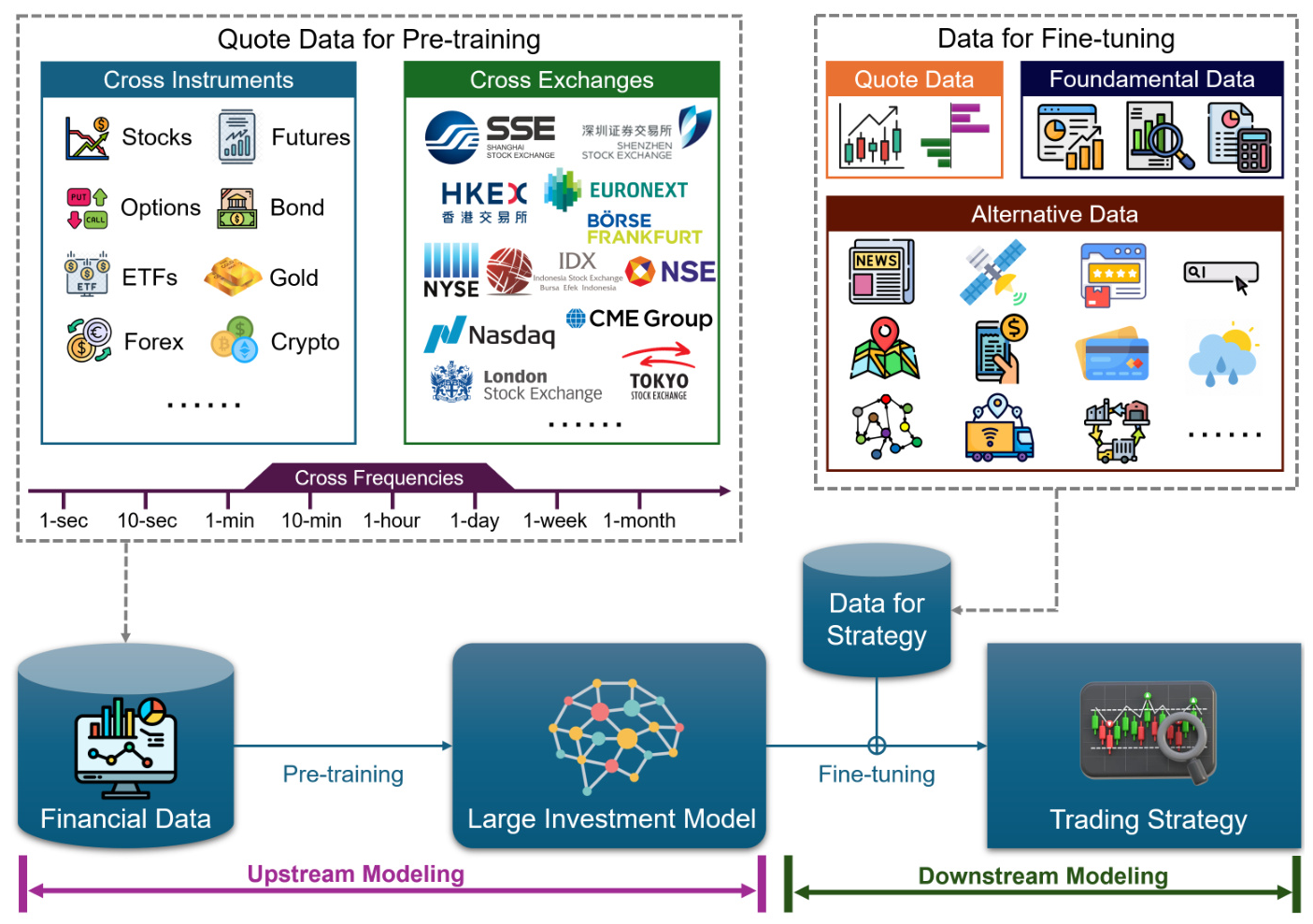

Universal Modeling for LIM

The universality of LIM spans three dimensions: cross-instrument, cross-exchange, and cross-frequency. The upstream foundation model is a self-supervised generative model pretrained on diverse data, through which it acquires capabilities for financial analysis, prediction, and data generation. Downstream, specific quantitative investment strategies are built by fine-tuning the upstream model with task-specific data.
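One way to picture cross-instrument, cross-exchange, and cross-frequency pretraining data is a single pooled dataset of windows drawn from many series. The sketch below is an assumption-laden illustration (the `Series` record, per-series z-scoring, and the next-value target are hypothetical choices, not the paper's pipeline): each series is normalized on its own scale, cut into sliding windows, and tagged with its instrument, exchange, and frequency so that one model can learn from all of them.

```python
import statistics
from dataclasses import dataclass


@dataclass
class Series:
    instrument: str   # e.g. a futures contract or stock ticker (hypothetical here)
    exchange: str
    frequency: str    # e.g. "1min", "1d"
    values: list      # raw observations, oldest first


def build_universal_dataset(series_list, window):
    """Pool sliding windows from many series into one self-supervised training set.

    Each series is z-scored with its own mean/std so that instruments quoted on very
    different scales share one input space; the target is simply the next value.
    (Full-series statistics are used for brevity; a real pipeline would use trailing
    statistics to avoid look-ahead.)"""
    dataset = []
    for s in series_list:
        mu = statistics.fmean(s.values)
        sd = statistics.pstdev(s.values) or 1.0
        z = [(v - mu) / sd for v in s.values]
        tags = (s.instrument, s.exchange, s.frequency)
        for t in range(window, len(z)):
            dataset.append((tags, z[t - window:t], z[t]))
    return dataset


pool = build_universal_dataset(
    [Series("FUT_A", "EXCH_1", "1d", [1.0, 2.0, 3.0, 4.0, 5.0]),
     Series("STOCK_B", "EXCH_2", "1min", [100.0, 102.0, 101.0, 103.0])],
    window=2,
)
print(len(pool), pool[0])
```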

Experimental Design

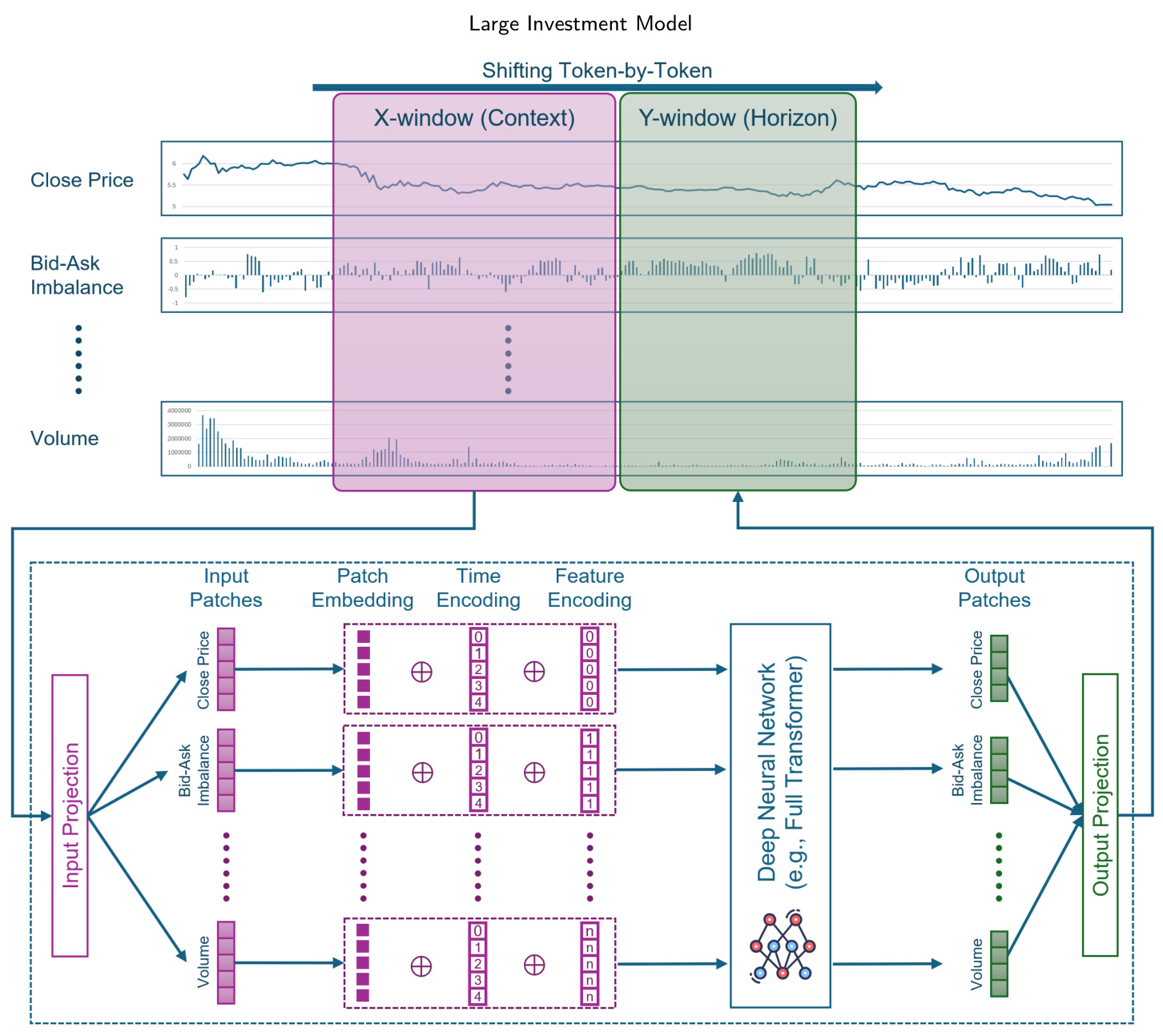

Upstream Foundation Model

Upstream modeling focuses on developing a universal foundation model for quantitative investment, with the goal of addressing a broad spectrum of financial time-series prediction problems. The foundation model is trained on financial quote time-series data that include variables such as closing price, bid-ask imbalance, returns, and trading volume.
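The quote variables listed above can be derived from raw snapshots roughly as follows. This is a minimal sketch assuming a hypothetical dict schema (`close`, `bid_size`, `ask_size`, `volume`), log returns, and the common imbalance definition (bid size minus ask size, over their sum); the next-step return target is one possible self-supervised objective, not necessarily the one LIM uses.

```python
import math


def quote_features(quotes):
    """Turn consecutive quote snapshots into [close, log_return, imbalance, volume].

    `quotes` is a list of dicts with hypothetical keys close, bid_size, ask_size,
    volume. Bid-ask imbalance = (bid_size - ask_size) / (bid_size + ask_size)."""
    feats = []
    for prev, cur in zip(quotes, quotes[1:]):
        log_ret = math.log(cur["close"] / prev["close"])
        depth = cur["bid_size"] + cur["ask_size"]
        imbalance = (cur["bid_size"] - cur["ask_size"]) / depth if depth else 0.0
        feats.append([cur["close"], log_ret, imbalance, cur["volume"]])
    return feats


def next_step_pairs(feats):
    """Self-supervised pairs: features at t -> log return at t+1 (no labels needed)."""
    return [(feats[t], feats[t + 1][1]) for t in range(len(feats) - 1)]


quotes = [
    {"close": 100.0, "bid_size": 5, "ask_size": 5, "volume": 10},
    {"close": 101.0, "bid_size": 8, "ask_size": 2, "volume": 12},
    {"close": 100.5, "bid_size": 3, "ask_size": 7, "volume": 9},
]
feats = quote_features(quotes)
print(feats)
print(next_step_pairs(feats))
```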

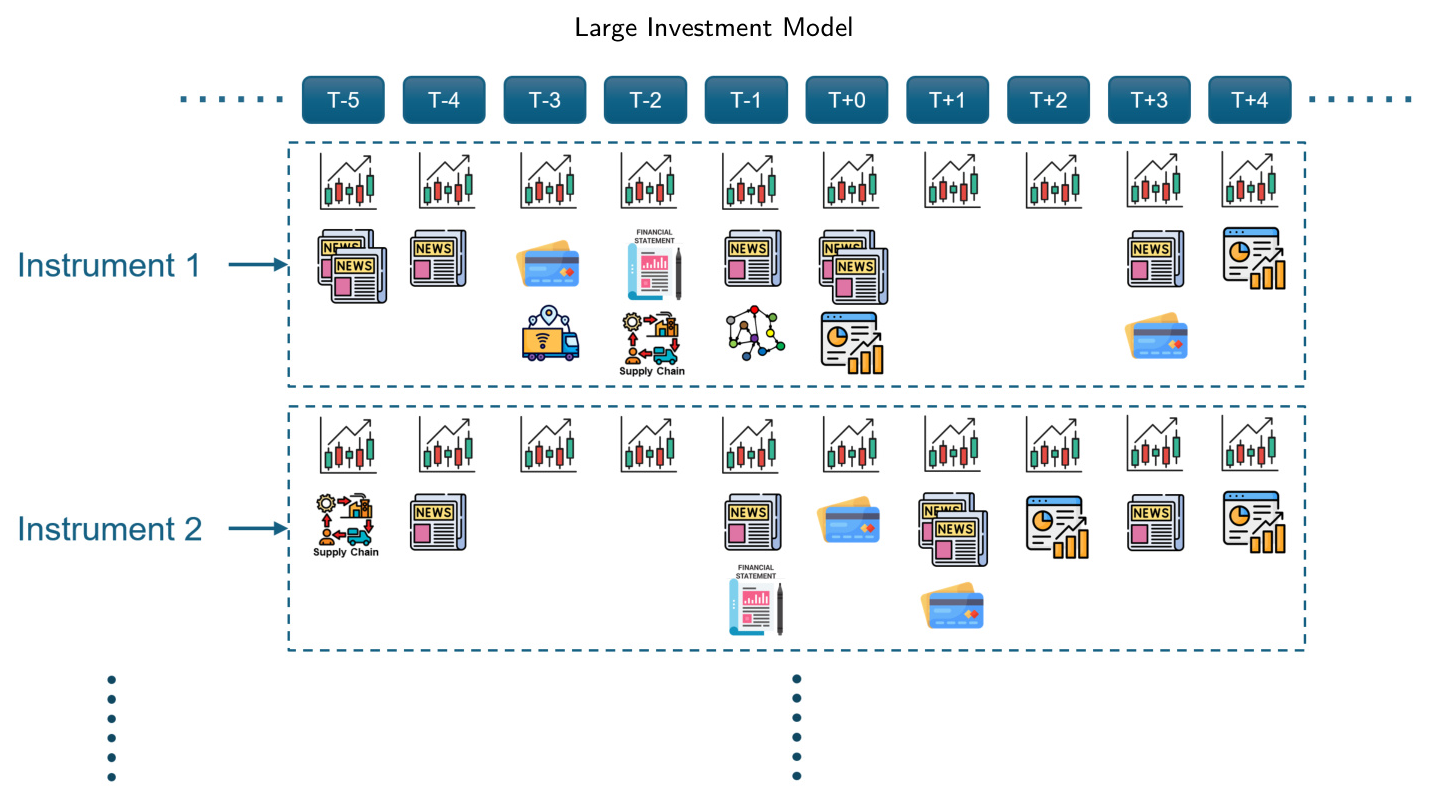

Downstream Task Model

The downstream workflow bridges the foundation model with the final strategy development task. Downstream modeling can incorporate a wide variety of task-specific data sources, including news, supply chain information, satellite imagery, earnings call transcripts, and more. Proper alignment and standardization of diverse data types are crucial for developing robust quant models.
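Alignment usually means attaching each irregular event, such as a news item, to the market timeline without peeking into the future. Below is a minimal as-of join sketch, assuming sorted timestamps in seconds and a hypothetical per-article sentiment score; pandas provides `merge_asof` for the same task on DataFrames.

```python
from bisect import bisect_right


def asof_align(bar_times, event_times, event_values, max_age=None, default=0.0):
    """Attach to each bar the latest event published at or before the bar time.

    `event_times` must be sorted ascending. Only past events are used, which
    avoids look-ahead bias; events older than `max_age` are treated as stale."""
    aligned = []
    for t in bar_times:
        i = bisect_right(event_times, t)  # number of events with time <= t
        if i == 0 or (max_age is not None and t - event_times[i - 1] > max_age):
            aligned.append(default)
        else:
            aligned.append(event_values[i - 1])
    return aligned


bars = [60, 120, 180, 240]          # bar close times in seconds (hypothetical)
news_t = [30, 130, 135]             # news publication times
sentiment = [0.5, -0.2, 0.8]        # hypothetical sentiment scores
print(asof_align(bars, news_t, sentiment))              # [0.5, 0.5, 0.8, 0.8]
print(asof_align(bars, news_t, sentiment, max_age=60))  # [0.5, 0.0, 0.8, 0.0]
```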

Model Fine-tuning

Fine-tuning the LIM foundation model involves incorporating new types of data and applying advanced fine-tuning methods and optimization techniques. Enriching the model with this more comprehensive dataset makes it better at predicting market movements and refining investment strategies.
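The specific fine-tuning methods are not detailed here, so the sketch below shows only the simplest generic variant: the pretrained upstream model is kept frozen and a new linear head is trained on task-specific data with full-batch gradient descent on squared error. `frozen_encoder` is a hypothetical stand-in for the foundation model, and the downstream data are synthetic.

```python
import random


def frozen_encoder(window):
    """Hypothetical stand-in for the pretrained upstream model: maps a raw window to
    a fixed embedding. Its parameters are never updated during fine-tuning."""
    mean = sum(window) / len(window)
    return [mean, window[-1], window[-1] - window[0]]


def fine_tune_head(windows, targets, lr=0.05, epochs=200):
    """Train only a new linear head on task-specific data (the encoder stays frozen)."""
    feats = [frozen_encoder(x) for x in windows]
    dim, n = len(feats[0]), len(feats)
    w, b = [0.0] * dim, 0.0
    for _ in range(epochs):
        gw, gb = [0.0] * dim, 0.0
        for f, y in zip(feats, targets):
            err = sum(wi * fi for wi, fi in zip(w, f)) + b - y  # squared-error gradient
            gw = [g + err * fi for g, fi in zip(gw, f)]
            gb += err
        w = [wi - lr * g / n for wi, g in zip(w, gw)]
        b -= lr * gb / n
    return w, b


def mse(windows, targets, w, b):
    preds = [sum(wi * fi for wi, fi in zip(w, frozen_encoder(x))) + b for x in windows]
    return sum((p - y) ** 2 for p, y in zip(preds, targets)) / len(targets)


# Hypothetical downstream task data: the target depends on the latest value.
rng = random.Random(1)
windows = [[rng.gauss(0.0, 1.0) for _ in range(5)] for _ in range(200)]
targets = [0.5 * x[-1] + 0.1 * rng.gauss(0.0, 1.0) for x in windows]
w, b = fine_tune_head(windows, targets)
print(mse(windows, targets, [0.0] * 3, 0.0), mse(windows, targets, w, b))
```

Freezing the upstream model keeps fine-tuning cheap; richer methods would also update some or all of the upstream parameters.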

Results and Analysis

System Architecture for LIM

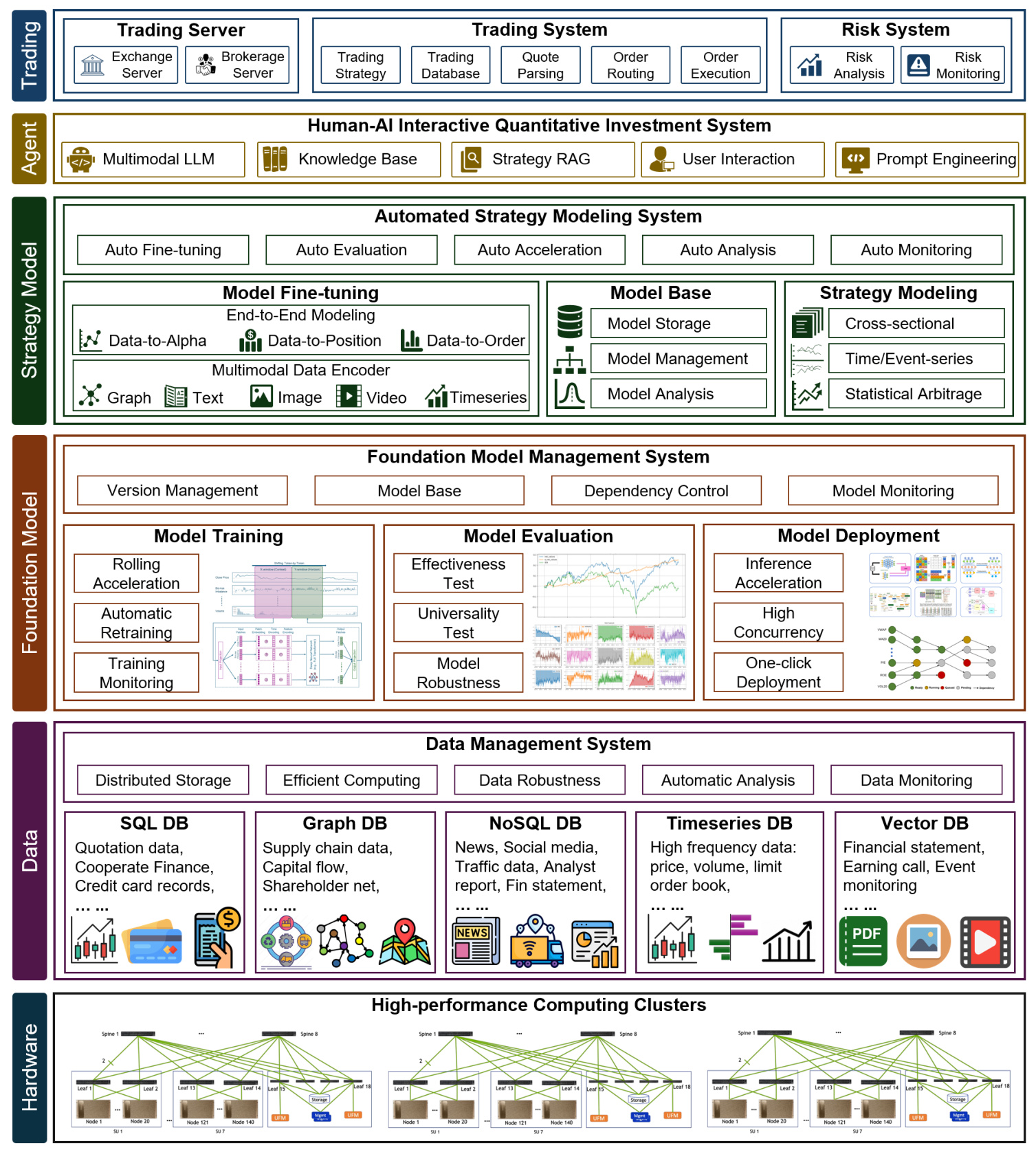

A real-world system built on the LIM methodological framework comprises computing infrastructure, data computation and storage, foundation modeling and management, automated strategy modeling, human-AI interaction agents, and a low-latency trading system.

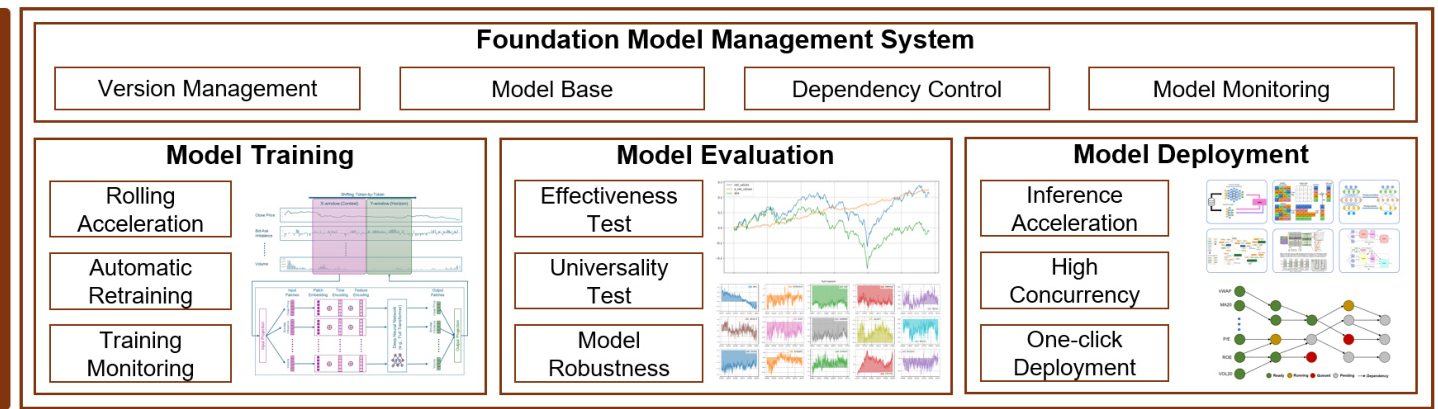

Evaluation Criteria

The LIM system is validated through three key tests:

- Effectiveness Test: Assesses the model’s performance using historical data to simulate real-world scenarios.

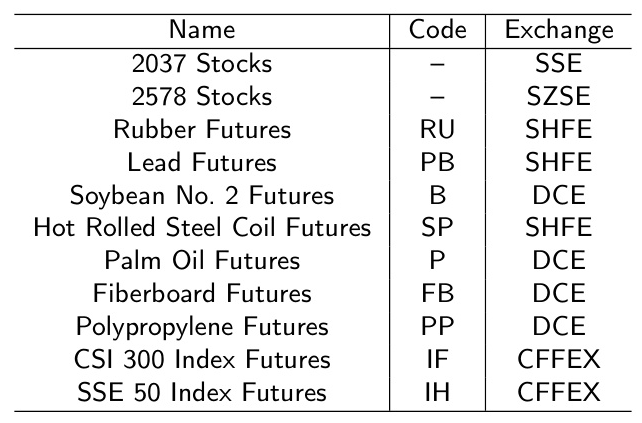

- Universality Test: Examines the model’s applicability across different financial instruments and exchanges.

- Robustness Test: Evaluates the stability of the model’s performance under different market conditions.
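The effectiveness and robustness tests can be sketched in a few lines. This assumes a sign-of-signal position rule, a proportional cost per unit of position change, and equal-length consecutive sub-periods as a crude proxy for different market conditions; all three are illustrative assumptions, not the paper's test protocol.

```python
def backtest(signal, returns, cost_per_unit_turnover=0.0):
    """Effectiveness test sketch: trade sign(signal[t]) and earn returns[t + 1].

    Position changes pay a proportional cost, so turnover is penalized."""
    pnl, prev_pos = [], 0
    for t in range(len(returns) - 1):
        pos = (signal[t] > 0) - (signal[t] < 0)   # +1 long, -1 short, 0 flat
        pnl.append(pos * returns[t + 1] - cost_per_unit_turnover * abs(pos - prev_pos))
        prev_pos = pos
    return pnl


def subperiod_means(pnl, n_periods):
    """Robustness test sketch: mean PnL in consecutive, equal-length sub-periods
    (a stand-in for distinct market regimes). Any remainder at the end is dropped."""
    size = len(pnl) // n_periods
    return [sum(pnl[i * size:(i + 1) * size]) / size for i in range(n_periods)]


signal = [1, 1, -1, -1, 1]                    # hypothetical model signals
returns = [0.0, 0.01, 0.02, -0.01, -0.03]     # hypothetical period returns
pnl = backtest(signal, returns)
print(pnl)
print(subperiod_means(pnl, 2))
```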

Experimental Results

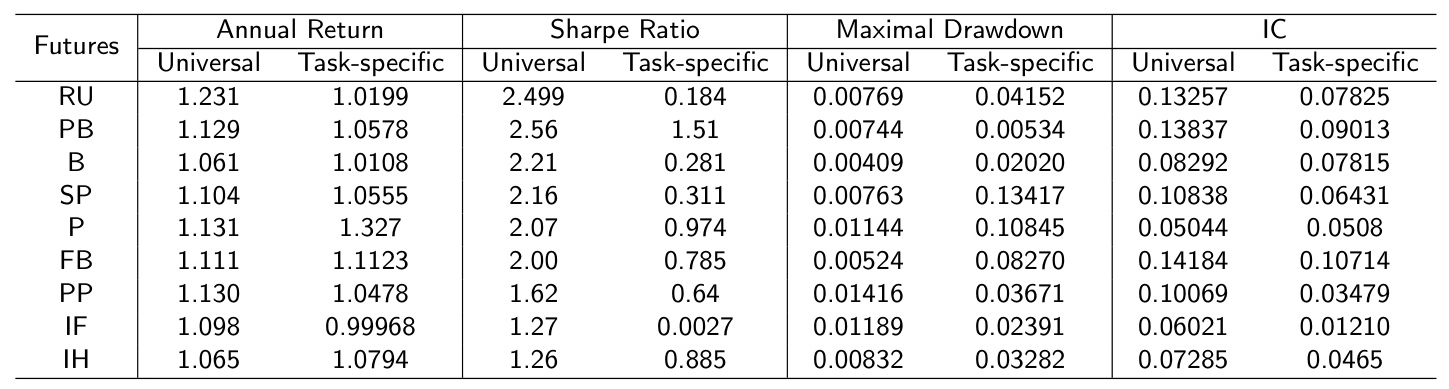

Numerical experiments on cross-instrument prediction for commodity futures trading demonstrate the advantages of LIM: the universal model outperforms task-specific models in annual return, Sharpe ratio, maximum drawdown, and information coefficient (IC).
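For reference, the four metrics named above can be computed as below. The sketch assumes additive per-period returns, 252 periods per year, a zero risk-free rate, and Pearson IC (many studies use rank IC instead); the paper's exact conventions may differ, and the sample numbers are invented.

```python
import math
import statistics


def annualized_return(pnl, periods_per_year=252):
    """Mean per-period return scaled to a year (simple, non-compounded)."""
    return statistics.fmean(pnl) * periods_per_year


def sharpe_ratio(pnl, periods_per_year=252):
    """Annualized Sharpe ratio, assuming a zero risk-free rate."""
    return statistics.fmean(pnl) / statistics.stdev(pnl) * math.sqrt(periods_per_year)


def max_drawdown(pnl):
    """Largest peak-to-trough drop of the cumulative (additive) PnL curve."""
    equity = peak = worst = 0.0
    for r in pnl:
        equity += r
        peak = max(peak, equity)
        worst = max(worst, peak - equity)
    return worst


def information_coefficient(predicted, realized):
    """Pearson correlation between predicted and realized returns."""
    mp, mr = statistics.fmean(predicted), statistics.fmean(realized)
    cov = sum((p - mp) * (r - mr) for p, r in zip(predicted, realized))
    var_p = sum((p - mp) ** 2 for p in predicted)
    var_r = sum((r - mr) ** 2 for r in realized)
    return cov / math.sqrt(var_p * var_r)


daily = [0.01, -0.02, 0.03, -0.01]   # hypothetical daily strategy returns
print(annualized_return(daily), sharpe_ratio(daily), max_drawdown(daily))
print(information_coefficient([0.1, 0.2, 0.3], [0.02, 0.04, 0.06]))
```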

Overall Conclusion

The Large Investment Model (LIM) represents a significant advancement in quantitative investment, offering knowledge transfer, data augmentation, cost efficiency, and the discovery of market interdependencies. By providing a robust foundation that leverages global data to enhance local market strategies, LIM has the potential to revolutionize how quantitative strategies are developed and implemented.

However, transitioning LIM from a theoretical concept to a practical tool for real-world trading presents several challenges, including high computational costs from frequent retraining, the complexity of selecting appropriate data sources, and the integration of alternative data for diverse strategies. Addressing these challenges is essential for the continued development and success of LIM across a broader range of investment contexts.

In conclusion, LIM opens significant opportunities for innovation in quantitative finance: it could accelerate the development of more sophisticated and effective investment strategies and, potentially, lead to improved investment outcomes.