Authors:

Neha R. Gupta, Jessica Hullman, Hari Subramonyam

Paper:

https://arxiv.org/abs/2408.10239

Introduction

Machine learning (ML) model evaluation traditionally focuses on estimating prediction errors using quantifiable metrics. However, as ML systems grow in complexity, evaluations must become multifaceted, incorporating methods like A/B testing, adversarial testing, and comprehensive audits. Ethical concerns during the ML development lifecycle, particularly during evaluation, are often overlooked. This paper presents a conceptual framework to balance information gain against potential ethical harms in ML evaluations, drawing parallels with practices in clinical trials and automotive crash testing.

Related Works

Ethical AI

The literature on ethical AI identifies several key values:

1. Non-maleficence: Ensuring no harm or injury is inflicted.

2. Privacy: Protecting personal and sensitive information.

3. Fairness: Achieving equitable treatment and outcomes.

4. Cultural Sensitivity: Respecting cultural differences and avoiding stereotypes.

5. Sustainability: Developing models responsibly, economically, and equitably.

6. Societal Impact: Contributing positively to societal well-being.

ML System Evaluation Practices

Evaluation methods span the deployment lifecycle, from pre-deployment (e.g., A/B testing) to post-deployment (e.g., bug bounty challenges). An ethical evaluation should forecast downstream harms without itself sacrificing ethical values. To reason about this trade-off, the paper adapts the utility framework from statistical decision theory to balance information gain, ethical harms, and resource costs.

Ethical Evaluation Model Motivation

The proposed model simplifies complex real-world processes in order to predict the consequences of actions. It weighs information gain against ethical harms, prompting teams to reflect on which evaluation is best once its potential harms are taken into account.

Model Properties

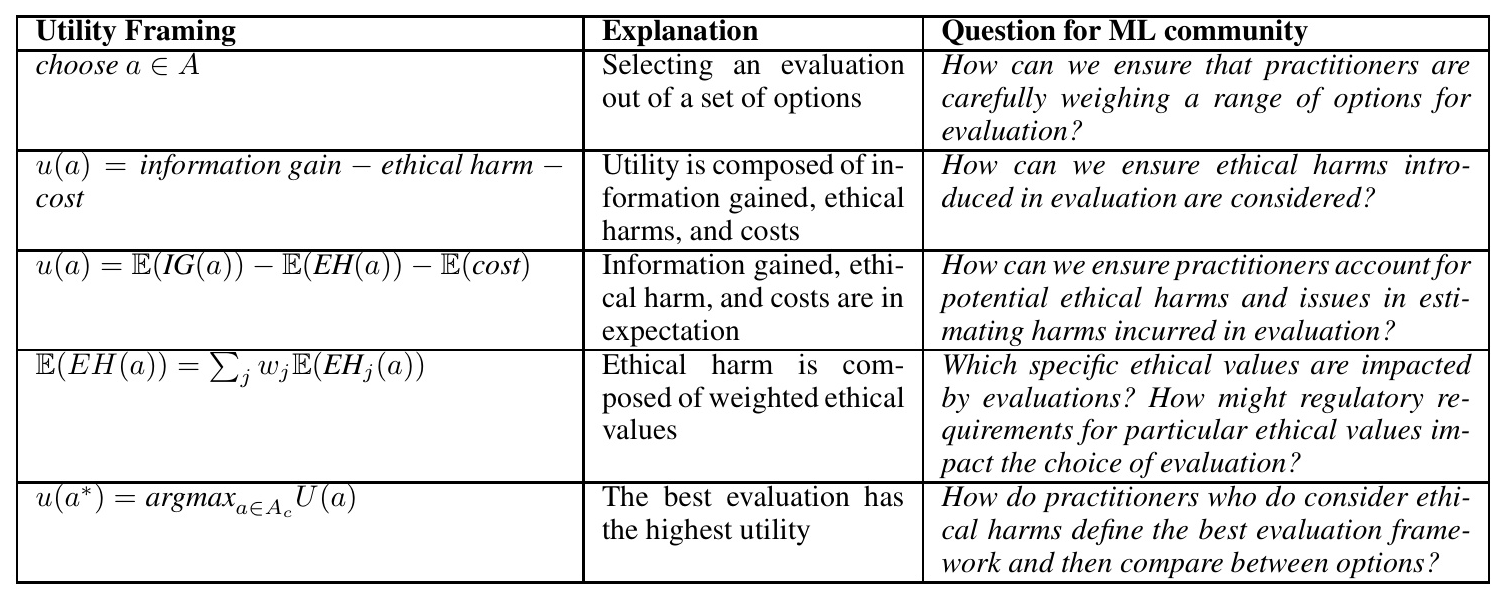

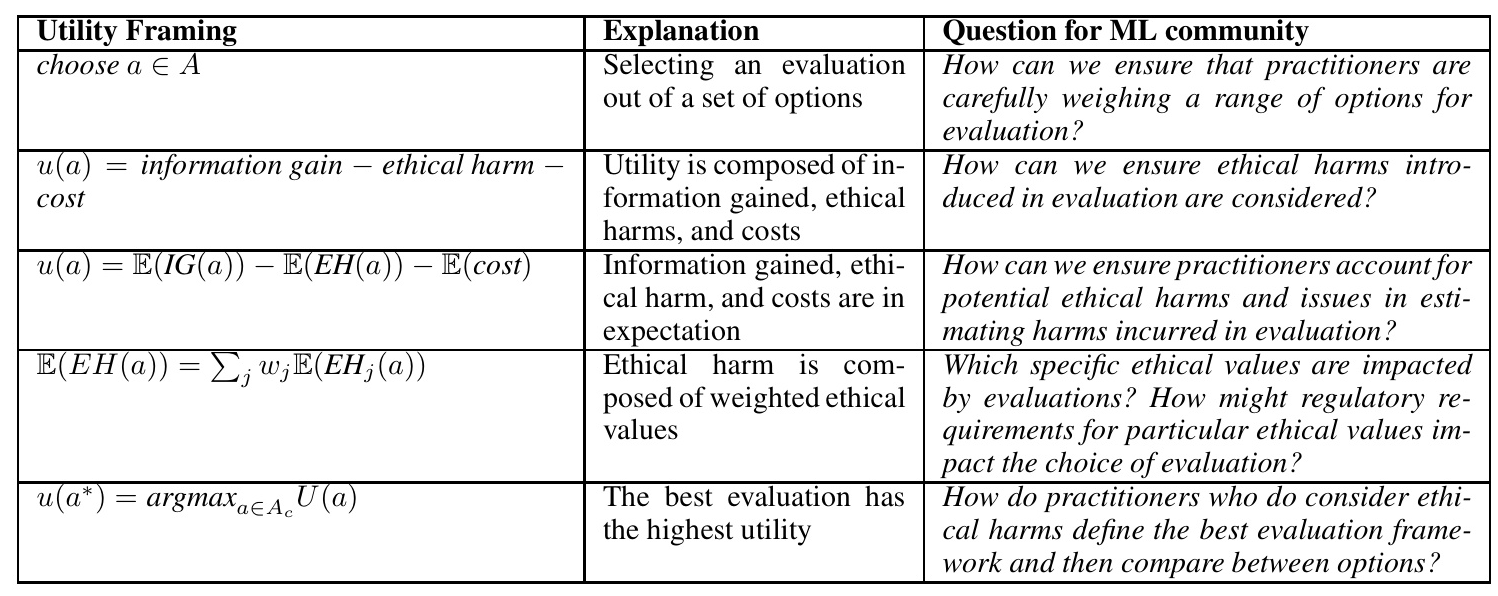

Evaluation Decision Space

ML teams select from a set of possible evaluations, represented as \( A = \{a_1, a_2, \ldots\} \). The utility of an evaluation approach depends on information gain, ethical harms, and resource costs.

Utility Function

The utility function is defined as:

\[ u(a) = \mathbb{E}(IG(a)) - \mathbb{E}(EH(a)) - \mathbb{E}(\mathrm{cost}) \]

where \( IG(a) \) is information gain, \( EH(a) \) is ethical harm, and \( \mathrm{cost} \) is the material cost. Ethical harm is decomposed into weighted ethical values:

\[ \mathbb{E}(EH(a)) = \sum_j w_j \, \mathbb{E}(EH_j(a)) \]
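
To make the decomposition concrete, here is a minimal Python sketch of the utility calculation for a single evaluation. The function names, the two ethical values, and all numbers are hypothetical and chosen only for illustration; the paper does not prescribe units or weights, and in practice these quantities would be estimates that stakeholders may disagree on.

```python
from typing import Mapping


def expected_ethical_harm(harms: Mapping[str, float],
                          weights: Mapping[str, float]) -> float:
    """Weighted sum over ethical values j of w_j * E[EH_j(a)]."""
    return sum(weights[value] * harm for value, harm in harms.items())


def utility(info_gain: float,
            harms: Mapping[str, float],
            weights: Mapping[str, float],
            cost: float) -> float:
    """u(a) = E[IG(a)] - E[EH(a)] - E[cost]."""
    return info_gain - expected_ethical_harm(harms, weights) - cost


# Hypothetical inputs (assumptions for illustration, not taken from the paper).
weights = {"privacy": 2.0, "fairness": 1.0}   # w_j: relative importance
harms = {"privacy": 0.5, "fairness": 1.0}     # E[EH_j(a)] for one evaluation a

u = utility(info_gain=5.0, harms=harms, weights=weights, cost=1.5)
print(u)
```

With these inputs the weighted harm is 2.0 * 0.5 + 1.0 * 1.0 = 2.0, so the utility is 5.0 - 2.0 - 1.5 = 1.5. Raising the weight on privacy lowers the utility of the same evaluation, which is how the weights encode a team's priorities.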

Optimal Evaluation

The optimal evaluation maximizes utility:

\[ a^* = \arg\max_{a \in A} u(a) \]
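
The selection step can be sketched as a plain maximization over a finite set of candidates. The `Evaluation` class, the candidate names, and the numeric estimates below are hypothetical; the sketch assumes each expected term has already been estimated and that the ethical harm is already the weighted sum over ethical values.

```python
from dataclasses import dataclass
from typing import Sequence


@dataclass(frozen=True)
class Evaluation:
    name: str
    info_gain: float     # E[IG(a)]
    ethical_harm: float  # E[EH(a)], already weighted across ethical values
    cost: float          # E[cost]

    def utility(self) -> float:
        return self.info_gain - self.ethical_harm - self.cost


def best_evaluation(candidates: Sequence[Evaluation]) -> Evaluation:
    """Return the candidate with the highest utility (the arg max over A)."""
    if not candidates:
        raise ValueError("the decision space A must contain at least one evaluation")
    return max(candidates, key=lambda a: a.utility())


# Hypothetical decision space; every number is an illustrative assumption.
candidates = [
    Evaluation("offline_benchmark", info_gain=3.0, ethical_harm=0.5, cost=1.0),
    Evaluation("live_ab_test", info_gain=6.0, ethical_harm=4.0, cost=1.5),
    Evaluation("red_team_audit", info_gain=5.0, ethical_harm=1.0, cost=2.0),
]

best = best_evaluation(candidates)
print(best.name, best.utility())
```

In this toy setting the live A/B test offers the most information but also the most harm, so it ends up with the lowest utility (0.5), while the audit wins with 2.0. The ranking is only as trustworthy as the estimates that feed it, which is the subject of the challenges discussed next.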

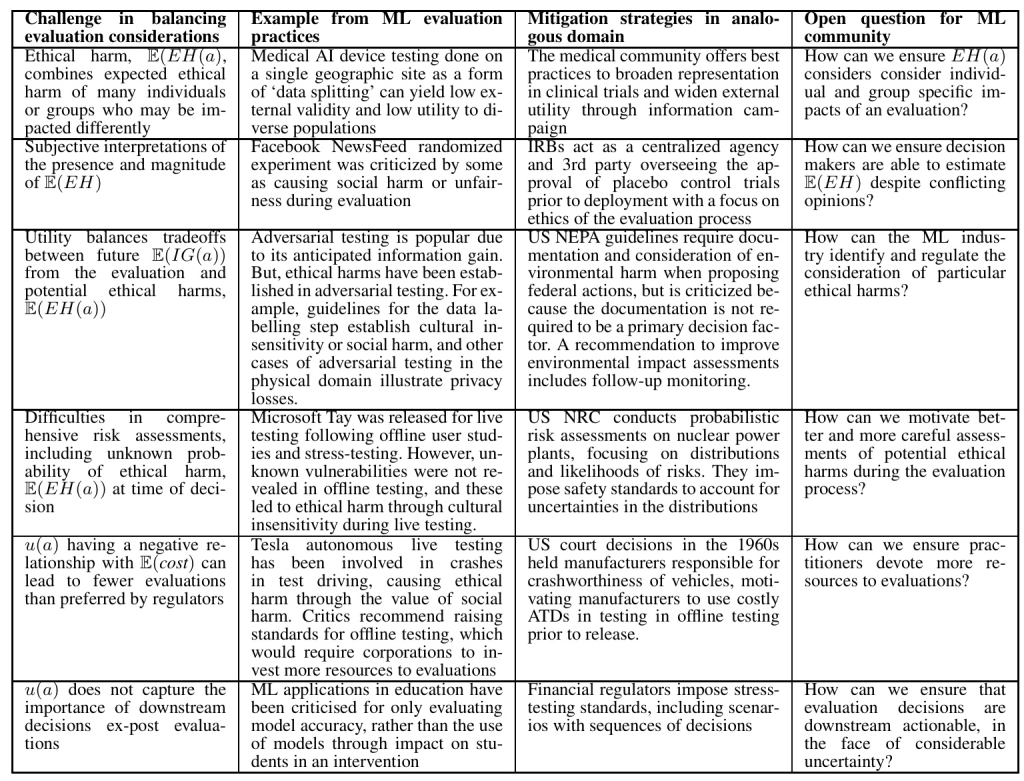

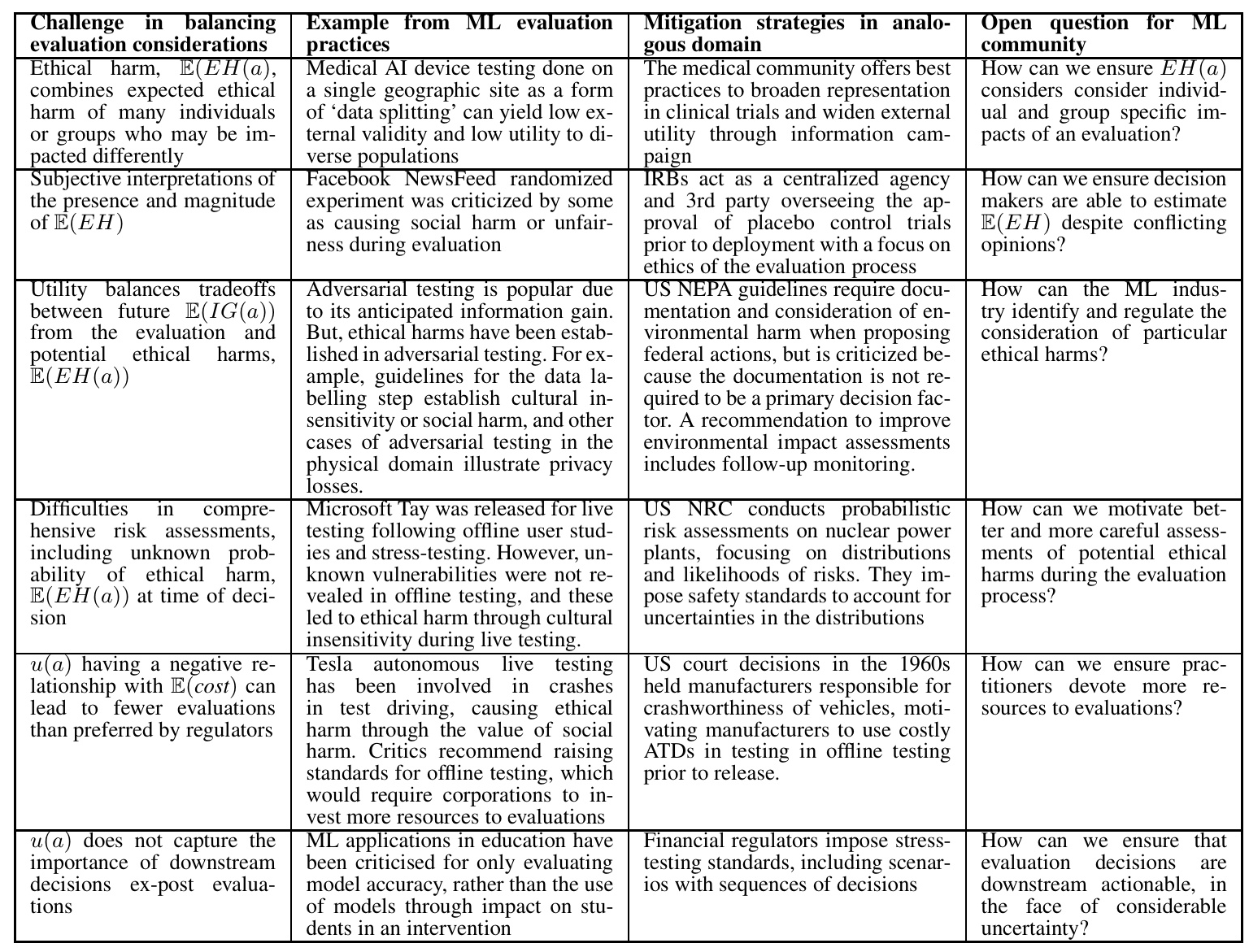

Challenges in Ethical Evaluation

Issue 1: Aggregating Over Populations

Aggregating ethical harms can mask individual or group-specific concerns. For example, medical AI device testing often lacks geographic diversity, leading to biases in performance on underrepresented groups.

Mitigation Example: Clinical trials use population-weighted sampling and post-stratification to ensure diverse representation.
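
As a rough illustration of why post-stratification matters, the sketch below compares a naive pooled estimate with one reweighted to known population shares. The group names, error rates, sample counts, and population shares are all hypothetical assumptions, and the helper functions are illustrative rather than taken from the paper or any library.

```python
from typing import Mapping


def naive_mean(group_means: Mapping[str, float],
               sample_counts: Mapping[str, int]) -> float:
    """Pooled estimate: each group counts in proportion to its sample size."""
    total = sum(sample_counts.values())
    return sum(sample_counts[g] * group_means[g] for g in sample_counts) / total


def poststratified_mean(group_means: Mapping[str, float],
                        population_shares: Mapping[str, float]) -> float:
    """Reweight each group's estimate by its share of the target population."""
    if abs(sum(population_shares.values()) - 1.0) > 1e-9:
        raise ValueError("population shares must sum to 1")
    return sum(population_shares[g] * group_means[g] for g in population_shares)


# Hypothetical test set that over-samples region_a (assumed numbers).
group_error = {"region_a": 0.10, "region_b": 0.30}     # per-group error rate
sample_counts = {"region_a": 90, "region_b": 10}       # who was actually tested
population_shares = {"region_a": 0.5, "region_b": 0.5}  # who will use the system

naive = naive_mean(group_error, sample_counts)
adjusted = poststratified_mean(group_error, population_shares)
print(f"naive: {naive:.2f}, post-stratified: {adjusted:.2f}")
```

Here the pooled error rate is about 0.12, while the post-stratified estimate is about 0.20, because the under-sampled group performs worse. Reporting the per-group values alongside any aggregate keeps the group-specific harm visible.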

Issue 2: Disagreement on Ethical Harms

Estimating and agreeing on ethical harms is challenging. For instance, Facebook’s News Feed experiment drew backlash over perceived social harm, despite its value for understanding emotional contagion.

Mitigation Example: Placebo-controlled trials in clinical research are regulated by institutional review boards (IRBs) to ensure ethical standards.

Issue 3: Balancing Future Gains Against Immediate Harms

Adversarial testing can introduce ethical harms, such as exposure to harmful content during labeling. The anticipated safety gains must be balanced against these immediate harms.

Mitigation Example: Guidelines under the National Environmental Policy Act (NEPA) require documentation of environmental impacts, emphasizing the need for comprehensive risk assessments.

Issue 4: Comprehensive Risk Assessment

Forecasting future ethical harms is difficult. Microsoft’s Tay chatbot faced unanticipated ethical issues during live testing, highlighting the need for better risk assessment.

Mitigation Example: The U.S. Nuclear Regulatory Commission (NRC) uses probabilistic risk assessments to ensure nuclear plant safety, imposing strict safety margins to account for uncertainties.

Issue 5: Insufficient Resources

Cost constraints can lead to inadequate evaluations. Tesla’s autonomous vehicle testing on public roads resulted in fatal crashes, suggesting the need for more rigorous offline testing.

Mitigation Example: Automotive crash tests evaluate vehicle safety, incentivized by legal liabilities and consumer preferences.

Issue 6: Impact of Evaluations on Downstream Actions

The value of information gained from evaluations depends on subsequent actions. ML models in education often prioritize predictive accuracy over effective interventions.
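
One way to see this dependence is the standard decision-theoretic notion of the expected value of perfect information, sketched below. This is a textbook construct used here for illustration, not a formula from the paper; the actions, states, payoffs, and probabilities are hypothetical.

```python
from typing import Mapping

Payoffs = Mapping[str, Mapping[str, float]]  # payoffs[action][state]


def best_uninformed_payoff(payoffs: Payoffs, probs: Mapping[str, float]) -> float:
    """Best expected payoff when the action is chosen before the state is known."""
    return max(sum(probs[s] * payoffs[a][s] for s in probs) for a in payoffs)


def value_of_perfect_information(payoffs: Payoffs,
                                 probs: Mapping[str, float]) -> float:
    """Expected gain from learning the state before choosing an action."""
    informed = sum(probs[s] * max(payoffs[a][s] for a in payoffs) for s in probs)
    return informed - best_uninformed_payoff(payoffs, probs)


# Hypothetical setting: the model is either good or bad, with equal probability.
probs = {"model_good": 0.5, "model_bad": 0.5}

# The team can either deploy or hold back, depending on what it learns.
flexible = {
    "deploy": {"model_good": 10.0, "model_bad": -20.0},
    "hold": {"model_good": 0.0, "model_bad": 0.0},
}

# The team will deploy no matter what the evaluation says.
committed = {"deploy": {"model_good": 10.0, "model_bad": -20.0}}

print(value_of_perfect_information(flexible, probs))
print(value_of_perfect_information(committed, probs))
```

When the team can act on the result, the information is worth 5.0 in this toy example; when deployment is already decided, the same evaluation is worth 0.0, even though it is equally accurate. Any ethical harm incurred by the evaluation in the second case buys nothing.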

Mitigation Example: Financial regulators enforce stress-testing frameworks to assess vulnerabilities and ensure stability.

Discussion

The utility framework highlights the trade-offs between information gain and ethical harms under uncertainty. External review systems and internal reflection can improve decision-making. Legal and social incentives are needed to encourage ethical evaluation practices.

Recommendations

- External Review Systems: Implement external oversight boards and accreditation for auditors to ensure ethical evaluations.

- Internal Decision-Making: Encourage teams to focus on downstream harms, allocate resources for ethical practices, and select actionable evaluations.

Conclusion

Ethical evaluation of ML systems requires balancing information gain against potential harms. The proposed framework and examples from analogous domains provide a foundation for improving evaluation practices. Future research should explore case studies and stakeholder interviews to refine ethical evaluation strategies.
