Authors:

Zhiyong Zhang, Aniket Gupta, Huaizu Jiang, Hanumant Singh

Paper:

https://arxiv.org/abs/2408.10161

Introduction

Optical flow estimation is a critical task in computer vision, enabling applications such as motion detection, object tracking, and video analysis. Traditional methods like Lucas-Kanade and SIFT have been surpassed by learning-based approaches, which offer higher accuracy but at the cost of increased computational demands. NeuFlow v2 aims to address this trade-off by providing a highly efficient optical flow estimation method that maintains high accuracy while significantly reducing computational costs. This paper introduces NeuFlow v2, which builds upon its predecessor, NeuFlow v1, by incorporating a lightweight backbone and a fast refinement module, achieving real-time performance on edge devices like the Jetson Orin Nano.

Related Work

Early Learning-Based Methods

FlowNet was the first end-to-end deep learning method for optical flow estimation, and it introduced the synthetic FlyingChairs dataset for training. FlowNet 2.0 improved accuracy by stacking several FlowNet-style networks, at the cost of a much larger model, while SPyNet and PWC-Net focused on reducing model size and improving speed without sacrificing accuracy.

Iterative Refinement Approaches

RAFT introduced an all-pairs correlation volume combined with recurrent iterative refinement, improving generalization and the handling of large displacements. Following RAFT, GMA added attention-based global motion aggregation, and GMFlow reformulated optical flow as global matching with Transformer-based feature enhancement.

Lightweight Models

LiteFlowNet and its successors focused on reducing model size and computational costs. RapidFlow and DCVNet introduced efficient convolutional blocks and cost volume construction techniques to achieve faster inference.

NeuFlow v1

NeuFlow v1 was reported as the fastest optical flow method at the time of its release, running over ten times faster than mainstream methods while maintaining comparable accuracy. However, it generalized poorly to real-world data.

Research Methodology

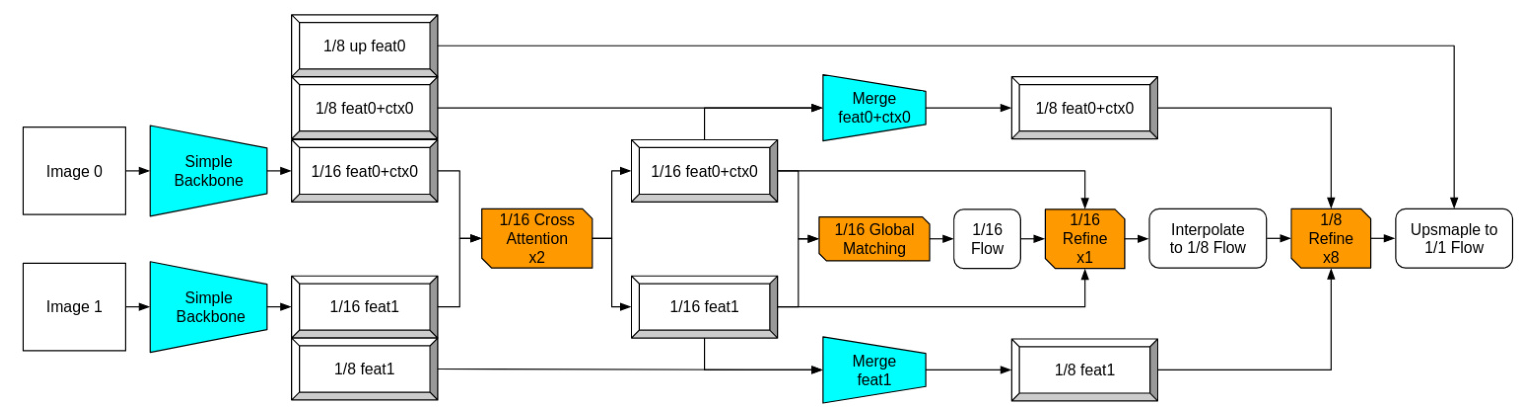

Simple Backbone

NeuFlow v2 uses a simplified CNN backbone to extract low-level features from multi-scale images. The backbone drops redundant components and keeps only the features essential for optical flow. It processes images at 1/2, 1/4, and 1/8 scales, using convolutional blocks to extract and resize features into the 1/8- and 1/16-scale features used by the later stages.
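To make the multi-scale input concrete, here is a minimal NumPy sketch of building the 1/2, 1/4, and 1/8 image pyramid that such a backbone would consume. Using average pooling for downsampling is an assumption (the summary does not say how images are resized), and the convolutional blocks that follow are omitted; `avg_pool` and `build_pyramid` are hypothetical helper names.

```python
import numpy as np

def avg_pool(img: np.ndarray, factor: int) -> np.ndarray:
    """Downsample an (H, W, C) image by averaging non-overlapping factor x factor blocks."""
    h, w, c = img.shape
    if h % factor or w % factor:
        raise ValueError("image dimensions must be divisible by the pooling factor")
    # Split each spatial axis into (blocks, factor) and average over the factor axes.
    return img.reshape(h // factor, factor, w // factor, factor, c).mean(axis=(1, 3))

def build_pyramid(img: np.ndarray, factors=(2, 4, 8)) -> dict:
    """Return downsampled copies of img keyed by the denominator of the scale."""
    return {f: avg_pool(img, f) for f in factors}

# Usage with a 512x384 input, the resolution quoted in the results section.
image = np.random.rand(384, 512, 3).astype(np.float32)
pyramid = build_pyramid(image)
for f, level in pyramid.items():
    print(f"1/{f} scale:", level.shape)
```

Block averaging is chosen here only because it is simple and exact for dimensions divisible by 8; bilinear resizing would be an equally plausible choice.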

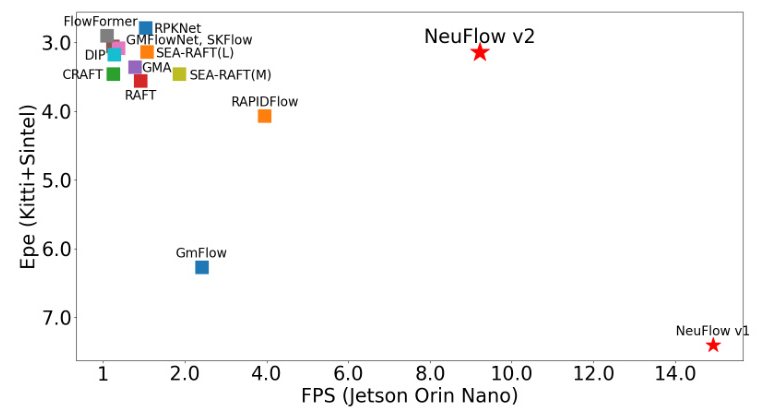

Cross-Attention and Global Matching

Cross-attention layers exchange information between images globally, enhancing feature distinctiveness. Global matching is performed on 1/16 scale features to handle large pixel displacements. This combination allows the model to estimate initial optical flow efficiently.
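The summary does not give the matching formula, so the following is a minimal NumPy sketch in the style of GMFlow's softmax global matching, which is an assumption about how NeuFlow v2 computes its initial flow. Every pixel in the first feature map is compared with every pixel in the second; a softmax turns each row of scores into a matching distribution, and the flow is the expected target coordinate minus the source coordinate. The 1/sqrt(C) scaling is assumed, and the cross-attention layers are left out.

```python
import numpy as np

def global_matching_flow(feat1: np.ndarray, feat2: np.ndarray) -> np.ndarray:
    """Estimate (H, W, 2) flow from two (H, W, C) feature maps via all-pairs softmax matching."""
    h, w, c = feat1.shape
    f1 = feat1.reshape(h * w, c)
    f2 = feat2.reshape(h * w, c)
    corr = f1 @ f2.T / np.sqrt(c)              # (HW, HW) all-pairs correlation
    corr -= corr.max(axis=1, keepdims=True)    # subtract row max for a stable softmax
    prob = np.exp(corr)
    prob /= prob.sum(axis=1, keepdims=True)    # matching distribution per source pixel
    ys, xs = np.meshgrid(np.arange(h), np.arange(w), indexing="ij")
    coords = np.stack([xs, ys], axis=-1).reshape(h * w, 2).astype(np.float64)
    matched = prob @ coords                    # expected (x, y) position in the second image
    return (matched - coords).reshape(h, w, 2) # flow = target - source

# Usage: give every pixel a distinct feature, then shift the second map one pixel right.
f1 = 20.0 * np.eye(16).reshape(4, 4, 16)
f2 = np.roll(f1, shift=1, axis=1)
flow = global_matching_flow(f1, f2)
print(flow[..., 0])
```

In this toy case every pixel moves +1 in x except the last column, which wraps around to x = 0 and so gets a flow of -3. The (HW x HW) correlation matrix also shows why matching is done at 1/16 scale: its size grows with the square of the pixel count.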

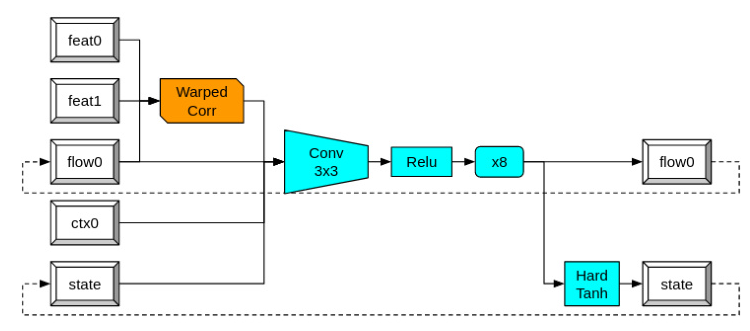

Simple RNN Refinement

A lightweight RNN module iteratively refines the estimated optical flow. The module uses 3×3 convolutional layers followed by ReLU activation to process warped correlations, context features, and hidden states. This approach avoids the computational overhead of GRU or LSTM modules while maintaining high accuracy.
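A minimal NumPy sketch of one such refinement iteration, under stated assumptions: correlation, context, and hidden-state features are concatenated channel-wise, a 3x3 convolution plus ReLU produces the new hidden state (no GRU or LSTM gates), and a second 3x3 convolution predicts a 2-channel flow update. The channel sizes, iteration count, random weights, and `lookup_correlation` placeholder are all hypothetical; a real model would re-sample correlations from features warped by the current flow at every step.

```python
import numpy as np

def conv3x3(x: np.ndarray, weight: np.ndarray, bias: np.ndarray) -> np.ndarray:
    """Zero-padded 'same' 3x3 convolution: (H, W, Cin) x (3, 3, Cin, Cout) -> (H, W, Cout)."""
    h, w, _ = x.shape
    padded = np.pad(x, ((1, 1), (1, 1), (0, 0)))
    out = np.zeros((h, w, weight.shape[-1]))
    for dy in range(3):
        for dx in range(3):
            # Shifted window multiplied by the (Cin, Cout) kernel slice for this offset.
            out += padded[dy:dy + h, dx:dx + w] @ weight[dy, dx]
    return out + bias

def refine_step(corr, context, hidden, flow, params):
    """One refinement iteration: conv + ReLU state update, then a residual flow update."""
    x = np.concatenate([corr, context, hidden], axis=-1)
    hidden = np.maximum(conv3x3(x, *params["state"]), 0.0)  # ReLU instead of GRU gates
    delta = conv3x3(hidden, *params["flow_head"])           # 2-channel flow delta
    return hidden, flow + delta

def init_params(c_in, c_hidden, rng):
    """Hypothetical random weights, useful only for checking shapes."""
    return {
        "state": (0.1 * rng.standard_normal((3, 3, c_in, c_hidden)), np.zeros(c_hidden)),
        "flow_head": (0.1 * rng.standard_normal((3, 3, c_hidden, 2)), np.zeros(2)),
    }

rng = np.random.default_rng(0)
H, W, C_CORR, C_CTX, C_HID = 12, 16, 9, 8, 8
context = rng.standard_normal((H, W, C_CTX))
hidden = np.zeros((H, W, C_HID))
flow = np.zeros((H, W, 2))
params = init_params(C_CORR + C_CTX + C_HID, C_HID, rng)

def lookup_correlation(flow):
    """Placeholder: ignores flow and returns random 'warped correlation' features."""
    return rng.standard_normal((H, W, C_CORR))

for _ in range(4):
    hidden, flow = refine_step(lookup_correlation(flow), context, hidden, flow, params)
print(hidden.shape, flow.shape)
```

Each iteration is a single convolution and an element-wise max, which illustrates why this update is cheaper than a gated GRU cell, which needs several convolutions and sigmoid/tanh gates per step.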

Multi-Scale Feature/Context Merge

To incorporate both global and local information, the model merges 1/16-scale global features with 1/8-scale local features, so that the refinement stage can draw on long-range context from the coarse scale and finer spatial detail from the 1/8 scale.
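A minimal NumPy sketch of such a merge, assuming nearest-neighbour 2x upsampling followed by channel-wise concatenation; the paper may instead use bilinear upsampling or a learned fusion layer. It also shows one detail any 1/16-to-1/8 transfer must handle: when a flow field is upsampled by 2x, its displacement values must be doubled as well. The function names are hypothetical.

```python
import numpy as np

def upsample2x_nearest(x: np.ndarray) -> np.ndarray:
    """Nearest-neighbour 2x upsampling of an (H, W, C) map."""
    return x.repeat(2, axis=0).repeat(2, axis=1)

def merge_scales(global_16: np.ndarray, local_8: np.ndarray) -> np.ndarray:
    """Upsample 1/16-scale features to 1/8 scale and concatenate them channel-wise."""
    up = upsample2x_nearest(global_16)
    if up.shape[:2] != local_8.shape[:2]:
        raise ValueError("the 1/16 map must be exactly half the size of the 1/8 map")
    return np.concatenate([up, local_8], axis=-1)

def upsample_flow(flow_16: np.ndarray) -> np.ndarray:
    """Move a 1/16-scale flow to 1/8 scale: double both resolution and displacement."""
    return 2.0 * upsample2x_nearest(flow_16)

# Usage for a 512x384 input: 1/16 scale is 32x24, 1/8 scale is 64x48.
global_feat = np.random.rand(24, 32, 64)
local_feat = np.random.rand(48, 64, 32)
print(merge_scales(global_feat, local_feat).shape)
```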

Experimental Design

Training and Evaluation Datasets

The model is trained on the FlyingThings dataset for a fair comparison with other methods. Additional training is performed using a mixed dataset comprising Sintel, KITTI, and HD1K for real-world applications. Evaluation is conducted on the Sintel and KITTI datasets to assess generalization capabilities.

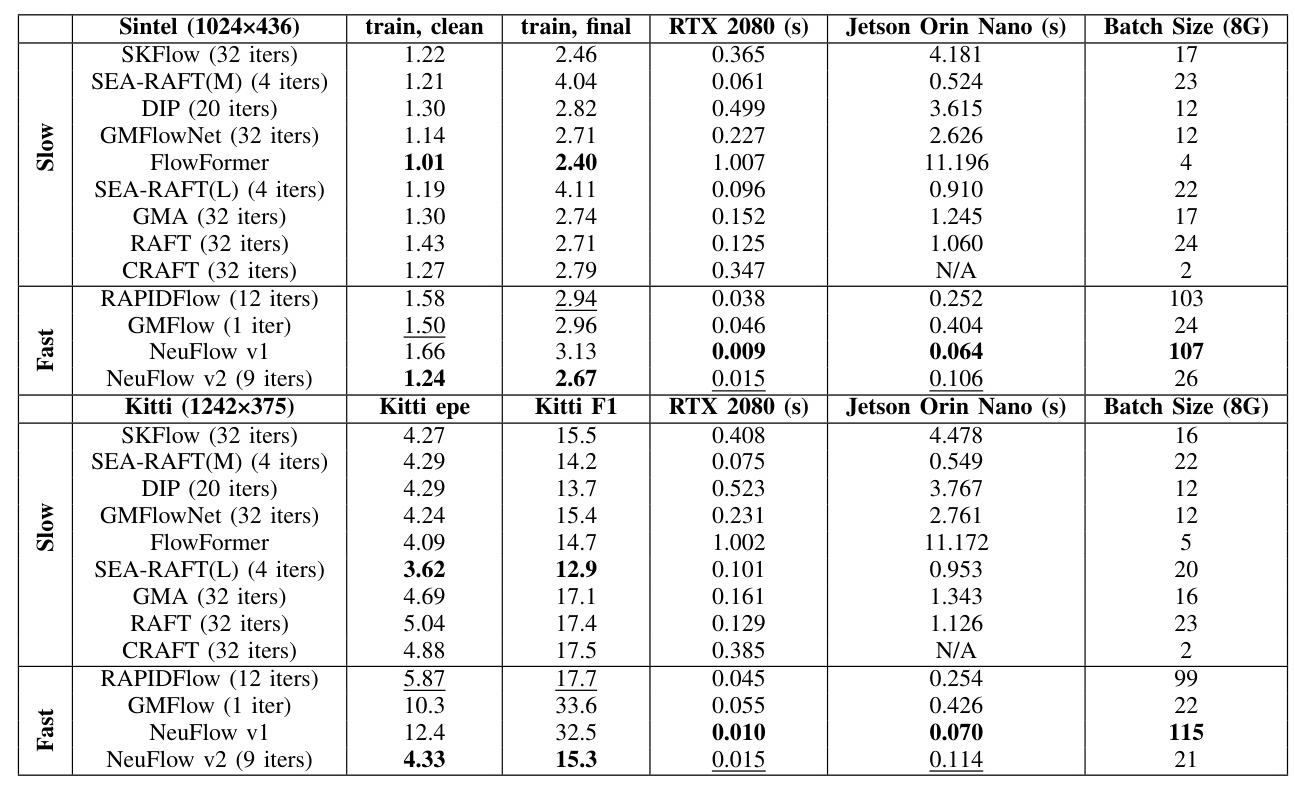

Comparison with State-of-the-Art Methods

NeuFlow v2 is compared with several state-of-the-art optical flow methods, measuring accuracy and computation time on both RTX 2080 and Jetson Orin Nano devices. The model achieves comparable accuracy while being significantly faster, demonstrating its efficiency.
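The summary does not name the accuracy metrics. On Sintel and KITTI, accuracy is conventionally reported as average end-point error (EPE), and KITTI additionally reports Fl-all: the percentage of pixels whose error exceeds both 3 px and 5% of the ground-truth flow magnitude. A minimal NumPy sketch of both, assuming dense (H, W, 2) flow arrays and an optional boolean validity mask, since KITTI ground truth is sparse:

```python
import numpy as np

def epe(pred: np.ndarray, gt: np.ndarray, valid=None) -> float:
    """Average end-point error: mean Euclidean distance between predicted and true flow."""
    err = np.linalg.norm(pred - gt, axis=-1)
    if valid is not None:
        err = err[valid]
    return float(err.mean())

def fl_all(pred: np.ndarray, gt: np.ndarray, valid=None) -> float:
    """KITTI-style outlier percentage: error > 3 px AND > 5% of the ground-truth magnitude."""
    err = np.linalg.norm(pred - gt, axis=-1)
    mag = np.linalg.norm(gt, axis=-1)
    outlier = (err > 3.0) & (err / np.maximum(mag, 1e-8) > 0.05)
    if valid is not None:
        outlier = outlier[valid]
    return 100.0 * float(outlier.mean())

# Usage: a prediction of zero flow against a uniform (3, 4) ground truth.
gt = np.tile(np.array([3.0, 4.0]), (2, 2, 1))
pred = np.zeros_like(gt)
print(epe(pred, gt), fl_all(pred, gt))
```

The 5% relative threshold means a 4 px error on a 100 px displacement still counts as correct under Fl-all, which is why the two metrics can rank methods differently on KITTI's large motions.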

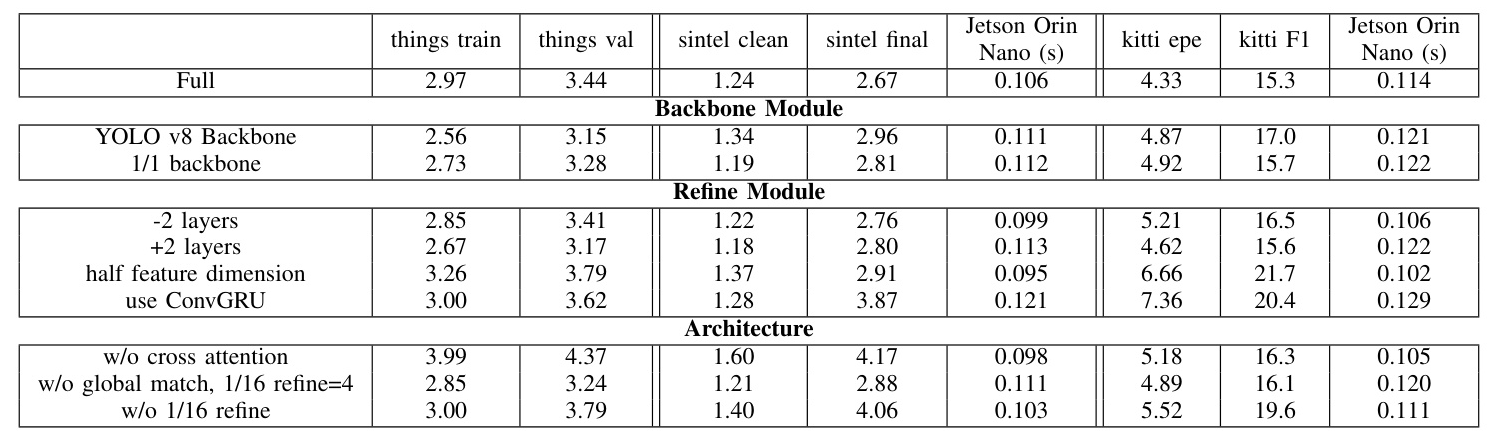

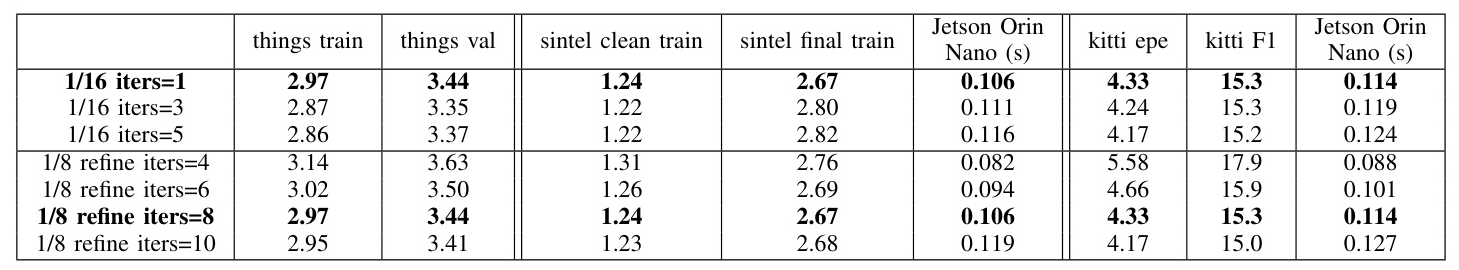

Ablation Study

An ablation study is conducted to evaluate the impact of different components on model performance. The study examines the effects of removing full-scale features, adjusting the number of refinement layers, and modifying the architecture.

Results and Analysis

Performance Comparison

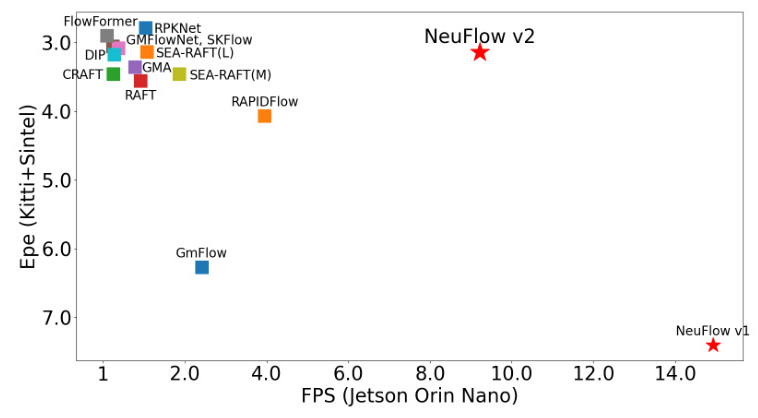

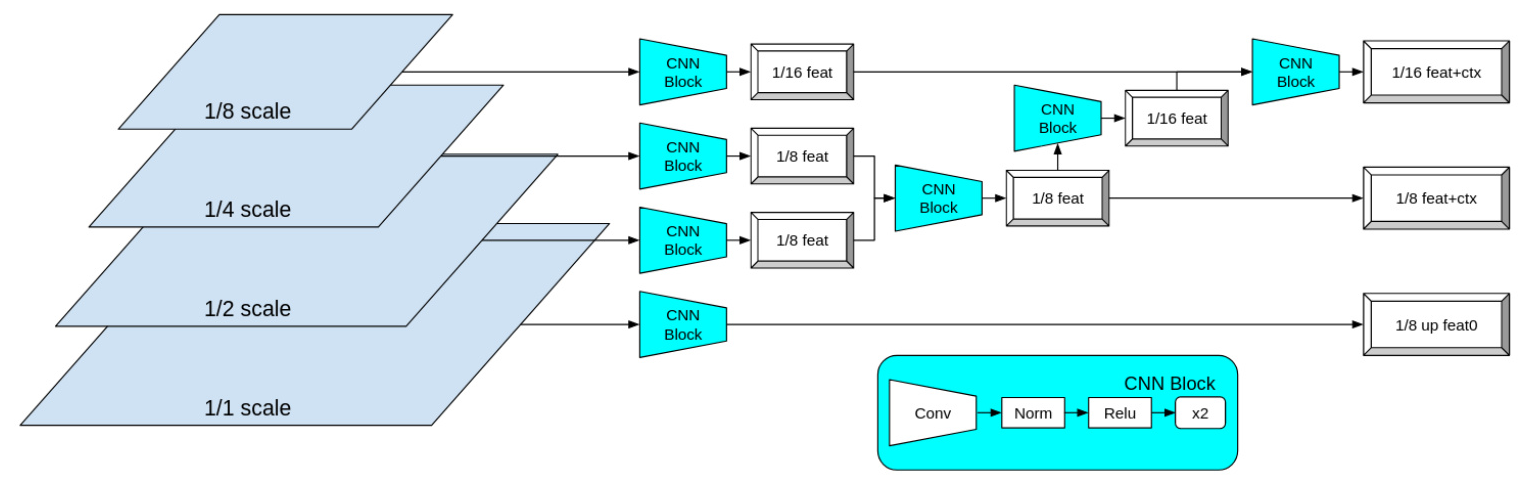

NeuFlow v2 achieves real-time performance on edge devices, running at over 20 FPS on 512×384 resolution images on a Jetson Orin Nano. The model offers a 10x-70x speedup compared to other state-of-the-art methods while maintaining comparable accuracy on both synthetic and real-world data.
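As a rough, purely illustrative piece of arithmetic: 20 FPS corresponds to a per-frame budget of 1000 / 20 = 50 ms. If the quoted 10x-70x range applied at this resolution on the same device (an assumption; the speedups may have been measured under other settings), the compared methods would need roughly 500 ms to 3500 ms per frame. The helper names below are hypothetical.

```python
def frame_time_ms(fps: float) -> float:
    """Per-frame latency in milliseconds for a given frame rate."""
    return 1000.0 / fps

def implied_baseline_ms(fast_ms: float, speedup: float) -> float:
    """Latency of a method that is `speedup` times slower than one taking fast_ms."""
    return fast_ms * speedup

budget = frame_time_ms(20.0)
for s in (10, 70):
    print(f"{s}x slower method: about {implied_baseline_ms(budget, s):.0f} ms per frame")
```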

Generalization Examples

NeuFlow v2 demonstrates strong generalization capabilities on unseen real-world images, showcasing its robustness in diverse scenarios.

Architecture Details

The architecture of NeuFlow v2 includes a simple backbone, cross-attention layers, global matching, and an iterative refinement module. This design balances efficiency and accuracy, enabling real-time inference.

Backbone and Refinement Modules

The simplified backbone extracts low-level features from multi-scale images, while the RNN refinement module iteratively improves the estimated optical flow.

Comparative Analysis

NeuFlow v2 is faster than the other methods compared while maintaining competitive accuracy. Its lightweight design and efficient refinement process account for this speed advantage.

Ablation Study Results

The ablation study reveals that removing full-scale features and adjusting the number of refinement layers impact accuracy and computation time. The study confirms that the default configuration of NeuFlow v2 provides a balanced trade-off between accuracy and efficiency.

Overall Conclusion

NeuFlow v2 presents a highly efficient optical flow estimation method that achieves real-time performance on edge devices while maintaining high accuracy. The model's lightweight backbone and fast refinement module enable significant computational savings, making it suitable for real-world applications. Future work will focus on reducing memory consumption and exploring efficient modules to further enhance performance. The code and model weights are available in the NeuFlow v2 GitHub repository.

Code:

https://github.com/neufieldrobotics/neuflow_v2