Authors:

Stefano Bannò, Kate Knill, Mark J. F. Gales

Paper:

https://arxiv.org/abs/2408.09565

Introduction

Computer-assisted language learning (CALL) is a significant area of research within natural language processing (NLP), and giving learners feedback on grammatical usage is one of its critical components. Traditionally, this feedback has been delivered through grammatical error detection (GED) and grammatical error correction (GEC) systems. These systems are useful, but they often fall short of the comprehensive feedback learners need to understand and correct their mistakes.

This paper introduces a novel approach to grammatical error feedback (GEF) that leverages large language models (LLMs) to provide holistic feedback without the need for manual annotations. The proposed method uses a grammatical lineup approach, akin to voice lineups in forensic speaker recognition, to implicitly evaluate the feedback quality by matching feedback to essay representations.

Related Work

Grammatical Error Annotation Tools

The ERRor ANnotation Toolkit (ERRANT) has become a standard tool for extracting and categorizing grammatical errors in CALL. ERRANT labels errors based on parts of speech but does not provide natural-language-based descriptions or motivations for corrections, which can be limiting for learners and teachers.
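To make the limitation concrete, here is a minimal sketch of token-level edit extraction. It is not ERRANT and does not use its API: it relies on Python's standard `difflib`, and it only borrows ERRANT's operation codes (M for missing, U for unnecessary, R for replacement) without the part-of-speech categories. The function name `extract_edits` is hypothetical.

```python
from difflib import SequenceMatcher

# Operation codes in the style of ERRANT: M(issing), U(nnecessary), R(eplacement).
_OP_CODES = {"insert": "M", "delete": "U", "replace": "R"}


def extract_edits(original: str, corrected: str):
    """Return (op, start, end, original_span, corrected_span) tuples at token level."""
    src, tgt = original.split(), corrected.split()
    matcher = SequenceMatcher(a=src, b=tgt, autojunk=False)
    edits = []
    for tag, i1, i2, j1, j2 in matcher.get_opcodes():
        if tag == "equal":
            continue  # unchanged tokens are not edits
        edits.append(
            (_OP_CODES[tag], i1, i2, " ".join(src[i1:i2]), " ".join(tgt[j1:j2]))
        )
    return edits


edits = extract_edits("She go to school yesterday", "She went to school yesterday")
for edit in edits:
    print(edit)
```

The output is a span and a label, which tells a learner what changed but not why. That gap is what natural-language feedback is meant to fill.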

Feedback Generation Systems

Previous works on feedback generation have focused on specific error types, such as preposition errors, and have used fine-tuned models like T5, BART, and GPT-2. These systems, however, often require manual annotations and are limited in scope.

Large Language Models in CALL

The advent of LLMs has opened new possibilities for automatic feedback generation. Recent studies have explored using LLMs for grammatical error explanation and joint essay scoring and feedback generation. However, these approaches often require manual validation and are limited to sentence-level corrections.

Research Methodology

Grammatical Error Feedback (GEF)

The goal of GEF is to provide holistic feedback that summarizes grammatical errors in an informative and easy-to-interpret manner. This feedback is generated using LLMs conditioned on the learner’s essay. The process can either include explicit GEC or rely solely on the original essay for feedback generation.

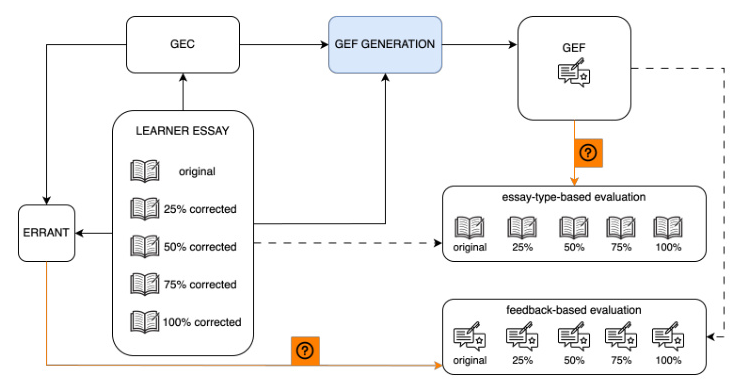

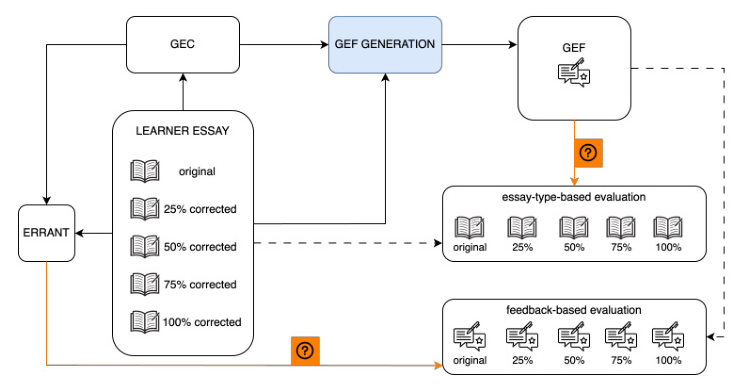

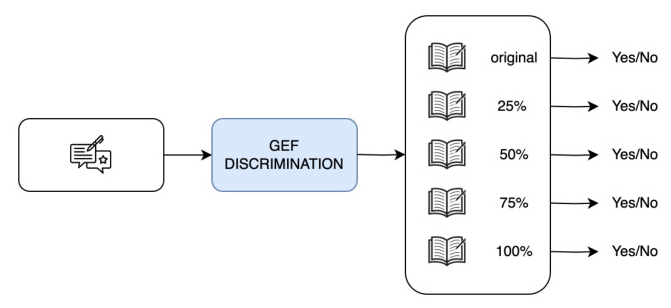

Grammatical Lineup

Given the challenge of generating reference feedback for essays, the paper proposes an implicit evaluation approach using a grammatical lineup. This involves creating a set of essay versions with varying levels of correction and matching feedback to these versions.
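One way to picture the essay versions is as the same essay with only a fraction of its known corrections applied. The sketch below is an illustration under stated assumptions, not the authors' procedure: edits are assumed to be non-overlapping token-span replacements, the edits to apply are chosen at random with a fixed seed, and the name `apply_fraction_of_edits` is hypothetical.

```python
import random


def apply_fraction_of_edits(tokens, edits, fraction, seed=0):
    """Apply roughly `fraction` of the edits to a token list.

    Each edit is (start, end, replacement_tokens) and is assumed
    not to overlap with any other edit.
    """
    n_apply = round(len(edits) * fraction)
    rng = random.Random(seed)
    chosen = rng.sample(edits, n_apply)
    out = list(tokens)
    # Apply from right to left so earlier token offsets stay valid.
    for start, end, replacement in sorted(chosen, key=lambda e: e[0], reverse=True):
        out[start:end] = replacement
    return out


tokens = "I has two cat in home".split()
edits = [(1, 2, ["have"]), (3, 4, ["cats"]), (4, 5, ["at"])]

# Versions of one essay at increasing levels of correction.
versions = {
    fraction: " ".join(apply_fraction_of_edits(tokens, edits, fraction))
    for fraction in (0.0, 0.25, 0.5, 0.75)
}
```

Feedback written for one version should describe the errors still present in that version, which is what makes the matching task informative.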

Experimental Design

Data Preparation

The experiments use essays from the Cambridge Learner Corpus (CLC), which includes data from various proficiency levels and L1 backgrounds. A total of 300 essays were selected, with 50 essays per proficiency level ranging from A1 to C2.
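The selection amounts to a balanced sample: 6 CEFR levels times 50 essays gives 300. A minimal sketch follows, assuming random sampling within each level (the summary does not say how essays were chosen) and a hypothetical record layout with `id` and `level` keys. The pool here is synthetic, since the CLC data is not reproduced.

```python
import random
from collections import defaultdict

CEFR_LEVELS = ["A1", "A2", "B1", "B2", "C1", "C2"]


def sample_per_level(essays, per_level=50, seed=0):
    """Pick `per_level` essays from each CEFR level (assumed random selection)."""
    by_level = defaultdict(list)
    for essay in essays:
        by_level[essay["level"]].append(essay)
    rng = random.Random(seed)
    selected = []
    for level in CEFR_LEVELS:
        selected.extend(rng.sample(by_level[level], per_level))
    return selected


# Synthetic stand-in for a corpus: 80 placeholder essays per level.
pool = []
for level in CEFR_LEVELS:
    for i in range(80):
        pool.append({"id": f"{level}-{i}", "level": level})

subset = sample_per_level(pool)
```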

GEC Systems

Three GEC systems were used: GECToR, Gramformer, and GPT-4o. These systems represent different categories of GEC models, including edit-based systems, sequence-to-sequence models, and LLM-based systems.

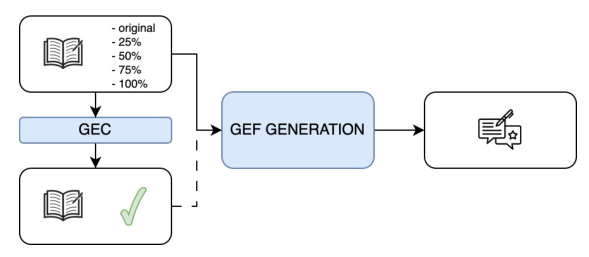

GEF Generation

Each essay version was fed into an LLM to generate feedback, either on its own (the No GEC condition) or together with its corrected version from one of the GEC systems. The study used Llama 3 8B, GPT-3.5, and GPT-4o for this purpose.
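The two input conditions, feedback from the essay alone or from the essay plus a GEC correction, can be sketched as a prompt builder. The prompt wording and the function name `build_gef_prompt` are assumptions made for illustration; the prompts actually used in the paper are not given in this summary.

```python
from typing import Optional


def build_gef_prompt(essay: str, corrected: Optional[str] = None) -> str:
    """Build a feedback prompt, optionally conditioned on a GEC correction.

    The instruction text is a hypothetical placeholder, not the paper's prompt.
    """
    parts = [
        "You are an English teacher. Summarise the grammatical errors in the "
        "learner essay below and give concise, holistic feedback.",
        f"Learner essay:\n{essay}",
    ]
    if corrected is not None:
        # Explicit GEC condition: the model also sees the corrected text.
        parts.append(f"Corrected essay (from a GEC system):\n{corrected}")
    parts.append("Feedback:")
    return "\n\n".join(parts)


prompt_no_gec = build_gef_prompt("She go to school yesterday.")
prompt_with_gec = build_gef_prompt(
    "She go to school yesterday.", "She went to school yesterday."
)
```

The resulting string would then be sent to whichever LLM generates the feedback; that call is left out because it depends on the provider.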

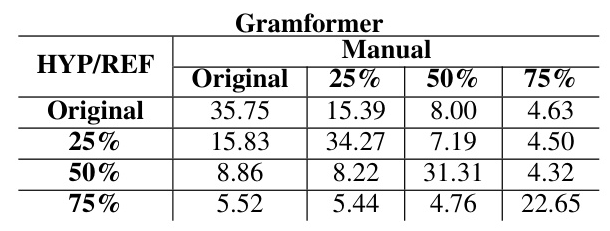

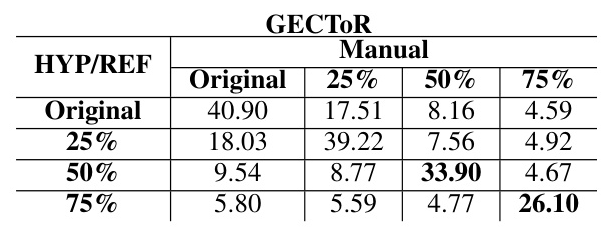

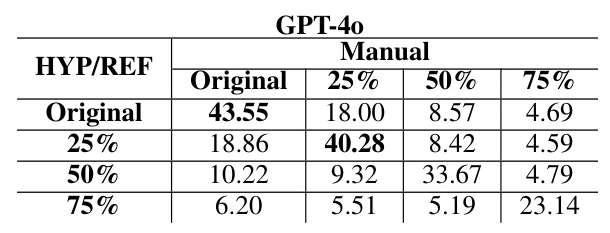

GEF Discrimination

The correctness of the feedback was evaluated using two methods: essay-type-based evaluation and feedback-based evaluation. The metric in both cases was accuracy, that is, how often a piece of feedback was matched to its corresponding essay version.
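The matching step and the accuracy metric can be sketched as follows. This is an illustration, not the paper's implementation: the scorer is a pluggable function, and the word-overlap scorer shown here is a deliberately crude stand-in for whatever model performs the matching. All names (`pick_from_lineup`, `lineup_accuracy`, `overlap_score`) are hypothetical.

```python
def pick_from_lineup(feedback, candidates, score):
    """Return the index of the candidate essay that best matches the feedback."""
    return max(range(len(candidates)), key=lambda i: score(feedback, candidates[i]))


def lineup_accuracy(trials, score):
    """trials: list of (feedback, candidate_essays, target_index) tuples."""
    correct = sum(
        pick_from_lineup(feedback, candidates, score) == target
        for feedback, candidates, target in trials
    )
    return correct / len(trials)


def overlap_score(feedback, essay):
    """Toy scorer: number of lowercase words shared by feedback and essay."""
    return len(set(feedback.lower().split()) & set(essay.lower().split()))


trials = [
    ("misuse of go with she", ["she go to school", "she goes to school"], 0),
    ("no errors found in the cats sentence", ["two cat sleep", "two cats sleep"], 1),
    ("a missing article before dog", ["I saw dog", "I saw a dog"], 0),
]
accuracy = lineup_accuracy(trials, overlap_score)
```

In the third trial the overlap scorer is pulled toward the wrong essay by a shared word, which hints at why an evaluation that leans on lexical information can behave differently from one that restricts it.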

Results and Analysis

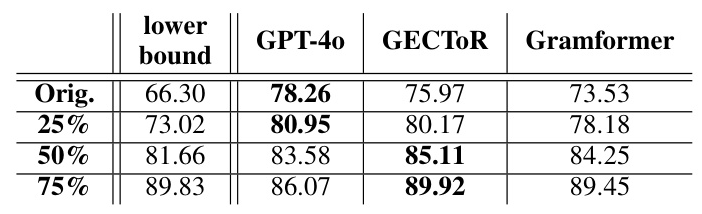

GEC Results

GPT-4o performed best on the original and 25% corrected essays, while GECToR performed better on the 50% and 75% corrected essays. This discrepancy is likely due to GPT-4o’s tendency to overcorrect: the fewer errors an essay still contains, the more its unnecessary changes count against it.

GEF Results

The GEF results indicated that using GEC information significantly improves feedback quality. GECToR was found to be the best GEC system for generating high-quality feedback, even outperforming GPT-4o in some cases.

Evaluation Methods

The feedback-based evaluation method, which restricts lexical information, showed a large drop in performance for the No GEC system, highlighting the importance of GEC in generating accurate feedback. The essay-type-based evaluation method, which includes lexical information, showed acceptable results even for the No GEC system.

Overall Conclusion

This study presents an approach to grammatical error feedback for L2 learners together with a novel implicit framework for evaluating it. The approach is cost-effective and flexible, as it does not require manual feedback annotations and can be customized with different lineups. The results demonstrate that incorporating GEC information significantly enhances feedback quality, and that the implicit evaluation method can assess GEF without manual references.

Future work will extend this framework to other languages and spoken data, potentially incorporating multimodal LLMs to further enhance feedback generation.

By combining LLM-generated feedback with an implicit evaluation method, this research contributes to the development of more effective and comprehensive CALL systems.