Authors:

Daniel Omeiza, Pratik Somaiya, Jo-Ann Pattinson, Carolyn Ten-Holter, Jack Stilgoe, Marina Jirotka, Lars Kunze

Paper:

https://arxiv.org/abs/2408.08584

Introduction

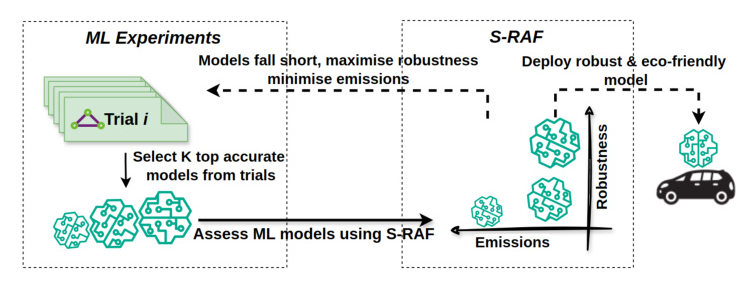

As artificial intelligence (AI) technology advances, ensuring the robustness and safety of AI-driven systems has become paramount. However, AI developers perceive robustness in different ways, which leads to misaligned evaluation metrics and complicates the assessment and certification of complex, safety-critical AI systems such as autonomous driving (AD) agents. To address this challenge, we introduce the Simulation-Based Robustness Assessment Framework (S-RAF) for autonomous driving. S-RAF leverages the CARLA driving simulator to rigorously assess AD agents across diverse conditions, including faulty sensors, environmental changes, and complex traffic situations. By quantifying robustness and its relationship with other safety-critical factors, such as carbon emissions, S-RAF helps developers and stakeholders build safe and responsible driving agents and streamline safety certification. S-RAF also offers significant advantages, such as reduced testing costs and the ability to explore edge cases that may be unsafe to test in the real world.

The Need for RAI Indicators

Robustness

Robustness is a core principle of Responsible AI (RAI). Unlike symbolic AI systems, the deep learning models often deployed in AD are trained on extensive datasets, and their complex internal structures are not readily interpretable by humans. It is therefore currently impractical to offer comprehensive assurances that neural networks will perform correctly when they encounter input data that differs significantly from what they saw during training. Many AI applications, including AD, have critical safety or security implications. We must therefore be able to assess how resilient these systems are to unforeseen events, whether those events arise naturally or are deliberately induced by malicious actors.

Carbon Emission

Training a single deep learning model, such as a natural language processing (NLP) model, on a GPU can result in significant CO2 emissions. For instance, Google's AlphaGo Zero generated 96 metric tonnes of CO2 during 40 days of training. The environmental impact of recent generative AI models is even more concerning. Efforts to build more robust, multi-task models have also been observed to harm environmental sustainability. This has implications for AD, where such models are increasingly integrated.

Previous RAI Efforts

RAI Frameworks

Over the years, considerable effort has gone into developing RAI guidelines and frameworks. These include guidance on problem formulation and on procurement decisions, that is, choosing the appropriate AI system for a given use case. They also cover ethical considerations for system design, e.g., checklists and procedures that enable participatory design. Within the machine learning workflow itself, dataset collection and training benefit from established ethical guidelines. Some frameworks also support post-training activities. Examples include fairness assessment and documentation artifacts such as model cards and datasheets, which record training details, dataset details, and model reporting.

RAI Tools

RAI tools have been developed for AI practitioners, and a few of them focus on robustness, explainability, and sustainability. Our work extends beyond robustness to include CO2 emission tracking, and it supports complex multi-modal AD agents. In summary, building responsible AD agents requires efforts beyond adversarial robustness for single-modality models, and this should be done without sacrificing environmental sustainability. S-RAF seeks to fill this gap.

S-RAF: Robustness Indicators

We consider an agent to be robust if it can sustain performance in the presence of environmental disturbances, measurement noise, and data drift without infractions of traffic rules or collisions.

Robustness against Environmental Disturbances

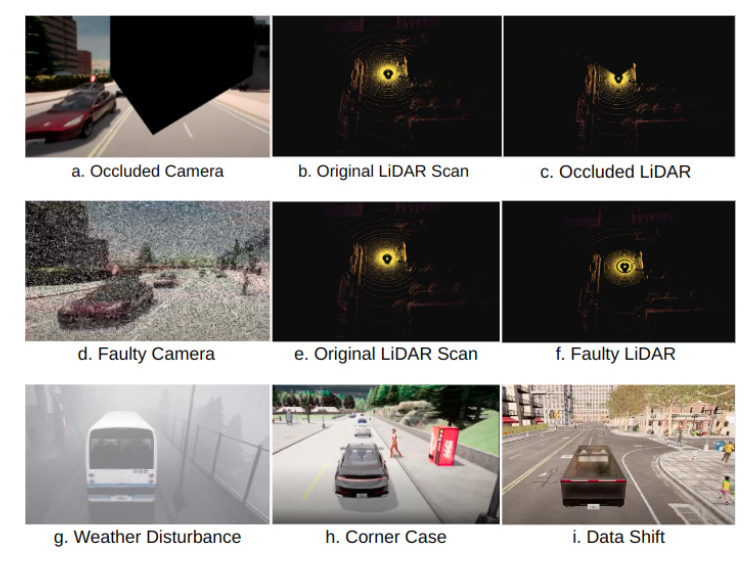

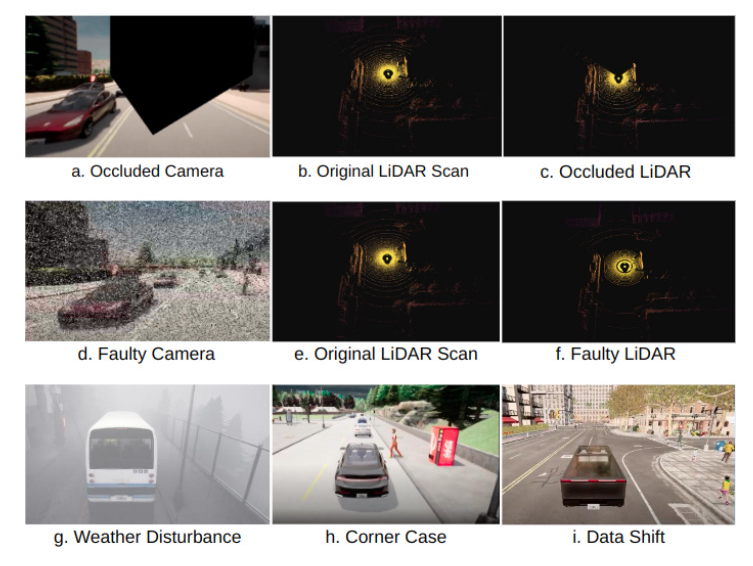

Camera Occlusion

Environmental materials such as dirt, leaves, and snow accumulation on sensors can cause camera occlusion. Camera occlusion occurs when the camera’s field of view is obstructed, leading to incomplete or inaccurate perception data.
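To make this concrete, the sketch below imitates occlusion by blanking a rectangular patch of a grayscale image, as dirt or a leaf on the lens might do. It rests on our own assumptions and is not S-RAF's actual implementation. The image is a list of pixel rows. The hypothetical helper `occlude_camera` and its `top`, `left`, `height`, and `width` parameters describe a fixed occluded rectangle.

```python
from typing import List

Image = List[List[int]]  # grayscale image as rows of 8-bit pixel values


def occlude_camera(image: Image, top: int, left: int, height: int, width: int,
                   fill: int = 0) -> Image:
    """Return a copy of `image` with a rectangular patch set to `fill`.

    Hypothetical helper: the rectangle stands in for dirt, a leaf or snow
    covering part of the lens. Parts of the rectangle that fall outside
    the image are ignored (clipped).
    """
    occluded = [row[:] for row in image]  # copy so the input is untouched
    rows = len(occluded)
    cols = len(occluded[0]) if rows else 0
    for r in range(max(0, top), min(rows, top + height)):
        for c in range(max(0, left), min(cols, left + width)):
            occluded[r][c] = fill
    return occluded


if __name__ == "__main__":
    frame = [[200] * 6 for _ in range(4)]  # uniform 4x6 test frame
    for row in occlude_camera(frame, top=1, left=2, height=2, width=3):
        print(row)
```

A real occlusion model would more likely use irregular, semi-transparent masks. A hard-edged rectangle simply keeps the idea easy to inspect.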

LiDAR Occlusion

As with cameras, environmental factors such as leaves, snow, and dirt can occlude 3-dimensional (3D) Light Detection and Ranging (LiDAR) sensors.
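One simple way to picture LiDAR occlusion is an obstruction that blocks a horizontal sector of the sensor, so that no returns arrive from that direction. The sketch below removes points whose azimuth falls inside such a sector. The sector model and the hypothetical `occlude_lidar` helper are illustrative assumptions, not a description of how S-RAF injects LiDAR occlusion.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float, float]  # (x, y, z) in the sensor frame, metres


def occlude_lidar(points: List[Point], start_deg: float,
                  end_deg: float) -> List[Point]:
    """Drop points whose horizontal azimuth lies in [start_deg, end_deg).

    Azimuth is measured counter-clockwise from the +x axis and normalised
    to [0, 360). If start_deg > end_deg, the blocked sector wraps past 0.
    """
    kept = []
    for x, y, z in points:
        azimuth = math.degrees(math.atan2(y, x)) % 360.0
        if start_deg <= end_deg:
            blocked = start_deg <= azimuth < end_deg
        else:  # sector wraps around 0 degrees, e.g. 315 -> 45
            blocked = azimuth >= start_deg or azimuth < end_deg
        if not blocked:
            kept.append((x, y, z))
    return kept
```

For example, `occlude_lidar(cloud, 315, 45)` would hide a 90-degree wedge straight ahead of the sensor.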

Weather Disturbances

Adverse weather conditions such as heavy rain, fog, snow, or glare can introduce noise and distortions in sensor data, affecting the vehicle’s ability to perceive its surroundings accurately.
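Inside the simulator, weather is configured through CARLA's own `carla.WeatherParameters` (for example, its `fog_density` and `precipitation` fields). Outside a simulator, the effect of fog on a camera image is often approximated with the atmospheric scattering model I = J·t + A·(1 − t), where t = exp(−β·d). The sketch below implements that approximation. The helper name `add_fog` and its default `beta` and `airlight` values are our own illustrative assumptions, not S-RAF's parameters.

```python
import math
from typing import List


def add_fog(image: List[List[float]], depth: List[List[float]],
            beta: float = 0.05, airlight: float = 255.0) -> List[List[float]]:
    """Blend each pixel toward the airlight according to its depth.

    Atmospheric scattering approximation: I = J * t + A * (1 - t), with
    transmission t = exp(-beta * d). J is the clear pixel value, d its depth
    in metres, beta the fog density and A the airlight (fog brightness).
    `image` and `depth` must have the same shape.
    """
    foggy = []
    for img_row, depth_row in zip(image, depth):
        out_row = []
        for pixel, d in zip(img_row, depth_row):
            t = math.exp(-beta * d)  # fraction of scene light that survives
            out_row.append(pixel * t + airlight * (1.0 - t))
        foggy.append(out_row)
    return foggy
```

Nearby pixels keep their original values, while distant pixels fade toward the fog colour. This loss of contrast with distance is what makes fog hard for perception.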

Robustness against Sensor Errors

Camera Error

Faulty camera sensors, dead sensor elements, inaccurate pixel interpretation, and intermittent electrical interference all degrade camera output.
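The following sketch combines two of these fault types: dead sensor elements, modelled as pixels stuck at 0, and electrical interference, modelled as zero-mean Gaussian noise. The fault model, the hypothetical `corrupt_camera` helper, and its default rates are assumptions for illustration only.

```python
import random
from typing import List


def corrupt_camera(image: List[List[int]], dead_fraction: float = 0.01,
                   noise_std: float = 10.0, seed: int = 0) -> List[List[int]]:
    """Simulate a faulty camera with dead pixels and Gaussian read-out noise.

    Each pixel is dead (stuck at 0) with probability `dead_fraction`.
    Every other pixel gets zero-mean Gaussian noise with standard deviation
    `noise_std` and is clipped back to the 8-bit range [0, 255].
    """
    rng = random.Random(seed)  # seeded so a faulty run can be reproduced
    corrupted = []
    for row in image:
        out_row = []
        for pixel in row:
            if rng.random() < dead_fraction:
                out_row.append(0)  # dead sensor element
            else:
                noisy = pixel + rng.gauss(0.0, noise_std)
                out_row.append(int(min(255, max(0, round(noisy)))))
        corrupted.append(out_row)
    return corrupted
```

Seeding the generator matters in a benchmark. Two agents can then be evaluated under exactly the same corrupted inputs.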

LiDAR Error

3D LiDARs use multiple channels, each a distinct laser beam, to provide a wider vertical field of view (FOV). One example of hardware failure in 3D LiDARs is channel failure, in which a particular beam stops functioning.
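The sketch below shows channel failure under one assumption: each return carries the index of the channel that produced it. Failing a channel then amounts to discarding every return from that beam. The tuple layout and the helper names `fail_channels` and `random_channel_failure` are hypothetical.

```python
import random
from typing import Iterable, List, Set, Tuple

# (x, y, z, channel): a LiDAR return tagged with the index of the laser
# beam (channel) that produced it. This layout is an assumption.
Return = Tuple[float, float, float, int]


def fail_channels(points: Iterable[Return], failed: Set[int]) -> List[Return]:
    """Remove every return produced by a failed channel."""
    return [p for p in points if p[3] not in failed]


def random_channel_failure(num_channels: int, num_failed: int,
                           seed: int = 0) -> Set[int]:
    """Pick `num_failed` distinct channels to fail, reproducibly."""
    rng = random.Random(seed)
    return set(rng.sample(range(num_channels), num_failed))
```

For example, `fail_channels(cloud, random_channel_failure(32, 4))` would mimic a 32-channel sensor with four dead beams. This leaves horizontal gaps at the corresponding elevations.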

Other Sensor Errors

In addition to cameras and LiDARs, we introduced random noise, drawn from a uniform distribution, to the sensor data coming from positional measurement-related sensors such as the Global Navigation Satellite System (GNSS), the Inertial Measurement Unit (IMU), and the speedometer.
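The sketch below adds uniform noise to positional readings. The field names and the half-widths in `NOISE_BOUNDS` are hypothetical placeholders; the noise bounds S-RAF actually uses are not given in this summary. Each reading with a bound b receives noise drawn from U(−b, b).

```python
import random
from typing import Dict

# Hypothetical half-widths of the uniform noise for each reading.
# These are placeholders, not the values used in S-RAF.
NOISE_BOUNDS: Dict[str, float] = {
    "gnss_lat": 1e-5,     # degrees
    "gnss_lon": 1e-5,     # degrees
    "imu_accel_x": 0.5,   # m/s^2
    "imu_gyro_z": 0.05,   # rad/s
    "speed": 1.0,         # m/s (speedometer)
}


def add_uniform_noise(reading: Dict[str, float], bounds: Dict[str, float],
                      rng: random.Random) -> Dict[str, float]:
    """Add U(-b, b) noise to each field that has a bound b.

    Fields without a bound are copied unchanged.
    """
    noisy = {}
    for key, value in reading.items():
        b = bounds.get(key)
        noisy[key] = (value + rng.uniform(-b, b)) if b is not None else value
    return noisy
```

Uniform noise bounds the worst-case error per reading. This makes it easy to reason about the largest disturbance an agent had to tolerate.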

Robustness against Corner Cases

Corner cases are extreme situations that may not occur in regular traffic but can challenge the capabilities of AD systems. Examples include an actor violating traffic rules, such as a pedestrian crossing outside a crosswalk, and unexpected obstacles, such as debris on the road.

S-RAF: Carbon Emission Indicator

It is challenging to measure accurately the total CO2 that autonomous vehicles emit. However, we can estimate the share attributable to the AI model that powers a vehicle at inference time. To do so, we take the carbon intensity (CI) of the region where the model runs and multiply it by the energy (E) consumed by the process running the model: CO2 emissions (kg CO2eq) = CI × E. Here, CI is in kg CO2eq per kWh and E is in kWh.
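A minimal sketch of the CI × E calculation follows, with a helper that turns an average power draw over a run into energy. The function names and the example numbers are illustrative assumptions. In practice, E would come from measuring the process running the model, and CI from a regional carbon-intensity source.

```python
def energy_kwh_from_power(avg_power_watts: float, duration_s: float) -> float:
    """Convert an average power draw (W) over a duration (s) into kWh."""
    return avg_power_watts * duration_s / 3_600_000.0  # 1 kWh = 3.6e6 J


def inference_emissions_kg(carbon_intensity_kg_per_kwh: float,
                           energy_kwh: float) -> float:
    """CO2 emissions (kg CO2eq) = CI (kg CO2eq/kWh) x E (kWh)."""
    if carbon_intensity_kg_per_kwh < 0 or energy_kwh < 0:
        raise ValueError("carbon intensity and energy must be non-negative")
    return carbon_intensity_kg_per_kwh * energy_kwh
```

With illustrative numbers, a process drawing 300 W for 600 s uses 0.05 kWh. At a CI of 0.2 kg CO2eq/kWh, that corresponds to 0.01 kg CO2eq.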

Experiment

Traffic Setup

The traffic setup was built in the CARLA simulator. For the experiment reported in this paper, we created a complex route and spawned multiple actors to roam around the town. The route includes different road structures, such as junctions and intersections. The actors include a range of vehicles, from cyclists to trucks, which interact with the agent under test in various ways.

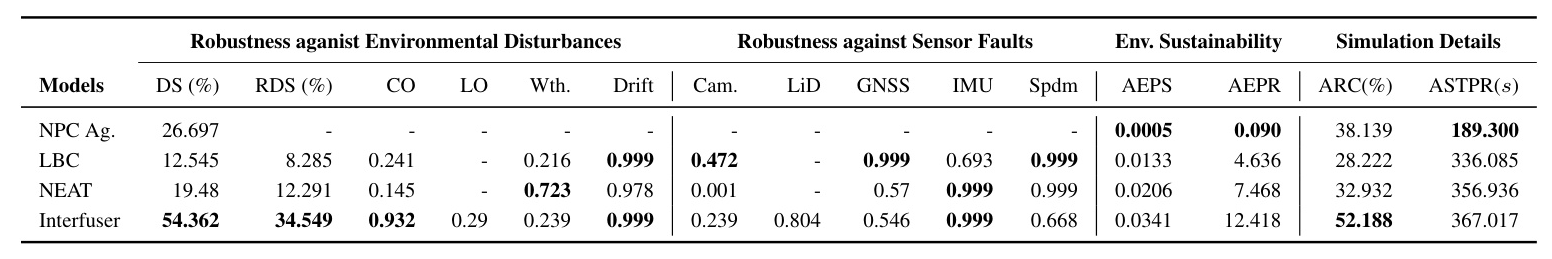

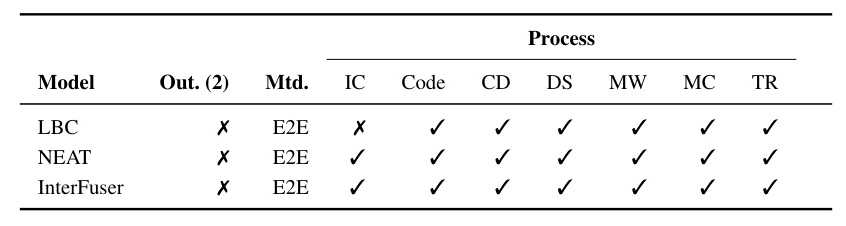

Driving Agent Details

Based on the leaderboard results, we selected three trained agents, LBC, NEAT, and InterFuser, from the 2020, 2021, and 2022 CARLA Challenges, respectively. We chose one agent per year in two steps. First, we sorted the entries by driving score. Second, we selected the highest-ranked agent that provided enough detail to be easily reproduced.
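The two-step selection rule can be sketched as follows. The `LeaderboardEntry` fields, especially the boolean `reproducible` flag, are hypothetical simplifications. In practice, judging reproducibility means checking whether code, weights, and configuration are available.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class LeaderboardEntry:
    name: str
    driving_score: float
    reproducible: bool  # hypothetical flag: enough public detail to rerun


def select_agent(entries: List[LeaderboardEntry]) -> Optional[LeaderboardEntry]:
    """Return the highest-scoring entry that is reproducible.

    Step 1 sorts the entries by driving score, highest first.
    Step 2 returns the first reproducible entry, or None if there is none.
    """
    for entry in sorted(entries, key=lambda e: e.driving_score, reverse=True):
        if entry.reproducible:
            return entry
    return None
```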

Regular Driving Score

We used the driving score metric to assess the driving performance of the agents. The driving score is the product of the route completion and the infraction penalty. We track different types of infractions (e.g., collision with a vehicle, running a red light, etc.) in which the agent was involved.
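The sketch below computes the driving score with a multiplicative infraction penalty, P = ∏ p_j^(n_j), where n_j is the number of infractions of type j. The coefficients in `PENALTY` follow the CARLA Leaderboard 1.0 documentation to the best of our knowledge. Treat them as an assumption and check them against the leaderboard version in use. The dictionary keys are our own hypothetical labels.

```python
from typing import Dict

# Penalty coefficient per infraction type (assumed from CARLA Leaderboard
# 1.0 documentation; keys are hypothetical labels).
PENALTY: Dict[str, float] = {
    "collision_pedestrian": 0.50,
    "collision_vehicle": 0.60,
    "collision_static": 0.65,
    "red_light": 0.70,
    "stop_sign": 0.80,
}


def infraction_penalty(counts: Dict[str, int]) -> float:
    """P = product over infraction types j of p_j ** n_j (1.0 if none)."""
    penalty = 1.0
    for kind, n in counts.items():
        penalty *= PENALTY[kind] ** n
    return penalty


def driving_score(route_completion_pct: float, counts: Dict[str, int]) -> float:
    """Driving score = route completion (%) x infraction penalty."""
    return route_completion_pct * infraction_penalty(counts)
```

For example, an agent that completes 80% of the route with one vehicle collision and one red-light infraction scores roughly 80 × 0.6 × 0.7 ≈ 33.6.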

Robustness Driving Score

We ran the same route multiple times, introducing a different type of disturbance in each run. The driving scores of these runs are grouped by the type of disturbance introduced, and a robustness ratio is computed for each condition. The robustness driving score, which is used to rank the agents, is the mean of all these driving scores.
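The sketch below aggregates the disturbed runs. This summary does not give the exact formula for the robustness ratio, so the one used here is an assumption: the mean driving score under a condition divided by the undisturbed baseline driving score. The robustness driving score is the mean over all disturbed runs, as described above. The condition labels and the helper name `robustness_summary` are hypothetical.

```python
from collections import defaultdict
from statistics import mean
from typing import Dict, List, Tuple


def robustness_summary(runs: List[Tuple[str, float]],
                       baseline_score: float) -> Tuple[Dict[str, float], float]:
    """Group disturbed-run driving scores by condition.

    runs: (condition label, driving score) for each disturbed run.
    Returns (robustness ratio per condition, robustness driving score).
    Assumed ratio: mean score under a condition / undisturbed baseline.
    """
    if baseline_score <= 0:
        raise ValueError("baseline driving score must be positive")
    by_condition: Dict[str, List[float]] = defaultdict(list)
    for condition, score in runs:
        by_condition[condition].append(score)
    ratios = {c: mean(s) / baseline_score for c, s in by_condition.items()}
    robustness_score = mean(score for _, score in runs)
    return ratios, robustness_score
```

A ratio close to 1 would mean the condition barely affected the agent. A ratio well below 1 would flag a weak point, such as a sensor the agent relies on heavily.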

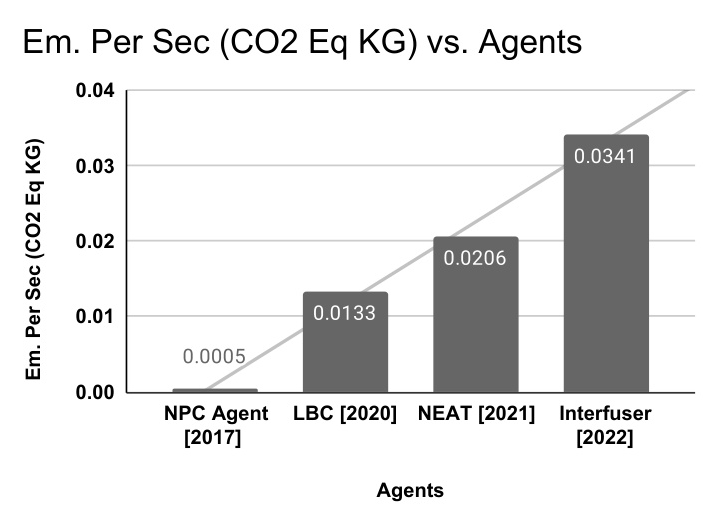

Estimating Carbon Emissions

For each run, we estimate the amount of carbon (in kg CO2eq) emitted as a result of running the agent. We report both the average CO2 emission per run and the average CO2 emission per second.
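The sketch below computes both averages from per-run measurements. We assume that the average emission per second is the mean of each run's emissions divided by its duration. Total emissions divided by total time would be an equally plausible reading, and it weights longer runs more heavily. The helper name is hypothetical.

```python
from statistics import mean
from typing import List, Tuple


def emission_summary(runs: List[Tuple[float, float]]) -> Tuple[float, float]:
    """Summarise (emissions_kg, duration_s) measurements, one per run.

    Returns (average kg CO2eq per run, average kg CO2eq per second).
    The per-second figure is the mean of per-run rates (an assumption).
    """
    if any(duration <= 0 for _, duration in runs):
        raise ValueError("run durations must be positive")
    avg_per_run = mean(kg for kg, _ in runs)
    avg_per_second = mean(kg / duration for kg, duration in runs)
    return avg_per_run, avg_per_second
```

Normalising by time matters because a slower agent, or one that stops early after a collision, would otherwise appear cleaner or dirtier simply because its run lasted a different length of time.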

Results

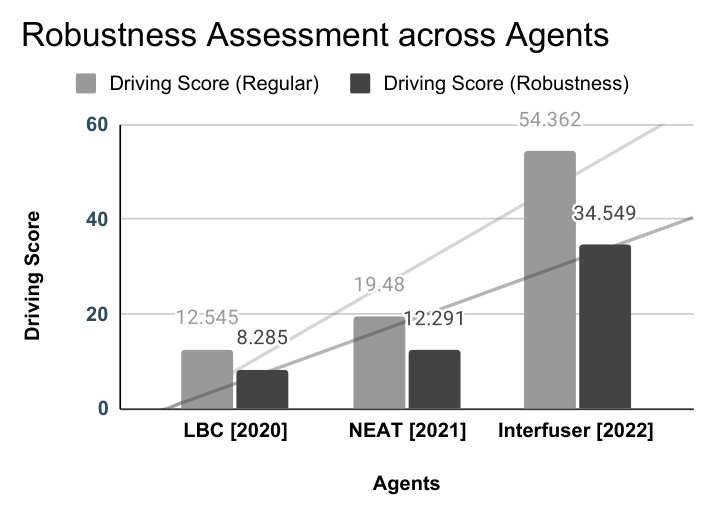

Robustness

The results show that InterFuser achieved both the highest driving score and the highest overall robustness driving score. The camera appears to be the least fault-tolerant sensor, because the greatest performance decline occurred when noise was applied to it. Robustness readings should be interpreted alongside the average route completion (ARC) and the driving score.

Carbon Emissions

It is challenging to assess how much a vehicle as a whole contributes to emissions. However, we can estimate the share attributable to the underlying AI model that controls navigation. For fair comparisons between runs of different durations, we estimated the average emissions per second (AEPS). As the AD agents became more sophisticated and more robust, the amount of CO2 they emitted increased.

Discussion and Limitations

We have drawn attention to the need for accessible frameworks with RAI indicators, starting with robustness. Such frameworks serve as safety guardrails for AD agent development. Conventional assessment approaches for AI agents focus on prediction accuracy and include few examples of critical edge cases. S-RAF improves on them by quantifying different aspects of robustness and environmental sustainability. Its robustness metric accounts for the core safety-critical sensors in an autonomous vehicle (AV). It tests how capable the agent remains when one or more of these sensors malfunction, or when environmental factors limit their sensing range. Our results indicate that robustness and safe driving capability improved from the 2020 agent to the 2022 agent.

Greenhouse gas emissions from vehicles have implications for human safety. With S-RAF, AI developers have the option to discard models that emit excessive amounts of CO2. One sustainability limitation of S-RAF is that it does not account for emissions from manufacturing hardware components, such as electric vehicle batteries, whose manufacture is a notable source of CO2. Another limitation is that we have tested S-RAF only on synthetic driving data from CARLA. In addition, S-RAF itself emits CO2 indirectly when it runs the agents under assessment. However, these runs are short compared with the extended period over which models run once deployed. We therefore consider the use of S-RAF justifiable, as it can help prevent the deployment of unsafe models.

Conclusion

We have argued for the need for an accessible framework for assessing AD agents. We developed S-RAF, a framework aimed at guiding developers in building responsible AD agents that are robust and environmentally friendly. S-RAF can equally support AV regulators and other authorised stakeholders in assessing AD agents. Through an experiment, we showed how the indicators in S-RAF were developed, and we tested them on benchmark AD agents in the CARLA simulator. Robustness improved over time (from 2020 to 2022), while CO2 emissions increased as the models became more sophisticated. Lastly, we discussed the implications of these findings and outlined a future research agenda.

Acknowledgement

This work was supported by the EPSRC RAILS project (grant reference: EP/W011344/1), Amazon Web Services (AWS), and the Embodied AI Foundation.

Code:

https://github.com/cognitive-robots/rai-leaderboard