Authors:

Sang-Hoon Lee, Ha-Yeong Choi, Seong-Whan Lee

Paper:

https://arxiv.org/abs/2408.08019

Introduction

The paper “Accelerating High-Fidelity Waveform Generation via Adversarial Flow Matching Optimization” introduces PeriodWave-Turbo, a high-fidelity and efficient waveform generation model. This model leverages adversarial flow matching optimization to enhance the performance of pre-trained Conditional Flow Matching (CFM) generative models. The primary goal is to address the limitations of existing models, such as the need for numerous Ordinary Differential Equation (ODE) steps and the lack of high-frequency information in generated samples.

Related Works

Accelerating Methods for Few-Step Generator

Diffusion-based generative models have shown impressive performance but suffer from slow inference speeds due to iterative sampling processes. Various methods have been proposed to accelerate synthesis speed, including:

- Consistency Models (CM): Introduces one-step or few-step generation by mapping noise directly to data.

- Consistency Trajectory Models (CTM): Integrates CM and score-based models with adversarial training.

- FlashSpeech: Proposes adversarial consistency training using SSL-based pre-trained models.

- Denoising Diffusion GAN (DDGAN): Integrates the denoising process with a multimodal conditional GAN for faster sampling.

- UFOGen: Enhances performance using an improved reconstruction loss and pre-trained diffusion models.

- Adversarial Diffusion Distillation (ADD): Leverages pre-trained teacher models for distillation and adversarial training.

- Latent Adversarial Diffusion Distillation (LADD): Unifies the discriminator and teacher models for efficient training.

- Distribution Matching Distillation (DMD): Demonstrates high-quality one-step generation via distribution matching distillation and a reconstruction loss.

Adversarial Feedback for Waveform Generation

GAN-based models have dominated waveform generation tasks by utilizing various well-designed discriminators to capture specific characteristics of waveform signals. Notable models include:

- MelGAN: Proposes a multi-scale discriminator (MSD) that reflects features at different scales of the waveform signal.

- HiFi-GAN: Introduces the multi-period discriminator (MPD) to capture implicit period features.

- UnivNet: Presents the multi-resolution spectrogram discriminator (MRD) to capture different features in the spectral domain.

- Fre-GAN: Utilizes resolution-wise discriminators.

- Avocodo: Proposes the collaborative multi-band discriminator (CoMBD) and sub-band discriminator (SBD).

- EnCodec: Modifies MRD using complex values of spectral features.

- MS-SB-CQTD: Improves modeling of pitch and harmonic information in waveform signals.

PeriodWave-Turbo

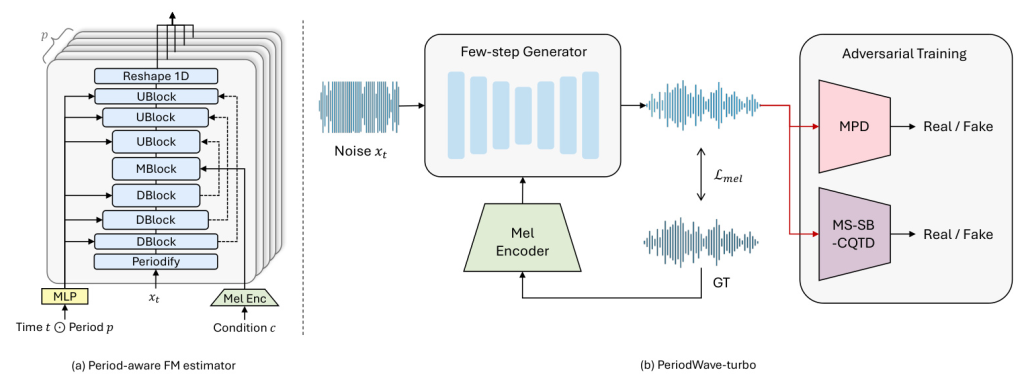

Flow Matching for Waveform Generation

Flow matching techniques have shown potential in generating high-quality waveforms by aligning the probability flow between noise and target distribution. PeriodWave employs CFM to create a waveform generator and incorporates a period-aware generator architecture to capture temporal features of input signals with greater precision. However, iterative processing steps can be slow, posing challenges for real-time applications.
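To make the flow matching objective concrete, here is a minimal sketch of how one training pair could be built. It assumes the linear noise-to-data path commonly used in the flow matching literature; the summary above does not spell out the exact path, and the names `cfm_pair`, `flow_matching_loss`, and `sigma_min` are illustrative rather than taken from the paper's code.

```python
def cfm_pair(x0, x1, t, sigma_min=1e-4):
    """Return (x_t, target velocity) for noise x0, data x1, and time t in [0, 1].

    Assumed path: x_t = (1 - (1 - sigma_min) * t) * x0 + t * x1,
    whose time derivative is the regression target x1 - (1 - sigma_min) * x0.
    """
    scale = 1.0 - (1.0 - sigma_min) * t
    x_t = [scale * n + t * d for n, d in zip(x0, x1)]
    target = [d - (1.0 - sigma_min) * n for n, d in zip(x0, x1)]
    return x_t, target


def flow_matching_loss(predicted, target):
    """Mean squared error between predicted and target velocities."""
    return sum((p - u) ** 2 for p, u in zip(predicted, target)) / len(target)


if __name__ == "__main__":
    noise = [1.0, -2.0]   # stands in for a Gaussian noise sample
    data = [0.5, 0.25]    # stands in for a waveform segment
    x_t, u = cfm_pair(noise, data, t=0.3)
    # A generator that predicts all zeros would incur this loss:
    print(flow_matching_loss([0.0, 0.0], u))
```

In this setup the generator would be trained to regress `target` from `x_t`, the conditioning, and `t`; at inference time an ODE solver integrates the learned velocity from noise to data.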

Adversarial Flow Matching Optimization

Few-step Generator Modification

To accelerate waveform generation, the pre-trained CFM generator is modified into a fixed-step generator. Its parameters are initialized from the pre-trained PeriodWave, and raw waveform signals are generated from noise using few-step ODE sampling with the Euler method and a fixed number of steps. Fine-tuning with a fixed step count specializes the model for that sampling schedule, which makes it easier to optimize.
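The fixed-step sampling described above can be sketched as a plain Euler loop. This is an illustration only: `toy_field` is a hypothetical stand-in for the neural generator G(x_t, c, t), and `euler_sample` is not a function from the PeriodWave repository.

```python
def euler_sample(vector_field, x0, cond, num_steps=4):
    """Integrate dx/dt = vector_field(x, cond, t) from t=0 to t=1 with fixed Euler steps."""
    x = list(x0)
    dt = 1.0 / num_steps
    for i in range(num_steps):
        t = i * dt
        v = vector_field(x, cond, t)
        x = [xi + dt * vi for xi, vi in zip(x, v)]
    return x


def toy_field(x, cond, t):
    """Hypothetical field that moves x along a straight line toward cond, arriving at t=1."""
    return [(c - xi) / (1.0 - t) for c, xi in zip(cond, x)]


if __name__ == "__main__":
    noise = [0.3, -1.2, 0.8]   # stands in for Gaussian noise
    target = [0.0, 0.5, -0.5]  # stands in for the conditioned waveform
    print(euler_sample(toy_field, noise, target, num_steps=4))
```

Because the whole loop is differentiable and has a fixed length, a loss computed on the final output can in principle be backpropagated through every step, which is what allows the output-level losses described next.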

Reconstruction Loss

Pre-training with flow matching objectives only supervises the vector field. With fixed-step sampling, in contrast, the ODE solver produces a raw waveform, so reconstruction losses can be applied to it directly. The Mel-spectrogram reconstruction loss is adopted to emphasize perceptually relevant frequency content:

\[ L_{mel} = \| \psi(x) - \psi(\hat{x}) \|_1 \]

where \(\psi\) denotes the Mel-spectrogram transform and \(\hat{x}\) is sampled with \(G(x_t, c, t)\) and the ODE solver (Euler method) with fixed steps.
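The structure of this loss can be sketched as an L1 distance between feature representations. To keep the example dependency-free, `frame_log_energy` is a hypothetical stand-in for the Mel-spectrogram transform; a real implementation would use an STFT and a Mel filterbank. The sketch also averages over frames, which differs from the norm above only by a constant factor.

```python
import math


def frame_log_energy(x, frame=4, eps=1e-5):
    """Stand-in feature transform: log energy of non-overlapping frames (NOT a Mel-spectrogram)."""
    return [
        math.log(sum(s * s for s in x[i:i + frame]) + eps)
        for i in range(0, len(x) - frame + 1, frame)
    ]


def l1_feature_loss(x, x_hat, psi=frame_log_energy):
    """Mean absolute difference between psi(x) and psi(x_hat)."""
    a, b = psi(x), psi(x_hat)
    return sum(abs(p - q) for p, q in zip(a, b)) / len(a)


if __name__ == "__main__":
    reference = [0.1 * i for i in range(8)]
    generated = [0.5 * s for s in reference]  # same shape, lower amplitude
    print(l1_feature_loss(reference, generated))
```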

Adversarial Training

To ensure high-quality waveform generation, adversarial feedback is provided by the multi-period discriminator (MPD) and the multi-scale sub-band Constant-Q Transform discriminator (MS-SB-CQTD):

\[ L_{adv}(D) = \mathbb{E}_x \left[ (D(x) - 1)^2 + D(G(x_t, c, t))^2 \right] \]

\[ L_{adv}(G) = \mathbb{E}_x \left[ (D(G(x_t, c, t)) - 1)^2 \right] \]

Additionally, a feature matching loss \(L_{fm}\) is used, which is the L1 distance between the discriminator features of the ground-truth \(x\) and the generated \(\hat{x}\).
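The least-squares form of these objectives can be written out directly. The sketch below operates on plain lists of discriminator outputs and features; the function names are illustrative, and the choice to average within a layer and sum across layers in the feature matching term is an assumption, since the text above only specifies an L1 distance.

```python
def discriminator_loss(d_real, d_fake):
    """Least-squares discriminator loss: push D(x) toward 1 and D(x_hat) toward 0."""
    real_term = sum((r - 1.0) ** 2 for r in d_real) / len(d_real)
    fake_term = sum(f ** 2 for f in d_fake) / len(d_fake)
    return real_term + fake_term


def generator_adv_loss(d_fake):
    """Least-squares generator loss: push D(x_hat) toward 1."""
    return sum((f - 1.0) ** 2 for f in d_fake) / len(d_fake)


def feature_matching_loss(feats_real, feats_fake):
    """L1 distance between per-layer discriminator features (mean per layer, summed over layers)."""
    total = 0.0
    for layer_real, layer_fake in zip(feats_real, feats_fake):
        total += sum(abs(a - b) for a, b in zip(layer_real, layer_fake)) / len(layer_real)
    return total


if __name__ == "__main__":
    print(discriminator_loss([0.9, 1.1], [0.2, -0.1]))
    print(generator_adv_loss([0.2, -0.1]))
    print(feature_matching_loss([[1.0, 2.0]], [[1.0, 4.0]]))
```

With several discriminators (here MPD and MS-SB-CQTD, each with multiple sub-discriminators), these terms would typically be accumulated over all of them.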

Distillation Method

Recent work on few-step text-to-image generation distills pre-trained diffusion models into faster generators. The paper compares this kind of distillation for waveform generation, using the pre-trained FM generator as the fake vector field estimator.

Final Loss

The total loss for PeriodWave-Turbo is expressed as:

\[ L_{final} = L_{adv}(G) + \lambda_{fm} L_{fm} + \lambda_{mel} L_{mel} \]

where \(\lambda_{fm}\) and \(\lambda_{mel}\) are set to 2 and 45, respectively.
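As a small worked example of the weighting, the function below combines the three generator terms with the stated weights. The input values are made up purely to show the arithmetic and are not results from the paper.

```python
def generator_total_loss(l_adv_g, l_fm, l_mel, lambda_fm=2.0, lambda_mel=45.0):
    """Weighted sum of adversarial, feature matching, and Mel reconstruction terms."""
    return l_adv_g + lambda_fm * l_fm + lambda_mel * l_mel


if __name__ == "__main__":
    # Illustrative values only: 0.5 + 2 * 0.25 + 45 * 0.1
    print(generator_total_loss(l_adv_g=0.5, l_fm=0.25, l_mel=0.1))
```

With a weight of 45, even a modest Mel reconstruction error contributes strongly to the total relative to the other two terms.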

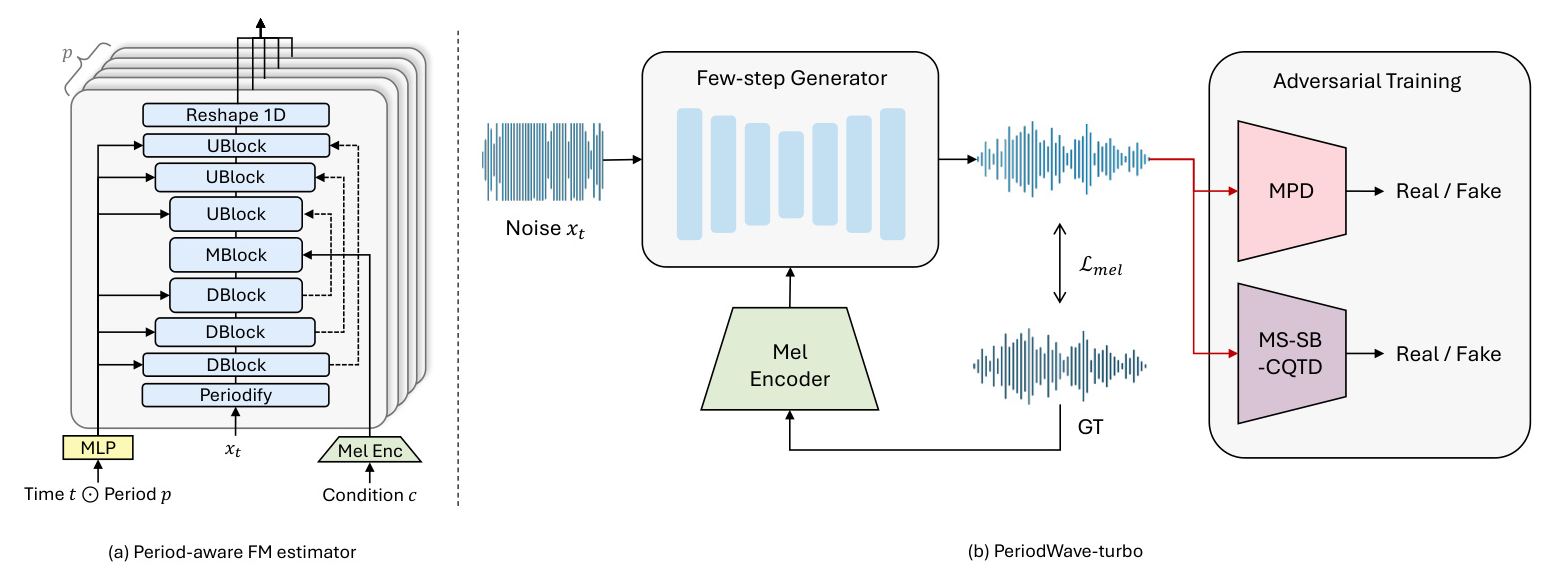

Model Size

PeriodWave and PeriodWave-Turbo are trained in three model sizes: Small (S, 7.57M parameters), Base (B, 29.80M parameters), and Large (L, 70.24M parameters).

Experiment and Result

Dataset

The models are trained with the LJSpeech and LibriTTS datasets, widely used for waveform reconstruction tasks.

Pre-training

PeriodWave-S and PeriodWave-B are pre-trained with flow matching objectives for 1M steps using the AdamW optimizer. PeriodWave-L is pre-trained with the same hyperparameters on four NVIDIA A100 80GB GPUs.

Training

PeriodWave-Turbo is fine-tuned from the pre-trained PeriodWave with adversarial flow matching optimization. This requires significantly fewer training steps than training a GAN-based model from scratch.

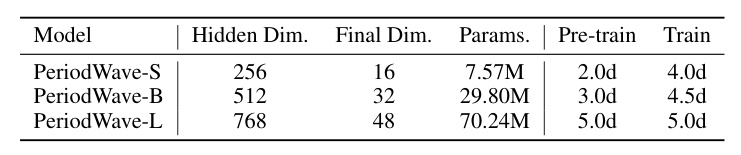

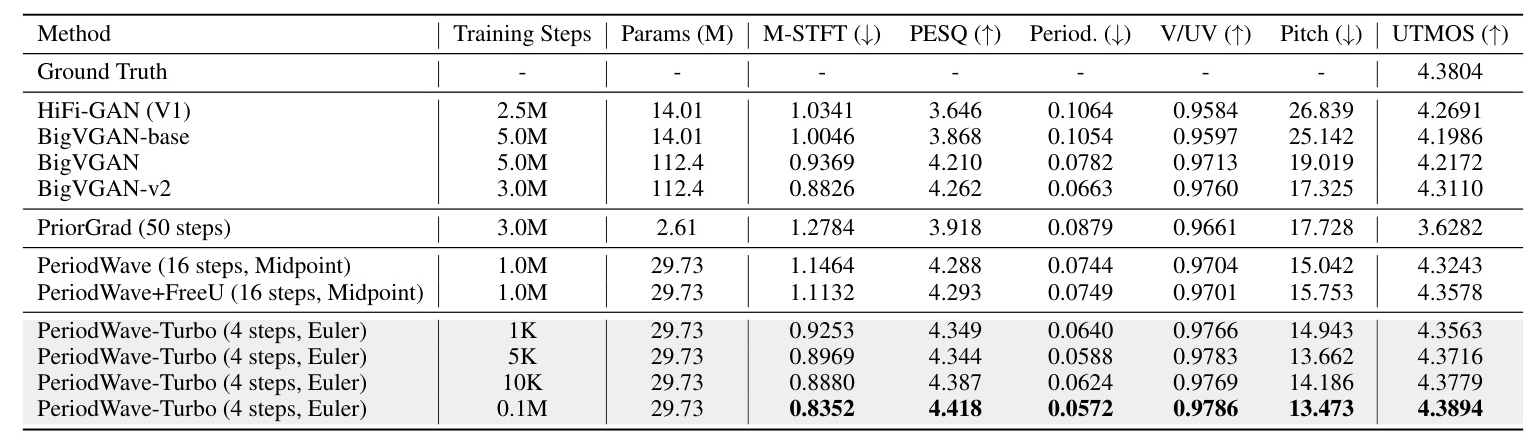

ODE Sampling

PeriodWave-Turbo models use the Euler method with four sampling steps, whereas the teacher models use the Midpoint method with 16 steps.
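To illustrate the cost difference between the two solvers, the sketch below counts vector-field evaluations. Euler calls the field once per step and the midpoint method twice, so four Euler steps need 4 evaluations while 16 midpoint steps need 32. The toy scalar ODE dx/dt = -x is an assumption standing in for the neural generator, and `integrate` is an illustrative helper, not part of the paper's code.

```python
import math


def integrate(field, x, num_steps, method="euler"):
    """Fixed-step integration on [0, 1]; returns (final x, number of field evaluations)."""
    dt = 1.0 / num_steps
    calls = 0
    for i in range(num_steps):
        t = i * dt
        k1 = field(x, t)
        calls += 1
        if method == "euler":
            x = x + dt * k1
        elif method == "midpoint":
            # Second evaluation at the half step, then a full step with that slope.
            k2 = field(x + 0.5 * dt * k1, t + 0.5 * dt)
            calls += 1
            x = x + dt * k2
        else:
            raise ValueError(f"unknown method: {method}")
    return x, calls


def decay(x, t):
    """Toy field dx/dt = -x, with exact solution x(1) = x(0) * exp(-1)."""
    return -x


if __name__ == "__main__":
    exact = math.exp(-1.0)
    for method, steps in (("euler", 4), ("midpoint", 16)):
        value, calls = integrate(decay, 1.0, steps, method)
        print(method, steps, calls, abs(value - exact))
```

On this toy problem, four Euler steps are noticeably less accurate than 16 midpoint steps when the field is left unchanged. That gap is the motivation for fine-tuning the generator specifically for the few-step schedule rather than simply reducing the step count of the teacher.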

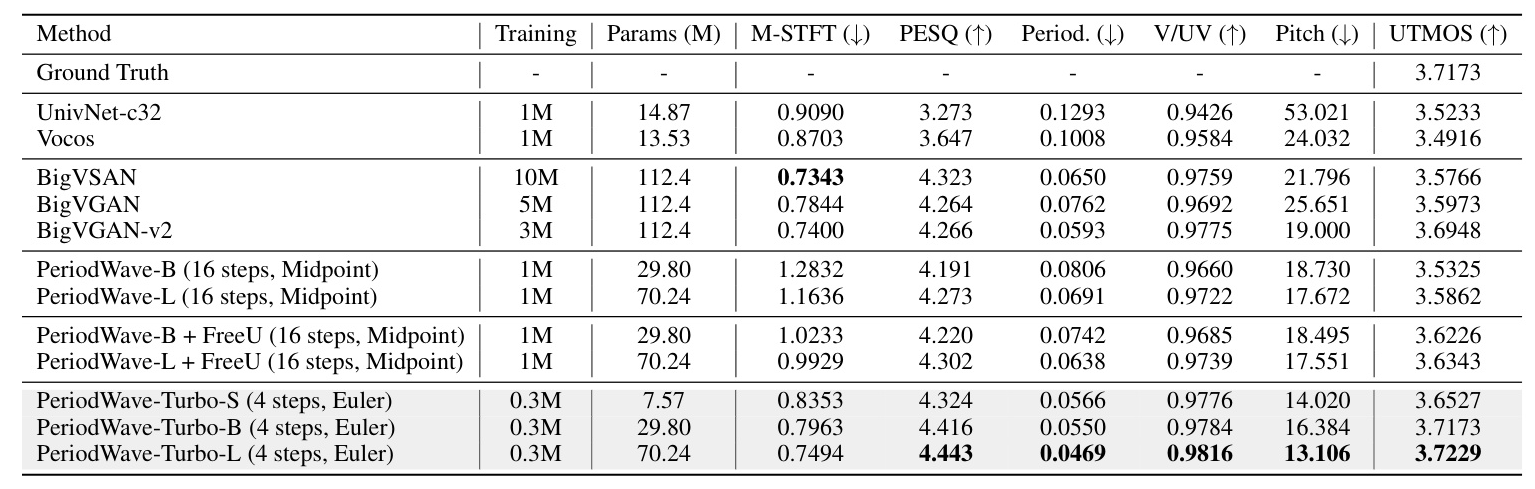

LJSpeech: High-quality Single Speaker Dataset

PeriodWave-Turbo achieves state-of-the-art performance in all objective metrics on the LJSpeech benchmark, demonstrating the efficiency of adversarial flow matching optimization.

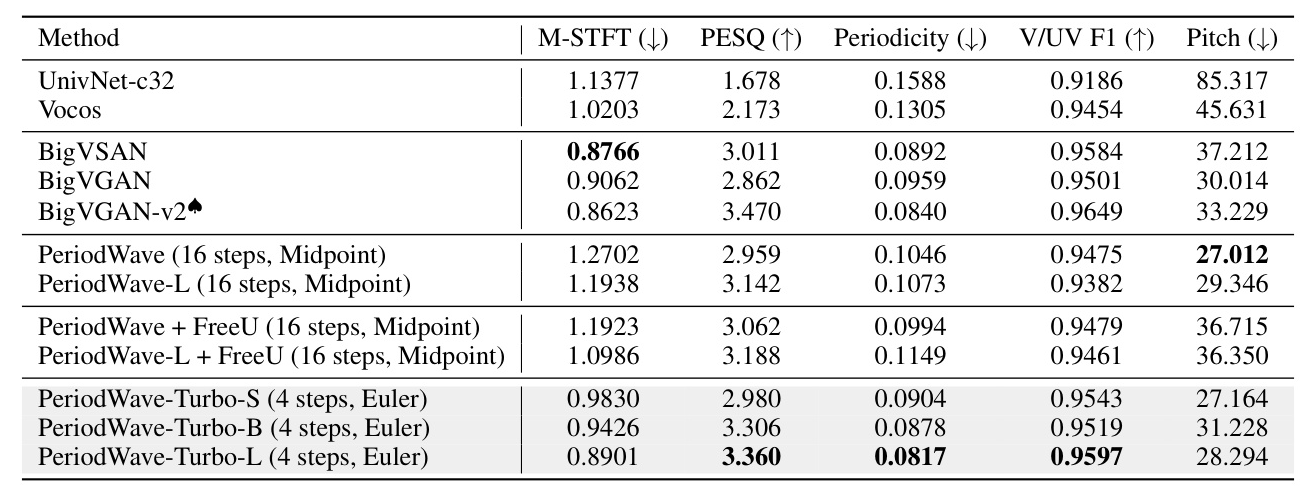

LibriTTS: Multi-speaker Dataset at 24,000 Hz

PeriodWave-Turbo reaches a PESQ score of 4.454 on the LibriTTS benchmark. The results show the robustness of the model structure, with better performance than larger GAN-based models.

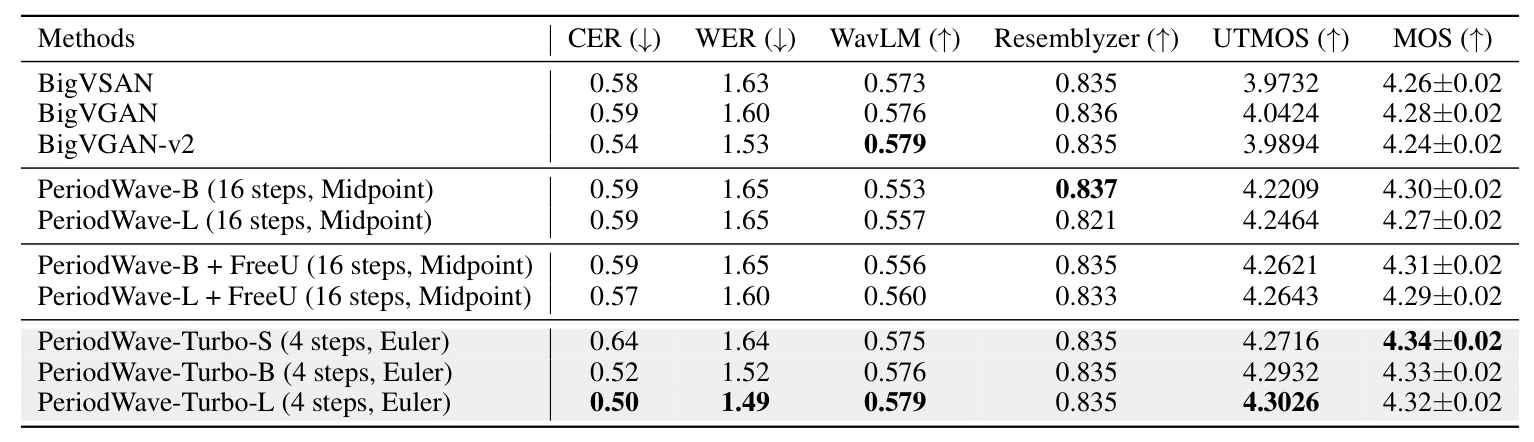

Subjective Evaluation

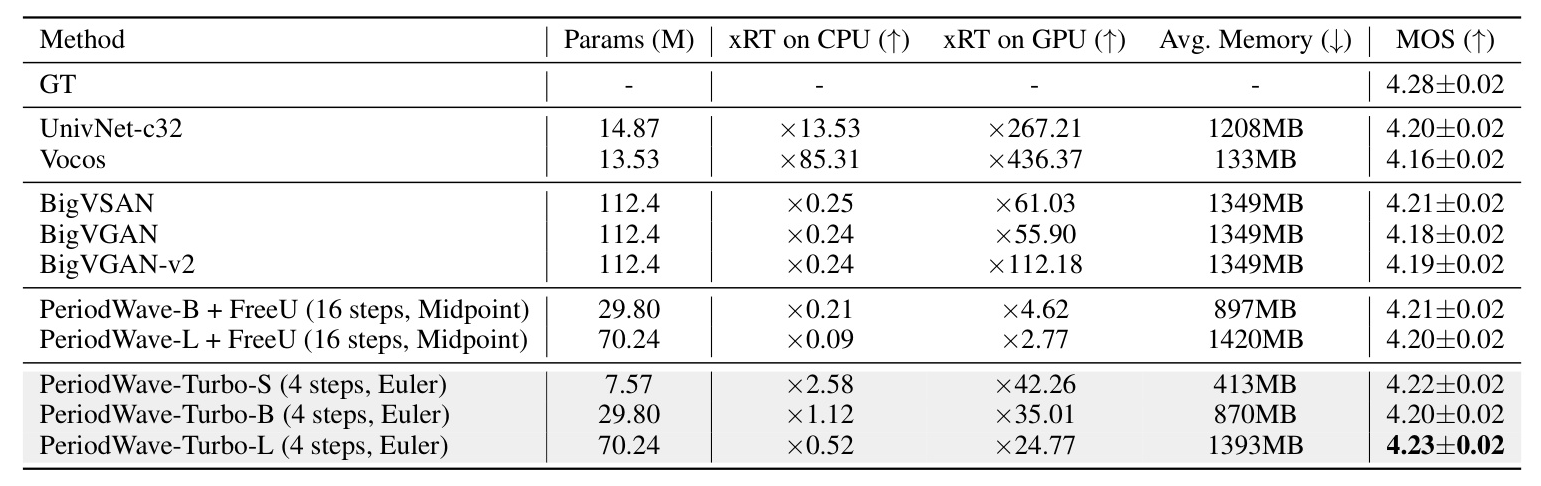

PeriodWave-Turbo demonstrates better performance than previous models in subjective evaluation, including large-scale GAN-based models and teacher models.

Inference Speed and Memory Usage

PeriodWave-Turbo significantly accelerates inference speed compared to teacher models, with lower VRAM usage.

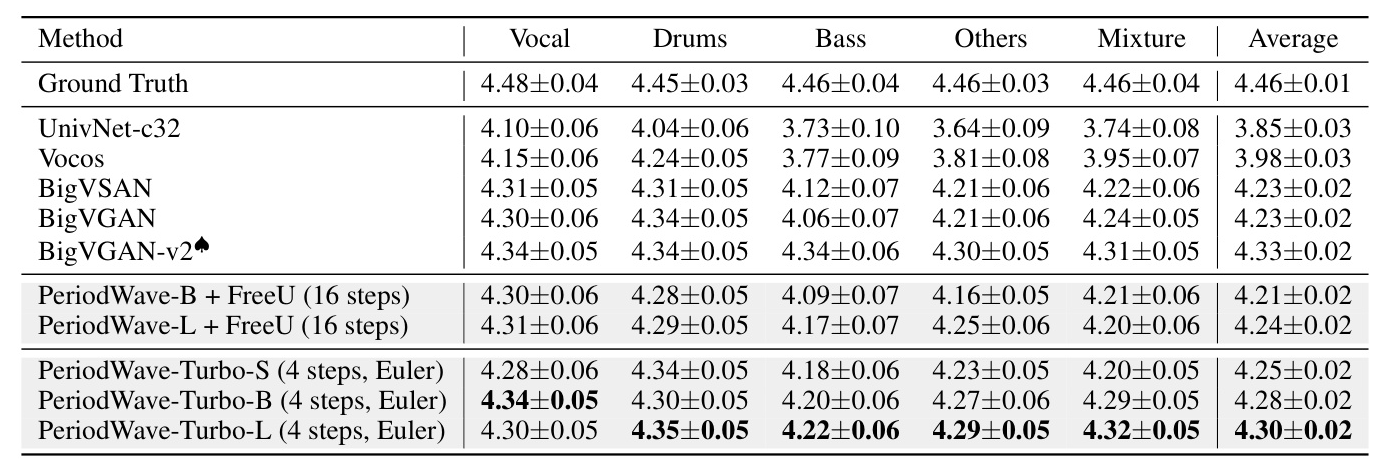

MUSDB18-HQ: Multi-track Music Audio Dataset for Out-Of-Distribution Robustness

PeriodWave-Turbo shows robustness on out-of-distribution samples, performing better on most objective metrics and achieving higher similarity MOS for each instrument.

Zero-shot TTS Results on LibriTTS dataset

PeriodWave-Turbo demonstrates better speech accuracy in terms of CER and WER, with better audio quality and naturalness in two-stage TTS scenarios.

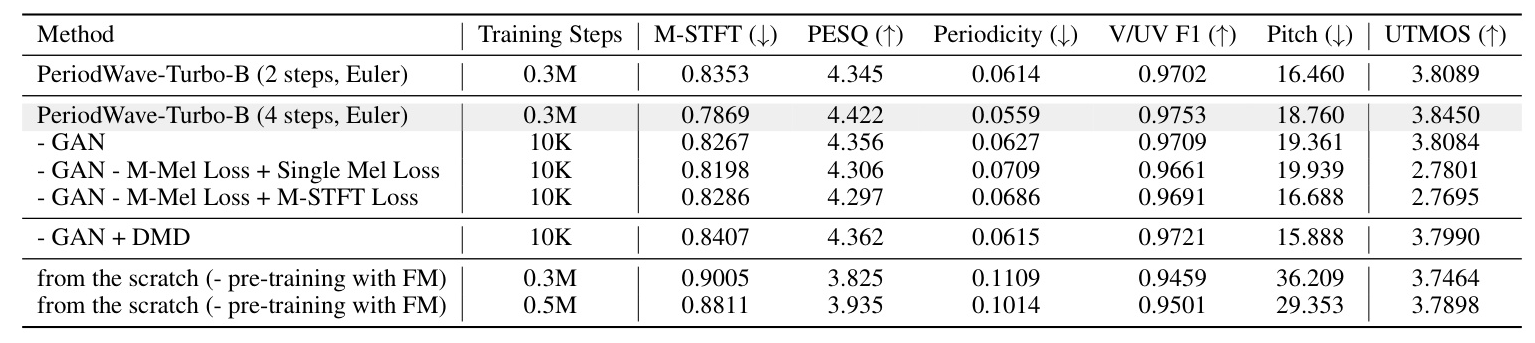

Ablation Study

The ablation study compares different reconstruction losses, adversarial feedback, and distillation methods. The results highlight the importance of multi-scale Mel-spectrogram loss and adversarial feedback for stable training and high-quality waveform generation.

Conclusion

PeriodWave-Turbo, a novel ODE-based few-step waveform generator, successfully accelerates CFM-based waveform generation using adversarial flow matching optimization. The model achieves state-of-the-art performance across objective and subjective metrics, with superior robustness in out-of-distribution and two-stage TTS scenarios. Future work will focus on further optimizing inference speed and adapting the model for end-to-end text-to-speech and text-to-audio generation tasks.

Code:

https://github.com/sh-lee-prml/periodwave