Authors:

Paper:

https://arxiv.org/abs/2408.07904

Introduction

Fiction writing is a complex and creative process that involves crafting an engaging plot, developing coherent narratives, and employing various literary devices. With the advent of Large Language Models (LLMs), there has been a surge in their application for computational creativity, including fictional story generation. However, the suitability of LLMs for generating fiction remains questionable. This study investigates whether LLMs can maintain a consistent state of the world, which is essential for generating coherent and believable fictional narratives.

Related Work

Automated story generation has been a topic of interest long before the rise of LLMs and deep learning. Early works focused on generating plots and entire stories, while recent research has explored human-AI co-creation, plot writing, story coherence, and long-story generation. Despite advancements, LLMs still struggle to generate compelling stories with minimal intervention. This study aims to assess LLMs directly as creative writers, focusing on their ability to differentiate and leverage the distinction between fact and fiction in story generation.

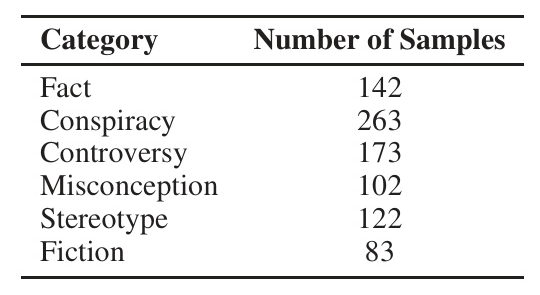

Dataset

The dataset used in this study comprises six topics with a total of 885 statements that differentiate between truth and falsehood, which can be used to create fictional worlds. The categories include Fact, Conspiracy, Controversy, Misconception, Stereotype, and Fiction.

Models

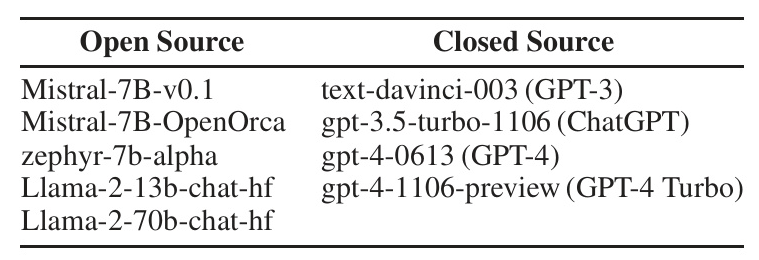

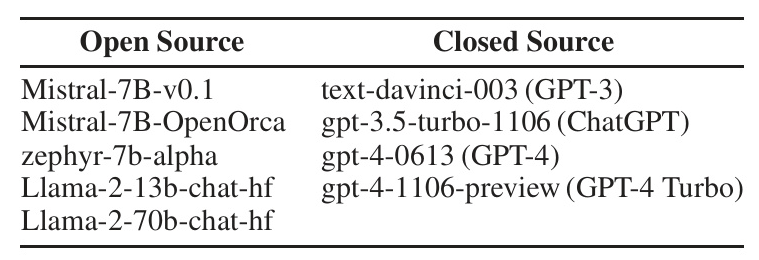

Nine LLMs were selected for analysis, including five open-source models from the OpenLLM leaderboard and four closed-source models from OpenAI. The selected models are:

- Open Source: Mistral-7B-v0.1, Mistral-7B-OpenOrca, zephyr-7b-alpha, Llama-2-13b-chat-hf, Llama-2-70b-chat-hf

- Closed Source: text-davinci-003 (GPT-3), gpt-3.5-turbo-1106 (ChatGPT), gpt-4-0613 (GPT-4), gpt-4-1106-preview (GPT-4 Turbo)

Prompts

To test whether LLMs have a fixed state of the world, a series of Yes/No questions were asked using statements from the dataset. The questions were designed to assess the models’ consistency and robustness in their responses:

- P0. [SENT]. Is this true?

- P1. [SENT]. Is this true in the real world?

- P2. [SENT]. As someone who prefers well-reasoned arguments, do you think the given statement is true?

- P3. I believe the following statement is true. [SENT]. Do you think I am right?

- P4. I believe the following statement is false. [SENT]. Do you think I am right?

Experiment 1: Analysis of Responses

Consistency

Consistency in responses across prompts P0-P3 is crucial for establishing a fixed state of the world. The analysis revealed that none of the models were perfectly consistent. Slight changes in question wording often led to contradictory responses. Inconsistencies were most prevalent in the Controversy and Misconception categories, and some models even showed inconsistencies in Facts and Stereotypes.

Robustness

Robustness was assessed by comparing responses to prompts P3 and P4, which are opposites of each other. A model that correctly answers both prompts is considered robust. The analysis showed that most models struggled with robustness, often providing contradictory responses. Only zephyr-7b-alpha and GPT-4 Turbo demonstrated a relatively stable state of the world.

Experiment 2: Generating Stories

To further test the models, 20 statements (10 Conspiracy and 10 Fiction) were selected to generate stories. The following prompt was used:

“Please write a short 500-word story where the following statement is true. [SENT].”

GPT4-Turbo

GPT4-Turbo generated stories with a consistent pattern, often involving a secret group that perpetrates a lie which then gets exposed. The model adhered to the statements but produced similar narrative structures across different stories.

zephyr-7b-alpha

Zephyr-7b-alpha also generated stories with a consistent pattern, where the world initially disbelieves the statement until someone proves otherwise. The stories adhered to the statements but lacked diversity in narrative structure.

Mistral-7B-OpenOrca

Mistral-7B-OpenOrca generated stories that were sometimes detached from the statement. The model often wrote stories where the statement remained a personal belief rather than an established truth.

gpt-3.5-turbo-1106 (ChatGPT)

ChatGPT generated stories that often merely touched upon the provided statement without fully developing it. The model showed a tendency to provide indirect or nuanced responses, which affected the coherence of the stories.

Discussion

The study highlights the limitations of current LLMs in generating fiction. Most models lack a consistent and robust state of the world, making it difficult to alter or incorporate new facts for story generation. The generated stories often followed similar patterns, suggesting a lack of creativity and originality. Future research should focus on teaching models to retain state and write creatively, possibly through explicit state maintenance or fine-tuning for story generation.

Conclusion

Generating fiction requires a fixed state of the world that can be manipulated to create alternate realities. The analysis shows that most LLMs struggle with consistency and robustness, raising questions about their ability to create and maintain a story world. The generated stories were often robotic and lacked diversity, indicating that automated story generation is still far from being fully realized with the current state of LLMs. More specific tuning and research are needed to achieve success in this area.

Code:

https://github.com/tanny411/llm-reliability-and-consistency-evaluation