Authors:

Daniele Rege Cambrin, Eleonora Poeta, Eliana Pastor, Tania Cerquitelli, Elena Baralis, Paolo Garza

Paper:

https://arxiv.org/abs/2408.07040

Introduction

In recent years, the integration of remote sensing and deep neural networks has significantly advanced agricultural management, environmental monitoring, and various earth-observation tasks. One critical application is the segmentation of crop fields, which is essential for optimizing agricultural productivity, assessing crop health, and planning sustainable farming practices. Accurate segmentation enables precise calculations of area coverage, assessment of crop types, and monitoring of agronomic factors such as plant health and soil conditions. However, the complexity of deep learning models often makes them difficult to interpret, posing challenges in understanding their decision-making processes.

Kolmogorov-Arnold Networks (KANs), proposed only recently, are a promising alternative to conventional Multi-Layer Perceptrons. This paper explores the integration of KAN layers into the U-Net architecture (U-KAN) for segmenting crop fields in Sentinel-2 and Sentinel-1 satellite images. The study evaluates both the performance and the explainability of these networks. U-KAN achieves a 2% improvement in Intersection-Over-Union (IoU) over the traditional U-Net model while requiring fewer GFLOPs. In addition, gradient-based explanation techniques show that U-KAN’s predictions are highly plausible and that the network focuses on the boundaries of cultivated areas rather than on the areas themselves.

Related Work

Remote Sensing

Remote sensing technologies have been extensively applied in agriculture to enhance crop monitoring, management, and productivity. Early studies utilized satellite imagery to assess crop health and estimate yields. With advancements in sensor technology and data processing techniques, the resolution and accuracy of remote sensing data have significantly improved, enabling more detailed analysis of agricultural landscapes. The introduction of Convolutional Neural Networks (CNNs) and the U-Net architecture has further enhanced crop field segmentation.

Explainable AI

Explainable artificial intelligence (XAI) aims to make machine learning models interpretable and understandable to humans. In recent years, there has been significant interest in applying XAI techniques to Earth observation tasks. Solutions in this domain follow the standard categorization of XAI approaches into interpretable-by-design models and post-hoc explainability methods. Saliency maps are among the most widely adopted post-hoc methods for visualizing which parts of an input image influence the model’s prediction. The need to understand how remote sensing models reach their decisions has motivated work applying these methods to the segmentation of satellite imagery and agricultural fields.

Methodology

Problem Statement

This work addresses the crop field segmentation problem using multispectral (Sentinel-2) or radar (Sentinel-1) satellite images. The objective is to automatically produce a binary mask indicating the cultivated areas in a satellite image. From an XAI perspective, the goal is to produce a saliency map highlighting the regions of the image that are important for the model’s prediction.

Models

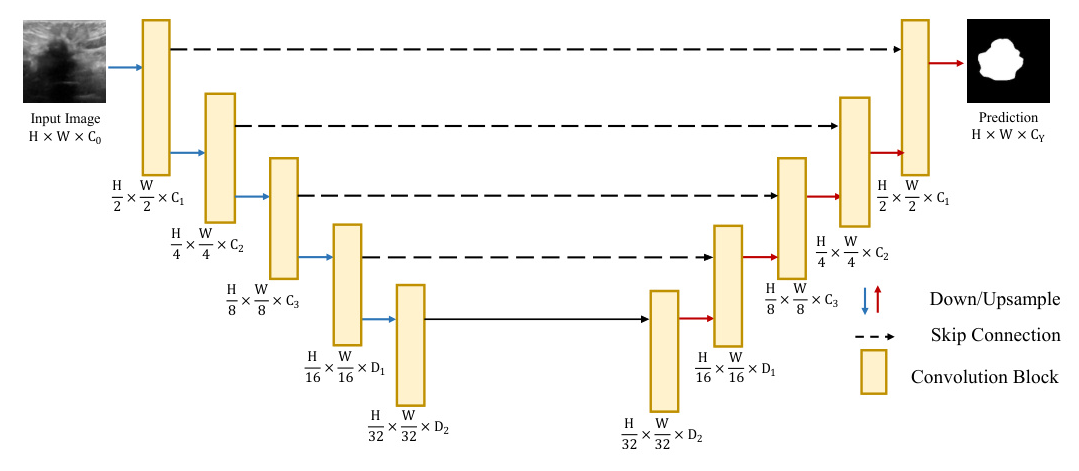

U-Net

U-Net is a convolutional neural network architecture originally designed for biomedical image segmentation. Its U-shaped structure consists of a contracting path that captures context and a symmetric expanding path that enables precise localization. Skip connections pass encoder features to the corresponding decoder stages. This design allows U-Net to learn effectively from relatively few training images and to produce high-quality segmentations.
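A short shape trace can make the contracting and expanding structure concrete. The sketch below follows a 256 × 256 input (the resolution used later in the experiments) through a U-Net-style network. The depth of four levels, the base width of 64 channels, and "same" padding are assumptions taken from a common U-Net configuration. They are not settings reported for this study.

```python
def unet_shapes(size=256, base_channels=64, depth=4):
    """Trace (channels, height, width) through a U-Net-style encoder/decoder.

    Assumes 'same'-padded convolutions (spatial size unchanged inside a block),
    2x2 max-pooling on the way down and 2x upsampling on the way up.
    """
    encoder = []
    channels, side = base_channels, size
    for _ in range(depth):
        encoder.append((channels, side, side))  # block output, kept as a skip connection
        channels, side = channels * 2, side // 2  # pool: halve resolution, double width
    bottleneck = (channels, side, side)
    decoder = []
    for skip_channels, skip_side, _ in reversed(encoder):
        side *= 2  # upsample back to the resolution of the matching skip connection
        assert side == skip_side, "skip connection resolution must match"
        # concatenation with the skip doubles the channels; the block's convolutions
        # reduce them back to skip_channels
        decoder.append((skip_channels, side, side))
    return encoder, bottleneck, decoder


enc, mid, dec = unet_shapes()
print(enc)  # [(64, 256, 256), (128, 128, 128), (256, 64, 64), (512, 32, 32)]
print(mid)  # (1024, 16, 16)
print(dec)  # [(512, 32, 32), (256, 64, 64), (128, 128, 128), (64, 256, 256)]
```

The decoder mirrors the encoder level by level. This symmetry is what lets each skip connection be concatenated at a matching resolution.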

KAN

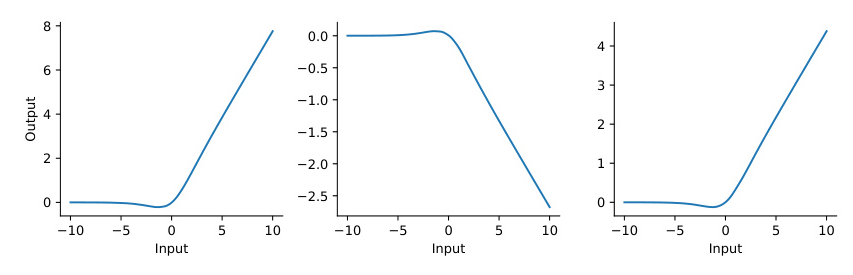

Kolmogorov-Arnold Networks (KANs) are inspired by the Kolmogorov-Arnold representation theorem. The theorem states that every continuous multivariate function on a bounded domain can be represented as a finite superposition of continuous functions of a single variable combined through addition. Traditional Multi-Layer Perceptrons (MLPs) apply fixed activation functions on nodes. KANs instead place learnable activation functions on edges, which can make the learning of complex relations more transparent and efficient.
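Written out, the theorem and the layer it motivates take the following form. The notation, including the SiLU-plus-B-spline parameterization of each edge function, follows the original KAN paper by Liu et al. It is not specific to this study, so read it as background.

```latex
% Kolmogorov-Arnold representation: any continuous f : [0,1]^n -> R can be written as
f(x_1, \dots, x_n) = \sum_{q=1}^{2n+1} \Phi_q\!\left( \sum_{p=1}^{n} \phi_{q,p}(x_p) \right)

% MLP layer: fixed nonlinearity \sigma on nodes, learnable linear weights
\mathbf{x}_{l+1} = \sigma\left( W_l \mathbf{x}_l + \mathbf{b}_l \right)

% KAN layer: one learnable univariate function per edge (i -> j), summed at each node
x_{l+1,j} = \sum_{i=1}^{n_l} \phi_{l,j,i}(x_{l,i}), \qquad j = 1, \dots, n_{l+1}

% Edge function used by Liu et al. (B_m: B-spline basis functions, c_m: learnable coefficients)
\phi(x) = w_b \, \mathrm{silu}(x) + w_s \sum_{m} c_m B_m(x)
```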

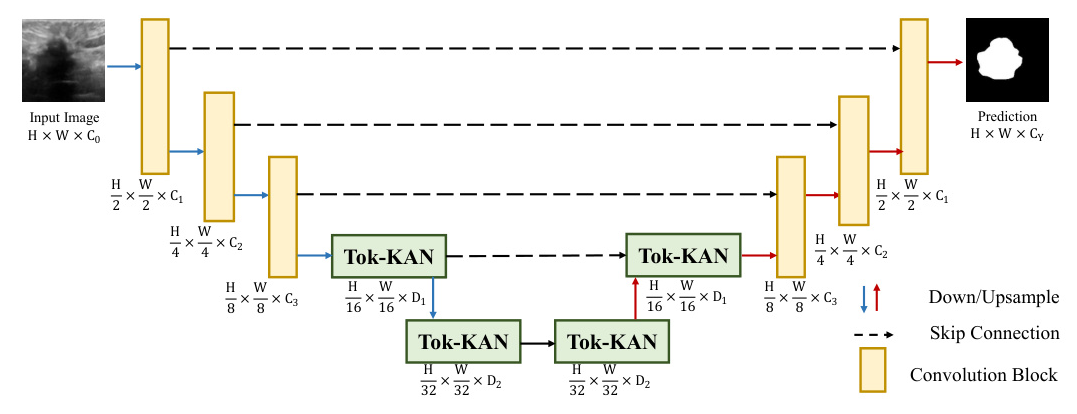

U-KAN

U-KAN integrates KAN layers into the U-Net architecture. The deepest stages of the U-Net are implemented with KAN blocks, each consisting of a tokenization layer, a KAN layer, a downsampling layer, and a normalization layer. This modification allows the network to learn custom activation functions. It can potentially increase the representational power of the embeddings while reducing the computational cost.
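The block structure described above can be sketched in NumPy. This is a simplified illustration that rests on several assumptions:

- Each pixel becomes one token.
- The KAN layer uses Gaussian radial basis functions in place of the B-splines of the original KAN formulation, a swap made by some KAN variants.
- Downsampling is a 2 × 2 average pooling.
- Normalization is a parameter-free layer norm.

The names `RBFKANLayer` and `tokenized_kan_block` are hypothetical, and the actual U-KAN block differs in its details.

```python
import numpy as np


def silu(x):
    return x / (1.0 + np.exp(-x))


class RBFKANLayer:
    """Hypothetical KAN layer with one learnable univariate function per (input, output) edge.

    Edge function: phi(x) = w_base * silu(x) + sum_g coef_g * exp(-((x - mu_g) / width)^2).
    The Gaussian RBF basis stands in for the B-splines of the original KAN formulation.
    Parameters are randomly initialised here; in practice they would be trained.
    """

    def __init__(self, in_dim, out_dim, num_centers=8, x_range=(-2.0, 2.0), seed=0):
        rng = np.random.default_rng(seed)
        self.centers = np.linspace(x_range[0], x_range[1], num_centers)
        self.width = self.centers[1] - self.centers[0]
        self.w_base = rng.normal(0.0, 1.0 / np.sqrt(in_dim), size=(out_dim, in_dim))
        self.coef = rng.normal(0.0, 0.1, size=(out_dim, in_dim, num_centers))

    def __call__(self, x):
        # x: (num_tokens, in_dim) -> (num_tokens, out_dim)
        basis = np.exp(-(((x[..., None] - self.centers) / self.width) ** 2))  # (N, in, G)
        spline_part = np.einsum("nig,oig->no", basis, self.coef)
        base_part = silu(x) @ self.w_base.T
        return base_part + spline_part  # output j sums phi_{j,i}(x_i) over inputs i


def layer_norm(tokens, eps=1e-5):
    """Parameter-free layer normalisation over the channel dimension of each token."""
    mean = tokens.mean(axis=-1, keepdims=True)
    var = tokens.var(axis=-1, keepdims=True)
    return (tokens - mean) / np.sqrt(var + eps)


def tokenized_kan_block(feature_map, kan):
    """Tokenize -> KAN layer -> 2x2 downsampling -> normalisation, for a (C, H, W) map with even H, W."""
    c, h, w = feature_map.shape
    tokens = feature_map.reshape(c, h * w).T  # tokenization: one C-dim token per pixel
    tokens = kan(tokens)  # learnable edge functions applied to every token
    out_c = tokens.shape[1]
    grid = tokens.T.reshape(out_c, h, w)
    grid = grid.reshape(out_c, h // 2, 2, w // 2, 2).mean(axis=(2, 4))  # 2x2 average pooling
    tokens = layer_norm(grid.reshape(out_c, -1).T)  # normalise each downsampled token
    return tokens.T.reshape(out_c, h // 2, w // 2)


x = np.random.default_rng(1).normal(size=(32, 16, 16))
block_out = tokenized_kan_block(x, RBFKANLayer(in_dim=32, out_dim=64))
print(block_out.shape)  # (64, 8, 8)
```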

Experimental Setup

Dataset

The South Africa Crop Type dataset, containing images from Sentinel-2 and Sentinel-1, was used for this study. The dataset includes small and irregular-shaped crop fields, providing higher resolution imagery than other datasets covering the area. The annotations contain the mask of the areas covered by a certain crop. The dataset was divided into training, validation, and test sets.

Experimental Setting

Crop Field Segmentation

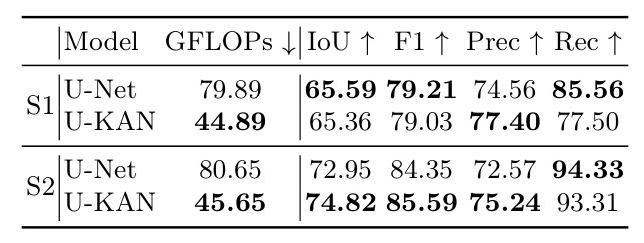

The images were resized to 256 × 256 pixels. The networks were trained with the AdamW optimizer and a learning rate scheduler, using random horizontal and vertical flips as data augmentation. The loss function was the generalized Dice loss. The networks were evaluated using Intersection-Over-Union (IoU), F1-Score (F1), Precision (Prec), and Recall (Rec) for the positive class, with GFLOPs as a measure of computational efficiency.
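The training objective, evaluation metrics, and augmentation above can be sketched in NumPy. The generalized Dice loss follows the formulation of Sudre et al. (2017), which treats foreground and background as two classes weighted by the inverse square of their ground-truth areas. Whether this study used exactly this variant, and which smoothing constant, is an assumption. The helper names are hypothetical and are not the authors' code.

```python
import numpy as np


def generalized_dice_loss(probs, target, eps=1e-6):
    """Generalized Dice loss (Sudre et al., 2017) for a binary segmentation mask.

    probs:  predicted foreground probabilities, shape (H, W), values in [0, 1]
    target: ground-truth mask, shape (H, W), values in {0, 1}
    Background and foreground are both treated as classes, each weighted by the
    inverse square of its ground-truth area (the weight becomes very large if a
    class is absent; real implementations often clamp it).
    """
    p = np.stack([1.0 - probs, probs]).reshape(2, -1)  # (classes, pixels)
    r = np.stack([1.0 - target, target]).reshape(2, -1).astype(float)
    w = 1.0 / (r.sum(axis=1) ** 2 + eps)  # per-class weights
    intersection = (w * (r * p).sum(axis=1)).sum()
    union = (w * (r + p).sum(axis=1)).sum()
    return 1.0 - 2.0 * intersection / (union + eps)


def binary_metrics(pred_mask, target):
    """IoU, F1, precision and recall for the positive (cultivated) class."""
    pred, gt = pred_mask.astype(bool), target.astype(bool)
    tp = np.logical_and(pred, gt).sum()
    fp = np.logical_and(pred, ~gt).sum()
    fn = np.logical_and(~pred, gt).sum()
    return {
        "IoU": tp / max(tp + fp + fn, 1),
        "F1": 2 * tp / max(2 * tp + fp + fn, 1),
        "Prec": tp / max(tp + fp, 1),
        "Rec": tp / max(tp + fn, 1),
    }


def random_flip(image, mask, rng):
    """Random horizontal and vertical flips applied identically to image (C, H, W) and mask (H, W)."""
    if rng.random() < 0.5:
        image, mask = image[:, :, ::-1], mask[:, ::-1]  # horizontal flip
    if rng.random() < 0.5:
        image, mask = image[:, ::-1, :], mask[::-1, :]  # vertical flip
    return image, mask


target = np.zeros((4, 4), dtype=int)
target[:, :2] = 1  # 8 cultivated pixels
pred = np.zeros((4, 4), dtype=int)
pred[:, :3] = 1  # 12 predicted pixels: 8 true positives, 4 false positives
m = binary_metrics(pred, target)  # IoU = 8/12, F1 = 0.8, Prec = 8/12, Rec = 1.0
loss = generalized_dice_loss(target.astype(float), target)  # perfect prediction: loss close to 0
```

The flips must be applied to the image and the mask together. Otherwise the labels would no longer line up with the pixels they describe.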

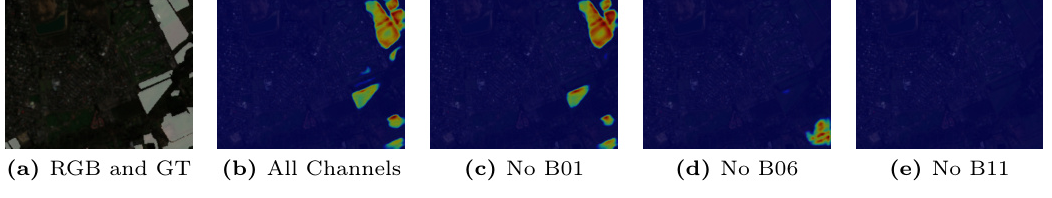

Explainability

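Grad-CAM was used as the visual post-hoc explanation method. For each image, a saliency map was generated to quantify the influence of each pixel on the prediction of the positive class. The explanations were assessed on three criteria:

- Plausibility: agreement between the saliency map and the ground-truth mask.
- Sufficiency: whether the most important pixels alone preserve the prediction.
- Per-channel relevance: the contribution of each input channel.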
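A minimal sketch of the Grad-CAM computation and a plausibility check follows. It assumes that the activations of the chosen layer, and the gradients of the positive-class score with respect to them, have already been obtained from the deep learning framework. The score is assumed to be the sum of positive-class logits, as in Seg-Grad-CAM-style adaptations to segmentation. The 0.5 binarization threshold and the helper names are hypothetical. The toy 1 × 1 linear head exists only so that the gradients can be written down analytically.

```python
import numpy as np


def grad_cam(activations, gradients, out_size):
    """Grad-CAM saliency map, upsampled to out_size x out_size and scaled to [0, 1].

    activations, gradients: arrays of shape (K, h, w) for one image, where gradients
    holds d(score)/d(activations). For segmentation the score is assumed to be the sum of
    positive-class logits; obtaining the gradients is left to the deep learning framework.
    """
    weights = gradients.mean(axis=(1, 2))  # alpha_k: spatially averaged gradients
    cam = np.maximum(np.tensordot(weights, activations, axes=1), 0.0)  # ReLU(sum_k alpha_k A_k)
    # nearest-neighbour upsampling (assumes out_size is a multiple of h and w)
    cam = np.kron(cam, np.ones((out_size // cam.shape[0], out_size // cam.shape[1])))
    peak = cam.max()
    return cam / peak if peak > 0 else cam


def plausibility(saliency, target, threshold=0.5):
    """IoU and precision between the binarized saliency map and the ground-truth mask."""
    salient, gt = saliency >= threshold, target.astype(bool)
    tp = np.logical_and(salient, gt).sum()
    iou = tp / max(np.logical_or(salient, gt).sum(), 1)
    precision = tp / max(salient.sum(), 1)
    return iou, precision


rng = np.random.default_rng(0)
acts = rng.random((4, 8, 8))  # toy activations of the explained layer
head = np.array([1.0, -0.5, 0.2, 0.0])  # toy 1x1 linear head producing positive-class logits
# score = sum_{k,i,j} head[k] * acts[k, i, j]  =>  d(score)/d(acts[k, i, j]) = head[k]
grads = np.broadcast_to(head[:, None, None], acts.shape)
saliency = grad_cam(acts, grads, out_size=32)
target = np.zeros((32, 32), dtype=int)
target[:16] = 1  # hypothetical ground-truth mask: top half cultivated
iou, prec = plausibility(saliency, target)
```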

Experimental Results

Task Performances

U-KAN achieved the best IoU on Sentinel-2 data, suggesting that it adapts well to complex relations in the data. On Sentinel-1 data, U-KAN performed comparably to U-Net. U-KAN was also more computationally efficient, requiring half the GFLOPs of U-Net.

Analysis of Explanations

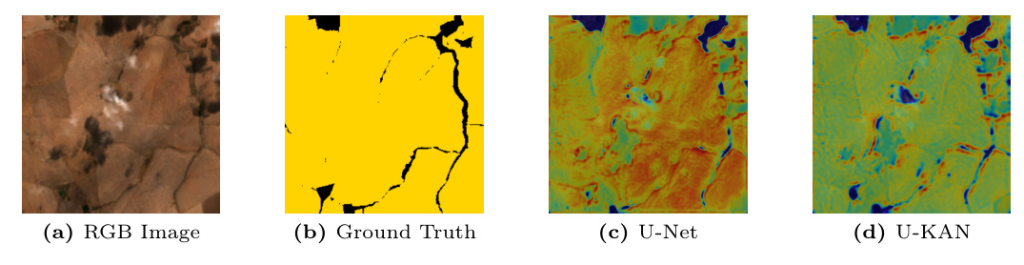

Qualitative Evaluation

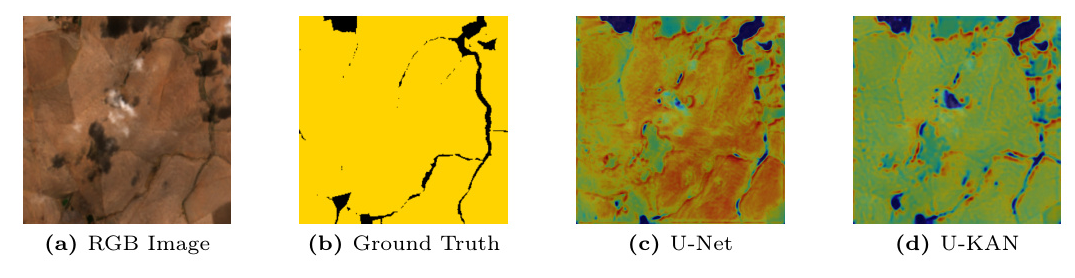

Saliency maps generated by U-Net and U-KAN models revealed differences in their focus areas. U-Net distributed its focus over larger regions, while U-KAN focused predominantly on the boundaries of cultivated areas, suggesting its potential use in boundary delimitation and mapping tasks.

Quantitative Evaluation

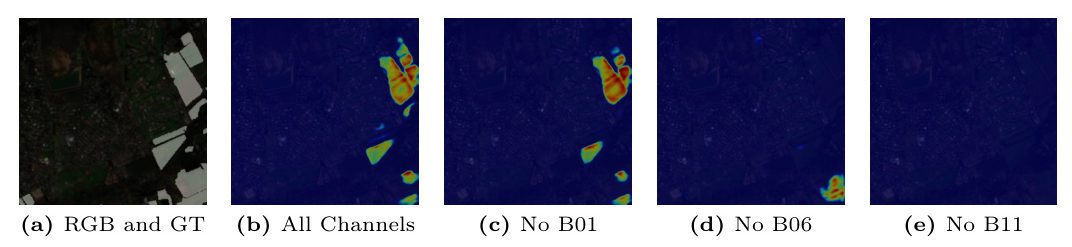

In the plausibility evaluation, U-KAN’s saliency maps achieved higher IoU and Precision against the ground-truth masks, indicating explanations that align more closely with the annotated fields. In terms of sufficiency, both networks showed an improvement in Precision when less important pixels were excluded. The per-channel relevance analysis revealed that certain channels were critical for the segmentation task. These include the Sentinel-2 bands B05 (red edge), B8A (narrow near-infrared), and B11 (shortwave infrared).
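The sufficiency test and a per-channel relevance score can be sketched as below under stated assumptions. Sufficiency is measured as positive-class precision after zeroing every pixel outside the top fraction of the saliency map. Per-channel relevance uses the mean absolute input-times-gradient of each channel, one simple attribution proxy that is not necessarily the measure used in the study. `toy_model`, the channel list, and the gradient weights are hypothetical stand-ins. They do not reproduce the reported results.

```python
import numpy as np


def keep_most_salient(image, saliency, keep_fraction):
    """Zero every pixel (in all channels) outside the top keep_fraction of the saliency map."""
    threshold = np.quantile(saliency, 1.0 - keep_fraction)
    keep = saliency >= threshold
    return image * keep[None, :, :]


def sufficiency_curve(model, image, saliency, target, fractions):
    """Positive-class precision of model predictions when only the most salient pixels are kept."""
    gt = target.astype(bool)
    scores = []
    for frac in fractions:
        pred = model(keep_most_salient(image, saliency, frac)).astype(bool)
        tp = np.logical_and(pred, gt).sum()
        scores.append(tp / max(pred.sum(), 1))
    return scores


def channel_relevance(image, input_grad, channel_names):
    """Mean |input x gradient| per channel, normalised to sum to 1 (one simple attribution proxy)."""
    per_channel = np.abs(image * input_grad).mean(axis=(1, 2))
    return dict(zip(channel_names, per_channel / per_channel.sum()))


def toy_model(image):
    """Hypothetical stand-in for a trained network: cultivated where channel 0 exceeds 0.5."""
    return image[0] > 0.5


rng = np.random.default_rng(0)
image = rng.random((4, 16, 16))
target = image[0] > 0.5
saliency = image[0]  # toy saliency: brighter channel-0 pixels count as more important
scores = sufficiency_curve(toy_model, image, saliency, target, [0.1, 0.5, 1.0])

channels = ["B02", "B05", "B8A", "B11"]  # illustrative subset of Sentinel-2 bands
weights = np.array([0.1, 1.0, 0.5, 2.0])  # toy gradients of a linear score, one weight per channel
relevance = channel_relevance(image, np.broadcast_to(weights[:, None, None], image.shape), channels)
```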

Analysis of the Trained Models

KANs offer interpretability through the visualization of learnable activation functions. The learned activations for an element of the embeddings in a decoder layer showed substantial differences from the base function, indicating the network’s ability to represent more complex relations.
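To show what such a learned activation looks like numerically, the sketch below evaluates a single KAN edge function in the form used by Liu et al. It is a SiLU base term plus a cubic B-spline term on a uniform grid, with 5 intervals on [-1, 1] extended by the spline order on each side. The spline coefficients are random stand-ins for trained values, and `kan_activation` is a hypothetical helper. With all coefficients set to zero the function reduces to the base function, so the spline term measures how far the learned function departs from it.

```python
import numpy as np


def silu(x):
    return x / (1.0 + np.exp(-x))


def bspline_basis(x, knots, order):
    """All B-spline basis functions of the given order at points x (Cox-de Boor recursion).

    Uses half-open knot intervals, so evaluate inside [knots[order], knots[-order - 1]).
    Returns an array of shape (len(x), len(knots) - order - 1).
    """
    x = np.asarray(x, dtype=float)[:, None]
    basis = ((x >= knots[:-1]) & (x < knots[1:])).astype(float)  # order 0: interval indicators
    for k in range(1, order + 1):
        left = (x - knots[: -(k + 1)]) / (knots[k:-1] - knots[: -(k + 1)]) * basis[:, :-1]
        right = (knots[k + 1:] - x) / (knots[k + 1:] - knots[1:-k]) * basis[:, 1:]
        basis = left + right
    return basis


def kan_activation(x, coef, w_base=1.0, w_spline=1.0, grid_size=5, order=3, x_range=(-1.0, 1.0)):
    """Edge function phi(x) = w_base * silu(x) + w_spline * sum_m coef_m * B_m(x).

    coef must have grid_size + order entries (one per B-spline basis function).
    """
    lo, hi = x_range
    h = (hi - lo) / grid_size
    # uniform grid extended by `order` intervals on each side
    knots = np.linspace(lo - order * h, hi + order * h, grid_size + 2 * order + 1)
    return w_base * silu(x) + w_spline * (bspline_basis(x, knots, order) @ coef)


x = np.linspace(-0.99, 0.99, 200)
coef = np.random.default_rng(0).normal(0.0, 0.5, size=5 + 3)  # random stand-in for trained coefficients
phi = kan_activation(x, coef)
deviation = np.max(np.abs(phi - silu(x)))  # how far the edge function departs from its SiLU base
print(f"max deviation from the SiLU base: {deviation:.3f}")
```

Plotting `phi` against `silu(x)`, for example with Matplotlib, gives the kind of comparison the analysis describes.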

Conclusions

This study demonstrated that U-KAN can outperform U-Net in crop field segmentation. It achieved higher IoU on Sentinel-2 data and comparable results on Sentinel-1 data, at half the computational cost. The explainability analysis revealed that U-KAN’s focus on boundary details makes it well suited to tasks such as boundary delimitation and mapping. Additionally, the analysis identified the channels most critical to the segmentation task, suggesting that computational costs could be reduced further. Future work will focus on exploiting these insights to enhance performance and reduce computational costs.