Authors:

Yuyang Xue, Junyu Yan, Raman Dutt, Fasih Haider, Jingshuai Liu, Steven McDonagh, Sotirios A. Tsaftaris

Paper:

https://arxiv.org/abs/2408.06890

Achieving Fairness in Machine Learning with BMFT: A Detailed Exploration

Fairness is a central concern in machine learning, especially in sensitive domains such as medical diagnosis. The paper “BMFT: Achieving Fairness via Bias-based Weight Masking Fine-tuning” by Yuyang Xue et al. introduces a post-processing method that improves the fairness of pre-trained models without requiring access to the original training data. This blog post walks through the methodology, experiments, and results presented in the paper.

Introduction

Machine learning models often exhibit biases due to various factors such as human judgment, algorithmic predispositions, and representation bias. This can lead to unfair predictions, particularly when data from minority groups are underrepresented. Ensuring fairness in AI applications is essential to maintain trust and acceptance among users.

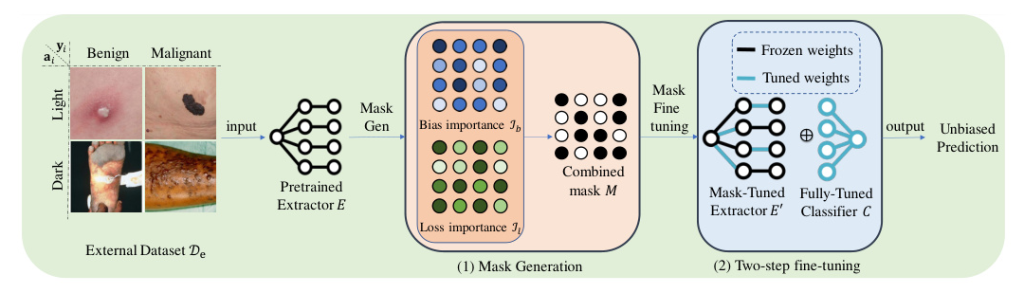

The paper proposes Bias-based Weight Masking Fine-Tuning (BMFT), a novel post-processing method that enhances model fairness with fewer epochs and without needing the original training data. BMFT identifies and fine-tunes weights contributing to biased predictions, followed by a reinitialization and fine-tuning of the classification layer to maintain discriminative performance.

Method

Preliminaries

BMFT operates on an external dataset $D_e$ consisting of images, labels, and sensitive attributes. The goal is to debias a pre-trained model $f_\theta$ with respect to fairness metrics such as Demographic Parity (DP) and Equalized Odds (EOdds) using significantly fewer epochs. A group-balanced subset $D_r$ is created from $D_e$ to ensure equal representation across attribute groups and label types.
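One way to build a group-balanced subset like $D_r$ is to bucket $D_e$ by every (sensitive attribute, label) combination and downsample each bucket to the size of the smallest one. The sketch below assumes each sample is an `(image_id, label, attribute)` tuple with integer label and attribute codes; the function name `group_balanced_subset` and the downsampling strategy are illustrative assumptions, not the paper's code.

```python
import random
from collections import defaultdict


def group_balanced_subset(samples, seed=0):
    """Return a subset with equal counts in every (attribute, label) cell.

    Assumption: `samples` is a list of (image_id, label, attribute) tuples.
    Cells that never occur in `samples` cannot be represented in the output.
    """
    cells = defaultdict(list)
    for sample in samples:
        _, label, attribute = sample
        cells[(attribute, label)].append(sample)

    # Downsample every cell to the size of the smallest observed cell.
    n = min(len(members) for members in cells.values())
    rng = random.Random(seed)  # fixed seed keeps the subset reproducible
    subset = []
    for key in sorted(cells):
        subset.extend(rng.sample(cells[key], n))
    return subset
```

Downsampling discards data from the larger cells; oversampling the smaller cells would be an alternative if $D_e$ is very small.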

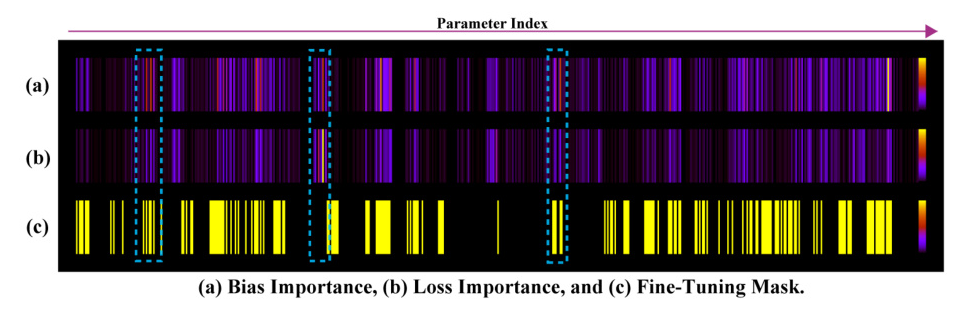

Bias-Importance-Based Mask Generation

BMFT selects which parameters to update by scoring each parameter's importance with respect to a bias measure and with respect to the classification loss. The top $K$ parameters that are most strongly associated with bias while contributing least to prediction are selected as the masked weights. Because the labels in the dataset are imbalanced, the weighted Binary Cross Entropy (WBCE) loss is used as the classification loss.
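The exact WBCE weighting is not given in this summary. A common choice, assumed here, scales every positive-class term by the ratio of negative to positive labels in the batch, so the rarer class contributes as much total loss as the common one. The function name `weighted_bce` is hypothetical.

```python
import math


def weighted_bce(probs, labels, eps=1e-7):
    """Weighted binary cross entropy averaged over the batch.

    Assumption: positives are weighted by (#negatives / #positives);
    negatives keep weight 1.
    """
    n_pos = sum(labels)
    n_neg = len(labels) - n_pos
    pos_weight = n_neg / n_pos if n_pos else 1.0
    total = 0.0
    for p, y in zip(probs, labels):
        p = min(max(p, eps), 1 - eps)  # clamp to avoid log(0)
        total += -(pos_weight * y * math.log(p) + (1 - y) * math.log(1 - p))
    return total / len(labels)
```

With one positive and three negatives, each positive term is weighted by 3, so a constant prediction of 0.5 costs the same on both classes.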

The importance of each parameter is quantified using the Fisher Information Matrix (FIM), which can be computed efficiently from first-order derivatives alone. The weight mask $M_i$ is generated from the ratio of bias importance $I_{i,b}$ to loss importance $I_{i,l}$, i.e. $I_{i,b} / I_{i,l}$.
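A minimal sketch of this mask generation, assuming the diagonal empirical Fisher approximation $I_i \approx \frac{1}{N}\sum_n (\partial \mathcal{L}_n / \partial \theta_i)^2$ computed separately from per-sample gradients of a bias loss and of the classification loss. The bias loss itself, the small `eps` stabiliser, and the names `fisher_diagonal` and `bias_mask` are assumptions for illustration; gradients are passed in as plain lists rather than taken from a real model.

```python
def fisher_diagonal(per_sample_grads):
    """Diagonal empirical Fisher: mean squared gradient for each parameter.

    `per_sample_grads` is a list of gradient vectors, one list of floats per sample.
    """
    n = len(per_sample_grads)
    dim = len(per_sample_grads[0])
    return [sum(g[i] ** 2 for g in per_sample_grads) / n for i in range(dim)]


def bias_mask(bias_grads, loss_grads, k, eps=1e-12):
    """Mark the k parameters with the largest bias-to-loss importance ratio.

    Returns a 0/1 list; 1 means the parameter is updated in the impair stage.
    """
    i_bias = fisher_diagonal(bias_grads)   # I_{i,b}
    i_loss = fisher_diagonal(loss_grads)   # I_{i,l}
    ratio = [b / (l + eps) for b, l in zip(i_bias, i_loss)]
    top_k = sorted(range(len(ratio)), key=lambda i: ratio[i], reverse=True)[:k]
    mask = [0] * len(ratio)
    for i in top_k:
        mask[i] = 1
    return mask
```

A high ratio picks out parameters whose gradients respond strongly to bias but weakly to the classification loss, which matches the selection criterion described above.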

Impair-Repair: Two-Step Fine-Tuning

BMFT employs a two-stage fine-tuning process:

1. Impair Stage: Fine-tune the selected masked parameters within the feature extractor using the group-balanced dataset $D_r$.

2. Repair Stage: Fine-tune the entire reinitialized classification layer $C(\cdot)$ using a combined loss function that includes both WBCE and fairness constraints.
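The two stages can be sketched with three small pieces: a masked gradient step for the impair stage, a fresh initialisation of the classifier weights, and a combined repair objective. This is a hedged illustration, not the paper's implementation: parameters are plain float lists, the Gaussian initialisation scale is arbitrary, and the fairness term is a stand-in demographic-parity gap (absolute difference in mean predicted score between two groups) weighted by a hypothetical `lam`.

```python
import random


def masked_sgd_step(params, grads, mask, lr):
    """Impair stage: only parameters with mask == 1 are updated."""
    return [p - lr * g * m for p, g, m in zip(params, grads, mask)]


def reinit_classifier(n_weights, seed=0, scale=0.01):
    """Repair stage starts from a freshly initialised classification layer.

    Assumption: small zero-mean Gaussian initialisation.
    """
    rng = random.Random(seed)
    return [rng.gauss(0.0, scale) for _ in range(n_weights)]


def repair_loss(wbce, probs, groups, lam=1.0):
    """Combined repair objective: WBCE + lam * |mean score(group 0) - mean score(group 1)|.

    The DP-style gap is an assumed stand-in for the paper's fairness constraint.
    """
    g0 = [p for p, g in zip(probs, groups) if g == 0]
    g1 = [p for p, g in zip(probs, groups) if g == 1]
    gap = abs(sum(g0) / len(g0) - sum(g1) / len(g1))
    return wbce + lam * gap
```

Multiplying the gradient by the 0/1 mask leaves unmasked feature-extractor weights untouched, which is how the impair stage restricts changes to the bias-associated parameters.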

This approach is designed to retain core features while adjusting bias-influenced weights, leading to improved fairness and predictive performance.

Experiments and Results

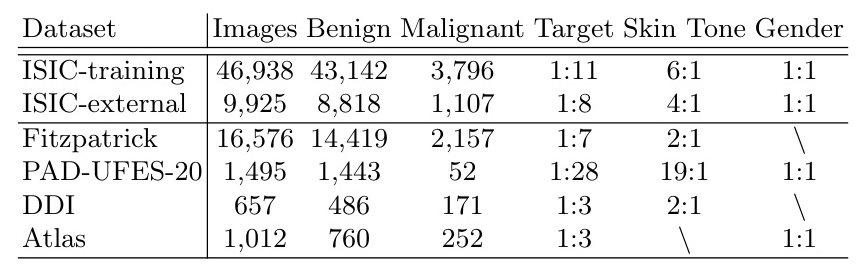

Dataset and Data-processing

The experiments utilize various dermatological datasets, including the ISIC Challenge Training Dataset, Fitzpatrick-17k, PAD-UFES-20, Interactive Atlas of Dermoscopy (Atlas), and DDI. These datasets provide diverse and comprehensive data for evaluating the model’s generalizability across different sensitive attributes like skin tone and gender.

Implementation

The experiments were conducted using PyTorch on NVIDIA A100 GPUs. Several variants of the ResNet architecture were used, with separate learning rates and batch sizes for the impair and repair stages. The performance metrics include accuracy (ACC), area under the receiver operating characteristic curve (AUC), and equalized odds (EOdds).
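For readers who want to reproduce the metrics, here is a small sketch of ACC, AUC, and an EOdds gap for a binary sensitive attribute. AUC is computed as the probability that a random positive outscores a random negative (ties count one half). The EOdds form used here, the mean of the absolute true-positive-rate and false-positive-rate gaps between two groups, is an assumption; the paper may report a different but related variant (for example, one minus this gap).

```python
def accuracy(preds, labels):
    """Fraction of hard predictions that match the labels."""
    return sum(p == y for p, y in zip(preds, labels)) / len(labels)


def auc(scores, labels):
    """ROC AUC via pairwise comparison of positive and negative scores."""
    pos = [s for s, y in zip(scores, labels) if y == 1]
    neg = [s for s, y in zip(scores, labels) if y == 0]
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


def _rates(preds, labels):
    """True- and false-positive rates; assumes both classes are present."""
    tp = sum(1 for p, y in zip(preds, labels) if p == 1 and y == 1)
    fp = sum(1 for p, y in zip(preds, labels) if p == 1 and y == 0)
    n_pos = sum(labels)
    n_neg = len(labels) - n_pos
    return tp / n_pos, fp / n_neg


def eodds_gap(preds, labels, groups):
    """Mean of |TPR gap| and |FPR gap| between groups 0 and 1 (lower is fairer)."""
    rates = {}
    for g in (0, 1):
        idx = [i for i, gi in enumerate(groups) if gi == g]
        rates[g] = _rates([preds[i] for i in idx], [labels[i] for i in idx])
    (tpr0, fpr0), (tpr1, fpr1) = rates[0], rates[1]
    return 0.5 * (abs(tpr0 - tpr1) + abs(fpr0 - fpr1))
```

The pairwise AUC is quadratic in the number of samples, which is fine for a sketch; a sort-based rank computation scales better on full test sets.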

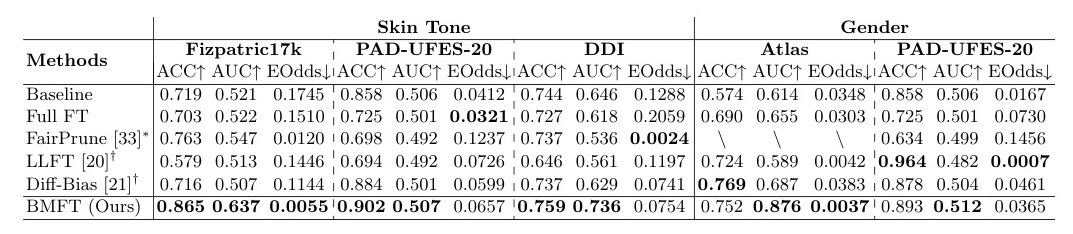

Results

BMFT was compared with several baseline and state-of-the-art (SOTA) models, including Full Fine-Tuning (Full FT), FairPrune, LLFT, and Diff-Bias. The results demonstrated that BMFT outperforms these methods in terms of both fairness and classification performance across various test datasets.

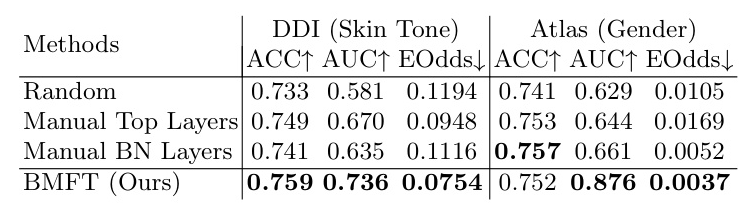

Mask Comparison

The effectiveness of the BMFT mask was compared with random masking, manual shallow convolutional (Top) masking, and batch normalization (BN) masking. BMFT outperformed these baselines, illustrating the utility of the proposed mask generation process.

Conclusion

BMFT presents a significant advancement in bias mitigation for discriminative models in dermatological disease diagnosis. By distinguishing core features from biased features, BMFT enhances fairness without sacrificing classification performance. The two-step impair-repair fine-tuning approach reduces bias within a small number of epochs, providing an efficient solution when computing resources or data access are limited.

Future work will explore a broader range of tasks and multiple attribute-label pairs, addressing the challenge of obtaining diverse, well-labeled public datasets with comprehensive metadata or sensitive attributes.

Acknowledgments

The authors acknowledge the financial support from the School of Engineering, the University of Edinburgh, Canon Medical, the Royal Academy of Engineering, the UK’s Engineering and Physical Sciences Research Council (EPSRC), and the National Institutes of Health (NIH).

Disclosure of Interests

The authors declare no competing interests relevant to the content of this article.

By implementing BMFT, researchers and practitioners can achieve fairer machine learning models, particularly in ethically sensitive domains, ensuring equitable and responsible AI applications.