Authors: Ruining Li, Chuanxia Zheng, Christian Rupprecht, Andrea Vedaldi

ArXiv: http://arxiv.org/abs/2408.04631v1

Abstract

We present Puppet-Master, an interactive video generative model that can serve as a motion prior for part-level dynamics. At test time, given a single image and a sparse set of motion trajectories (i.e., drags), Puppet-Master can synthesize a video depicting realistic part-level motion faithful to the given drag interactions. This is achieved by fine-tuning a large-scale pre-trained video diffusion model, for which we propose a new conditioning architecture to inject the dragging control effectively. More importantly, we introduce the all-to-first attention mechanism, a drop-in replacement for the widely adopted spatial attention modules, which significantly improves generation quality by addressing the appearance and background issues in existing models. Unlike other motion-conditioned video generators that are trained on in-the-wild videos and mostly move an entire object, Puppet-Master is learned from Objaverse-Animation-HQ, a new dataset of curated part-level motion clips. We propose a strategy to automatically filter out sub-optimal animations and augment the synthetic renderings with meaningful motion trajectories. Puppet-Master generalizes well to real images across various categories and outperforms existing methods in a zero-shot manner on a real-world benchmark. See our project page for more results: vgg-puppetmaster.github.io.

Summary:

The paper presents Puppet-Master, an interactive video generative model that takes a single image and a sparse set of motion trajectories (drags) and synthesizes a video showing realistic part-level motion. It targets a limitation of existing motion-conditioned video generators, which mostly move an entire object rather than the parts within it.

To tackle this, the authors fine-tune a large-scale pre-trained video diffusion model with a new conditioning architecture that injects the drag control, and they propose “all-to-first attention”, a drop-in replacement for the widely used spatial attention modules that improves generation quality by addressing appearance and background issues. They also curated new datasets of part-level motion clips, called Objaverse-Animation and Objaverse-Animation-HQ, using an automatic strategy that filters out lower-quality animations and those with unrealistic motion, and that augments the synthetic renderings with meaningful motion trajectories.
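The summary does not spell out how all-to-first attention works. One plausible reading of the name, sketched below, is that every frame's queries attend to the keys and values of the first frame only, instead of each frame attending to itself as in ordinary spatial attention. This is an illustrative assumption, not the authors' implementation: the function names, the single-head layout, and the tensor shapes `(frames, tokens, channels)` are all hypothetical, and the learned projections are omitted.

```python
import numpy as np

def softmax(x, axis=-1):
    # Numerically stable softmax along the given axis.
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def spatial_attention(q, k, v):
    # Baseline for comparison: each frame attends to its own tokens.
    # q, k, v have shape (frames, tokens, channels).
    scale = 1.0 / np.sqrt(q.shape[-1])
    weights = softmax(q @ k.transpose(0, 2, 1) * scale)  # (frames, tokens, tokens)
    return weights @ v

def all_to_first_attention(q, k, v):
    # Assumed reading: keys and values of non-first frames are discarded,
    # so every frame's queries attend to the first frame's tokens.
    scale = 1.0 / np.sqrt(q.shape[-1])
    k0, v0 = k[0], v[0]                    # (tokens, channels) of the first frame
    weights = softmax(q @ k0.T * scale)    # (frames, tokens, tokens)
    return weights @ v0                    # (frames, tokens, channels)

rng = np.random.default_rng(0)
q = rng.normal(size=(4, 6, 8))  # 4 frames, 6 spatial tokens, 8 channels
k = rng.normal(size=(4, 6, 8))
v = rng.normal(size=(4, 6, 8))
out = all_to_first_attention(q, k, v)
print(out.shape)
```

Under this reading the output keeps the input shape, which is what makes the module a drop-in replacement, and the first frame's output is identical to what ordinary spatial attention would produce for that frame.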

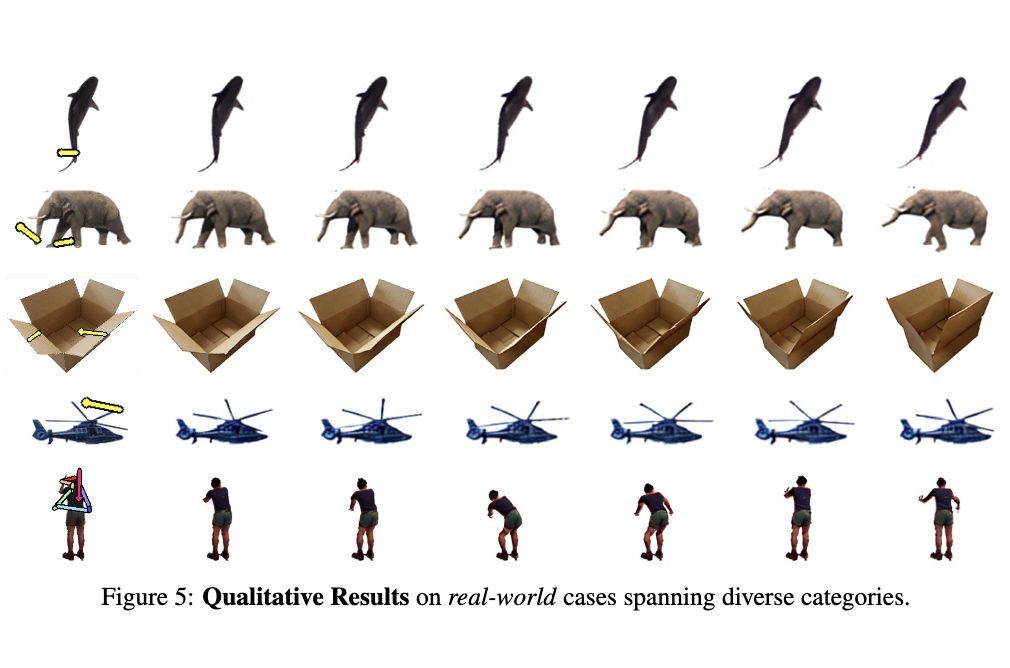

As a result, the model generalizes well to real images across various categories and, applied zero-shot, outperforms existing methods on a real-world benchmark, producing videos with more nuanced, part-level motion. The authors also acknowledge their sponsors and collaborators.

Thinking Direction:

1. How does Puppet-Master’s all-to-first attention mechanism compare to traditional spatial attention modules in addressing appearance and background issues in generative models?

2. In what ways does the data curation process contribute to the effectiveness of the Puppet-Master model, particularly when fine-tuning on the Objaverse-Animation-HQ dataset?

3. What are the advantages of the Puppet-Master model’s zero-shot generalization capabilities when applied to real-world cases, as compared to prior models that required further tuning on real videos?